Recently, I did a live streaming session for the New York City Microsoft Reactor in which I took concepts from the "Quickstart: Deploy Bicep files by using GitHub Actions" doc and put them into action. In the session, I create an Azure Kubernetes Service (AKS) cluster with secrets from Azure Key Vault, using Bicep and GitHub Actions. The main goal is to show how to use an automated process to deploy applications just by pushing to a GitHub repository. If you’d like to follow along, I’ve provided a GitHub template with full instructions on how to deploy this solution.

00:00:00 – Opening

00:04:28 – Agenda

00:06:46 – What is Bicep?

00:10:09 – GitHub Actions

00:11:49 – What is Azure Key Vault?

00:13:15 – What is Azure Kubernetes Service?

00:14:42 – Demo time!

00:45:41 – Resources

Here’s a rundown of the components used to build out this automated deployment:

Bicep is a domain-specific language (DSL) that uses a declarative syntax to deploy Azure resources. It provides concise syntax, reliable type safety, and support for code reuse. We believe Bicep offers the best authoring experience for your infrastructure-as-code solutions in Azure.

Here’s an example of a Bicep file that deploys an Azure Storage account:

    @minLength(3)
    @maxLength(11)
    param storagePrefix string

    @allowed([
      'Standard_LRS'
      'Standard_GRS'
      'Standard_RAGRS'
      'Standard_ZRS'
      'Premium_LRS'
      'Premium_ZRS'
      'Standard_GZRS'
      'Standard_RAGZRS'
    ])
    param storageSKU string = 'Standard_LRS'

    param location string = resourceGroup().location

    var uniqueStorageName = '${storagePrefix}${uniqueString(resourceGroup().id)}'

    resource stg 'Microsoft.Storage/storageAccounts@2021-04-01' = {
      name: uniqueStorageName
      location: location
      sku: {
        name: storageSKU
      }
      kind: 'StorageV2'
      properties: {
        supportsHttpsTrafficOnly: true
      }
    }

    output storageEndpoint object = stg.properties.primaryEndpoints
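
The decorators in this file constrain the parameters before anything is deployed. As a rough sketch of what they enforce, here is a small JavaScript check that mirrors them. The function name `validateStorageParams` is hypothetical and only for illustration; Bicep performs this validation itself at deployment time. The sketch also shows why the prefix is capped at 11 characters: `uniqueString()` returns a 13-character string, and storage account names can be at most 24 characters long.

```javascript
// Hypothetical helper mirroring the @minLength, @maxLength, and @allowed
// decorators from the Bicep file. Not part of Bicep or any Azure SDK.
const ALLOWED_SKUS = [
  "Standard_LRS", "Standard_GRS", "Standard_RAGRS", "Standard_ZRS",
  "Premium_LRS", "Premium_ZRS", "Standard_GZRS", "Standard_RAGZRS",
];

// Bicep's uniqueString() returns a 13-character hash string.
const UNIQUE_STRING_LENGTH = 13;

function validateStorageParams(storagePrefix, storageSKU = "Standard_LRS") {
  const errors = [];
  if (storagePrefix.length < 3 || storagePrefix.length > 11) {
    errors.push("storagePrefix must be 3 to 11 characters long");
  }
  if (!ALLOWED_SKUS.includes(storageSKU)) {
    errors.push(`storageSKU '${storageSKU}' is not an allowed value`);
  }
  return {
    valid: errors.length === 0,
    errors,
    // Length of the final name: prefix plus the uniqueString() suffix.
    nameLength: storagePrefix.length + UNIQUE_STRING_LENGTH,
  };
}

console.log(validateStorageParams("contoso"));
console.log(validateStorageParams("ab", "Standard_XYZ"));
```

With the longest allowed prefix (11 characters), the generated name is exactly 24 characters, which is the storage account name limit.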

GitHub Actions helps you automate your software development workflows from within GitHub. You can deploy workflows in the same place where you store code and collaborate on pull requests and issues. Actions are stored in workflow files written in YAML format and are highly customizable for your needs.

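Here’s an example of a GitHub Actions workflow file that builds a container image, pushes it to Azure Container Registry, and deploys it to an Azure Kubernetes Service cluster. You’ll notice expressions like ${{ secrets.REGISTRY_USERNAME }} in the workflow file: they reference encrypted secrets stored in GitHub rather than values written into the file itself.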

    on: [push]

    jobs:
      build:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@master

          # Log in to the container registry, then build and push the image
          - uses: Azure/docker-login@v1
            with:
              login-server: contoso.azurecr.io
              username: ${{ secrets.REGISTRY_USERNAME }}
              password: ${{ secrets.REGISTRY_PASSWORD }}

          - run: |
              docker build . -t contoso.azurecr.io/k8sdemo:${{ github.sha }}
              docker push contoso.azurecr.io/k8sdemo:${{ github.sha }}

          # Point kubectl at the target cluster
          - uses: Azure/k8s-set-context@v1
            with:
              kubeconfig: ${{ secrets.KUBE_CONFIG }}

          # Create the image pull secret the deployment uses
          - uses: Azure/k8s-create-secret@v1
            with:
              container-registry-url: contoso.azurecr.io
              container-registry-username: ${{ secrets.REGISTRY_USERNAME }}
              container-registry-password: ${{ secrets.REGISTRY_PASSWORD }}
              secret-name: demo-k8s-secret

          # Deploy the manifests with the image that was just pushed
          - uses: Azure/k8s-deploy@v1.4
            with:
              manifests: |
                manifests/deployment.yml
                manifests/service.yml
              images: |
                contoso.azurecr.io/k8sdemo:${{ github.sha }}
              imagepullsecrets: |
                demo-k8s-secret
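
Before a workflow like this can run, every secret it references has to exist in the repository settings. As a minimal sketch, assuming the workflow only uses the simple `${{ secrets.NAME }}` form, the following JavaScript lists the secret names found in a workflow's text. The helper name `listReferencedSecrets` is hypothetical, and the regular expression will miss secrets used inside more complex expressions.

```javascript
// Hypothetical helper: collect the secret names a workflow file references.
// Only matches the plain `${{ secrets.NAME }}` form.
function listReferencedSecrets(workflowText) {
  const pattern = /\$\{\{\s*secrets\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}/g;
  const names = new Set();
  for (const match of workflowText.matchAll(pattern)) {
    names.add(match[1]);
  }
  // Return each name once, in alphabetical order.
  return [...names].sort();
}

// A few lines taken from the workflow above. The backslash keeps the
// template literal from treating ${ as JavaScript interpolation.
const sampleWorkflow = `
username: \${{ secrets.REGISTRY_USERNAME }}
password: \${{ secrets.REGISTRY_PASSWORD }}
kubeconfig: \${{ secrets.KUBE_CONFIG }}
container-registry-username: \${{ secrets.REGISTRY_USERNAME }}
`;

console.log(listReferencedSecrets(sampleWorkflow));
// prints [ 'KUBE_CONFIG', 'REGISTRY_PASSWORD', 'REGISTRY_USERNAME' ]
```

For the workflow above, that means three secrets to create: the two registry credentials and the kubeconfig.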

The GitHub Actions documentation defines encrypted secrets as follows: Secrets are encrypted environment variables that you create in an organization, repository, or repository environment. The secrets that you create are available to use in GitHub Actions workflows. GitHub uses a libsodium sealed box to help ensure that secrets are encrypted before they reach GitHub and remain encrypted until you use them in a workflow.

Azure Key Vault protects cryptographic keys, certificates (and the private keys associated with the certificates), and secrets (such as connection strings and passwords) in the cloud. Rather than transmitting sensitive items like passwords or connection strings in clear text during an app deployment, you can keep them in Key Vault, which acts as a secure storage locker for your secrets. Key Vault’s main goals are Secrets Management (securely storing tightly controlled credentials), Key Management (storing encryption keys), and Certificate Management (storing and provisioning TLS/SSL certificates for your resources). You can use your preferred programming language to access these Key Vault services: there are client libraries for JavaScript, Java, Python, and more that you can use to retrieve secrets.

The following example, from the Microsoft documentation page "Quickstart: Azure Key Vault secret client library for JavaScript (version 4)", creates a client, sets a secret, retrieves it, updates its properties, and deletes it.

    const { SecretClient } = require("@azure/keyvault-secrets");
    const { DefaultAzureCredential } = require("@azure/identity");

    // Load the .env file if it exists
    const dotenv = require("dotenv");
    dotenv.config();

    async function main() {
      // DefaultAzureCredential expects the following three environment variables:
      // - AZURE_TENANT_ID: The tenant ID in Azure Active Directory
      // - AZURE_CLIENT_ID: The application (client) ID registered in the AAD tenant
      // - AZURE_CLIENT_SECRET: The client secret for the registered application
      const credential = new DefaultAzureCredential();

      const url = process.env["KEYVAULT_URI"] || "<keyvault-url>";
      const client = new SecretClient(url, credential);

      // Create a secret
      const uniqueString = new Date().getTime();
      const secretName = `secret${uniqueString}`;
      const result = await client.setSecret(secretName, "MySecretValue");
      console.log("result: ", result);

      // Read the secret we created
      const secret = await client.getSecret(secretName);
      console.log("secret: ", secret);

      // Update the secret with different attributes
      const updatedSecret = await client.updateSecretProperties(secretName, result.properties.version, {
        enabled: false
      });
      console.log("updated secret: ", updatedSecret);

      // Delete the secret
      // If we don't want to purge the secret later, we don't need to wait until this finishes
      await client.beginDeleteSecret(secretName);
    }

    main().catch((error) => {
      console.error("An error occurred:", error);
      process.exit(1);
    });
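
In an application you usually only need the secret's value, and it helps to be able to test that code without a real vault. Here is one way that might look: a small helper that takes the client as a parameter, plus an in-memory stand-in for local testing. Both `readSecretValue` and `createFakeClient` are hypothetical names for this sketch; the only SDK behavior assumed is that `getSecret(name)` resolves to an object with a `value` property, as it does in the quickstart above.

```javascript
// Hypothetical helper: read one secret and return only its string value.
// `client` is anything with an async getSecret(name) method, such as a
// SecretClient from @azure/keyvault-secrets.
async function readSecretValue(client, secretName) {
  const secret = await client.getSecret(secretName);
  if (secret.value === undefined) {
    throw new Error(`Secret '${secretName}' has no value`);
  }
  return secret.value;
}

// In-memory stand-in for SecretClient, for local testing only.
function createFakeClient(secrets) {
  return {
    async getSecret(name) {
      if (!(name in secrets)) {
        throw new Error(`Secret '${name}' not found`);
      }
      return { name, value: secrets[name] };
    },
  };
}

const fakeClient = createFakeClient({ "registry-password": "MySecretValue" });

readSecretValue(fakeClient, "registry-password")
  // Log a confirmation rather than the secret itself.
  .then((value) => console.log(`read a secret of length ${value.length}`))
  .catch((error) => console.error("An error occurred:", error));
```

Swapping `fakeClient` for a real `SecretClient` should be the only change needed to read from an actual vault.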

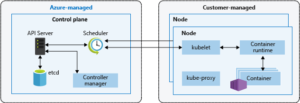

Azure Kubernetes Service (AKS) lets you easily define, deploy, debug, and upgrade even the most complex Kubernetes applications, and automatically containerize your applications. AKS provides a control plane to manage your Kubernetes clusters within Azure. You’re able to integrate CI/CD services, use automated upgrades, get access to metrics on your deployments, and even use different types of CPUs/GPUs in node pools.

In the diagram, the left side shows the responsibilities of the control plane, which is managed by Azure. The right side shows the customer-managed Kubernetes services within your AKS cluster. Azure manages the hardware, monitoring, security, identity, and access, while you manage the containers, the pods, and the services that run within the cluster. You can create clusters in a number of ways, such as Bicep/ARM, the Azure CLI, the Azure portal, or PowerShell.

Here’s an example from the Create Kubernetes Cluster using Azure CLI Microsoft Docs page:

    az aks create \
        --resource-group myResourceGroup \
        --name myAKSCluster \
        --node-count 2 \
        --generate-ssh-keys \
        --attach-acr <acrName>

You can run this command locally with the az CLI, signed in to your Azure account, or from Azure Cloud Shell. The command creates a two-node AKS cluster (two virtual machines deployed by Azure to run your workloads) and attaches an Azure Container Registry, which stores the container images that you deploy to the cluster.
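
If you want to drive the same command from a script, one option is to build the argument list in code and hand it to the az CLI. The sketch below only uses the flags shown in the command above. The function name `buildAksCreateArgs` and its option names are hypothetical, chosen for this example.

```javascript
// Hypothetical helper: build the argument list for `az aks create`,
// using only the flags from the command shown above.
function buildAksCreateArgs({ resourceGroup, name, nodeCount = 2, acrName }) {
  if (!resourceGroup || !name) {
    throw new Error("resourceGroup and name are required");
  }
  const args = [
    "aks", "create",
    "--resource-group", resourceGroup,
    "--name", name,
    "--node-count", String(nodeCount),
    "--generate-ssh-keys",
  ];
  // Attaching a container registry is optional.
  if (acrName) {
    args.push("--attach-acr", acrName);
  }
  return args;
}

const args = buildAksCreateArgs({
  resourceGroup: "myResourceGroup",
  name: "myAKSCluster",
  acrName: "myContainerRegistry", // placeholder registry name
});
console.log(["az", ...args].join(" "));
```

Keeping the arguments in an array means they can be passed to Node's `child_process.spawn("az", args)` as-is, with no shell quoting to worry about.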

If you’d like to discuss this more, leave a comment or reach out on Twitter! I am happy to direct you to proper documentation, help you troubleshoot any issues you’re running into, or even send some feedback to our product group.

Useful documentation and more:

Quickstart: Deploy Bicep files by using GitHub Actions

Quickstart: Integrate Bicep with Azure Pipelines

Azure Kubernetes Service (AKS)

Azure Key Vault basic concepts
