Editors Note:

While working in Australia I was blessed to work with some truly gifted technologists. In the SQL space David Lean was the local legend with the (deserved) reputation of being THE SQL Performance Guru.

David has since left Microsoft but continue his cutting edge work with SQL Server and Application Tuning.

Thanks for the great post on using SQL Azure and performance Testing David!

-Chuck

Data-Driven Load Tests using Visual Studio Online and SQL Azure

Almost everyone who IT Operations for a large company can tell you war stories about apps whose performance died within 18 mths of going live. Many don’t even last 6 mths.

Nearly everyone in corporate IT can tell you war stories about some application whose performance died within 6 – 18 mths of going into production.

Why do they blow up?

More often than not, the root cause is inadequate stress testing, with inadequate data. Either not enough data, or data whose distribution didn’t represent real world. Including errors.

So why don’t we do more testing?

Often it is because of the cost. Consider the costs of H/W (just for load generation), Data Center Floor space, Insurance, Testing S/W, & people. It is easy to burn more than $1 mill just trying to prove a new system can scale to the needs of the business.

So we try to make do. Without those resources, design decisions are often made by a single developer based on timing loops on their desktop.

Benefits & problems of caching will rarely be seen if you only test with one user. (ie: the developer on their laptop). Same goes for inefficient database queries & poor architecture.

Without load, it is impossible to answer the hard questions like.

The Async/Await is great for I/O bound applications, but it adds overhead. Given that IIS’s application pool is designed to handle blocking threads. Would I get better perf by merely increasing the IIS thread pool’s “Max Concurrent” parameters?

If my Web Servers are suffering Thread Starvation due to the synchronous calls blocking on the database. Will rewriting them as Async improve the situation or merely exacerbate the issue by the RDBMS to divert memory to tracking even more user requests? Should I just increase the IIS “Max Concurrent …” values?

The good news is Microsoft has made that investment for us. For around $6 you can simulate 200 users (no wait time) smash your system for 10 mins.

Data-Driven Load Tests using Visual Studio Online

This article shows how to create Load/Stress Tests for both Web Sites & Web API controllers, with the potential to simulate 10’s of thousands of users hitting your solution. Allowing you to compare caching & other techniques in order to optimise the rendering of your site.

Why Data Driven?

In recent years ASP.NET MVC has grown in popularity. And with it, the use of dynamic routing. This has created a problem for testers. Simply recording your mouse & keystrokes into a static test & then replaying them, doesn’t work if your URLs change frequently.

If your Web Site dynamically generates its URL’s based on your data, ie: your product catalogue or recent news events, then you need a load test that can also dynamically generate valid URL’s to click on.

Fortunately Visual Studio’s Web Performance Tests & Load Testing makes this easy. The answer is Data Driven Web Performance Tests.

What if you don’t have access to a dozen or more idle servers you can use to run these performance tests? The answer is in the cloud. Visual Studio Online makes it possible to simulate 1,000’s of users hitting your web site. You can get it running in under 5 mins.

There are 4 common scenarios for Data driven testing.

1. Simulating URL’s that change based on the data in your system.

2. Filling out a form with different data for different users.

3. Calling a Web Service with different parameters.

4. Simulating an AJAX call.

=======================================

Scenario 1: Dynamic URL’s.

Use Case: The logic in your page generates a URL based on some internal data structure. Eg: In an E-Commerce site each Product you sell is likely to have its own URL. For CMS System, each new article may have a unique URL.

In this example we will look at the Horse Racing Industry. The Meetings page (below) shows all the Races. Each cell is a link to a Races Page. Each race only occurs once, so its URL contains the MeetingDate. So each new day a new set of racing links are created. Any test you build today using static URL’s will be broken tomorrow.

**Picture 1. **: Each cell in the table points to a race page.

One approach is to have your test always go to the Meeting page. Then run the javascript on that page to select a valid link. The disadvantage with this approach is you tie up your test agents with meeting page processing. Which reduces the number of clients you can simulate per agent.

Another approach is to pre-calculate all the valid links immediately prior to the test execution & put them in a data store. The Test Agent only needs to ask for a valid URL & send it to the server you are testing.

Part A: Setup your data.

We will need to create table similar to the one below.

Our Test only needs the URL column. But sometimes an ID column is handy for the clean-up task.

Most SQL DBA’s would use SQL Server Management Studio (SSMA), but you don’t need to install SQL Client tools. Visual Studio is just as powerful as I will demonstrate.

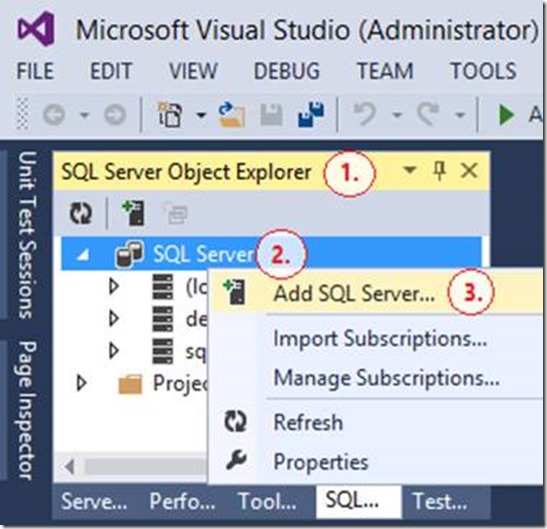

1. Start Visual Studio

2. Click menu: View => SQL Server Object Explorer

3. Right Click SQL Server node

4. Click Add SQL Server …

5. Enter login credentials.

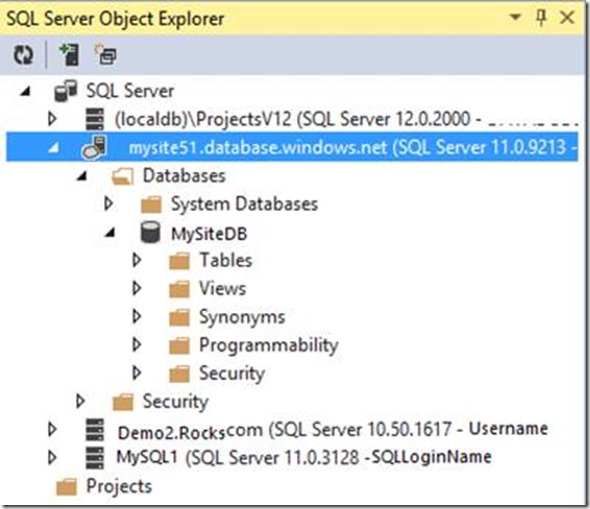

6. You should now see a SQL Object Explorer tree.

7. Tip: I put my test data in an Azure Database.

a. It has the following benefits :-

i. Safer than opening a port thru the corporate firewall to expose an internal SQL Server containing the Test data database.

ii. Closer to the VSO Test agents. So less latency.

iii. Better upload speed than most Home / Small Office LAN’s.

iv. Cheap, only pay for the duration of the testing then delete it. < 17 cents per day.

b. To “remember” your connection strings just copy it from the Azure Portal.

8. Open a SQL Query Window. R.Click your SQL Database => New Query …

9. I prefer to create a Schema to hold all my load testing artifacts. Especially if they aren’t in a dedicated database.

|

CREATE SCHEMA TestData; GO CREATE TABLE TestData.RacePage ( ID INT NOT NULL IDENTITY(1,1) , URL VARCHAR(100) NOT NULL , CONSTRAINT PK_ID PRIMARY KEY CLUSTERED (ID) ); GO |

10. To simplify the load tests Setup & Clean-up Scripts, I prefer to create Stored Procedures. This keeps the logic out of my scripts & closer to the data in case I need to change anything.

|

— ===< Procedure for Load Test’s Setup Script >=== CREATE PROCEDURE TestData.p_SetupRacePageDataTest ( @WebSite VARCHAR(30) = ‘http://mysite.cloudapp.net’) AS — Swap to a new meeting date at 5pm Sydney. (or 6am AEDT) DECLARE @MeetingDate DATE = DATEADD(HOUR, 4, SYSUTCDATETIME()); TRUNCATE TABLE TestData.RacePage; INSERT INTO TestData.RacePage(URL) SELECT @WebSite + ‘/race/’ + CAST(M.MeetingDate AS CHAR(10)) + ‘/’ + CAST(M.TrackId AS VARCHAR(5)) + ‘/’ + CAST(R.RaceNumber AS VARCHAR(2)) AS URL FROM Racing.Meeting AS M JOIN Racing.Race AS R ON R.MeetingId = M.MeetingId WHERE M.MeetingDate = @MeetingDate AND R.RaceStatusId = 1; — 1 = Open Races Enum GO — —< Check it works >— EXEC TestData.p_SetupRacePageDataTest; GO SELECT TOP 20 * FROM TestData.RacePage; GO — ===< Procedure for Load Test’s Clean-up Script >=== CREATE PROCEDURE TestData.p_CleanupRacePageDataTest AS TRUNCATE TABLE TestData.RacePage; GO |

11. Run it

Part B: Connect the data to your Web Test.

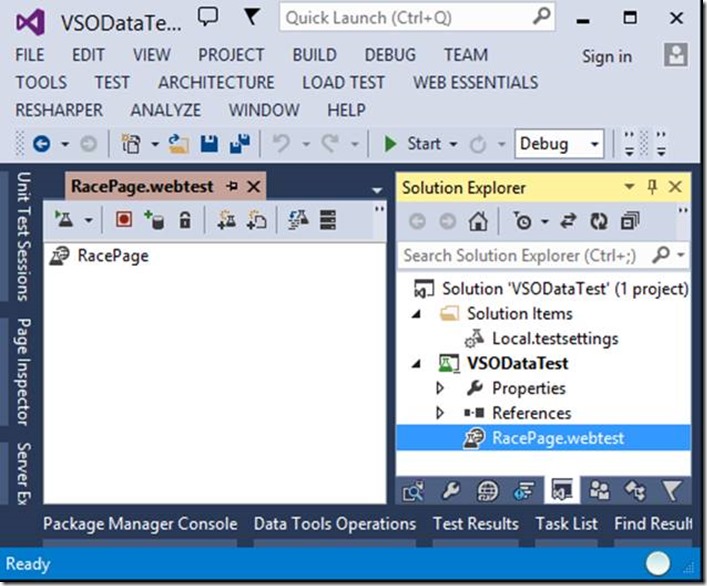

12. Select Menu: File => New Project => Test =>Web Performance and Load Test Project

a. Even if you only work by yourself I suggest you add it to Source Control. It is so handy to be able to roll back any mistakes you make.

b. Personally I prefer to use VSO’s Team Foundation Server. For small teams it is free & great to have a central store where your code is compiled & deployed via Continuous Integration. However you will note from the picture below, Visual Studio also works fine if you prefer to use another Source control system like Subversion or GIT.

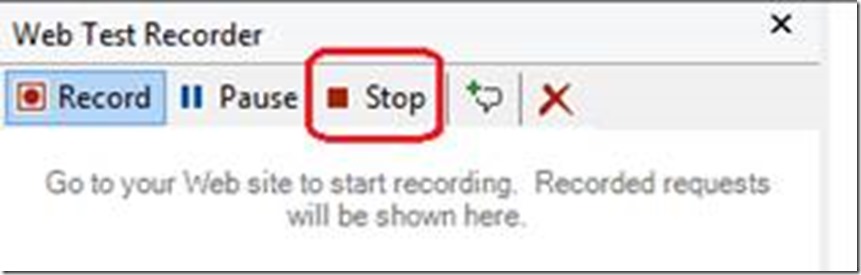

13. The new web test automatically opens a browser, ready to start recording your test. As we plan to get our URL’s from our database, we don’t need to do this. Just hit stop & create a blank test.

14. R.Click WebTest1.webtest & Rename it. eg: RacePage.webtest.

15. Add a Data Source to your WebTest. There are 3 ways to do this.

a. Via the Toolbar

b. Via the Context Menu

c. Via the property menu. (which you’ll see later)

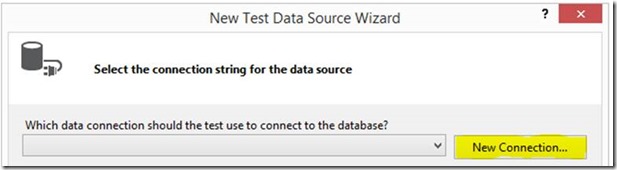

16. This should start the “New Test Data Source Wizard”

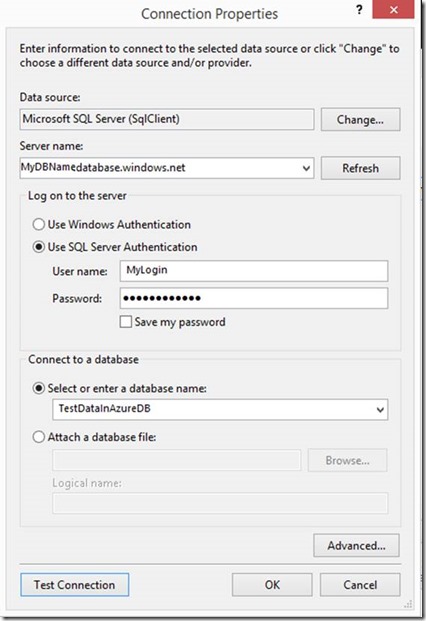

a. Enter the name of your Data Source. Eg: TestDataInAzureDB & choose database.

b. Click New Connection …

c. Enter the Connection details for your Azure Database.

d. Choose the Table(s) you created previously.

e. Note: If you need to add more tables later, just R.Click your data source & click “Edit Data Source”

17. Your Test should look something like this

Part C: Using the Data.

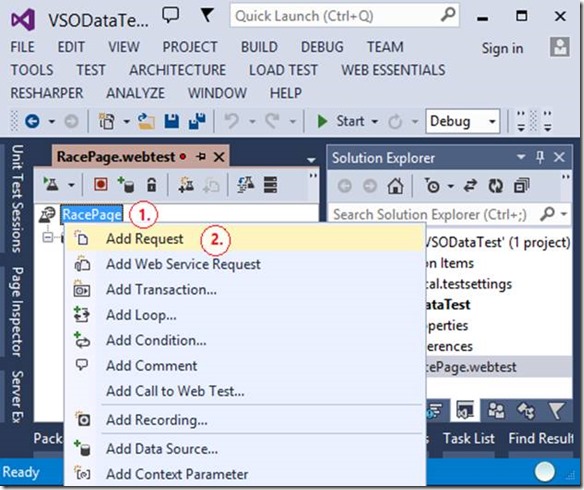

18. **.Add an Http Request that calls your Server

R.Click on your Web Test => **Add Request.

****

****

19. It creates an “empty” link that points to localhost. We will replace it with the URL’s in our Test Data table.

a. Click the HTTP Request, “http://localhost/”

b. Hit F4 to open the Properties pane.

c. Select the Url attribute & click the down arrow

d. Expand your Data Source, select the column in the table that contains your URLs

e. Note: the “Add Data Source” link. This is the third way to add a data source.

20. .Your Test is now complete. It should look similar to this.

. Part D: Adding your Web Test to a Load test.

There is nothing special you need to do for a data driven test. I’ll just repeat the steps here for those new to the process.

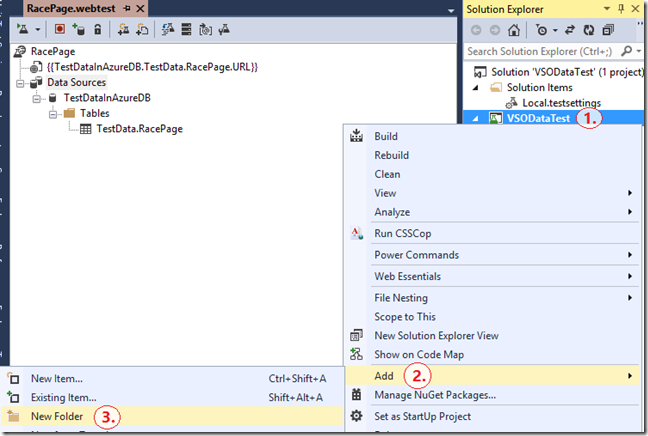

21. Add a Load Test to your Project..

a. R.Click your Project, eg: VSODataTest

b. **Click **Add => **Load Test…

** ****

****

22. You should see the Load Test Wizard Dialog

a. Tab: Scenario

i. Name: RacePage

ii. Think Time profile:

b. Load Pattern & Test Mix Model:

c. Test Mix: Add => RacePage test

d. Network Mix & Browser Mix:

e. Counter Sets

f. Run Settings: Load Test duration.

i. Set the duration time for as long as you want.

ii. At present the “Test Iteration” option is not supported in the cloud. You can only run Fixed Duration tests.

g. Details

i. Sampling Rate is recommended to be > 15 secs. The larger you make it the lower the monitoring overhead. Ensuring your test measurement doesn’t hinder your ability to run the test. For small duration tests with few users, sometimes 5 secs will give you a few more data point without hurting the tests.

ii. Validation level: I recommend: Low

23. Rename your LoadTest. I used **RacePage.loadtest

**

24. Unfortunately when you run your tests in the cloud you lose the Network Isolation & some of the benefits of having your own dedicated, isolated, expensive, test environment. It is a little harder to monitor the performance monitor counters of the system under load. Due to security issues, the On-Premise approach of adding the Performance Counters to your test doesn’t work. Two popular workarounds are :-

a. Perfmon Logs

RDP to each of the Azure Roles in your Solution. Configure Performance Monitor to save its counters to a Log File. So you can examine them once the Load test completes.

b. Application Insights

If you have Visual Studio 2013 Update 2, you will notice an “Applications” node.

i. Configure your project to push info to VSO Application Insights.

ii. R.Click on Applications => Get Performance Data from Application Insights.

iii. Nominate the Servers you will track.

c. The advantage of doing this is that App Insights also captures diagnostic & trace info. So if you stress your solution to breaking point, you can retrospectively examine your internal state to find bottlenecks & bugs.

d. Another advantage is App Insights works well with Azure Load balancing. So if your solution starts to spin up more instances under load, App Insights will automatically capture their metrics too. (It may be possible to do this with Powershell & perfmon but much harder, especially if the instances are deleted before the load test finishes.)

e. A disadvantage is App Insights is designed to minimise its impact on a product solution. So it caches its metrics & uploads it in bursts. This means if your Load Test is shorter than 10 mins. You will probably not see any data at all.

f. Another Disadvantage is it requires your app to be enabled for App Insights & the endpoints to be visible. Given all the other troubleshooting & operational monitoring benefits it provides, I’d recommend installing it even if you aren’t using it for Load Tests.

Part E: Create your Load Test Setup Scripts.

Before your load test starts you may need to generate a new set of test data. Once finished you may need to remove it all, to ensure the load test is repeatable. We can do this with Setup & Clean-up Scripts

25. How you organise your project is a matter of personal preference. I like to create folders to group files by type. eg: Scripts, LoadTests, WebTests. But for large solution consider organising them by the type of Testing eg: Stress Tests, Integration Tests, Smoke Tests. Or maybe the functionality you are testing. eg: Ordering, User Accounts, Stock Management.

26. To place your scripts in a folder called TestScripts.

a. R.Click your project eg: VSODataTest

b. Click Add => **New Folder

**

c. Name:** TestScripts**

d. R.Click The TestScripts Folder

i. Add => New Item…

ii. Search for Text.

iii. Select “Text File”

e. Name: RacePageSetup.cmd

27. There are many ways you can execute TSQL from a batch file. Once of the simplest is the SQLCmd.exe command line app that ships as part of the SQL Server Client tools.

Edit the file RacePageSetup.cmd & add the following command.

|

SQLCMD -U |

a. Notes:

i. In your file, this command should be on one single line.

ii. If you have Windows Active Directory available use “–E” (Windows Auth) instead of using a SQL Username & Password in your file. Alternatively you could write a script that retrieved the connection info somehow.

iii. –Q means run a single command & then exit. As I use a Stored Proc this is usually sufficient. But you can also get it to run a TSQL file.

28. **Tip: **Check your script is OK. To do this, open a command prompt & try running your Setup.cmd job from there. It is much faster to troubleshoot now than wondering why your Load Test failed.

a. R.Click your Script => Open Command Prompt

b. Execute your script file RacePageSetup from the command prompt.

|

E:!BlogVSODataTestVSODataTestTestScripts>dir Volume in drive E is Dev2_E Volume Serial Number is XYZD-0123 Directory of E:!BlogVSODataTestVSODataTestTestScripts 26/05/2014 08:25 PM 26/05/2014 08:25 PM 26/05/2014 07:04 PM 214 RacePageCleanup.cmd 26/05/2014 07:04 PM 210 RacePageSetup.cmd 2 File(s) 424 bytes 2 Dir(s) 6,212,550,656 bytes free E:!BlogVSODataTestVSODataTestTestScripts>RacePageSetup E:!BlogVSODataTestVSODataTestTestScripts>´╗┐SQLCMD -U …. |

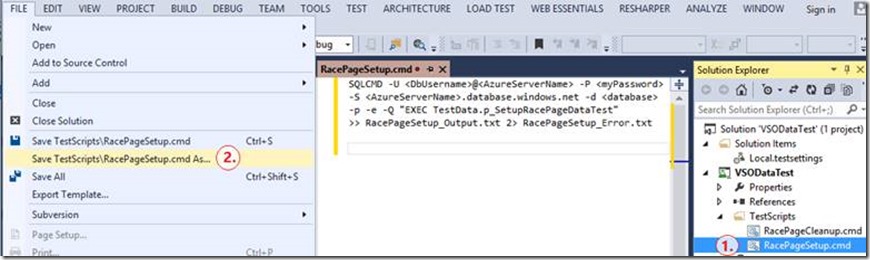

c. Unfortunately you may notice an error. If you look at the RacePageSetup_Error.txt file, you will see an error message. “’SQLCMD’ is not recognized as an internal or external command, operable program or batch file.” It is misleading, that is not the problem. Look closer at the DOS command output. The first command in your file died. It has 3 weird non-printable characters at the front “´****╗****┐****”. This is what happens when you save your .CMD files as “Unicode (UTF-8 with signature) – Codepage 65001.” Which is what Visual Studio uses by default. Those characters are the signature.

d. To fix this, Re-save the file as Unicode (UTF-8 without signature) – Codepage 65001

i. Menu: File => Save <filename.cmd> As …

ii. Select the Dropdown on the Save button, Save with Encoding …

iii. Set Encoding: Unicode (UTF-8 without signature) – Codepage 65001.

1. NB: I’ve not tried this with non-English Windows. You may need to open the file with a Binary editor & see what other formats are valid for your language setting.

iv. Try running it again. If you have no scripting errors it should work now.

29. Click your RacePageSetup.cmd file.

a. Hit F4, to open the Properties pane.

b. Set the “Copy to Output Directory” attribute to “Copy if newer”

30. Repeat the above steps to create your Cleanup Script

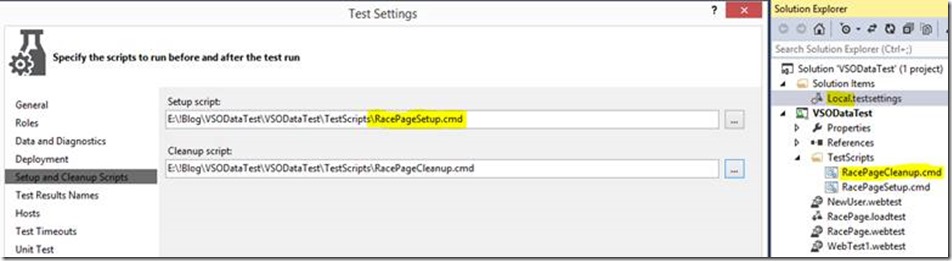

31. Next configure your test parameters to use your Scripts

a. Double Click Local.testsettings.

b. Select tab: Setup and Cleanup Scripts

i. Point the “Setup Script” path to your Setup script.

Part F: Checking your work, locally

Do a quick “sanity check” & run it locally to fix any mistakes. If your test doesn’t run locally it will not run in the cloud. Not only will your catch config & scripting errors earlier, but this give you the ability to check each of the rows in your generated data. Perhaps finding invalid combinations, NULLs, divide by zero issues &/or other error conditions that you had not intended to test.

32. While still in the Test Settings dialog.

a. Click the Web Test Tab.

b. Select “One run per data source row”

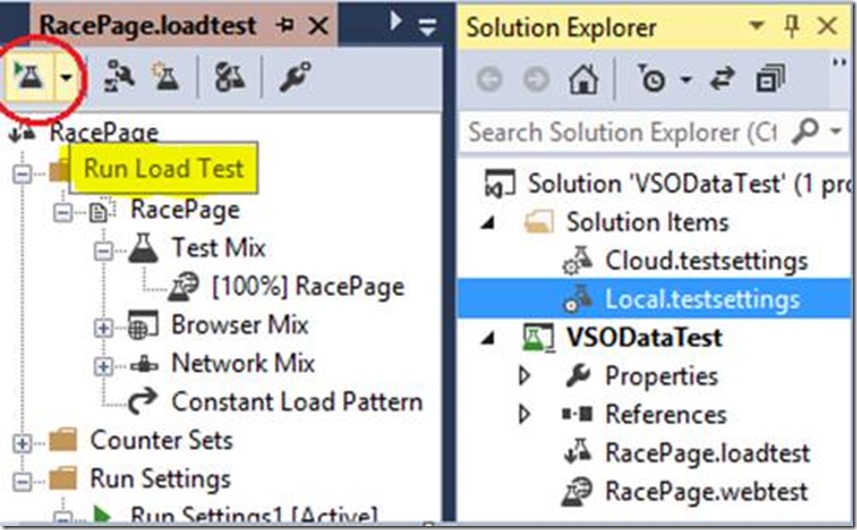

33. Open your Load test & click “Run Load Test”

. Part G: Running it in the cloud.

While you can use your existing TestSettings file. I prefer to create a new one, as it makes it easier to swap back & forward between the two. The Local test is good as some problems are obvious even with a tiny number of users.

34. In Solution Explorer, R.Click the “Solution Items” folder => Add => New Item…

35. Create a new Test Settings file named Cloud.testsettings.

36. Open the Test Settings Dialog

37. Tab: General

a. Name: Cloud

b. Select “Run Tests using Visual Studio Online”

That’s the real magic to running your tests in the cloud.

38. Tab: Setup and Clean-up scripts.

a. At I write this the SQL Setup & clean-up scripts will not work in the cloud. The SQLCMD app is not installed on the Test Agents. I expect this to change soon. So for now, leave this blank & run them manually.

39. Tab: Additional Settings. Run test in 64 bit process on a 64 bit machine.

Clearly this is optional but the default is 32 bit. So I change it

a. Note: the other tabs are not applicable in the cloud at this time.

40. Tell Visual Studio to start any load tests using the Cloud.Testsettings file.

a. R.Click the Cloud.testsettings file. => Select Active Load and Web Test Settings

41. That’s it. Click Run Load Tests & you are done.

![clip_image068[1] clip_image068[1]](https://devblogs.microsoft.com/devops/wp-content/uploads/sites/6/2014/08/8306.clip_image0681_thumb_3710780B.jpg)

a. Tip: The picture above might look like the Local.testsettings would run. At present VS gives you no indication which test settings file is active. I’m hoping this will change in the future. Prior to running any load test I recommend checking the context menu of the relevant testsettings file to ensure it has the little tick next to “Active Load and Web Test Settings”. Thus avoiding the frustration of running the wrong overnight test.

Scenario 2: Using Data to fill out Form fields.

The goal here is to have your test simulate 1,000’s of users filling out your web forms with data for your Web server to process. Useful to ensure your backend can handle the millions of new orders, customer registrations, service requests or wherever it is you do.

Part A: Record a Web Test that has fields that need to be populated with data.

1. Within a Web Performance and Load Test Project.

2. R.Click a Project (eg: VSODataTest) => Add => Web Performance Test …

3. Your default browser will open (I’ve found Internet Explorer to be the best for recording Web Tests)

4. Click Record

5. Click on the pages that will form your Web Test. Shown below is the page with the fields I’d like the test to populate.

6. When you are done, click Stop. Delete any of the links you do not need for your test.

7. Below you can see the Form Post Parameters as I filled them out when recording. This are the fields we will link to our data source.

8. Rename the webtest from WebTest1 to something meaningful. (eg: NewUser.webtest)

Part B: Connecting to the data.

In Scenario 1, we connected to the data first & then build the test. Here we do it the other way around. Just to show that the order doesn’t matter.

9. Create a table with Test data. (if you haven’t got one already.) Usually the web page form will persist the data entered by the user into a specific table. So I start by copying the definition of that table & then add/remove any extra columns as required.

Sample Table

CREATE TABLE TestData.NewUser (

NewUserId INT NOT NULL IDENTITY (1, 1),

FirstName NVARCHAR (30) NOT NULL,

MiddleName NVARCHAR (30) NULL,

LastName NVARCHAR (40) NOT NULL,

BirthDate DATE NULL,

UserName NVARCHAR (25) NULL,

Email NVARCHAR (50) NOT NULL,

Email2 NVARCHAR (50) NULL,

Phone NVARCHAR (14) NULL,

FlatNo NVARCHAR (12) NULL,

StreetNo NVARCHAR (12) NULL,

Street NVARCHAR (200) NULL,

StreetType CHAR (4) NULL,

Suburb NVARCHAR (25) NULL,

[State] NVARCHAR (3) NULL,

PostalCode VARCHAR (4) NULL,

CountryCode CHAR (2) NULL DEFAULT ‘AU’,

ReferralId SMALLINT NULL DEFAULT ‘0’,

ReferralOther NVARCHAR (50) NULL,

CONSTRAINT PK_NewUser PRIMARY KEY CLUSTERED (NewUserId)

);

When complete it may look something like this.

Unlike the Scenario 1: RacePage example, this data is not transient & not dependent on any the values in another table. So it is possible to load it once with SQL’s Bulkcopy or SSIS then leave it. As creating & loading random data into SQL is well documented elsewhere, I will not explain it here.

At the end of a load test, a clean-up script would “somehow” need to reverse any changes. The most common approaches are:-

A. Completely reset the entire database after each test.

i. Backup the database prior to the test, then Restore it when finished. OK for big tests but otherwise time consuming & impacts the work of others.

B. Reverse just the data you have changed.

i. Use a SQL Merge Statement to compare the “test data” table with the rows in the target table & delete them. Very quick & simple, especially if the Web Test only effects one table.

ii. For Unit Tests you can sometimes use a transaction & roll it back when finished. But for stress testing it is not recommended. You are likely to create dead locks & artificial IO bottlenecks.

C. Put an unlikely value in a field of your Test data eg: Middle name = “Test Data”. So you can find & remove those rows quickly without impacting data other developers might be using.

D. If the Target tables contain a set of poor man’s audit columns (eg: CreatedDate, CreatedBy, ModifiedDate), you can remove the rows based on the time they were created. Note: Please do not view this as a recommendation to clutter your DB Schema with “CreatedBy” columns. It is a bad practise that hurts performance. Yet many developers still do it.

10. Add a Data Source (As outlined in Scenario 1)

11. Map each field in your form a column in your Data Source table or view.

12. The rest is the same as Scenario 1.

a. Add this Web test to a Load Test.

b. Test all is OK locally.

c. Run the big test from VSOnline.

Scenario 3: Data-Driven Web Service tests.

The goal is to load your WebAPI2 controller &/or other RESTful servers.

The increase in Mobile solutions & Single-Page Applications (SPA’s) has make the creation of RESTfull services much more common. Testing is very similar to Scenario 1. So I will only highlight the differences here. The most significant difference is the way the data is bound to the query string.

Part A: Create a new Web Test & attach your Data Source

For instructions see Scenario 1, Part B.

Note: If you already have a Web Test Project. You can add another Web Test as follows.

1. Right-Click your Web Performance and Load Test Project.

2. Add => Web Performance Test …

3. It will automatically start the Web Test Recorder in your browser. Hit Stop. You don’t need to record anything.

4. You should now see the Web Test Editor.

Consider renaming it to something more descriptive.

5. Add a Data Source. (See Scenario 1. Part B)

Part B: Connecting to the data.

6. R.Click the test => Add Web Service Request

7. Change the URL from the default http://localhost to your test Service. Hit F4 to view the Properties pane, tweak the properties as needed. Consider changing; Response Time Goal, Think Time & Timeout.

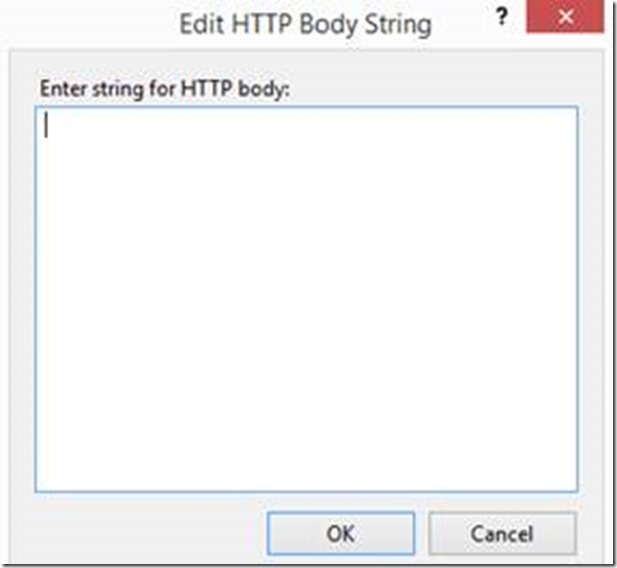

8. Select the “String Body” node. Click the “String Body Property & click the “…” button.

9. This is the confusing bit. Unlike the earlier examples where a property that says “supports data binding” give you some UI to link to your data source. Here you get nothing but an empty window.

10. The trick is to use parameter substitution to create whatever HTTP string your service expects. The syntax for the data bound parameter is {{DataSourceName.TableName.ColumnName}}

11. For example. If your web server expects a SOAP request in the following format.

|

|

12. With parameters it would look like this.

|

|

13. Tip. The above format works well for SOAP, AJAX & similar calls. But it assumes a fairly simple structure where each item can be mapped to a column. And each item only occurs once. Eg: The WinOrPlaceSelections array node below only contains 1 item.

|

{ “BetType”:1,”RaceNumber”:11,”RaceId”:41397,”TrackId”:589 ,”MeetingDate”:”2014-08-07T00:00:00″, “WinOrPlaceSelections”:[ {“Amount”:”2″,”RunnerNo”:8,”Odd”:0.00,”BetProductId”:3} ] ,”AccountId”:1017} |

This gets harder if you want to pass an array of values.

|

{ “BetType”:1,”RaceNumber”:8,”RaceId”:41151,”TrackId”:566,”MeetingDate”:”2014-08-07T00:00:00″ ,”WinOrPlaceSelections”:[ {“Amount”:”1.5″,”RunnerNo”:6,”Odd”:21.00,”BetProductId”:79} ,{“Amount”:”3.5″,”RunnerNo”:2,”Odd”:4.30,”BetProductId”:1} ,{“Amount”:”1.5″,”RunnerNo”:7,”Odd”:7.80,”BetProductId”:151} ,{“Amount”:”2.5″,”RunnerNo”:6,”Odd”:4.50,”BetProductId”:1} ,{“Amount”:”1″,”RunnerNo”:6,”Odd”:12.50,”BetProductId”:3} ] ,”AccountId”:1013} |

While you could create a parameter that contains all the items in array. I’ve found it much easier to generate the entire JSON string in a single column of my database test table. And pass the whole lot in one parameter.

Scenario 4: Data-Driven AJAX tests.

Use Case: Single Page Applications (SPA’s), Knockout, AngularJS are some of the many common apps that make extensive use of AJAX calls. Regardless of what javascript framework or library you use eventually it will result in an HTTPQueryString being sent to your Web Server.

If you are running Visual Studio Test On-Premise or via your own Azure IAAS machines. You could automate the User Interface to run your javascript, which generates & sends the HttpQueryString. At present Visual Studio Online does not support CodedUI Tests. They are really designed for UI testing & don’t scale very well. You could also use other web test frameworks; ie: Selenium, Jasmine, PhantomJS.

Like Scenario 1, for stress testing you may find it undesirable to automate the client code just so you can generate a load on your servers. Often all you really need is a valid HTTPQueryString.

So process is identical to Scenario 3, except that you record your interactions with your web site. Once complete:-

1. Click on the URL’s which contain the AJAX calls.

2. Click on the “String Body” node

3. Open the Properties pane & select “…”

4. Update the String body to a Parameterised Query.

Known Limitations & workarounds

Online Testing helps to reduce gap between the little guy & the well-resourced enterprise dept. It is still a work in progress as MS test suite is quite broad & at the time of posting this article there are many things that the On-premise product offers that the Cloud version does not. Microsoft’s Cloud offering has new features added every month. By the time you read this, these feature gaps may no longer exist.

For instance the Startup & CleanUp Scripts in your Online Load tests can’t call SQL Server

Workaround: Run them manually. This may be resolved soon

Can’t run Javascript

This is annoying. But reflect on what you are trying to achieve, there may be alternatives.

1. If your focus is exercising the client code (Javascript), it is likely you are really running Unit Tests, Cross browser & similar UI functionality tests. It may be just as effective to run these tests locally on a single client machine. The goal of multiple clients is to put a load on the server, or find concurrency issues; locking or race conditions.

2. As your client code is not run on the tests, it is possible form a small number of test agents to simulate many more client interactions. Especially if you generate the client response before the test starts & simulate sending it to the server.

Catch All Workaround

Visual Studio Online tests are powerful, but Visual Studio Test Manager can do even more. So if your multi-million dollar, dedicated test data-center is unavailable. Consider using the cloud by creating Azure IAAS VM instances. Install Windows & configure one as a Test Agent & the other as Test controller.

About the Author

![David Lean Live’s avatar][57]

David Lean is LONG time Microsoft veteran (1990-2009) that has recently retired. He is currently focusing on his passions share/trading applications and assisting MS Customers & Partners with SQL Server related projects & performance tuning.

[57]: lean live&size=extralarge&version=fc21f6d2-a53c-44a4-b4cc-f93af32bd7f9 “David Lean Live’s avatar”

0 comments