This article provides a solution for running Azure DevOps agents (Build/Release agents) on Windows Server Core based containers, hosted on Azure Container Instances (ACI). A solution like this can be useful when the default Microsoft-hosted agents don’t fit your requirements and you would rather not use “traditional” IaaS VMs to run your self-hosted agents.

One of our customers decided to use Azure DevOps as their primary automation tool for Azure workloads. To achieve this, the following components are needed:

- Private Git repositories hosted on Azure DevOps to store the configuration scripts (e.g. Terraform and ARM templates, and PowerShell scripts).

- Build/Release pipelines to define which activities (e.g. applying Terraform templates or running PowerShell scripts) should be run in which order, with what parameters, in which Azure subscription, etc.

- A place to run these pipelines from. There are 2 options for this: using the Microsoft-hosted agents or implementing a set of self-hosted ones. Reminder: agents provide runtimes for pipelines on VMs.

As this customer wanted to have full control over the agent machines, had concerns about using a shared, multi-tenant platform for this purpose, and was afraid of hitting any of the technical limitations of the platform-provided agents, the default choice of using Microsoft-hosted agents fell quickly into the no-go category.

To fulfill these requirements, namely to enable the customer to run Build/Release pipelines in a scalable, customized and highly secured environment, we clearly needed to implement a set of self-hosted agents.

Requirements summary:

- Scalability: the solution should scale both up (the number of CPU cores and the amount of RAM can be increased) and out (multiple instances can be added to support parallel deployments).

- Customization: this includes adding extra components, such as PowerShell modules, json2hcl or the Terraform executable, and not having unnecessary and unused components installed.

- Security: interactive logons have to be prohibited, and incoming requests on all ports of these machines must be denied.

As the customer prefers PaaS services over IaaS VMs and has strict security requirements, the well-documented approach of deploying agents on VMs (what you would normally do) was out of the question.

Luckily, Azure offers a relatively new service called Azure Container Instances (ACI), which lets customers run containers without managing the underlying infrastructure. As this service is now generally available (GA), it was a perfect choice for this customer.

This solution is simple yet secure, and it has multiple benefits over using IaaS VMs:

- It does not use public IPs. It doesn’t need one, as the Azure DevOps agent initiates the communication to the service.

- It does not have any exposed ports. There’s no need for publishing anything.

- Logging in to these containers is not possible (console and network access are not available). Only the configuration script’s outputs can be read, as these are printed to the console.

- There’s no need to maintain a VNET or any other infrastructure pieces, as these ACI instances can exist on their own.

- It has a lightweight footprint.

- It can be provisioned very quickly: fully configuring a container instance with the required components takes 5-10 minutes.

- It is immutable: it does not need patching/management. For version upgrades, the existing instances have to be deleted, and the new ones can easily be re-created by running the provisioning scripts again.

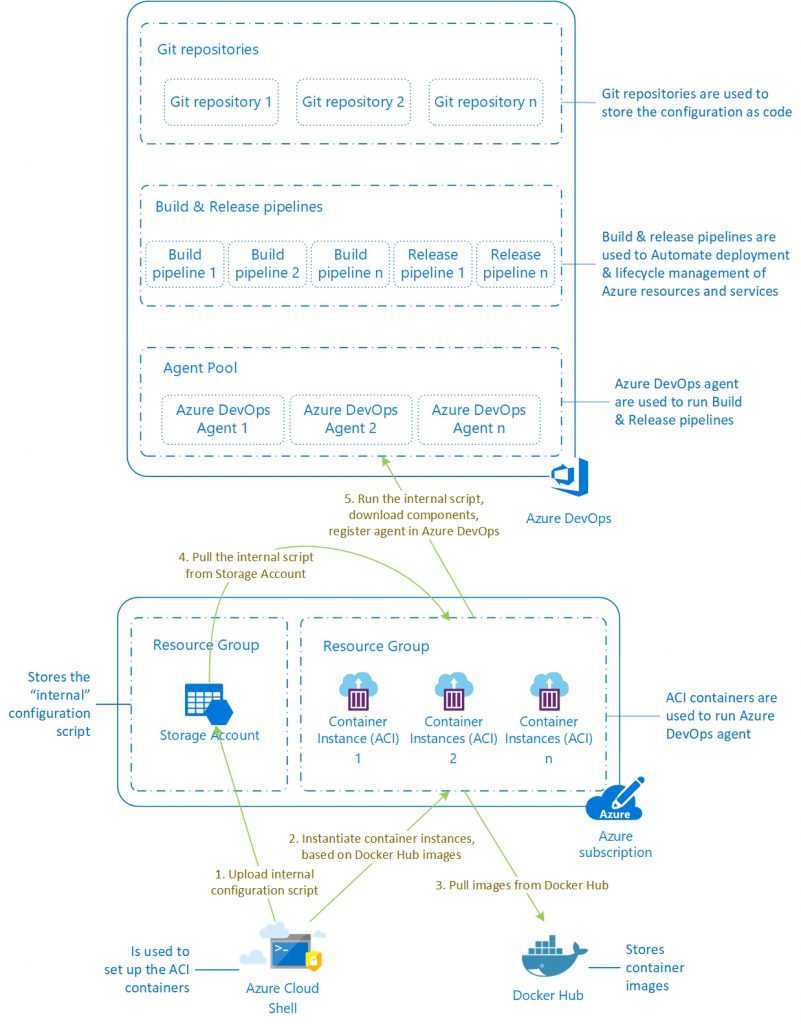

How does the solution work?

The solution consists of 3 scripts, all of which have to be placed in the same folder:

- Initialize-VstsAgentOnWindowsServerCoreContainer.ps1 – the external, “wrapper” script.

- Install-VstsAgentOnWindowsServerCoreContainer.ps1 – the container configuration script (internal script, runs inside of the containers) – this should never be invoked directly.

- Remove-VstsAgentOnWindowsServerCoreContainer.ps1 – removal script that can be used to delete containers that are no longer required.

1. Initialize-VstsAgentOnWindowsServerCoreContainer.ps1

The wrapper script can be invoked from any location (including Azure Cloud Shell) that has the required components installed (see the prerequisites below).

The wrapper script performs the following steps in order: it copies the internal container configuration script to a publicly available storage container of the requested Storage Account; creates a new Resource Group (if one doesn’t exist with the provided name); removes any pre-existing ACI containers with the same name within the same Resource Group; and then creates new ACI container instance(s) based on the provided names and invokes the container configuration script inside the container(s).

The container(s) are based on the latest version of the official Windows Server Core image (microsoft/windowsservercore LTSC) available on Docker Hub.
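The sequence of steps described above can be sketched as an ordered plan. This is only an illustration under stated assumptions, not the actual wrapper script: it expresses the steps as Azure CLI (`az`) argument lists rather than whatever cmdlets the real script uses, and the function name `Get-AgentContainerPlan`, the `scripts` storage container name, the `Always` restart policy and the `-CommandLine` parameter are all hypothetical.

```powershell
# Hypothetical helper: returns the wrapper's steps, in order, as Azure CLI
# argument lists. It only builds the plan; nothing is sent to Azure here.
function Get-AgentContainerPlan {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $ContainerName,
        [Parameter(Mandatory)] [string] $Location,
        [Parameter(Mandatory)] [string] $StorageAccountName,
        [Parameter(Mandatory)] [string] $CommandLine,   # assumed: command run at container start
        [string] $BlobContainer = 'scripts',            # assumed storage container name
        [string] $ScriptFile = 'Install-VstsAgentOnWindowsServerCoreContainer.ps1',
        [string] $Image = 'microsoft/windowsservercore'
    )

    $upload = @('storage', 'blob', 'upload', '--account-name', $StorageAccountName,
                '--container-name', $BlobContainer, '--name', $ScriptFile, '--file', $ScriptFile)
    $group  = @('group', 'create', '--name', $ResourceGroupName, '--location', $Location)
    $delete = @('container', 'delete', '--resource-group', $ResourceGroupName,
                '--name', $ContainerName, '--yes')
    # No --ip-address argument is passed, so no public IP is requested.
    $create = @('container', 'create', '--resource-group', $ResourceGroupName,
                '--name', $ContainerName, '--image', $Image, '--os-type', 'Windows',
                '--location', $Location, '--restart-policy', 'Always',
                '--command-line', $CommandLine)

    return @(
        [pscustomobject]@{ Step = 'Upload configuration script';   Arguments = $upload }
        [pscustomobject]@{ Step = 'Ensure resource group exists';  Arguments = $group }
        [pscustomobject]@{ Step = 'Remove pre-existing container'; Arguments = $delete }
        [pscustomobject]@{ Step = 'Create container instance';     Arguments = $create }
    )
}

# To execute the plan (requires a logged-in Azure CLI), something like:
# foreach ($s in Get-AgentContainerPlan -ResourceGroupName 'rg1' -ContainerName 'agent01' `
#         -Location 'westeurope' -StorageAccountName 'sa1' -CommandLine '<start command>') {
#     Write-Host $s.Step
#     $azArgs = $s.Arguments
#     az @azArgs
# }
```

Separating the plan from its execution makes the order of operations easy to review before anything is created or deleted.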

2. Install-VstsAgentOnWindowsServerCoreContainer.ps1

The internal, container configuration script downloads and installs the latest available version of the Azure DevOps agent, and registers the instance(s) to the selected Agent Pool. It also configures the instance(s) with the latest version of Terraform, json2hcl and the selected PowerShell modules (by default AzureRM, AzureAD, Pester).
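The registration step can be pictured roughly as follows. This is a minimal sketch, not the script's actual code: the function name `Get-AgentConfigArguments`, its parameters and the account URL format are assumptions, while the flags are those of the agent's unattended `config.cmd` setup.

```powershell
# Hypothetical helper: builds the argument list for the agent's unattended
# config.cmd registration. Function name, parameters and URL format are assumptions.
function Get-AgentConfigArguments {
    param(
        [Parameter(Mandatory)] [string] $AccountName,
        [Parameter(Mandatory)] [string] $PatToken,
        [Parameter(Mandatory)] [string] $PoolName,
        [Parameter(Mandatory)] [string] $AgentName
    )

    return @(
        '--unattended'
        '--url',   "https://${AccountName}.visualstudio.com"
        '--auth',  'pat'
        '--token', $PatToken
        '--pool',  $PoolName
        '--agent', $AgentName
        '--runAsService'   # registers the agent as a Windows service
    )
}

# Usage from the extracted agent folder (not executed here):
# $agentArgs = Get-AgentConfigArguments -AccountName 'contoso' -PatToken $pat `
#     -PoolName 'aci-pool' -AgentName 'agent01'
# & .\config.cmd @agentArgs
```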

After the successful configuration, it prints the available disk space and keeps periodically checking that the “vstsagent” service is in the running state. Failure of this service will cause the container instance to be re-initialized. If this happens and the PAT token is still valid, the container will auto-heal itself. If the PAT token has already been revoked or has expired by this time, the container re-creation will fail.
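A periodic service check of this kind might look like the sketch below. It is an assumption-laden illustration rather than the script's real loop: the function name `Wait-WhileServiceRunning`, the `vstsagent*` name pattern and the 60-second interval are hypothetical, and it assumes the container's restart policy re-runs the configuration once the script exits.

```powershell
# Hypothetical watchdog: polls for a service whose name matches the pattern
# and returns $false as soon as it is missing or no longer running.
function Wait-WhileServiceRunning {
    param(
        [string] $NamePattern = 'vstsagent*',   # assumed service name pattern
        [int] $IntervalSeconds = 60,            # assumed polling interval
        [int] $MaxChecks = 0                    # 0 means "check forever"
    )

    $checks = 0
    while ($MaxChecks -eq 0 -or $checks -lt $MaxChecks) {
        $service = Get-Service -Name $NamePattern -ErrorAction SilentlyContinue |
            Select-Object -First 1
        if (-not $service -or $service.Status -ne 'Running') {
            return $false
        }
        $checks++
        Start-Sleep -Seconds $IntervalSeconds
    }
    return $true   # only reached when MaxChecks is set and every check passed
}

# At the end of the configuration script, something like:
# if (-not (Wait-WhileServiceRunning)) { exit 1 }
```

Exiting the script when the service stops is what hands control back to the platform, which can then start the container again.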

3. Remove-VstsAgentOnWindowsServerCoreContainer.ps1

This removal script removes the selected Azure Container Instance(s). It leaves the Resource Group (and other resources within it) intact.

Prerequisites

- Azure Subscription, with an existing Storage Account

- Access to Azure Cloud Shell (as the solution has been developed for and tested in Cloud Shell)

- You need to have admin rights:

  - To create a storage container within the already existing Storage Account – OR – a storage container with the public access type of “Blob” has to exist,

  - To create a new Resource Group – OR – an existing Resource Group for the Azure Container Instances has to exist,

  - To create resources in the selected Resource Group.

- An Azure DevOps account in which the requested Agent Pool exists.

- Permission in the Azure DevOps account to add agents to the chosen Agent Pool.

- A PAT token.

  - A PAT token can only be read once, at the time of creation.

  - PAT tokens cannot be used for privilege escalation.

  - To learn more about PAT tokens, visit the Use Personal Access Tokens to Authenticate site.

How to manage the solution’s lifecycle

1. Initialize ACI containers in Azure Cloud Shell

- Get access to the Azure DevOps account where you would like to create the new agents. You might need to have rights to create a new Agent Pool if the requested one doesn’t exist.

- Get a PAT token for agent registration (Agent Pools: read, manage; Deployment group: read, manage). For a detailed description of this step, see the Deploy an agent on Windows page of the Azure DevOps documentation.

- Get access to the Azure Subscription where you need to deploy the ACI containers. See more details in the Prerequisites section above.

- Get access to Azure Cloud Shell. See Quickstart for PowerShell in Azure Cloud Shell for more details.

- Copy the three .ps1 script files described above into the same folder in your Cloud Shell storage.

- Run the below script to create containers in your selected region:

.\Initialize-VstsAgentOnWindowsServerCoreContainer.ps1 -SubscriptionName "<subscription name>" -ResourceGroupName "<resource group name>" -ContainerName "<container 1 name>", "<container 2 name>", "<container n name>" -Location "<azure region 1>" -StorageAccountName "<storage account name>" -VSTSAccountName "<azure devops account name>" -PATToken "<PAT token>" -PoolName "<agent pool name>"

- In case you would like to have containers in any additional regions, re-run the script with a different “Location” parameter:

.\Initialize-VstsAgentOnWindowsServerCoreContainer.ps1 -SubscriptionName "<subscription name>" -ResourceGroupName "<resource group name>" -ContainerName "<container 1 name>", "<container 2 name>", "<container n name>" -Location "<azure region 2>" -StorageAccountName "<storage account name>" -VSTSAccountName "<azure devops account name>" -PATToken "<PAT token>" -PoolName "<agent pool name>"

- If the container creation procedure fails, Azure automatically retries creating a new instance.

- Read the logs of each container.

- In case of success, you can decide if you want to delete your PAT token. See more in Revoke personal access tokens to remove access.

- In case any unhandled errors occurred, you can re-run the script for the instance in question, using the “-ReplaceExistingContainer” switch (see the description below in the Update ACI containers section).

- As long as your PAT token is valid, you can remove the agents’ registration on the Azure DevOps portal. This will trigger the container to stop the Azure DevOps (VSTS) service, restart, and reapply all the settings defined in the container configuration script (“Install-VstsAgentOnWindowsServerCoreContainer”).

- If the PAT token has already been removed, in order to update/re-register the containers, you’ll have to generate a new PAT token, remove the existing containers, and re-run the “Initialize-VstsAgentOnWindowsServerCoreContainer.ps1” script.
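When deploying to several regions, the repeated invocations from the steps above can be driven from one shared parameter set. The sketch below is one possible way to do that, assuming a hypothetical helper `Get-RegionalParameters`; it also appends the region to each container name (an assumption, not something the original scripts do), because the wrapper removes pre-existing containers with the same name in the same Resource Group.

```powershell
# Hypothetical helper: produces one splattable parameter set per region.
function Get-RegionalParameters {
    param(
        [Parameter(Mandatory)] [hashtable] $Common,     # parameters shared by all regions
        [Parameter(Mandatory)] [string[]] $Location,    # Azure regions to deploy to
        [Parameter(Mandatory)] [string[]] $BaseName     # container base names
    )

    foreach ($region in $Location) {
        $params = $Common.Clone()   # shallow copy, so $Common itself is not modified
        $params.Location = $region
        # Region-specific names avoid replacing another region's containers.
        $params.ContainerName = @($BaseName | ForEach-Object { '{0}-{1}' -f $_, $region })
        $params                     # emit one hashtable per region
    }
}

# Usage (placeholders as in the commands above; not executed here):
# $common = @{
#     SubscriptionName   = '<subscription name>'
#     ResourceGroupName  = '<resource group name>'
#     StorageAccountName = '<storage account name>'
#     VSTSAccountName    = '<azure devops account name>'
#     PATToken           = '<PAT token>'
#     PoolName           = '<agent pool name>'
# }
# foreach ($p in Get-RegionalParameters -Common $common `
#         -Location 'westeurope', 'northeurope' -BaseName 'agent01', 'agent02') {
#     .\Initialize-VstsAgentOnWindowsServerCoreContainer.ps1 @p
# }
```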

2. Update ACI containers

- If you would like to update your existing ACI containers, you can re-run the same “.\Initialize-VstsAgentOnWindowsServerCoreContainer.ps1” script using the “-ReplaceExistingContainer” switch as follows:

.\Initialize-VstsAgentOnWindowsServerCoreContainer.ps1 -SubscriptionName "<subscription name>" -ResourceGroupName "<resource group name>" -ContainerName "<container 1 name>", "<container 2 name>", "<container n name>" -Location "<azure region 2>" -StorageAccountName "<storage account name>" -VSTSAccountName "<azure devops account name>" -PATToken "<PAT token>" -PoolName "<agent pool name>" -ReplaceExistingContainer

- This will wipe out the existing container(s), and re-register the new one(s) with the same name. Note that this will create a new agent registration in Azure DevOps, as the agent names are generated based on the following pattern:

–

- Once the new container(s) have been provisioned, the old agents become orphaned. These have to be manually deprovisioned on the Azure DevOps portal.

3. Delete ACI containers

- To remove the ACI containers that are no longer required, run the below script:

.\Remove-VstsAgentOnWindowsServerCoreContainer.ps1 -SubscriptionName "<subscription name>" -ResourceGroupName "<resource group name>" -ContainerName "<container 1 name>", "<container 2 name>", "<container n name>"

- Once the containers have been removed, the agents on the Azure DevOps portal become orphaned. These have to be manually deprovisioned (deleted) on the portal.
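To find the orphaned agents before deleting them on the portal, the agent pool can also be queried through the Azure DevOps REST API. The following is a sketch that is not part of the original scripts: the function names are hypothetical, the `dev.azure.com` URL form and API version `5.0` are assumptions about your account, and the PAT token needs the Agent Pools scopes mentioned earlier.

```powershell
# Hypothetical helper: builds a Basic authentication header from a PAT token
# (empty user name, PAT as the password).
function New-PatAuthHeader {
    param([Parameter(Mandatory)] [string] $PatToken)
    $bytes = [System.Text.Encoding]::ASCII.GetBytes(":$PatToken")
    return @{ Authorization = 'Basic ' + [Convert]::ToBase64String($bytes) }
}

# Hypothetical helper: lists the agents of a pool that are currently offline,
# which is how orphaned registrations show up.
function Get-OfflineAgent {
    param(
        [Parameter(Mandatory)] [string] $Organization,
        [Parameter(Mandatory)] [int] $PoolId,
        [Parameter(Mandatory)] [string] $PatToken
    )
    $uri = "https://dev.azure.com/$Organization/_apis/distributedtask/pools/$PoolId/agents?api-version=5.0"
    $response = Invoke-RestMethod -Uri $uri -Method Get -Headers (New-PatAuthHeader -PatToken $PatToken)
    return $response.value | Where-Object { $_.status -eq 'offline' }
}

# Usage (not executed here; review the list before removing anything):
# Get-OfflineAgent -Organization '<azure devops account name>' -PoolId 12 -PatToken '<PAT token>' |
#     Select-Object id, name, status
```

The sketch only lists agents. An agent that is offline for another reason (for example, a container that is restarting) would also appear, so the result should be checked before any registration is deleted.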

You can find the GitHub repo here: https://github.com/matebarabas/azure/tree/master/scripts/AzureDevOpsAgentOnACI

Hi, this is a very nice article. Can I use DSC configuration in ACI for this process?

Hi Pradeep, thanks very much for the positive feedback.

Technically, I believe it may be possible, but I wouldn't use DSC for containers. A key concept of containers is that they're immutable, so they should be set up in a more dynamic manner, and they shouldn't really be lifecycle-managed by any stateful solutions.

The solution that I described in this article could, and potentially will be further improved by moving the component deployment steps to the image creation phase (this can be fully automated and run on a scheduled basis, e.g. by using Azure Container Registry), thus the instantiation...