Kubernetes and Docker containers have become an important part of many organizations’ stack, as they move to transform their business digitally. Kubernetes increases the agility of your infrastructure, so you can run your apps reliably at scale. At the same time, customers who are using it have started focusing more on adopting DevOps practices to make the development process more agile too, and are implementing Continuous Integration and Continuous Delivery pipelines built around containers.

The new Azure Pipelines features we are announcing today are designed to help our customers build applications with Docker containers and deploy them to Kubernetes clusters, including Azure Kubernetes Service. These features are rolling out over the next few days to all Azure Pipelines customers, in preview.

Getting started with CI/CD pipelines and Kubernetes

We understand that one of the biggest blockers to adopting DevOps practices with containers and Kubernetes is setting up the required “plumbing”. We believe developers should be able to go from a Git repo to an app running inside Kubernetes in as few steps as possible. With Azure Pipelines, we aim to make this experience straightforward by automating the creation of the CI/CD definitions and the Kubernetes manifest.

When you create a new pipeline, Azure DevOps automatically scans the Git repository and suggests templates for container-based applications. Using the templates, you can automatically configure CI/CD and deployments to Kubernetes.

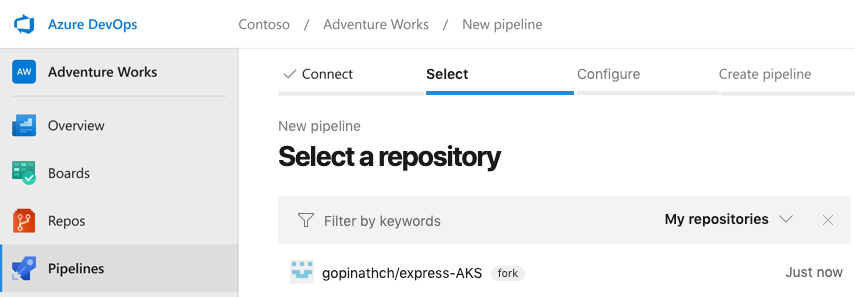

- Start by selecting the repository that contains your application code and Dockerfile.

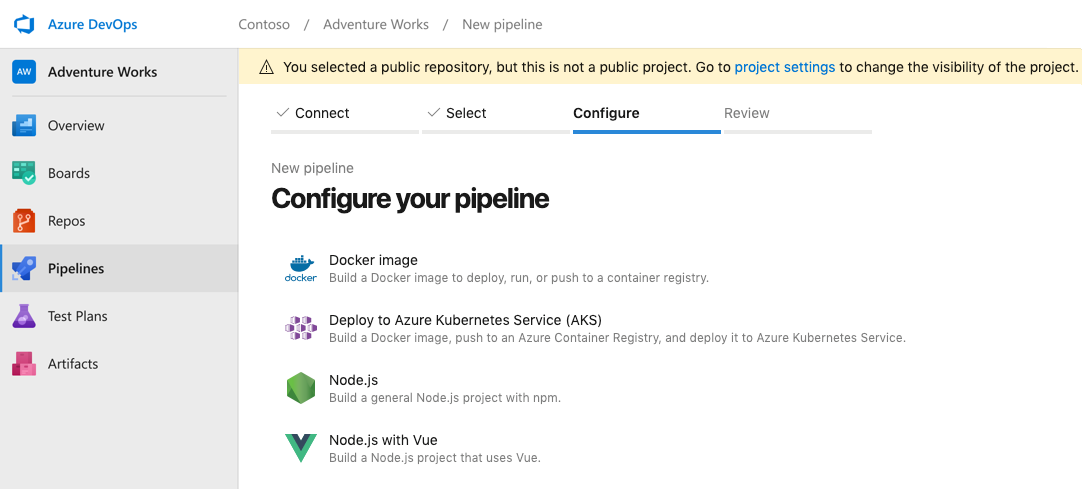

- Azure Pipelines analyzes the repository and suggests the right set of YAML templates, so you don’t need to configure it manually.

For example, here we’ve identified that the repository has a Node.js application and a Dockerfile. Azure Pipelines then suggests multiple templates, including “Docker image” (for CI only: build the Docker image and push it to a registry), and “Deploy to Azure Kubernetes Service” (which includes both CI and CD).

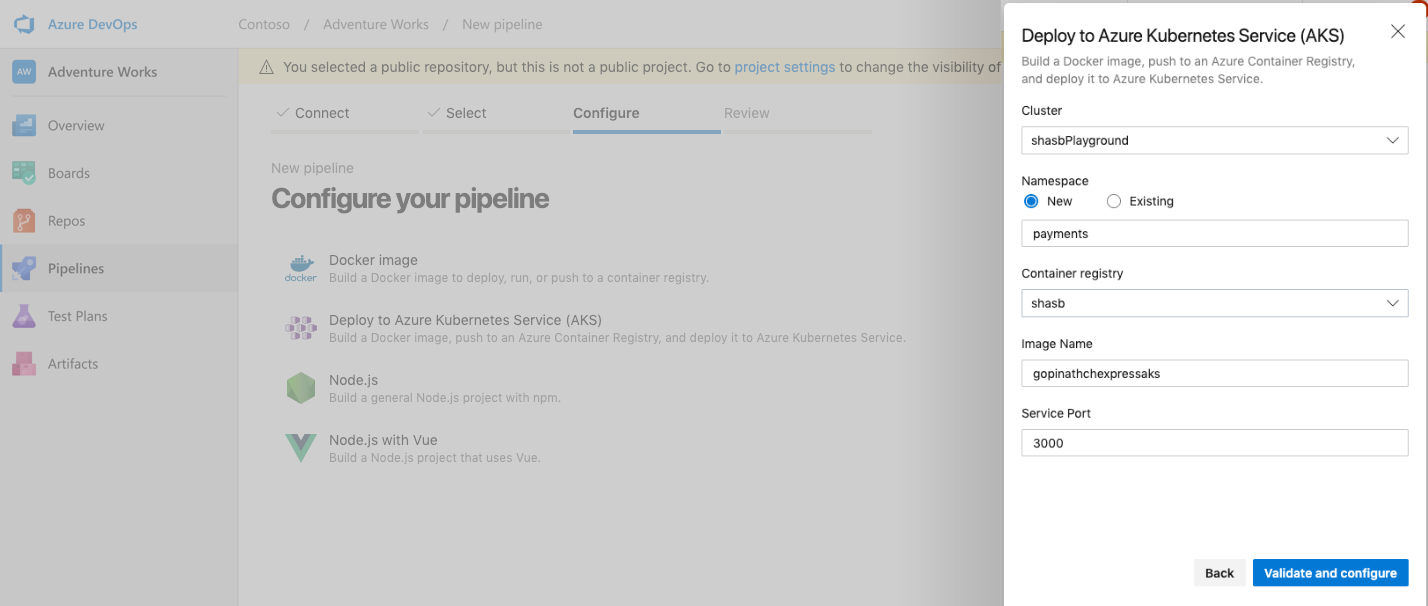

- Once you select the AKS template, you will be asked for the names of the AKS cluster, container registry and namespace; these are the only inputs you need to provide. Azure Pipelines auto-fills the image name and service port.

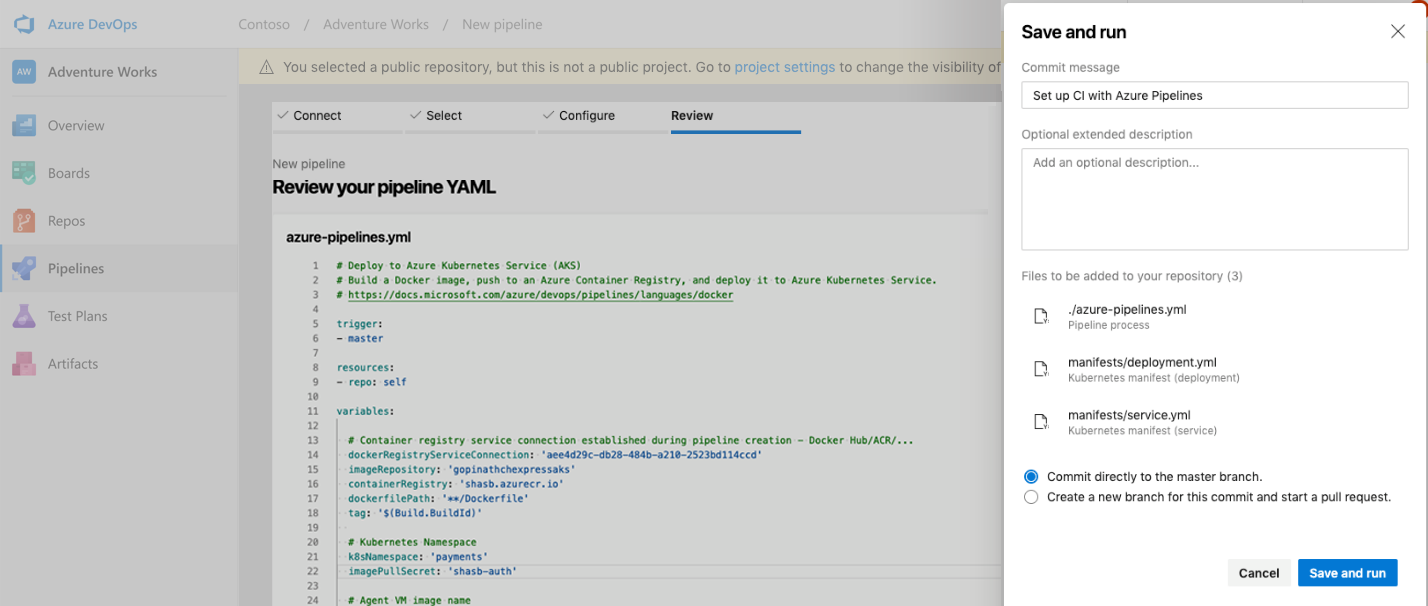

- The platform then auto-generates the YAML file for Azure Pipelines, and the Kubernetes manifest for deploying to the cluster. Both will be committed to your Git repository, so you get full configuration-as-code.

That’s it! You’ve configured a pipeline for an AKS cluster in four steps.
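As a rough illustration of what ends up in the repository, a pipeline of this kind might look like the sketch below. It is not the exact output of the template: the service connection names, registry, image repository, and manifest path are placeholder assumptions, and the generated file may be structured differently (for example, it may need an image pull secret for a private registry).

```yaml
# Sketch only: every name below is a placeholder, not template output.
trigger:
- master

variables:
  dockerRegistryServiceConnection: 'my-acr-connection'  # hypothetical service connection
  kubernetesServiceConnection: 'my-aks-connection'      # hypothetical service connection
  containerRegistry: 'myregistry.azurecr.io'            # hypothetical registry login server
  imageRepository: 'my-node-app'                        # hypothetical image name
  tag: '$(Build.BuildId)'                               # unique tag per pipeline run

stages:
- stage: Build
  displayName: Build and push image
  jobs:
  - job: Build
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    # CI: build the image from the Dockerfile and push it to the registry.
    - task: Docker@2
      displayName: Build and push to the registry
      inputs:
        command: buildAndPush
        containerRegistry: $(dockerRegistryServiceConnection)
        repository: $(imageRepository)
        Dockerfile: '**/Dockerfile'
        tags: |
          $(tag)

- stage: Deploy
  displayName: Deploy to AKS
  dependsOn: Build
  jobs:
  - job: Deploy
    pool:
      vmImage: 'ubuntu-latest'
    steps:
    # CD: a regular job checks out the repository, so the committed
    # manifests are available on the agent without extra steps.
    - task: KubernetesManifest@0
      displayName: Deploy to the cluster
      inputs:
        action: deploy
        kubernetesServiceConnection: $(kubernetesServiceConnection)
        namespace: 'default'
        manifests: 'manifests/*'
        containers: '$(containerRegistry)/$(imageRepository):$(tag)'
```

Keeping the build and deploy steps in separate stages means a failed image build stops the run before anything touches the cluster.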

Azure Pipelines then offers a set of rich views for monitoring progress and reviewing the pipeline execution summary.

Azure Pipelines’ getting started experience takes care of creating and configuring the pipeline without requiring you to know any Kubernetes concepts. All you need is a code repo with a Dockerfile. Once the pipeline is set up, you can modify its definition by using the new YAML editor, with support for IntelliSense smart code completion. You have full control, so you can add more steps like testing, or even bring in your Helm charts for deploying apps.

As we are launching this new experience in preview, we are currently optimizing it for Azure Kubernetes Service (AKS) and Azure Container Registry (ACR). Other Kubernetes clusters, for example those running on-premises or in other clouds, as well as other container registries, can be used, but require setting up a Service Account and connection manually. We are working on an improved UI to simplify adding other Kubernetes clusters and Docker registries in the next few months.

Deploying to Kubernetes

Azure Pipelines customers have been able to deploy apps to Kubernetes clusters by using the built-in tasks for kubectl and Helm. It’s also possible to run custom scripts to achieve the same results. While both methods can be effective, they require workarounds to make deployments work correctly. For example, when you are deploying a container image to Kubernetes, the image tag changes with each pipeline run, so customers need some form of tokenization to update their Helm chart or Kubernetes manifest files. Simply running the command could also result in scenarios where the pipeline run was successful (because the command returned successfully), but the app deployment failed for other reasons, for example because an imagePullSecret value was not set. Solving these issues requires writing more scripts to check the state of deployments.
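To make the problem concrete, a hand-rolled deployment step might look roughly like the following sketch. It assumes a hypothetical `__IMAGE_TAG__` placeholder in `manifests/deployment.yml`, a Deployment named `my-app`, and a Linux agent where `kubectl` is already authenticated against the cluster; none of these names come from the product.

```yaml
steps:
- bash: |
    set -e
    # Tokenization: replace the placeholder with this run's image tag.
    # $(Build.BuildId) is expanded by Azure Pipelines before the script runs.
    sed -i "s|__IMAGE_TAG__|$(Build.BuildId)|g" manifests/deployment.yml

    # 'kubectl apply' returns as soon as the API server accepts the objects,
    # so on its own it says nothing about whether the pods actually start.
    kubectl apply -f manifests/deployment.yml --namespace default

    # Extra check: fail the step if the rollout does not complete in time,
    # for example because the image cannot be pulled.
    kubectl rollout status deployment/my-app --namespace default --timeout=120s
  displayName: Deploy with custom scripts
```

Every pipeline that deploys this way has to carry its own copy of the substitution and the rollout check.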

To simplify this, we are introducing a new “Deploy Kubernetes manifests” task, available in preview. This task goes beyond just running commands, solving some of the problems that customers face when deploying to Kubernetes. It includes features such as deployment strategies, artifact substitution, metadata annotation, manifest stability check, and secret handling.
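As a hedged sketch of how secret handling and a deployment strategy could be combined, the steps below first create an image pull secret and then deploy with a canary strategy. The input names reflect the task reference as we understand it; because the task is in preview, check them against the current documentation. The secret name and registry connection are hypothetical.

```yaml
steps:
# Create (or update) an image pull secret from a Docker registry service connection.
- task: KubernetesManifest@0
  displayName: Create imagePullSecret
  inputs:
    action: createSecret
    kubernetesServiceConnection: 'someK8sSC1'
    namespace: 'default'
    secretType: dockerRegistry
    secretName: 'my-registry-secret'             # hypothetical secret name
    dockerRegistryEndpoint: 'my-acr-connection'  # hypothetical registry connection

# Deploy a canary alongside the stable workload.
- task: KubernetesManifest@0
  displayName: Deploy canary
  inputs:
    action: deploy
    kubernetesServiceConnection: 'someK8sSC1'
    namespace: 'default'
    strategy: canary
    percentage: 25                               # share of replicas given to the canary
    manifests: 'manifests/*'
    containers: 'foobar/demo:$(tagVariable)'     # artifact substitution: image and tag to inject
    imagePullSecrets: |
      my-registry-secret
```

In a setup like this, a later step would typically finish the rollout with the task's `promote` action, or discard the canary with `reject`.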

When you use this task to deploy to a Kubernetes cluster, the task annotates the Kubernetes objects with CI/CD metadata such as the pipeline run ID. This helps with traceability: if you want to know how and when a specific Kubernetes object was created, you can look it up in the annotations on that object (for example, a pod or a deployment).

We have improved the Kubernetes service connection to cover all the different ways in which you can connect and deploy to a cluster. We understand that Kubernetes clusters are often used by multiple teams, deploying different microservices, and a key requirement is to give each team permission to a specific namespace.

The new Kubernetes manifest task can be defined as YAML too, for example:

    steps:
    - task: "KubernetesManifest@0"
      displayName: "Deploy"
      inputs:
        kubernetesServiceConnection: "someK8sSC1"
        namespace: "default"
        manifests: "manifests/*"
        containers: 'foobar/demo:$(tagVariable)'
        imagePullSecrets: |
          some-secret
          some-other-secret

You can now connect to the Kubernetes cluster by using Service Account details or by supplying a kubeconfig file. Alternatively, if you use Azure Kubernetes Service, you can provide your Azure subscription details and have Azure DevOps automatically create a Service Account scoped to a specific cluster and namespace.
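For clusters where you set up the Service Account yourself, namespace-scoped access can be expressed with standard Kubernetes RBAC objects. The manifest below is a minimal sketch, not what Azure DevOps generates: the `team-a` namespace, the object names, and the list of resources and verbs are all assumptions to adapt to your own policies.

```yaml
# Service Account that the pipeline's service connection would use.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: team-a-deployer
  namespace: team-a
---
# Role granting deployment-related permissions inside the team-a namespace only.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: team-a-deploy
  namespace: team-a
rules:
- apiGroups: ["", "apps"]
  resources: ["deployments", "replicasets", "pods", "services", "secrets"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Bind the Role to the Service Account; no cluster-wide access is granted.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: team-a-deploy-binding
  namespace: team-a
subjects:
- kind: ServiceAccount
  name: team-a-deployer
  namespace: team-a
roleRef:
  kind: Role
  name: team-a-deploy
  apiGroup: rbac.authorization.k8s.io
```

Because a `Role` and `RoleBinding` are namespaced, a pipeline using this Service Account cannot modify objects that belong to other teams' namespaces.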

Hybrid and multi-cloud support

You can use our Kubernetes features regardless of where your cluster is deployed, including on-premises and on other cloud providers, which makes applications easier to port. The features also support Kubernetes-based distributions such as OpenShift. You can use Service Accounts to target any Kubernetes cluster, as described in the documentation.

Get started & feedback

This feature will be rolled out for all accounts over the next few days. To enable it, go to the preview features page and turn on the toggle for “Multi-stage pipelines”.

You can get started with Azure Pipelines by creating a free account. Azure Pipelines, part of Azure DevOps, is free for individuals and small teams of up to five.

Additionally, if you’re looking for a way to get started quickly with Kubernetes, Azure Kubernetes Service provides a fully-managed Kubernetes cluster running on the cloud; try it out with a free Azure trial account. After that, check out the full documentation available on integrating Azure Pipelines with Kubernetes.

As always, if you have feedback for our Azure Pipelines team, feel free to comment below, or send us a tweet to @AzureDevOps.

Nice post Gopinath. For anybody interested here’s a step by step on how to build a CI/CD pipeline for a containerized Asp.Net Core 3.0 Web API: https://youtu.be/nsG3xbIoeJo

Can you provide some information on how is the “KubernetesManifest@0” task supposed to be used with the new Environments?

Cheers