Background

Azure Video Analyzer enables developers to quickly build an AI-powered video analytics solution that extracts actionable insights from videos, whether stored or streaming. It can be used to enhance workplace safety, in-store experiences, digital asset management, media archives, and more, by helping users understand the audio and visual content of videos without video or machine learning expertise [1]. In this solution, we use Azure Video Analyzer and Azure Search to retrieve specific moments from a video archive.

Challenges and Objectives

Our customers needed to search their video archives and find the specific moments in a video that match a given query. This post presents a solution that searches for specific features and retrieves the moments related to the query.

For the purposes of this post, a moment is an interval of n seconds of a video. An example of a moment query is “find the moments where Jean Claude appears in the Friends TV show.”

Additionally, our customers required support for custom features, that is, features that Azure Video Analyzer does not extract.

The Challenges:

- Azure Video Analyzer receives a video file and produces one JSON file containing the features extracted from the whole video (e.g., one file containing all the features for a 00:20:10 show). If we use that file as a single record in our search index, we cannot answer the query above without post-processing steps to locate the desired moments.

- Post-processing large records to find the relevant moments is too costly and inefficient.

- Azure Video Analyzer does not natively support custom features.

In this post, we will discuss our experience using Azure Video Analyzer to accomplish this objective in three steps:

- Create a search index with the flexibility to accommodate custom features.

- Upload moments to the index.

- Establish search queries to retrieve related moments from the index.

Solution

We resolved the challenges mentioned in the previous section in two ways:

- Create a search index that contains all existing Azure Video Analyzer features and can be extended with additional fields for custom features.

- Parse the Azure Video Analyzer JSON output file into small intervals (n seconds each) and upload them to the Azure Search index.

Each record in the Azure Search index represents n seconds of a video. When the end user searches for a query, the most relevant record (for instance, 00:11:30 to 00:11:40 of a video) is retrieved. In the next section, we explain in more detail how we accomplished our goals.
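The mapping between a record and the part of the video it covers is simple arithmetic: with n-second intervals, record i covers seconds i·n to (i + 1)·n. The sketch below illustrates this; the helper names `format_timestamp`, `moment_bounds`, and `moment_index` are hypothetical and not part of the original library.

```python
def format_timestamp(seconds):
    """Format a whole number of seconds as HH:MM:SS."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def moment_bounds(index, n=10):
    """Return the (start, end) timestamps covered by the index-th n-second record."""
    return format_timestamp(index * n), format_timestamp((index + 1) * n)


def moment_index(seconds, n=10):
    """Return the index of the n-second record that contains a given offset."""
    return int(seconds // n)
```

For example, `moment_bounds(69)` returns `("00:11:30", "00:11:40")`, the moment used as an example above, and `moment_index(695)` returns `69`.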

Architecture deep-dive

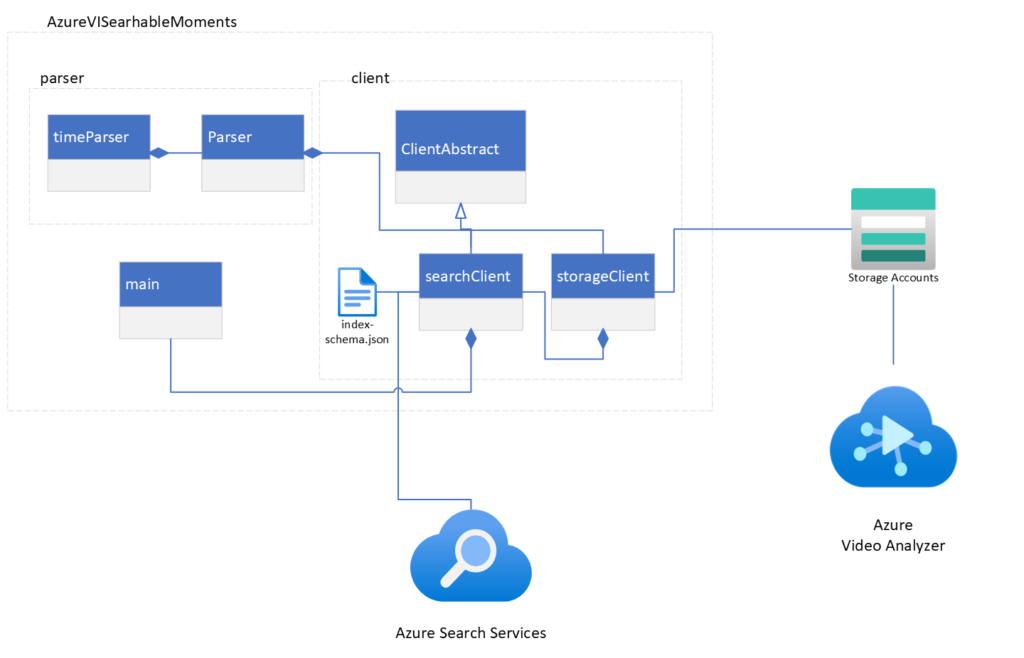

We assume that you have already processed the video files using Azure Video Analyzer and uploaded the output files into a container in a storage account.

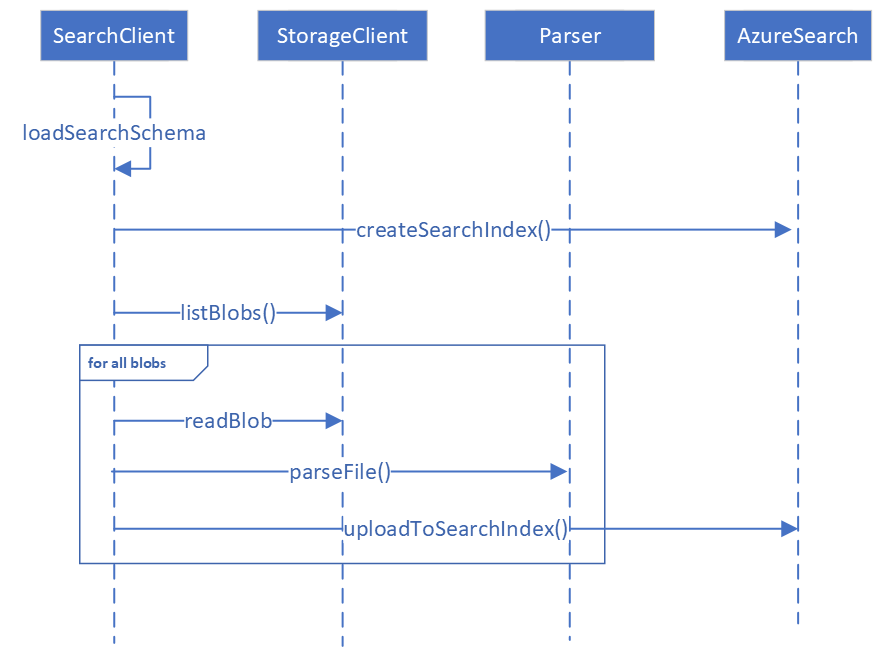

The diagram above is the system sequence diagram of the Azure Video Indexer Searchable moments library.

Create Search index:

The search index must support all Video Indexer features. Depending on the use case, we may need to add fields to the search index to accommodate custom features that Azure Video Indexer does not provide.
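A minimal sketch of such an index definition, in the JSON shape accepted by the Azure Search REST Create Index operation, is shown below. The field layout is an assumption inferred from the field paths in the example queries later in this post (`faces/face`, `emotions/emotion`, `transcripts/transcript`, `namedPeople/namedPerson`, `name`); the `id`, `start`, and `end` fields and the `build_index_schema` helper are hypothetical. Custom features are added as extra complex collections.

```python
# Hypothetical layout: each Video Indexer feature becomes a complex collection
# with a single string leaf, matching paths such as faces/face or emotions/emotion.
BASE_FEATURES = {
    "faces": "face",
    "emotions": "emotion",
    "transcripts": "transcript",
    "namedPeople": "namedPerson",
}


def string_field(name, key=False, searchable=True, filterable=False):
    """Build an Edm.String field definition."""
    field = {"name": name, "type": "Edm.String",
             "searchable": searchable, "filterable": filterable}
    if key:
        field["key"] = True
    return field


def build_index_schema(index_name, custom_features=None):
    """Return an index definition with the built-in plus any custom features."""
    features = {**BASE_FEATURES, **(custom_features or {})}
    fields = [
        string_field("id", key=True, searchable=False, filterable=True),  # one per moment
        string_field("name"),  # show or video name
        {"name": "start", "type": "Edm.Int32", "filterable": True, "sortable": True},
        {"name": "end", "type": "Edm.Int32", "filterable": True, "sortable": True},
    ]
    for collection, leaf in features.items():
        fields.append({
            "name": collection,
            "type": "Collection(Edm.ComplexType)",
            # Leaves are filterable so filters such as emotions/any(...) work.
            "fields": [string_field(leaf, filterable=True)],
        })
    return {"name": index_name, "fields": fields}
```

For instance, `build_index_schema("moments", {"logos": "logo"})` adds a made-up `logos/logo` custom feature alongside the built-in ones; the resulting dictionary can be serialized with `json.dumps` and sent to the search service when the index is created.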

Parse Video Analyzer Output to Moments:

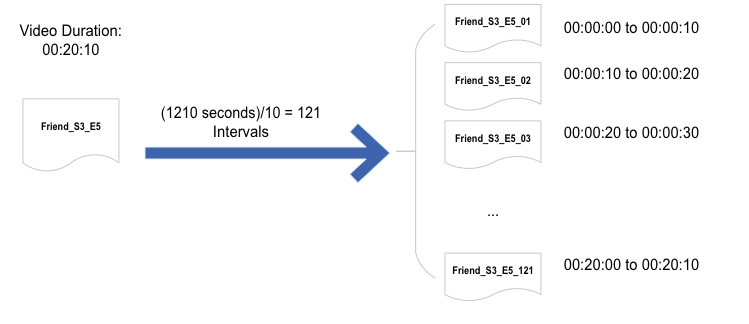

Video Indexer processes each show and generates one JSON file per video.

First, we store those JSON files in a container in our Azure Storage account.

Next, we parse each JSON file into intervals of n seconds (e.g., n = 10). For example, a video with a duration of 00:20:10 (1,210 seconds) split into 10-second intervals yields 121 documents, each covering 10 seconds of the show.
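The parsing step can be sketched as follows. This is a minimal illustration, not the library's implementation: it assumes a simplified Video Indexer-style insights dictionary in which each insight (face, emotion, transcript line, named person) carries a list of `instances` with `start` and `end` timestamps in `H:MM:SS` or `H:MM:SS.ff` form, and that the video duration in seconds is read separately from the output file. `INSIGHT_MAP`, `to_seconds`, and `split_into_moments` are hypothetical names; adjust the mapping to the JSON you actually receive.

```python
import math

# Hypothetical mapping: insight list in the JSON -> (index collection,
# leaf field, attribute of the insight that holds the value).
INSIGHT_MAP = {
    "faces": ("faces", "face", "name"),
    "emotions": ("emotions", "emotion", "type"),
    "transcript": ("transcripts", "transcript", "text"),
    "namedPeople": ("namedPeople", "namedPerson", "name"),
}


def to_seconds(timestamp):
    """Convert an 'H:MM:SS' or 'H:MM:SS.ff' timestamp to seconds."""
    hours, minutes, seconds = timestamp.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def split_into_moments(video_id, video_name, duration, insights, n=10):
    """Return one search document per n-second interval of the video."""
    count = math.ceil(duration / n)  # e.g. 1210 s with n = 10 -> 121 moments
    moments = []
    for i in range(count):
        doc = {"id": f"{video_id}-{i}", "name": video_name,
               "start": i * n, "end": min((i + 1) * n, math.ceil(duration))}
        for collection, _, _ in INSIGHT_MAP.values():
            doc[collection] = []
        moments.append(doc)

    for source, (collection, leaf, attr) in INSIGHT_MAP.items():
        for item in insights.get(source, []):
            value = item.get(attr)
            if not value:
                continue
            for instance in item.get("instances", []):
                start = to_seconds(instance["start"])
                end = to_seconds(instance["end"])
                # The instance [start, end) overlaps intervals first..last.
                first = int(start // n)
                last = max(first, math.ceil(end / n) - 1)
                for i in range(first, min(last, count - 1) + 1):
                    entry = {leaf: value}
                    if entry not in moments[i][collection]:  # avoid duplicates
                        moments[i][collection].append(entry)
    return moments
```

An insight that spans an interval boundary (for example, a face visible from 00:11:31.5 to 00:11:44) is attached to every interval it overlaps, so a query can match either moment.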

Finally, we upload these intervals (e.g., the 121 records of a 00:20:10 show with n = 10) to the search index created earlier. The architecture of the Azure Video Indexer Searchable moments library is shown in the diagrams above.
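The upload step could look like the sketch below, which uses only the Python standard library and the Azure Search REST Index Documents operation. The service name, index name, API key, and the `api-version` default are placeholders or assumptions (check which versions your service supports); `upload_batches` and `post_batch` are hypothetical helpers. The official `azure-search-documents` SDK (`SearchClient.upload_documents`) is an alternative to raw REST calls.

```python
import json
import urllib.request

# Azure Search accepts at most 1,000 documents per indexing request.
MAX_BATCH = 1000


def upload_batches(moments, batch_size=MAX_BATCH):
    """Yield Index Documents request bodies, marking every document for upload."""
    for i in range(0, len(moments), batch_size):
        yield {"value": [{"@search.action": "upload", **doc}
                         for doc in moments[i:i + batch_size]]}


def post_batch(service, index, api_key, body, api_version="2020-06-30"):
    """POST one batch to the Index Documents REST endpoint (network call)."""
    url = (f"https://{service}.search.windows.net/indexes/{index}"
           f"/docs/index?api-version={api_version}")
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With 121 moments per 20-minute show, a single batch per video is enough; batching only matters when several videos are uploaded together.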

Now that our records are in the Azure Search index, we can find the moments in a show that match a search query. Here are some examples of queries this method can answer:

Example 1: “Find the moments in the Friends TV show where ‘Jennifer Aniston’ is happy”

Search Query:

```json
{
  "search": "Jennifer Aniston",
  "searchFields": "faces/face,name",
  "filter": "search.ismatchscoring('friends') and emotions/any(em: em/emotion eq 'Joy')"
}
```

Example 2: “Find the moments in any show where the transcript contains ‘getting old’”

Search Query:

```json
{
  "search": "getting old",
  "searchFields": "transcripts/transcript"
}
```

Example 3: “Find the moments in the Friends TV show where ‘Jean Claude’ appears”

Search Query:

```json
{
  "search": "Jean Claude",
  "searchFields": "faces/face,namedPeople/namedPerson",
  "filter": "search.ismatchscoring('friends')"
}
```
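The three example queries share one shape: a free-text `search`, a comma-separated `searchFields` list, and an optional OData `filter`. The sketch below composes such request bodies and sends them to the Azure Search REST Search Documents operation (POST form). It is a hypothetical helper, not part of the original sample code: `build_moment_query`, `odata_literal`, and `run_search` are illustrative names, the `emotions/emotion` path follows Example 1, and the service name, index name, API key, and `api-version` default are placeholders or assumptions.

```python
import json
import urllib.request


def odata_literal(value):
    """Quote a string for an OData filter; single quotes are escaped by doubling."""
    return "'" + value.replace("'", "''") + "'"


def build_moment_query(text, search_fields, show=None, emotion=None):
    """Compose a search request body in the shape of the example queries."""
    body = {"search": text, "searchFields": ",".join(search_fields)}
    clauses = []
    if show:
        # Full-text match on the show name across searchable fields.
        clauses.append(f"search.ismatchscoring({odata_literal(show)})")
    if emotion:
        clauses.append(f"emotions/any(em: em/emotion eq {odata_literal(emotion)})")
    if clauses:
        body["filter"] = " and ".join(clauses)
    return body


def run_search(service, index, api_key, body, api_version="2020-06-30"):
    """POST the body to the Search Documents endpoint and return the hits."""
    url = (f"https://{service}.search.windows.net/indexes/{index}"
           f"/docs/search?api-version={api_version}")
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["value"]  # each hit is one n-second moment
```

For instance, `build_moment_query("Jean Claude", ["faces/face", "namedPeople/namedPerson"], show="friends")` reproduces the body of Example 3. Escaping through `odata_literal` keeps names such as "O'Brien" from breaking the filter expression.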

Summary

Azure Video Indexer produces one file containing the features extracted from each video. However, if we upload that file to our search index as-is, we cannot retrieve the moments that correspond to our search query; instead, the search returns the whole video.

In this blog post, we propose a method for searching for moments in a video by splitting each video into small intervals of n seconds (e.g., n = 10). We then upload those records to an Azure Search index whose schema matches the Video Indexer interval records. You can find the sample code in this repository.

As a result, when we search for a query, the search engine retrieves the most relevant record (containing n seconds of a video, e.g., n = 10).

The Team

Below is the Microsoft team that worked with me on this project.

- Maysam Mokarian, mamokari@microsoft.com

- Jit Ghosh, pghosh@microsoft.com

- Mona Soliman Habib, Mona.Habib@microsoft.com

- Jacob Spoelstra, Jacob.Spoelstra@microsoft.com

- Nick Landry, Nick.Landry@microsoft.com

- Anna Zietlow, Anna.Zietlow@microsoft.com

- Samantha Rouphael, saroup@microsoft.com

- Chris Mayo, cmayo@microsoft.com