Introduction

During a pandemic, public health practitioners need a way to let people know when they have been exposed to a contagious disease. This practice is known as contact tracing, and the COVID-19 pandemic is no exception. Effective contact tracing increases the volume of traced contacts and slows the spread of communicable diseases. In support of this goal, our team had the pleasure of working directly with engineers from Google, Apple, and the Association of Public Health Laboratories (APHL) to build a “national key server” that enables COVID-19 exposure notifications to be shared across all US states and territories.

In this post I describe the journey of our Microsoft team to deploy an exposure notification server on Azure in a highly repeatable, scalable, and privacy-preserving manner. First let’s cover some background.

Exposure Notifications and Contact Tracing

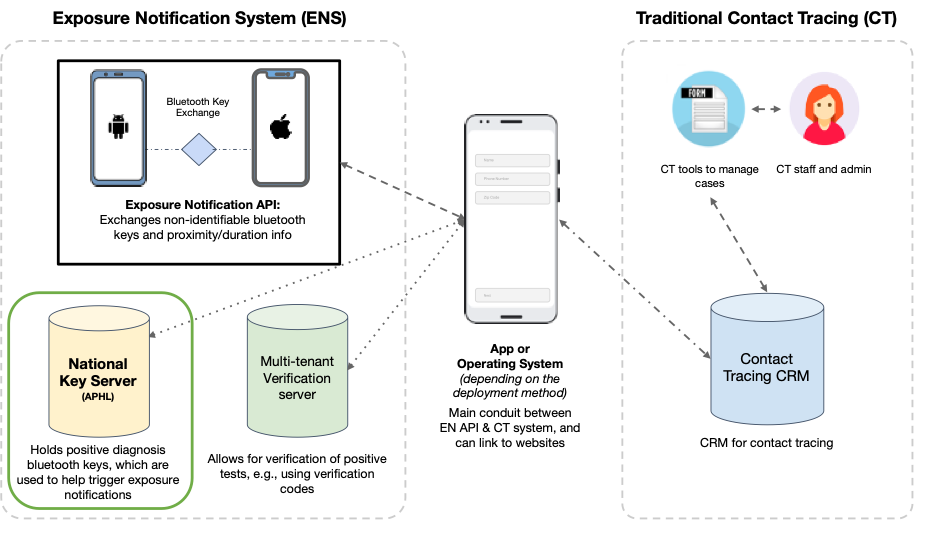

The Google | Apple Exposure Notification (GAEN) solution is an anonymous way for users to find out whether they may have been near someone who was diagnosed with COVID-19. To use the system, you download an app from your state public health agency and then agree to turn on exposure notifications (EN) on your phone. To complement this option, Exposure Notifications Express (ENX) was added to the GAEN solution to allow users to sign up without an app.

At this point your phone begins exchanging anonymous identifiers (random numbers) with other participating phones that are nearby. These identifiers are called “temporary exposure keys” (TEKs). Should a participant be diagnosed with COVID-19, they can share their diagnosis in their Exposure Notification app or the Express interface.

Next, with user consent, the EN client uploads anonymous TEKs (no identifying information, no location) to a central server. Every phone enrolled in EN downloads all positive keys from this server daily. The phone scans the identifiers it has stored locally to see whether any of them match the downloaded keys. If there is a match, your phone notifies you that you were potentially exposed and provides information to help you determine the appropriate next steps.
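The on-device matching step can be sketched in a few lines of Python. This is a simplified illustration, not the GAEN implementation: it assumes that the identifiers a phone broadcasts are derived from a daily key, and it uses HMAC-SHA256 as a stand-in for the real derivation. The function names `derive_identifiers` and `find_exposures` are hypothetical.

```python
import hashlib
import hmac
import secrets

INTERVALS_PER_DAY = 144  # assumes 10-minute intervals, so 144 per day


def derive_identifiers(tek: bytes, intervals: int = INTERVALS_PER_DAY) -> set[bytes]:
    """Derive the rotating identifiers a phone would broadcast for one daily key.

    HMAC-SHA256 is a stand-in here; it is NOT the derivation GAEN specifies.
    """
    return {
        hmac.new(tek, interval.to_bytes(4, "little"), hashlib.sha256).digest()[:16]
        for interval in range(intervals)
    }


def find_exposures(downloaded_teks: list[bytes], observed: set[bytes]) -> list[bytes]:
    """Return the downloaded keys whose derived identifiers were seen nearby."""
    return [tek for tek in downloaded_teks if derive_identifiers(tek) & observed]


# A diagnosed user's key and an unrelated key, both 16 random bytes.
positive_tek = secrets.token_bytes(16)
other_tek = secrets.token_bytes(16)

# This phone overheard a few of the diagnosed user's identifiers over Bluetooth.
observed_nearby = set(list(derive_identifiers(positive_tek))[:3])

matches = find_exposures([positive_tek, other_tek], observed_nearby)
```

Because matching happens entirely on the phone, the server in this sketch never learns which identifiers a device has observed.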

Historically, US state public health agencies (PHAs) have had a distributed model for contact tracing. While states can host their own key servers, a centralized national key server (NKS) allows notifications to be shared with users from all states. With this in mind, APHL agreed to host a national key server for the GAEN solution. This would allow anonymous keys to be pooled together and support interstate scenarios. Operational concerns, such as awareness of key server updates, could be handled in one place, and PHAs and state client developers would have a single place to coordinate onboarding and view reporting.

The national key server is an implementation of an exposure notification key server. We will refer to this key server implementation as ENS for the rest of this post.

(Note: The GAEN solution also runs internationally, where each country has its own apps and servers.)

Challenge and Objectives

A top goal for the Microsoft team was to operationalize the national key server for APHL and so enable exposure notifications and contact tracing. Operationalizing means:

- Continuous integration and deployment of the national key server

- Easily observable microservice and infrastructure health

- A well-defined path to high availability and national-level scale

- Fully implemented security fundamentals

Since the Azure cloud was new to APHL, a key objective was to support APHL in learning how to own and operate production services on Azure. Moreover, some US states were using proprietary key servers, and these states would need to be migrated onto the national key server. Lastly, close communication with Google, Apple, and state-level public health agencies was critical to align with the iOS and Android releases of EN and ENX. This would involve testing the national key server with state-level developers in pre-production environments to verify the protocol.

Choosing the Architecture

We chose to run the national key server on Azure Kubernetes Service (AKS). Running the NKS on Kubernetes would allow fine-grained management of services: we could configure role-based access control (RBAC), horizontal scaling, and deployment declaratively using YAML files. Out of the box, the Azure Monitor integration in AKS would give us observability into the infrastructure, network, and pods.

The reference key server solution uses PostgreSQL, so Azure PostgreSQL was a natural fit along with Azure Storage to persist TEK blobs.

With so many components, a centralized store to control secrets was needed. Azure Key Vault was the first choice for securely storing and accessing secrets. Azure Key Vault has integration with AKS and the ability to audit secret management. These behaviors were congruent with our privacy and compliance goals for an Azure based system.

High availability is a core requirement for the national key server. Azure Front Door made sense as an entry point for the reporting of positive exposure keys from mobile clients. Front Door supports the routing scenarios we anticipated (e.g. failover to another region) and has a web application firewall built in.
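To make the failover scenario concrete, here is a minimal sketch of priority-based backend selection, the general idea behind routing traffic to a second region when the first is unhealthy. It is not Front Door's implementation or API; the `Backend` type, the region labels, and `pick_backend` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str       # hypothetical region label
    priority: int   # lower number is preferred
    healthy: bool   # result of the most recent health probe


def pick_backend(backends: list[Backend]) -> Backend:
    """Choose the healthy backend with the lowest priority number."""
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backend available")
    return min(healthy, key=lambda b: b.priority)


primary = Backend("region-a", priority=1, healthy=True)
secondary = Backend("region-b", priority=2, healthy=True)

normal_choice = pick_backend([primary, secondary])

# Simulate a failed health probe on the primary region.
failed_primary = Backend("region-a", priority=1, healthy=False)
failover_choice = pick_backend([failed_primary, secondary])
```

In a managed service the health probes and the switch happen at the edge, so mobile clients keep using the same hostname throughout.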

Finally, when mobile clients download positive keys to do on-device matching, they download files through Azure CDN, which handles last-mile delivery of static content. Content delivery networks are well suited to content that does not change once published. Azure CDN’s edge network within the US helps keep network latency low for mobile clients.

| Tool Used | Reason |
| --- | --- |
| Azure Kubernetes Service | Fine-grained management of services, with declarative RBAC, horizontal scaling, and deployment |
| Azure PostgreSQL | Key Server reference solution uses PostgreSQL |
| GitLab | Customer uses GitLab for source control |
| Azure Front Door | Enables the solution to easily adopt geography and availability-based routing |
| Azure Storage | Persistence for the TEK files that devices download to do on-device matching; works well with CDN |

Overview of National Key Server on Azure

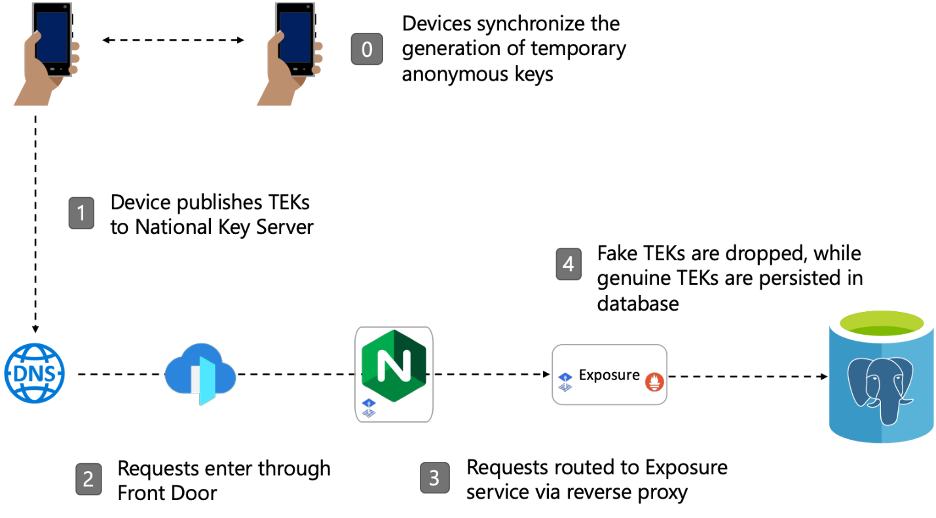

Publishing Keys from Phones

The first use case of the NKS is to allow clients to publish keys. To ingest the anonymous keys (TEKs), we set up an Azure Front Door with a custom DNS name. The Front Door routes requests to an NGINX ingress controller hosted within Azure Kubernetes Service. TEKs are ingested by the Exposure microservice and persisted in an Azure PostgreSQL database.

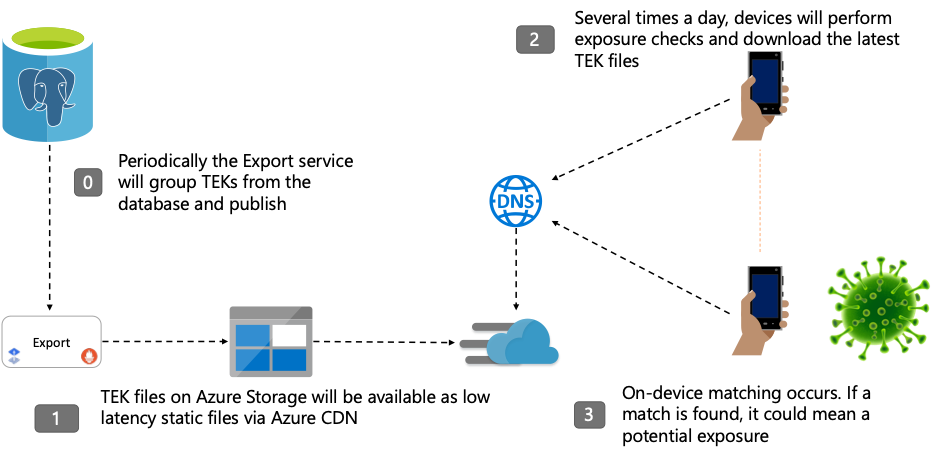
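As an illustration of what the publish path handles, the sketch below builds a JSON payload of keys and runs basic validation before anything would be persisted. The field names (`temporaryExposureKeys`, `rollingStartNumber`, `rollingPeriod`, `healthAuthorityID`) are assumptions for this example and should be checked against the key server's actual API. The sketch also assumes 16-byte keys and at most 144 ten-minute intervals per key.

```python
import base64
import json
import secrets

KEY_LENGTH_BYTES = 16        # assumed TEK size
MAX_ROLLING_PERIOD = 144     # 144 ten-minute intervals cover one day


def validate_key(entry: dict) -> list[str]:
    """Return a list of problems found in one submitted key (empty if valid)."""
    problems = []
    try:
        raw = base64.b64decode(entry.get("key", ""), validate=True)
    except (ValueError, TypeError):
        raw = b""
    if len(raw) != KEY_LENGTH_BYTES:
        problems.append("key must decode to 16 bytes")
    period = entry.get("rollingPeriod")
    if not isinstance(period, int) or not 1 <= period <= MAX_ROLLING_PERIOD:
        problems.append("rollingPeriod must be between 1 and 144")
    start = entry.get("rollingStartNumber")
    if not isinstance(start, int) or start < 0:
        problems.append("rollingStartNumber must be a non-negative integer")
    return problems


payload = {
    "healthAuthorityID": "example-state-pha",  # hypothetical identifier
    "temporaryExposureKeys": [
        {
            "key": base64.b64encode(secrets.token_bytes(16)).decode(),
            "rollingStartNumber": 2_649_888,
            "rollingPeriod": 144,
        },
        {"key": "too-short", "rollingStartNumber": 2_649_888, "rollingPeriod": 200},
    ],
}

body = json.dumps(payload)  # what a client would POST to the publish endpoint
results = [validate_key(k) for k in json.loads(body)["temporaryExposureKeys"]]
```

Here the first key passes and the second is rejected for both its encoding and its rolling period.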

Downloading Keys to Phones

Over time, other microservices run on a schedule to group, prune, and export TEKs. When a mobile device downloads the positive keys, it uses an Azure CDN endpoint with a custom DNS name. The CDN fronts an Azure Blob Storage instance that hosts the static TEK files.
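The group, prune, and export steps can be pictured with a small in-memory sketch. It is not the key server's code: the 14-day retention window, the batch size, the record layout, and the file naming are all assumptions chosen to keep the example short.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=14)   # assumed retention window
BATCH_SIZE = 2                   # tiny on purpose, to show batching


def prune(keys: list[dict], now: datetime) -> list[dict]:
    """Drop keys that were received before the retention window."""
    cutoff = now - RETENTION
    return [k for k in keys if k["received_at"] >= cutoff]


def group_into_batches(keys: list[dict], size: int = BATCH_SIZE) -> list[list[dict]]:
    """Order keys by arrival time and split them into fixed-size export batches."""
    ordered = sorted(keys, key=lambda k: k["received_at"])
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def export_names(batches: list[list[dict]], prefix: str = "exports") -> list[str]:
    """Name one static file per batch; a real job would write these to blob storage."""
    return [f"{prefix}/batch-{n:05d}.zip" for n, _ in enumerate(batches, start=1)]


now = datetime(2020, 12, 1, tzinfo=timezone.utc)
stored = [
    {"key": "a", "received_at": now - timedelta(days=20)},  # too old, pruned
    {"key": "b", "received_at": now - timedelta(days=3)},
    {"key": "c", "received_at": now - timedelta(days=2)},
    {"key": "d", "received_at": now - timedelta(hours=5)},
]

fresh = prune(stored, now)
batches = group_into_batches(fresh)
files = export_names(batches)
```

Since each exported file is written once and never modified, it can be cached aggressively at the CDN edge.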

National Key Server Rollout

Infrastructure Rollout

For Azure cloud infrastructure creation and modification, we chose Terraform to match the reference solution’s GCP Terraform implementation. An infrastructure-as-code solution like Terraform had many benefits for us, chiefly our familiarity with it and its common testing patterns.

We were able to bundle the definition of Azure cloud resources in a template and create a pipeline to support greenfield deploys and Day 2 operations (such as key rotations or cluster upgrades). An example of this flexibility is being able to configure the necessary permissions for Kubernetes pods to access secrets from Azure Key Vault using Azure Active Directory (AAD). Explicit permissions and cloud resources must be orchestrated for this to work. If something needs to change in the environment, the change is easy to review in a Git pull request.

If an environment happens to drift (e.g. disaster or manual configuration) from what is stored in the remote Terraform state file, the environment can easily be recreated.
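Conceptually, detecting drift means comparing the desired configuration with what is actually deployed. The sketch below shows that comparison on plain dictionaries. It is not how Terraform is implemented, and the setting names are hypothetical.

```python
def find_drift(desired: dict, actual: dict) -> dict:
    """Map each setting that differs to its (desired, actual) pair."""
    drift = {}
    for name in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(name), actual.get(name)
        if want != have:
            drift[name] = (want, have)
    return drift


# Hypothetical settings: someone enabled public database access by hand.
desired_state = {"aks_node_count": 3, "postgres_public_access": False, "cdn_enabled": True}
actual_state = {"aks_node_count": 3, "postgres_public_access": True, "cdn_enabled": True}

drift = find_drift(desired_state, actual_state)
```

An empty result means the environment matches its definition; anything else is a candidate for re-applying the template.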

Kubernetes & GitOps

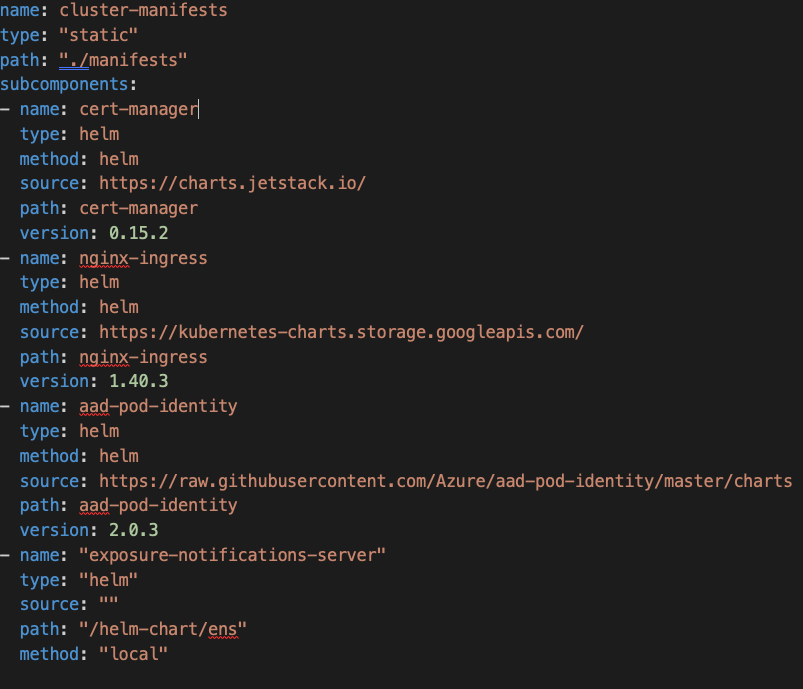

We also wanted highly effective deployments of the NKS and dependent services to our AKS cluster. A GitOps-based deployment was a natural fit for us. We’ve seen success with this approach across many partners and captured our experiences in an OSS project called Bedrock.

Bedrock’s GitOps pattern includes a tool named Fabrikate, which presents a higher-level configuration of the applications installed in each environment. We used Fabrikate to encapsulate the Helm charts that formed the basis of our GitOps deployment.

After creating a Helm chart of the NKS, we found it easy to switch between multiple environments to integrate with PHAs prior to production. This approach matches the model of an APHL operations engineer.

To make pull requests clear to the operator, we created a simple bot that comments on pull requests in the high-level definition repository. The comment shows a preview of what the downstream manifest YAML files would look like once the high-level abstraction change was made. This resulted in fewer mistakes reaching the Kubernetes cluster.
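A bot like this can be approximated with Python's standard `difflib` module. The sketch below is not the team's bot: it only builds the comment text from two versions of a generated manifest, and the function name, manifest contents, and file path are hypothetical. Posting the comment to the Git host is left out.

```python
import difflib


def render_review_comment(before: str, after: str, path: str) -> str:
    """Build a pull request comment showing how a generated manifest would change."""
    diff = list(difflib.unified_diff(
        before.splitlines(), after.splitlines(),
        fromfile=f"a/{path}", tofile=f"b/{path}", lineterm="",
    ))
    if not diff:
        return f"No changes to `{path}`."
    return "\n".join([f"Generated manifest changes for `{path}`:", "", *diff])


# Hypothetical manifests rendered before and after a high-level change.
old_manifest = "kind: Deployment\nspec:\n  replicas: 2\n"
new_manifest = "kind: Deployment\nspec:\n  replicas: 4\n"

comment = render_review_comment(old_manifest, new_manifest, "exposure/deployment.yaml")
```

The reviewer then sees the concrete effect of the change (here, a replica count going from 2 to 4) without rendering the manifests locally.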

Moreover, Terraform is compatible with GitOps. This means infrastructure changes and application changes can happen in a single pull request. This is a powerful way of operating and deploying Kubernetes-based workloads.

Validating Security and Privacy

Our work in operationalizing the NKS also meant we went through a security design lifecycle process that included threat modeling, security, privacy, and compliance reviews.

Fortunately, the ENS is a privacy-preserving model in which we don’t need to store PII or PHI. This greatly reduces the risk of identifiable data being exposed. We still needed to minimize the attack surface to guard against scenarios like DDoS attacks.

The team started with a layered security design. We included obvious items like RBAC with Kubernetes, Azure network security groups, and Private Link for Azure PostgreSQL. Next, we prioritized ways to minimize operational risk, such as using Azure Bastion from a peered Azure virtual network.

Layered security design of NKS

| Azure Features Used | Reason |
| --- | --- |
| RBAC with Kubernetes | Granular user access to Kubernetes APIs and resources is gated by declarative roles |
| Azure Network Security Groups | Filter incoming and outgoing traffic to Azure resources, provide data exfiltration protection |
| Private link for Azure PostgreSQL | Encourages network isolation by allowing access to Azure PostgreSQL securely from within an Azure Virtual Network (e.g. no need to expose public IP addresses of PostgreSQL) |
| Azure Bastion from a peered Azure virtual network | DevOps engineers can securely administer the NKS from Azure Portal without needing any public IP addresses of resources being exposed |

Observing the World

After deploying the NKS, we needed to validate that the system was operating correctly. We built end-to-end tests that simulate the publishing of TEKs and verify that they can be downloaded from the CDN endpoint. These happy-path tests were the first line of observability on any new build of the ENS. Next, we validated the onboarding of state client app developers. Using Azure Monitor Workbooks, we developed a custom dashboard that surfaces service logging, event tracing, and key metrics of the cloud infrastructure. The dashboard was deployed as part of our Terraform templates, which stamped out observability for each environment.
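The shape of such a happy-path test is: publish a fresh key, then poll the download side until it appears. The sketch below shows that loop against an in-memory stand-in. `check_round_trip` and `FakeKeyServer` are hypothetical names; a real test would call the publish endpoint and the CDN endpoint instead.

```python
import secrets
import time
from typing import Callable


def check_round_trip(
    publish: Callable[[bytes], None],
    download: Callable[[], set[bytes]],
    attempts: int = 5,
    delay_seconds: float = 0.0,
) -> bool:
    """Publish a fresh test key, then poll the download side until it appears."""
    test_key = secrets.token_bytes(16)
    publish(test_key)
    for _ in range(attempts):
        if test_key in download():
            return True
        time.sleep(delay_seconds)
    return False


class FakeKeyServer:
    """In-memory stand-in: keys become downloadable after an export step runs."""

    def __init__(self) -> None:
        self.pending: set[bytes] = set()
        self.exported: set[bytes] = set()

    def publish(self, key: bytes) -> None:
        self.pending.add(key)

    def download(self) -> set[bytes]:
        # Simulate the scheduled export by promoting pending keys on each poll.
        self.exported |= self.pending
        self.pending.clear()
        return set(self.exported)


server = FakeKeyServer()
round_trip_ok = check_round_trip(server.publish, server.download)
```

Passing the publish and download steps in as callables keeps the same check usable against a fake, a pre-production environment, or production.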

This single pane of glass was a central operation tool. We could continuously monitor our security posture and developer onboarding, while seeing load test results and monitoring the cost of the cloud resources. For instance:

- Knowing how many requests Azure Front Door is receiving

- Identifying if a DDoS event is occurring

- Knowing if Flux had any syncing issues while deploying YAML manifests

- Getting a real-time count of the number of positive exposures

Moreover, we could configure alerts on query-based reporting and analysis (including AKS pod logs and metrics). This turned the dashboard into a proactive operations center and gave us peace of mind that the NKS was highly available. We could view the increase in TEK publishing and downloading as more US states were onboarded. We knew the bounds of the system, knew when to scale, and could see the big picture.

Summary

This article covered the technical decisions behind operationalizing a production-ready NKS that enabled anonymous Bluetooth-based contact tracing in the US. We utilized many Azure managed resources.

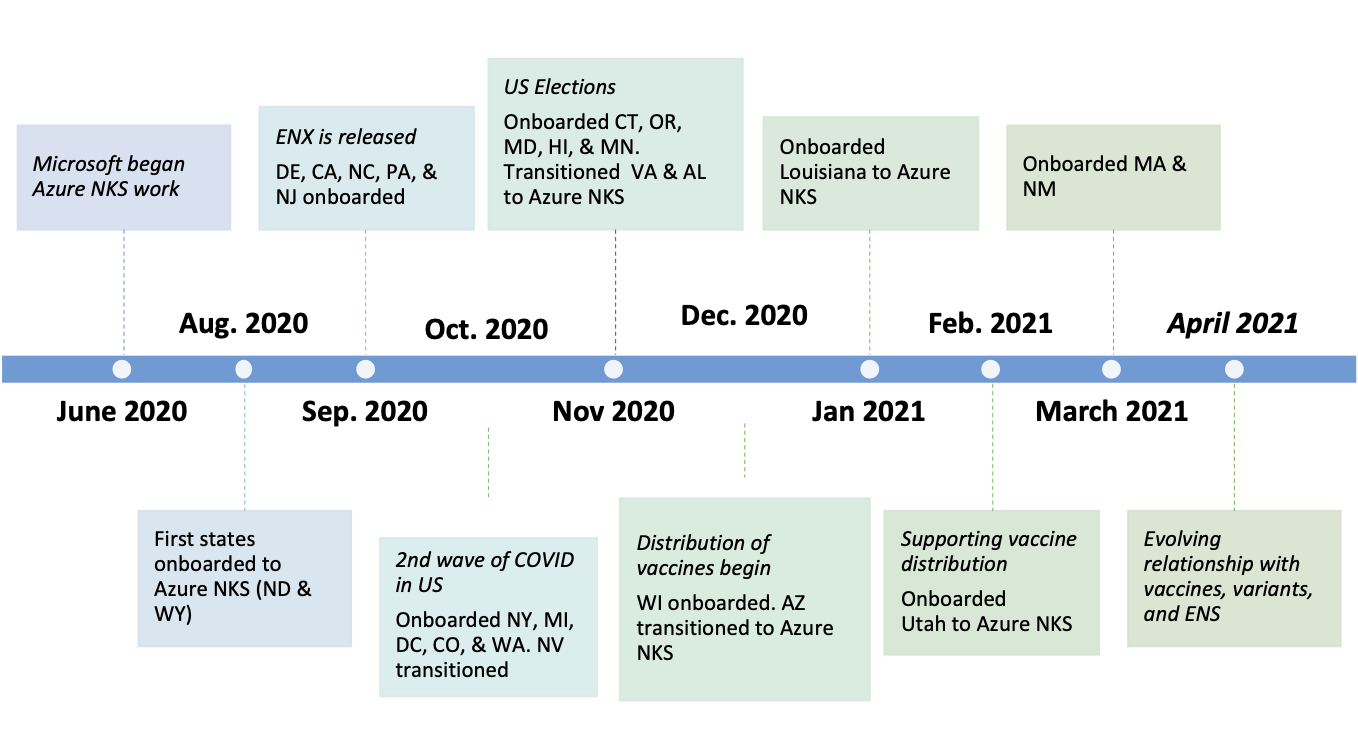

The NKS continues to support tens of millions of iOS and Android devices to help evaluate COVID-19 exposure. We took the reference implementation of the ENS Key Server to production on Azure in five weeks by using fully hosted components. The timeline above chronicles the development and onboarding journey in delivering the NKS.

The result was over 50% of US states participating, and we hope that number will grow. The COVID-19 pandemic was an unprecedented event that demanded partnership across organizations that may usually be competitors. We collaborated with Google, Apple, APHL, and even the CDC to land this critical application. The learning between all organizations involved will be important not just for the rest of the COVID-19 pandemic but also for future global healthcare initiatives.

We hope our experience will be useful for your own deployments on Azure. Feel free to reach out on LinkedIn or Twitter with feedback.

Acknowledgements

Special thanks to the folks at APHL, Google, and Apple.

Also, a big thank you to all contributors to our solution from the Microsoft Team (last name alphabetically listed): Jeff Day, Nick Iodice, Alok Kumar, Todd Lanning, Tim Park, Justin Pflueger, Dhaval Shah, Jarrod Skulavik, James Spring, Archie Timbol, Matt Tucker, and Anthony Turner.

Resources

- APHL Exposure Notifications

- Bringing COVID-19 exposure notification to the public health community

- Google Exposure Notification Server Reference Implementation

- Bedrock: Deployments for Kubernetes

- Fabrikate: Higher level definitions for Kubernetes

- Hub and spoke network topology

- Responsible Data in the Time of Pandemic