Over the years, due to the wide adoption of Amazon S3, the S3 API has become the de facto standard interface for almost all storage providers. Competing S3-compatible service providers implement this standard programming interface to help customers migrate and to enable them to write cloud-agnostic solutions. Customers use the S3 API to connect to many S3-compatible storage solutions such as Google Cloud Storage, OpenStack, RiakCS, Cassandra, AliYun, and others. Currently, Azure Storage does not natively support the S3 API. This post explains in detail how we added S3 API support to Azure Storage, and how you can leverage this solution to enable your applications to store and retrieve content from various cloud storage providers.

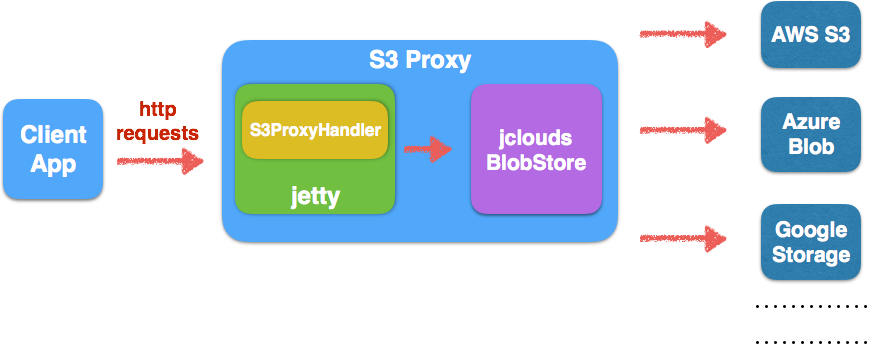

S3Proxy allows applications using the S3 API to access storage backends like Microsoft Azure Storage.

The Problem

To connect to Azure Storage, customers are required to update their existing code to use the Azure Storage SDK, which can take a while for enterprises. Moreover, S3 compatibility is one of the major features customers look for when they evaluate storage solutions side by side. This affects anyone who currently uses the S3 API and is considering Azure Storage as an alternative or hybrid solution to their existing storage backend. Companies ranging from enterprises to startups use the S3 API because it abstracts the storage backend from their apps.

S3Proxy represents a path for customers who are blocked during onboarding and would otherwise be unwilling to rewrite basic blob management code against the Azure Storage APIs or SDKs.

Overview of the Solution

We partnered with Bounce Storage, the maintainer of S3Proxy, and with the Apache jclouds project to enable Azure Storage support in S3Proxy. As a result of our engagement, customers can now use S3Proxy to point existing Java code written with the AWS S3 Java SDK at Azure Blob Storage. We also provided different deployment options that let S3Proxy run as a containerized application anywhere, including on Dokku and Cloud Foundry.

Let’s first look at how S3Proxy was implemented. Then, let’s take a look at changes you need to make in your own application to store and retrieve content from Azure Storage with the S3 API.

How S3Proxy works

S3Proxy uses the Apache jclouds BlobStore API to communicate with Azure Blob Storage. Like other storage providers, Azure Storage exposes a Blob Service REST API for performing CRUD operations on containers and blobs. S3Proxy layers the S3 API on top of Azure Blob Storage by relying on the abstraction that the jclouds BlobStore API provides. S3Proxy runs as a Java web application with an embedded Jetty web server. Jetty is ideal for a solution like this because it is open source, can be embedded in the application itself, has an extremely small footprint, and scales well under heavy load.
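To make the layering concrete, here is a minimal, self-contained sketch of the idea; it is not S3Proxy's actual code. A hypothetical `SimpleBlobStore` interface stands in for a provider-neutral API like the jclouds BlobStore, an in-memory map stands in for Azure Blob Storage, and a hypothetical `S3Facade` translates S3-style bucket/key operations into container/blob calls. In S3Proxy itself, the backend is a real jclouds `BlobStore` (for Azure, the `azureblob` provider) and the S3 side is served over HTTP by the embedded Jetty server.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class BlobStoreLayeringSketch {

    /** Hypothetical stand-in for a provider-neutral blob API such as jclouds BlobStore. */
    interface SimpleBlobStore {
        boolean createContainer(String container);
        void putBlob(String container, String name, byte[] data);
        byte[] getBlob(String container, String name); // null if the blob does not exist
        List<String> listBlobs(String container);
        void removeBlob(String container, String name);
    }

    /** In-memory backend standing in for Azure Blob Storage (not thread-safe). */
    static class InMemoryBlobStore implements SimpleBlobStore {
        private final Map<String, TreeMap<String, byte[]>> containers = new HashMap<>();

        private TreeMap<String, byte[]> blobsOf(String container) {
            TreeMap<String, byte[]> blobs = containers.get(container);
            if (blobs == null) {
                throw new IllegalArgumentException("no such container: " + container);
            }
            return blobs;
        }

        public boolean createContainer(String container) {
            return containers.putIfAbsent(container, new TreeMap<>()) == null;
        }

        public void putBlob(String container, String name, byte[] data) {
            blobsOf(container).put(name, data.clone());
        }

        public byte[] getBlob(String container, String name) {
            byte[] data = blobsOf(container).get(name);
            return data == null ? null : data.clone();
        }

        public List<String> listBlobs(String container) {
            return new ArrayList<>(blobsOf(container).keySet()); // sorted by blob name
        }

        public void removeBlob(String container, String name) {
            blobsOf(container).remove(name);
        }
    }

    /** Hypothetical S3-style facade: a bucket maps to a container, an object key to a blob name. */
    static class S3Facade {
        private final SimpleBlobStore backend;

        S3Facade(SimpleBlobStore backend) {
            this.backend = backend;
        }

        void createBucket(String bucket) {
            if (!backend.createContainer(bucket)) {
                throw new IllegalStateException("bucket already exists: " + bucket);
            }
        }

        void putObject(String bucket, String key, String content) {
            backend.putBlob(bucket, key, content.getBytes(StandardCharsets.UTF_8));
        }

        String getObject(String bucket, String key) {
            byte[] data = backend.getBlob(bucket, key);
            if (data == null) {
                throw new IllegalArgumentException("no such key: " + key);
            }
            return new String(data, StandardCharsets.UTF_8);
        }

        List<String> listObjects(String bucket) {
            return backend.listBlobs(bucket);
        }

        void deleteObject(String bucket, String key) {
            backend.removeBlob(bucket, key);
        }
    }

    public static void main(String[] args) {
        S3Facade s3 = new S3Facade(new InMemoryBlobStore());
        s3.createBucket("demostoragecontainer");
        s3.putObject("demostoragecontainer", "hello.txt", "Hello, Azure!");
        System.out.println(s3.getObject("demostoragecontainer", "hello.txt")); // Hello, Azure!
        System.out.println(s3.listObjects("demostoragecontainer"));            // [hello.txt]
    }
}
```

Because the facade only depends on the interface, swapping the in-memory backend for another implementation changes where data lives without touching the S3-facing code, which is the same property S3Proxy gets from jclouds.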

Running S3Proxy Anywhere

Users can use S3ProxyDocker to test, deploy, and run S3Proxy instances as Docker containers.

Prerequisites

- Understand the fundamentals of Docker and set up Docker locally

- Understand the fundamentals of S3Proxy, including its configuration and setup (see the S3Proxy project)

Getting Started

- The steps below assume you have Docker Toolbox installed on your local machine. If you have not installed it yet, follow the Docker Toolbox installation instructions first.

- Update s3proxy.conf with your own storage provider backend. The default s3proxy.conf is for Azure Storage.

- If you need `s3proxy.virtual-host`, update s3proxy.conf with your own Docker IP.

To find the docker ip:

$ docker-machine ip [docker machine name]

Sample output:

192.168.99.100

- Build docker image:

$ make build

- Run S3Proxy container:

$ docker run -t -i -p 8080:8080 s3proxy

If you cannot get to the internet from the container, use the following:

$ docker run --dns 8.8.8.8 -t -i -p 8080:8080 s3proxy
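Since s3proxy.conf is a Java properties file, one way to sanity-check it before building the image is to load it with `java.util.Properties`. The sketch below assumes the property names used in S3Proxy's sample configuration (`s3proxy.endpoint`, `jclouds.provider`, `jclouds.identity`, `jclouds.credential`, and the optional `s3proxy.virtual-host`); verify them against the S3Proxy version you deploy. The `ConfCheck` class, its list of required keys, and the placeholder account name are hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

public class ConfCheck {
    // Keys this sketch treats as required (an assumption, not an S3Proxy rule).
    static final List<String> REQUIRED = List.of(
            "s3proxy.endpoint", "jclouds.provider", "jclouds.identity", "jclouds.credential");

    /** Returns the required keys that are missing or blank in the given configuration. */
    static List<String> missingKeys(Reader conf) throws IOException {
        Properties props = new Properties();
        props.load(conf);
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED) {
            String value = props.getProperty(key);
            if (value == null || value.isBlank()) {
                missing.add(key);
            }
        }
        return missing;
    }

    public static void main(String[] args) throws IOException {
        if (args.length > 0) {
            // Check a real file, e.g. `java ConfCheck s3proxy.conf`
            try (Reader reader = Files.newBufferedReader(Path.of(args[0]))) {
                System.out.println("missing: " + missingKeys(reader));
            }
            return;
        }
        // Illustrative Azure configuration with the storage account key left blank.
        String conf = String.join("\n",
                "s3proxy.endpoint=http://0.0.0.0:8080",
                "jclouds.provider=azureblob",
                "jclouds.identity=mystorageaccount",
                "jclouds.credential=",
                "s3proxy.virtual-host=192.168.99.100");
        System.out.println("missing: " + missingKeys(new StringReader(conf))); // missing: [jclouds.credential]
    }
}
```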

Verifying Output

Sample output should be something like this:

I 12-08 01:35:30.616 main org.eclipse.jetty.util.log:186 |::] Logging initialized @1046ms

I 12-08 01:35:30.642 main o.eclipse.jetty.server.Server:327 |::] jetty-9.2.z-SNAPSHOT

I 12-08 01:35:30.665 main o.e.j.server.ServerConnector:266 |::] Started ServerConnector@7331196b{HTTP/1.1}{0.0.0.0:8080}

I 12-08 01:35:30.666 main o.eclipse.jetty.server.Server:379 |::] Started @1097ms

docker ps output should be similar to this:

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

789186d1debf s3proxy "/bin/sh -c './target" 5 seconds ago Up 4 seconds 0.0.0.0:8080->8080/tcp tender_feynman

Because container port 8080 is mapped to port 8080 on the Docker machine, you can navigate to [docker ip]:8080. For example: http://192.168.99.100:8080/

Updating Hosts File

If you are running this locally using a local IP, you will need to update your /etc/hosts file to add entries for the subdomains, which represent the container or bucket names in your storage accounts.

For example, if the root of the site is running at http://192.168.99.100:8080/, then make sure you add an entry in the /etc/hosts file for each subdomain.

If the Azure Storage container (or S3 bucket) name is demostoragecontainer, then add a subdomain as follows in the hosts file.

192.168.99.100 demostoragecontainer.192.168.99.100

To verify, navigate to [STORAGE CONTAINER NAME].[DOCKER MACHINE IP]:8080. For example: http://demostoragecontainer.192.168.99.100:8080/
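With virtual-host-style addressing, the container (bucket) name becomes the leftmost label of the host name, and because /etc/hosts does not support wildcards, each container needs its own entry. The hypothetical helpers below build the hosts-file lines and the matching URL from a Docker machine IP, a port, and container names, reproducing the `demostoragecontainer` example above.

```java
import java.util.List;
import java.util.stream.Collectors;

public class VirtualHostEntries {
    /** Hosts-file line mapping "<container>.<ip>" back to the Docker machine IP. */
    static String hostsEntry(String dockerIp, String container) {
        return dockerIp + " " + container + "." + dockerIp;
    }

    /** Virtual-host-style URL for a container served by S3Proxy on the given port. */
    static String virtualHostUrl(String dockerIp, int port, String container) {
        return "http://" + container + "." + dockerIp + ":" + port + "/";
    }

    /** One hosts-file line per container, ready to append to /etc/hosts. */
    static String hostsBlock(String dockerIp, List<String> containers) {
        return containers.stream()
                .map(c -> hostsEntry(dockerIp, c))
                .collect(Collectors.joining("\n"));
    }

    public static void main(String[] args) {
        // Prints: 192.168.99.100 demostoragecontainer.192.168.99.100
        System.out.println(hostsEntry("192.168.99.100", "demostoragecontainer"));
        // Prints: http://demostoragecontainer.192.168.99.100:8080/
        System.out.println(virtualHostUrl("192.168.99.100", 8080, "demostoragecontainer"));
    }
}
```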

Enabling Your Application

To run a sample application against your S3Proxy instance, refer to the AWS Java sample app repo to test your S3Proxy deployment. It is a simple Java application illustrating usage of the AWS S3 SDK for Java. Follow the instructions in the README to run it. This sample application connects to an S3 API compatible storage backend.

- It creates a container (on Microsoft Azure) or a bucket (on AWS S3).

- It lists all containers or all buckets in your storage account.

- It creates an example file to upload to a container/bucket.

- It uploads a large file using multipart upload with `UploadPartRequest`.

- It uploads a large file using multipart upload with `TransferManager`.

- It lists all objects in a container/bucket.

- It deletes an object in a container/bucket.

- It deletes a container/bucket.
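The multipart steps above split a large file into parts that are uploaded individually and then assembled by the service. The sketch below shows only the part arithmetic that a loop around `UploadPartRequest` typically performs: 1-based part numbers, byte offsets, and lengths. It relies on two S3 limits (every part except the last must be at least 5 MiB, and an upload may have at most 10,000 parts); the `Part` record and `plan` method are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class MultipartPlan {
    static final long MIN_PART_SIZE = 5L * 1024 * 1024; // S3 minimum for all parts but the last
    static final int MAX_PARTS = 10_000;                // S3 limit on parts per upload

    /** One part: 1-based part number, byte offset into the file, and length in bytes. */
    record Part(int number, long offset, long length) {}

    /** Splits a file of fileSize bytes into parts of partSize bytes (last part may be shorter). */
    static List<Part> plan(long fileSize, long partSize) {
        if (partSize < MIN_PART_SIZE) {
            throw new IllegalArgumentException("partSize must be at least 5 MiB");
        }
        List<Part> parts = new ArrayList<>();
        long offset = 0;
        int number = 1;
        while (offset < fileSize) {
            long length = Math.min(partSize, fileSize - offset);
            parts.add(new Part(number++, offset, length));
            offset += length;
        }
        if (parts.size() > MAX_PARTS) {
            throw new IllegalArgumentException("too many parts; increase partSize");
        }
        return parts;
    }

    public static void main(String[] args) {
        long twelveMiB = 12L * 1024 * 1024;
        // Prints three parts: two of 5 MiB and a final one of 2 MiB.
        plan(twelveMiB, MIN_PART_SIZE).forEach(System.out::println);
    }
}
```

Each `Part` corresponds to one upload-part call (part number, file offset, part size); `TransferManager` performs this splitting for you, which is why the sample app shows both approaches.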

In summary, first make sure your S3Proxy instance is running correctly. Then run the following in your terminal to test the app:

mvn clean compile exec:java -Dkey=<STORAGE ACCOUNT KEY> -Dsecret=<STORAGE ACCOUNT SECRET> -Dbucketname=<CONTAINER NAME TO CREATE> -Ds3endpoint=<S3PROXY URL> -Dfilepath=<FULLPATH LOCATION OF DEMO.ZIP FILE>/demo.zip

Here is a run of the sample Java application using the S3 SDK against Azure Blob Storage via S3Proxy. On the left is a verbose log of a running S3Proxy instance. On the right, the sample application uses the S3 Java SDK to perform basic CRUD operations.

Updating Your App

Here are a few updates you may need to add to your own app to make it work with S3Proxy.

- Since Azure Storage does not return the MD5 sum as the ETag, set the `disablePutObjectMD5Validation` flag in your app to disable the MD5 check. Otherwise, the upload fails with the error `Input is expected to be encoded in multiple of 2 bytes but found: 17`.

System.setProperty("com.amazonaws.services.s3.disablePutObjectMD5Validation", "1");

- Because S3Proxy has limited support for AWS Signature Version 4, add the following to your app so that the SDK signs requests with Signature Version 2 instead. Otherwise, you will get an `Unknown header x-amz-content-sha256` exception.

ClientConfiguration clientConfig = new ClientConfiguration().withSignerOverride("S3SignerType");

- To tell the AWS S3 Java SDK to use S3Proxy instead of an AWS region, update your `AmazonS3Client` code to pass in the S3Proxy URL as the endpoint. For example, if you passed `-Ds3endpoint=http://localhost:8000` to run this sample app, the endpoint used for `AmazonS3Client` is `http://localhost:8000`.

AWSCredentials credentials = new BasicAWSCredentials(s3proxydemoCredentialKey, s3proxydemoCredentialSecret);

clientConfig.setProtocol(Protocol.HTTP);

AmazonS3 s3 = new AmazonS3Client(credentials, clientConfig);

s3.setEndpoint(s3proxydemoEndpoint);
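To see why the MD5 check fails without the `disablePutObjectMD5Validation` flag: for a simple (non-multipart) PUT, S3 typically returns the hex-encoded MD5 of the object as its ETag, and the SDK hex-decodes that ETag to compare it with the MD5 it computed locally. Azure Storage ETags are opaque version identifiers rather than content hashes, so the decode fails; an odd-length value such as the 17 characters in the error message cannot be hex-decoded at all. The sketch below, with hypothetical helper names and an illustrative Azure-style ETag, computes an S3-style ETag for some content and checks whether a given ETag even looks like an MD5 digest.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

public class EtagCheck {
    /** Hex-encoded MD5 of the content: what S3 typically returns as the ETag for a simple PUT. */
    static String md5Hex(byte[] content) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(content);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 must be available on every Java platform", e);
        }
    }

    /** True if the ETag (surrounding quotes removed) is 32 hex digits, i.e. could be an MD5 digest. */
    static boolean looksLikeMd5(String etag) {
        String value = etag.replace("\"", "");
        return value.matches("[0-9a-fA-F]{32}");
    }

    public static void main(String[] args) {
        String s3Style = md5Hex("abc".getBytes(StandardCharsets.UTF_8));
        System.out.println(s3Style);                            // 900150983cd24fb0d6963f7d28e17f72
        System.out.println(looksLikeMd5(s3Style));              // true
        // Illustrative Azure-style ETag: 17 characters, a version marker rather than a hash
        System.out.println(looksLikeMd5("0x8D4BCC2E4835CD0")); // false
    }
}
```

Setting the system property tells the SDK to skip this comparison, so uploads succeed at the cost of the client-side integrity check on the returned ETag.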

Other Deployment Options

You can also deploy S3Proxy as a Docker app to various platforms.

Deploying to Platforms like Dokku

Dokku is a Docker-powered open source Platform as a Service that runs on any hardware or cloud provider. Dokku can use the S3Proxy Dockerfile to instantiate containers that deploy and scale S3Proxy with a few commands. Follow the Deploy-to-Dokku guide to host your own S3Proxy in Dokku.

Deploying to Platforms like Cloud Foundry

Cloud Foundry is an open source PaaS that enables developers to deploy and scale applications in minutes, regardless of the cloud provider. Cloud Foundry with Diego can pull the S3Proxy Docker image from a Docker Registry then run and scale it as containers. Follow the Deploy-to-Cloud-Foundry guide to host your own S3Proxy in Cloud Foundry.
