MongoDB is a popular document database that offers high performance, scalability, and flexibility. Many organizations use MongoDB to store and process large volumes of data for various applications. However, managing and maintaining MongoDB clusters can be challenging and costly, especially as the data grows and the demand increases.

Azure Cosmos DB for MongoDB, particularly the vCore-based model, offers several advantages over self-managed MongoDB. It is a fully managed, globally distributed database service with a 99.99% high-availability SLA. The vCore-based model supports high-capacity vertical and horizontal scaling, which makes it well suited to workloads with long-running queries, complex aggregation pipelines, and distributed transactions. It also integrates with the Azure ecosystem, so developers can use Azure security features and other Azure services without having to adopt new tools. By migrating from MongoDB to vCore-based Azure Cosmos DB for MongoDB, you can reduce operational overhead, improve availability and reliability, and take advantage of native Azure integration and features.

There are several ways to migrate MongoDB to Azure Cosmos DB, and the right one depends on your needs and preferences. For smaller datasets (less than 10 GB), you can use MongoDB’s native tools, such as mongoimport and mongorestore. These tools are straightforward and effective for basic migrations.
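
For the small-dataset path, a full-database copy is usually a mongodump export followed by a mongorestore import, because mongorestore loads the BSON files that mongodump writes. Below is a minimal Python sketch that builds and runs both commands. It assumes the MongoDB Database Tools are installed and on your PATH. The URIs, database name, and working directory are placeholders, and the helper names are hypothetical.

```python
from __future__ import annotations

import subprocess


def dump_command(source_uri: str, database: str, out_dir: str) -> list[str]:
    """mongodump: export one database from the source as gzip-compressed BSON files."""
    # The URI should not name a different database than --db, or mongodump rejects it.
    return [
        "mongodump",
        f"--uri={source_uri}",
        f"--db={database}",
        f"--out={out_dir}",
        "--gzip",
    ]


def restore_command(target_uri: str, database: str, dump_dir: str, workers: int = 4) -> list[str]:
    """mongorestore: load the dumped database into the target, several insert workers per collection."""
    return [
        "mongorestore",
        f"--uri={target_uri}",
        f"--nsInclude={database}.*",
        f"--numInsertionWorkersPerCollection={workers}",
        "--gzip",
        f"--dir={dump_dir}",
    ]


def migrate_small_database(source_uri: str, target_uri: str, database: str, work_dir: str) -> None:
    """Run the dump, then the restore; check=True raises CalledProcessError if either fails."""
    subprocess.run(dump_command(source_uri, database, work_dir), check=True)
    subprocess.run(restore_command(target_uri, database, work_dir), check=True)
```

Passing the arguments as a list, rather than a shell string, avoids quoting problems with connection strings that contain `&` or `?`.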

For larger datasets, the Azure Data Studio (ADS) extension offers a user-friendly, self-service tool that supports both online and offline migrations. The ADS extension suits most scenarios, but it has limitations with complex migration requirements and with private endpoints.

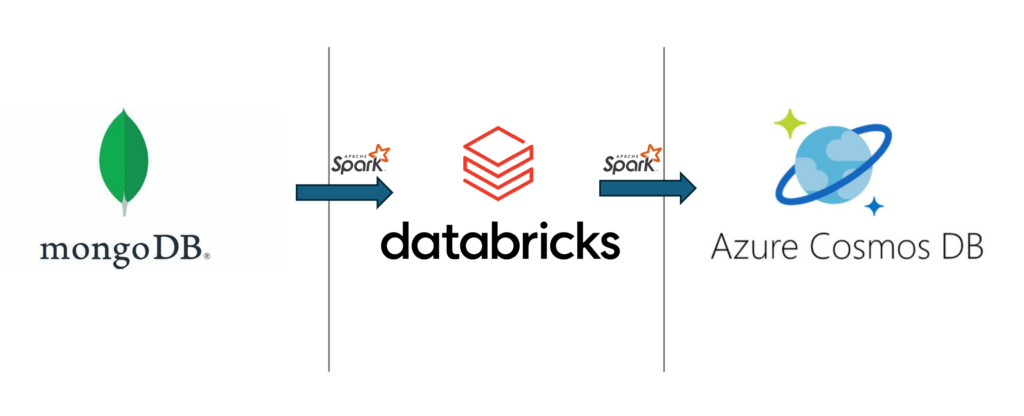

If you need a flexible solution that also works with private endpoints, consider the Spark-based MongoDB Migration tool on Databricks. It also lets you control migration speed and parallelism and customize configuration settings to meet your specific needs.
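
The guidance above amounts to a small decision rule. The following Python sketch encodes one possible reading of it; it is not an official Microsoft decision matrix, and the class, field, and return-value names are hypothetical. It also treats the native tools as offline-only, since mongodump and mongorestore perform a one-off copy with no ongoing change sync.

```python
from dataclasses import dataclass

SMALL_DATASET_GB = 10  # threshold quoted in the guidance above


@dataclass
class MigrationNeeds:
    data_size_gb: float
    online: bool = False             # source stays live and changes must be synced until cutover
    private_endpoints: bool = False  # target is reachable only through a private endpoint
    tuning_control: bool = False     # need to tune speed, parallelism, or other settings


def choose_migration_path(needs: MigrationNeeds) -> str:
    # Native tools do a one-off copy with no change sync, so use them only offline.
    if needs.data_size_gb < SMALL_DATASET_GB and not needs.online:
        return "native-tools"
    # Private endpoints and fine-grained control are where the ADS extension falls short.
    if needs.private_endpoints or needs.tuning_control:
        return "spark-migration-tool"
    return "ads-extension"
```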

A new tool for complex and secure migrations

The Spark-based MongoDB Migration tool is a JAR application that uses the MongoDB Spark Connector and the Azure Cosmos DB Spark Connector to read data from MongoDB and write it to vCore-based Azure Cosmos DB for MongoDB. You deploy it to a Databricks cluster inside your own virtual network and run it as a Databricks job. The tool's GitHub repository provides the binary files, sample configuration files, and detailed usage instructions.
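
The tool ships as a prebuilt JAR, so you don't need to write Spark code to use it. To make the read-then-write flow concrete, though, here is a minimal PySpark sketch. It assumes MongoDB Spark Connector 10.x (the `mongodb` data source) is installed on the cluster. Because vCore-based Azure Cosmos DB for MongoDB speaks the MongoDB wire protocol, the sketch uses the MongoDB connector on both sides; the actual tool may use a different writer. The function names and defaults are illustrative, not the tool's.

```python
from __future__ import annotations


def read_options(source_uri: str, database: str, collection: str) -> dict[str, str]:
    """Reader options for the MongoDB Spark Connector 10.x ("mongodb" data source)."""
    return {"connection.uri": source_uri, "database": database, "collection": collection}


def write_options(target_uri: str, database: str, collection: str, max_batch_size: int = 512) -> dict[str, str]:
    """Writer options: maxBatchSize caps documents per bulk write; ordered=false allows unordered bulk writes."""
    return {
        "connection.uri": target_uri,
        "database": database,
        "collection": collection,
        "maxBatchSize": str(max_batch_size),
        "ordered": "false",
    }


def copy_collection(spark, source_uri: str, target_uri: str, database: str, collection: str,
                    num_partitions: int = 8, max_batch_size: int = 512) -> None:
    """Copy one collection; `spark` is an active SparkSession, such as the one Databricks provides."""
    df = spark.read.format("mongodb").options(**read_options(source_uri, database, collection)).load()
    (
        df.repartition(num_partitions)  # number of parallel write tasks
        .write.format("mongodb")
        .mode("append")
        .options(**write_options(target_uri, database, collection, max_batch_size))
        .save()
    )
```

By default the connector infers the DataFrame schema from a sample of documents, so collections with very heterogeneous documents may need an explicit schema.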

The Spark-based MongoDB Migration tool offers the following features and benefits:

- It supports both online and offline migrations, with the option to resume the migration from the last checkpoint.

- It can migrate data from MongoDB Atlas, on-premises MongoDB, or Amazon DocumentDB.

- It can migrate to both the vCore-based and the request unit (RU)-based offerings of Azure Cosmos DB for MongoDB.

- It can migrate data to vCore-based Azure Cosmos DB for MongoDB with private endpoints and virtual networks.

- It can handle index conflicts during the migration.

- It can perform data transformations and generate unique IDs.

- It handles and logs errors, skipping invalid documents and recording errors and warnings.

- It lets you control the speed and parallelism of the migration by setting the batch size, the number of partitions, and the number of threads.
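
Checkpoint-based resume, invalid-document skipping, and batch sizing interact in ways that are easier to see in a toy model. The Python sketch below is not the tool's code. It assumes the source yields documents sorted by `_id` (as a pymongo `find().sort("_id", 1)` query would) and that `_id` values are JSON-serializable and comparable. `validate` and `write_batch` are hypothetical callables you supply.

```python
from __future__ import annotations

import json
import logging
from pathlib import Path
from typing import Any, Callable, Iterable, Iterator

log = logging.getLogger("migration-sketch")


def batched(docs: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Group documents into lists of at most `size` (the batch-size knob)."""
    batch: list[dict] = []
    for doc in docs:
        batch.append(doc)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def load_checkpoint(path: Path) -> Any:
    """Return the last processed _id, or None on a fresh start."""
    return json.loads(path.read_text())["last_id"] if path.exists() else None


def save_checkpoint(path: Path, last_id: Any) -> None:
    path.write_text(json.dumps({"last_id": last_id}))


def migrate(
    source_docs: Iterable[dict],
    write_batch: Callable[[list[dict]], None],
    checkpoint: Path,
    validate: Callable[[dict], bool],
    batch_size: int = 1000,
) -> dict[str, int]:
    last_id = load_checkpoint(checkpoint)
    stats = {"written": 0, "skipped": 0}
    # Resume: skip everything at or before the checkpointed _id (source is sorted by _id).
    remaining = (d for d in source_docs if last_id is None or d["_id"] > last_id)
    for batch in batched(remaining, batch_size):
        good = []
        for doc in batch:
            if validate(doc):
                good.append(doc)
            else:
                stats["skipped"] += 1
                log.warning("skipping invalid document _id=%r", doc["_id"])
        if good:
            write_batch(good)
            stats["written"] += len(good)
        # Checkpoint only after the batch is written, so a crash re-sends at most one batch.
        save_checkpoint(checkpoint, batch[-1]["_id"])
    return stats
```

Because a crash between `write_batch` and `save_checkpoint` re-sends the last batch, writes should be idempotent (for example, upserts keyed on `_id`). A larger batch size means fewer checkpoint writes but more rework after a failure.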

Follow these steps to use the Spark-based MongoDB Migration tool to migrate MongoDB to vCore-based Azure Cosmos DB for MongoDB:

- Sign up for Azure Cosmos DB for MongoDB Spark Migration to gain access to the Spark Migration Tool GitHub repository.

- Download the JAR file and the sample configuration files from the Spark Migration Tool GitHub repository.

- Edit the configuration files according to your source and target database settings and your migration preferences.

- Upload the binary files and the configuration files to your Databricks cluster.

- Create a Databricks job and configure it to run the Spark-based MongoDB Migration tool, passing the configuration files as arguments.

- Run the Databricks job and monitor the migration progress and status.

- Verify the migration results and troubleshoot any issues.
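
You can also script job creation, the run, monitoring, and a first-pass result check instead of using the UI. The following minimal Python sketch assumes the Databricks Jobs API 2.1 and a personal access token. The JAR path, main class, and configuration paths are hypothetical placeholders, because the real values come from the tool's repository. The per-collection counts for the check could come from pymongo's `count_documents({})` on each side.

```python
from __future__ import annotations

import json
import time
import urllib.request
from typing import Callable

# Hypothetical placeholders: take the real values from the tool's repository instructions.
JAR_PATH = "dbfs:/FileStore/mongo-migration/migration-tool.jar"
MAIN_CLASS = "com.example.migration.Main"
CONFIG_PATHS = ["dbfs:/FileStore/mongo-migration/migration-config.json"]

TERMINAL_STATES = {"TERMINATED", "SKIPPED", "INTERNAL_ERROR"}


def build_job_payload(jar_path: str, main_class: str, config_paths: list[str], cluster_id: str) -> dict:
    """Body for POST /api/2.1/jobs/create: one JAR task on an existing cluster."""
    return {
        "name": "mongodb-to-cosmos-vcore-migration",
        "tasks": [
            {
                "task_key": "migrate",
                "existing_cluster_id": cluster_id,
                "spark_jar_task": {"main_class_name": main_class, "parameters": config_paths},
                "libraries": [{"jar": jar_path}],
            }
        ],
    }


def api(host: str, token: str, path: str, body: dict | None = None) -> dict:
    """Call the Databricks REST API: POST JSON when `body` is given, otherwise GET."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(
        host.rstrip("/") + path,
        data=data,
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def wait_for_run(get_run: Callable[[int], dict], run_id: int, poll_seconds: float = 30.0,
                 sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll a run until it reaches a terminal state; return result_state (e.g. SUCCESS) if present."""
    while True:
        state = get_run(run_id)["state"]
        if state["life_cycle_state"] in TERMINAL_STATES:
            return state.get("result_state", state["life_cycle_state"])
        sleep(poll_seconds)


def count_mismatches(source: dict[str, int], target: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Collections whose document counts differ, as {name: (source_count, target_count)}."""
    names = sorted(set(source) | set(target))
    return {n: (source.get(n, 0), target.get(n, 0)) for n in names if source.get(n, 0) != target.get(n, 0)}


# Example flow (not executed here; HOST, TOKEN, and CLUSTER_ID are yours to supply):
# job = api(HOST, TOKEN, "/api/2.1/jobs/create", build_job_payload(JAR_PATH, MAIN_CLASS, CONFIG_PATHS, CLUSTER_ID))
# run = api(HOST, TOKEN, "/api/2.1/jobs/run-now", {"job_id": job["job_id"]})
# result = wait_for_run(lambda rid: api(HOST, TOKEN, f"/api/2.1/jobs/runs/get?run_id={rid}"), run["run_id"])
```

Injecting `get_run` and `sleep` keeps the polling loop testable without a workspace. Matching counts don't prove the documents are identical, so spot-check document contents as well.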

The Spark-based MongoDB Migration tool is a powerful way to migrate MongoDB to vCore-based Azure Cosmos DB for MongoDB. It handles complex and secure migration scenarios and gives you more control and flexibility over the migration process. To get started, sign up for Azure Cosmos DB for MongoDB Spark Migration to gain access to the Spark Migration Tool GitHub repository.

About Azure Cosmos DB

Azure Cosmos DB is a fully managed and serverless distributed database for modern app development, with SLA-backed speed and availability, automatic and instant scalability, and support for open-source PostgreSQL, MongoDB, and Apache Cassandra. You can try Azure Cosmos DB for free. To stay in the loop on Azure Cosmos DB updates, follow us on X, YouTube, and LinkedIn.
