We are excited to announce the general availability (GA) of Azure Cosmos DB dynamic scaling, one of several recently released features (alongside Binary Encoding and Reserved Capacity) that make your Azure Cosmos DB workloads even more cost efficient. Dynamic scaling is an enhancement to autoscale that optimizes cost for non-uniform workloads. In the earlier version of autoscale, all partitions scaled uniformly based on the most active partition, which caused unnecessary scale-ups when only one or a few partitions were active. With this new feature, partitions and regions scale independently, improving cost efficiency for non-uniform, large-scale workloads with multiple partitions, multiple regions, or both. We’ve seen dynamic scaling help customers save between 15% and 70% on autoscale costs, depending on workload patterns.

Ideal use cases for dynamic scaling

We recommend this feature to all customers interested in scaling the throughput (RU/s) of their workloads automatically and instantly based on usage. However, there are two specific use cases where we see the most benefits:

- Database workloads that have a highly trafficked primary region and a secondary passive region for disaster recovery.

  - With dynamic scaling, you save costs because the secondary region independently and automatically scales down while idle, then scales up as it becomes active and while it handles write replication from the primary region.

- Multi-region database workloads.

  - These workloads often see an uneven distribution of requests across regions due to natural traffic growth and dips throughout the day (for example, a database might be active during business hours across globally distributed time zones).

Real-world scenario

To understand the benefits of this feature, let’s look at a simplified real-world scenario and see the outcome with just autoscale versus dynamic autoscale:

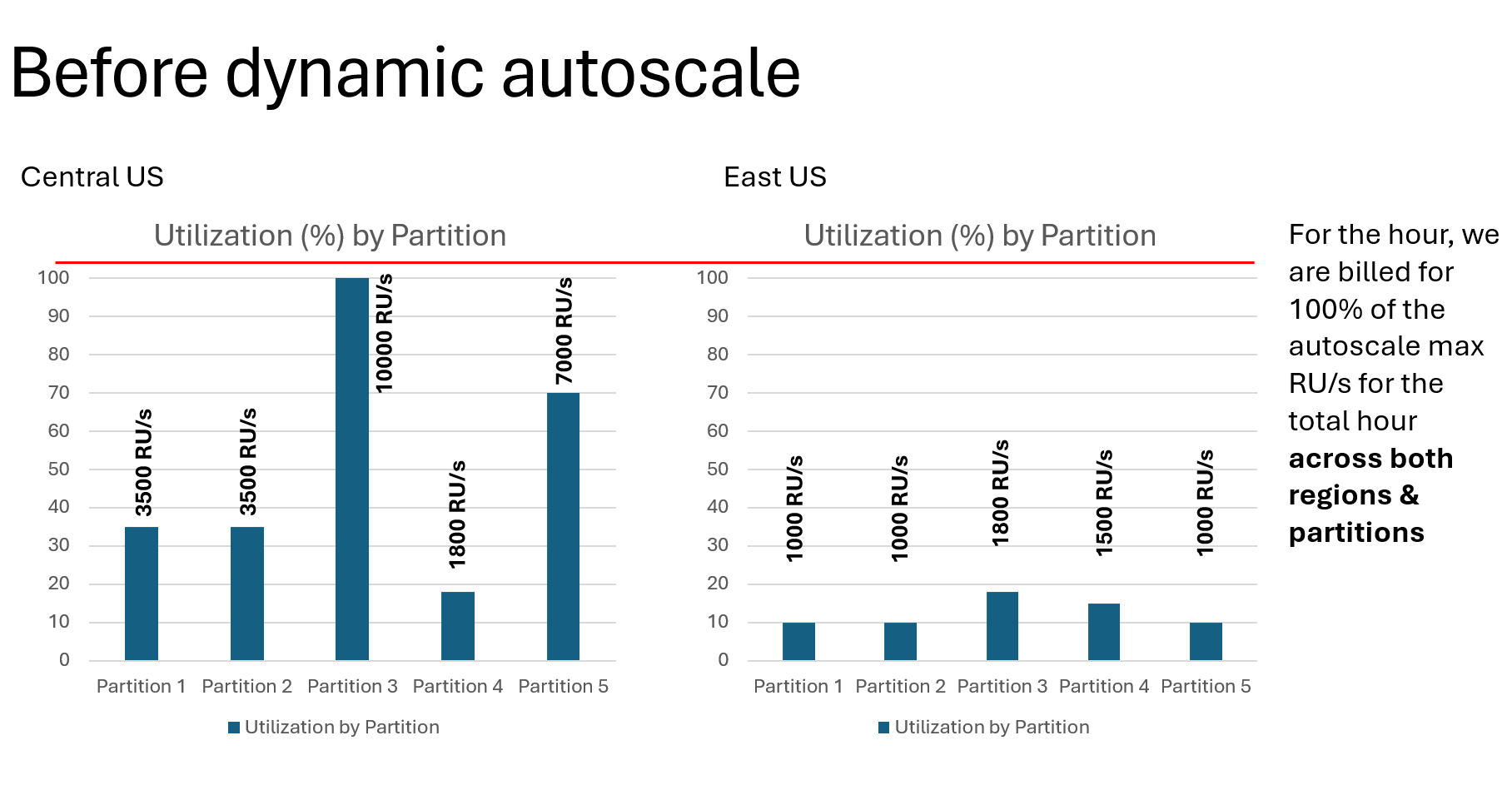

Contoso operates a multi-tenant application with a primary write region in Central US and a secondary read region in East US. Contoso currently manages 1,000 tenants and uses TenantId as the partition key. The primary region handles both writes and reads, while the secondary region is configured for high availability and remains largely inactive. Contoso’s autoscale workload includes a collection with a maximum of 50,000 RU/s and five physical partitions, each capable of scaling up to 10,000 RU/s. The 1,000 tenants are distributed across these five physical partitions. Although most tenants exhibit uniform workloads, occasional spikes from one or two tenants cause the entire collection in both regions to scale to its maximum RU/s. Furthermore, despite its lack of activity, the secondary region incurs the same charges as the primary. The distribution of RU/s and utilization across the five partitions and two regions is shown below.

Primary Region – Central US

| Region | Partition | Throughput | Utilization | Consumed RU/s & Operations |
| --- | --- | --- | --- | --- |
| Write | Partition 1 | 1000 – 10000 RU/s | 35% | 3500 RU/s – All Reads |
| Write | Partition 2 | 1000 – 10000 RU/s | 35% | 3500 RU/s – All Reads |
| Write | Partition 3 | 1000 – 10000 RU/s | 100% | 10000 RU/s (1800 RU/s – Writes, 8200 RU/s – Reads) |
| Write | Partition 4 | 1000 – 10000 RU/s | 18% | 1800 RU/s (1500 RU/s – Writes, 300 RU/s – Reads) |
| Write | Partition 5 | 1000 – 10000 RU/s | 70% | 7000 RU/s – All Reads |

Secondary Region – East US

| Region | Partition | Throughput | Utilization | Consumed RU/s & Operations |
| --- | --- | --- | --- | --- |
| Read | Partition 1 | 1000 – 10000 RU/s | 10% | 1000 RU/s |
| Read | Partition 2 | 1000 – 10000 RU/s | 10% | 1000 RU/s |
| Read | Partition 3 | 1000 – 10000 RU/s | 18% | 1800 RU/s – Replication RU/s |
| Read | Partition 4 | 1000 – 10000 RU/s | 15% | 1500 RU/s – Replication RU/s |
| Read | Partition 5 | 1000 – 10000 RU/s | 10% | 1000 RU/s |

Let’s look at the hourly utilization of Contoso in autoscale mode:

At one point during the hour, a single tenant drove one partition in the Central US region to 100% utilization. As a result, autoscale scaled the provisioned throughput to 10,000 RU/s on all five partitions in both regions, because every partition follows the most active partition during that hour. The hourly RU/s consumption is therefore calculated as follows:

Most active partition’s hourly RU/s (request units) × number of partitions × number of regions

That is, 10,000 × 5 × 2 = 100,000 RU/s
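As a minimal sketch of this formula (not an official billing calculator), the following Python snippet reproduces the 100,000 RU/s figure from the per-partition peaks listed in the two tables. The function and variable names are illustrative assumptions and are not part of any Azure SDK.

```python
# Peak RU/s consumed per physical partition during the hour, copied from the
# two tables above (index 0 is Partition 1).
central_us = [3500, 3500, 10000, 1800, 7000]  # primary (write) region
east_us = [1000, 1000, 1800, 1500, 1000]      # secondary (read) region


def autoscale_billed_rus(regions):
    """Earlier autoscale behavior: every partition in every region is billed
    at the peak RU/s of the single most active partition."""
    hottest = max(max(partitions) for partitions in regions)
    partition_count = len(regions[0])
    return hottest * partition_count * len(regions)


print(autoscale_billed_rus([central_us, east_us]))  # 100000
```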

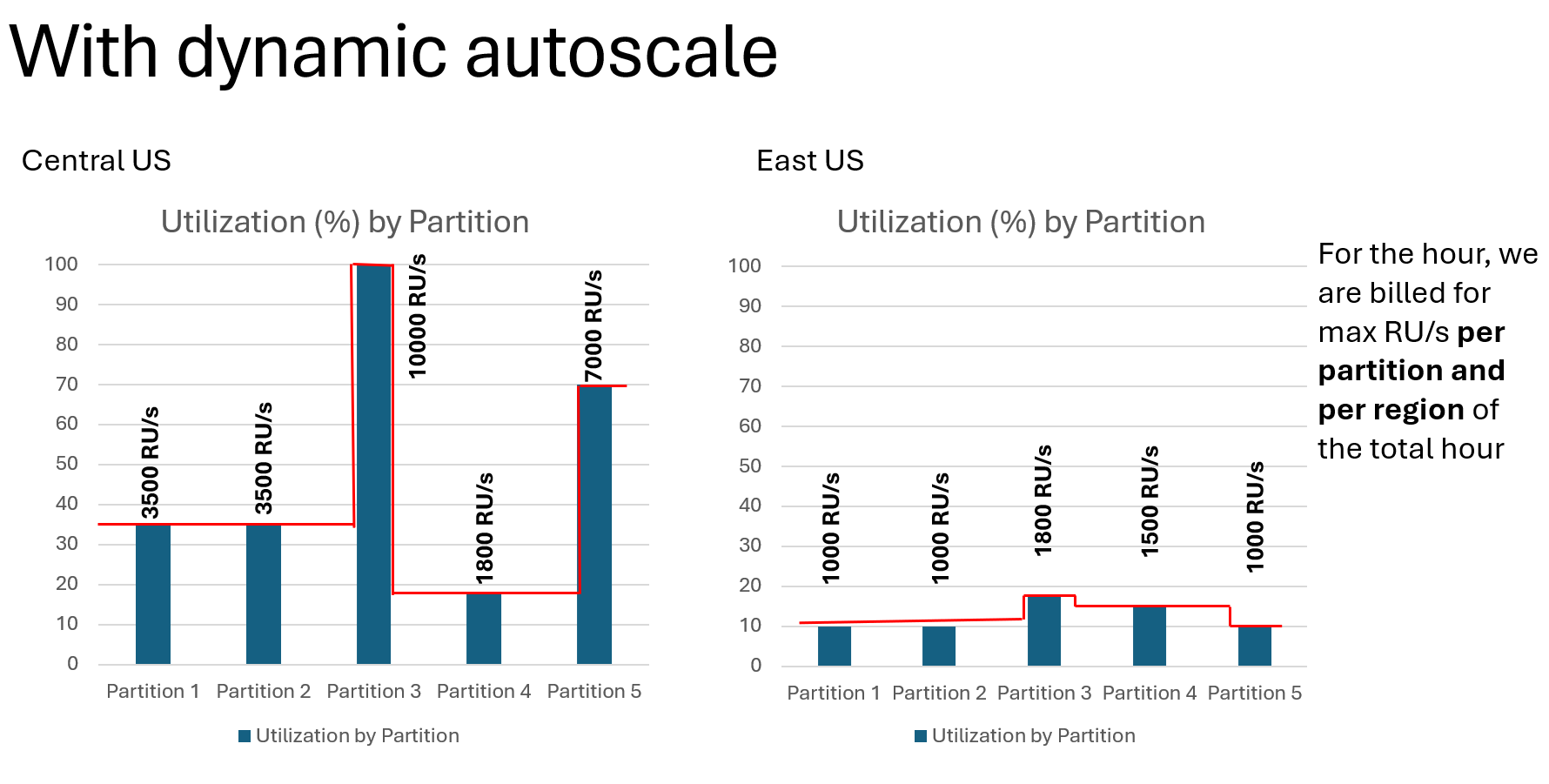

Now, let’s see how dynamic scaling improves the cost efficiency of Contoso’s workload. The hourly utilization that we see in dynamic autoscale mode is as below:

In dynamic autoscale, we sum up the maximum RU/s utilized per hour for each region and partition, as each partition and region scale independently. Therefore, we calculate the hourly RU/s consumption as follows:

Sum of the maximum RU/s utilized per hour, per region, per partition

That is, (3,500 + 3,500 + 10,000 + 1,800 + 7,000) + (1,000 + 1,000 + 1,800 + 1,500 + 1,000) = 32,100 RU/s

This example demonstrates how dynamic autoscale helped Contoso reduce its hourly RU/s consumption from 100,000 to 32,100 for the same workload, a saving of about 68%.
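The dynamic scaling calculation and the resulting saving can be sketched in Python. This is an illustrative sketch only: the function names are hypothetical, and the 1,000 RU/s floor per partition is an assumption taken from the 1000 – 10000 RU/s throughput range shown in the tables.

```python
# Peak RU/s consumed per physical partition during the hour (from the tables).
central_us = [3500, 3500, 10000, 1800, 7000]  # primary (write) region
east_us = [1000, 1000, 1800, 1500, 1000]      # secondary (read) region


def dynamic_billed_rus(regions, floor_rus=1000):
    """Dynamic scaling: each partition in each region is billed for its own
    peak RU/s. floor_rus is the assumed per-partition minimum."""
    return sum(max(peak, floor_rus) for partitions in regions for peak in partitions)


def savings_percent(before, after):
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100


autoscale_rus = 100_000  # result of the earlier autoscale calculation
dynamic_rus = dynamic_billed_rus([central_us, east_us])

print(dynamic_rus)                                         # 32100
print(round(savings_percent(autoscale_rus, dynamic_rus)))  # 68
```

With these numbers the only partition billed at the full 10,000 RU/s is Partition 3 in Central US; every other partition is billed for what it actually used.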

Benefits observed by Azure Cosmos DB customers after enabling dynamic scaling:

- Each region and partition scales independently, leading to significant cost optimization for non-uniform autoscale workloads.

- Adding secondary regions has become more cost-efficient because each region now scales independently based on actual usage. This allows you to achieve 99.999% high availability for reads and writes with multi-region writes at a lower cost.

- The impact of hot partitions due to suboptimal partition keys is minimized, as only the hot partition is scaled to the maximum, unlike traditional autoscale where all partitions scale based on the hottest partition in the collection.

- In the public preview, customers have seen cost optimizations ranging from 15% to 70% on their actual autoscale costs, depending on their workload patterns.

What are customers saying about dynamic scaling?

In November 2023, we announced the public preview of dynamic scaling (per region and per partition autoscale). We want to give a major thank you to our customers who enabled this feature during the public preview. Check out what some of them had to say:

“Since enabling dynamic scaling within Azure Cosmos DB in May of this year, we have realized an impressive 65% reduction in our monthly Azure Cosmos DB spend. The Microsoft Product Team’s engagement and support throughout our journey has been excellent and has helped us improve a critical application’s performance and associated cost.” – Joe MacKinnon, Director IT – Business Enablement of SECURE Energy Services

“The introduction of Azure Cosmos DB’s dynamic scaling feature couldn’t have come at a better time. We were already working to optimize our cloud infrastructure for scalability and cost. Dynamic scaling just made it so much easier. The flexibility it offers for managing our bursty workloads is unparalleled. This not only streamlined our operations but also led to substantial cost savings, reinforcing our commitment to Azure Cosmos DB and the Azure Platform.” – Apurv Gupta, founder of Mailmodo

“With dynamic scaling for Azure Cosmos DB, we have been able to achieve 15% cost savings on average with zero intervention. Dynamic scaling provides some much-needed relief by limiting the effects of hot partitions, which coupled with autoscale, enables us to safely scale our workloads based on our demands without having to worry about cost.” – Vineeth Raj, Director Engineering of Udaan

“Moving our Azure Cosmos DB autoscale workloads to ‘autoscale per partition/region’ has transformed our use of Cosmos. We were skeptical at first about the benefits, but the improvements of having our regions and partitions scale independently had a significant impact on our consumption cost for Cosmos. We achieved a remarkable 60% decrease in consumption cost for all our Azure Cosmos DB workloads which has now opened up use cases for other projects which were previously cost prohibitive.” – Clemence Thia, Senior Cloud Engineer Global IT – Infrastructure & DevOps of BDO Netherlands

Getting started

Start by creating a new Azure Cosmos DB autoscale account. Use this guide to learn how to enable autoscale on a new or existing workload.

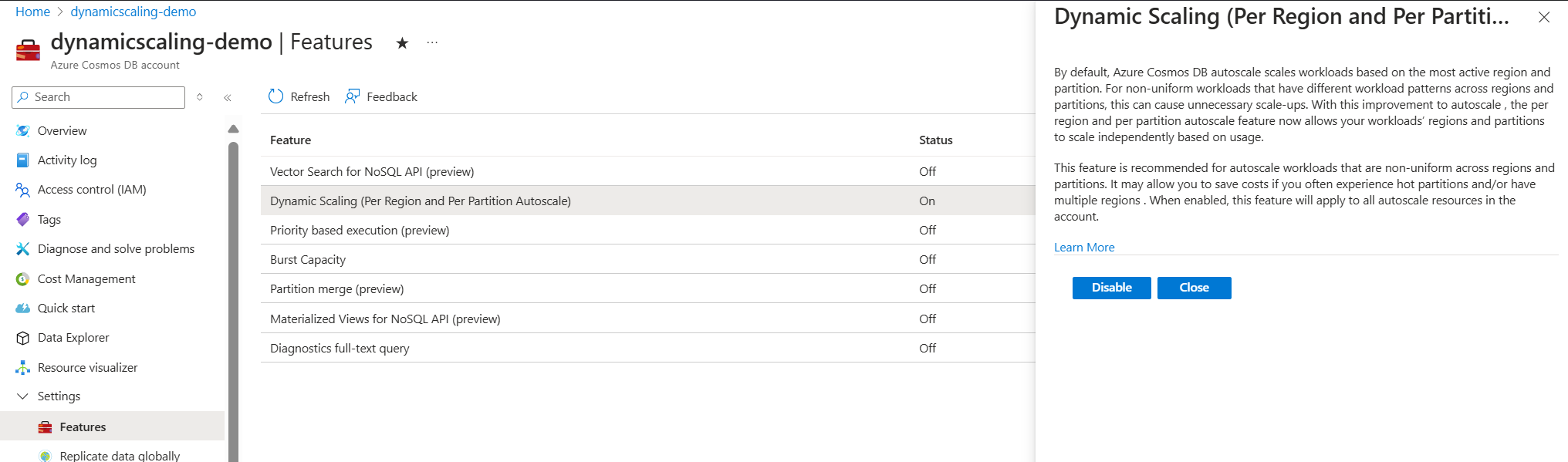

Azure Cosmos DB enables dynamic scaling by default for accounts created after 25-Sep-2024. For accounts created before this date, go to the Features pane in the Azure portal to enable the feature. Note that this enables the feature on all autoscale collections and autoscale shared databases within the account. Enabling this feature causes no downtime or performance impact.

For more information, please visit our documentation:

- Dynamic scaling (per region and per partition autoscale)

- Frequently asked questions about autoscale provisioned throughput in Azure Cosmos DB

Watch a demonstration!

Watch the Azure Cosmos DB TV episode “Cost Optimizations Provided by Azure Cosmos DB Dynamic Scaling” for a demonstration.

Leave a review

Tell us about your Azure Cosmos DB experience! Leave a review on PeerSpot and we’ll gift you $50. Get started here.

About Azure Cosmos DB

Azure Cosmos DB is a fully managed and serverless NoSQL and vector database for modern app development, including AI applications. With its SLA-backed speed and availability as well as instant dynamic scalability, it is ideal for real-time NoSQL and MongoDB applications that require high performance and distributed computing over massive volumes of NoSQL and vector data.

Try Azure Cosmos DB for free here. To stay in the loop on Azure Cosmos DB updates, follow us on X, YouTube, and LinkedIn.
