This is my second post about “Updating data using Entity Framework in N-Tier and N-Layer Applications”. If you want to read the basics, go to my first post.

So! I finished my first post saying that the code I showed does not cover scenarios where we want to manage “Optimistic Concurrency Updates & Exceptions”. Let’s say another user changes some data for the same customer after I ran my Customer query but before I saved my update to the database. In other words, someone changed the row just before I called context.AttachUpdated(customer) and context.SaveChanges(). The original user won’t be aware that he may be overwriting the new data that the second user just saved. Depending on the type of application, this can be dangerous.

The goal is: “How to Update data in N-Tier and N-Layer applications using Entity Framework with detached entities and managing Optimistic Concurrency Updates and Exceptions”.

I think this is a great subject, and the way to do it is not very clear in the official documentation or in most Entity Framework books and articles (at least at this time).

Cool! 🙂 Let’s see how we can handle this new scenario!

First of all, the Entity Framework can enforce optimistic concurrency when generating the UPDATE and DELETE SQL statements. It does this by including the original values in the WHERE clause for any entity property whose ConcurrencyMode attribute is set to Fixed. But by default, if you just create an EF model, no entity fields are set as concurrency fields.

So the first thing is to change that attribute on some entity field. Which field do we use? Well, you can use whichever you like (Name, IDs, etc.), and you can even set ConcurrencyMode to Fixed on several fields.

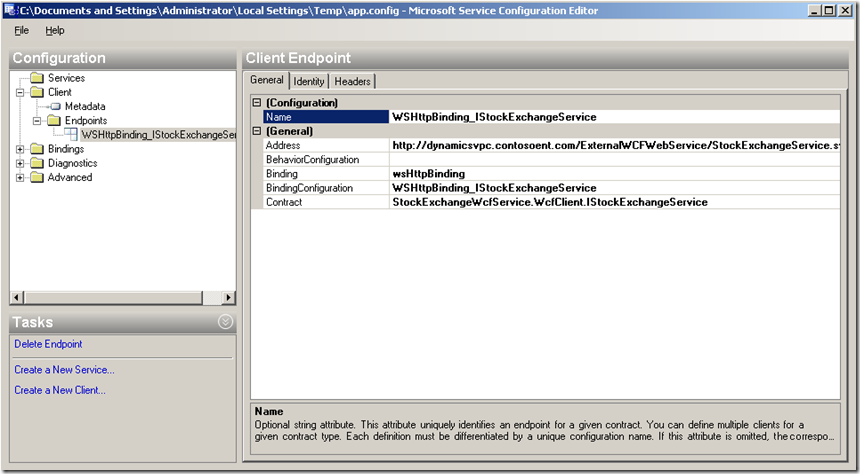

Here you can see how to change the EDM in VS.2008:

|

If you change the “Customer” entity this way in the EDM, you can see that the generated SQL adds that column to the WHERE clause of every Update or Delete command.

In this case I’m using the “FirstName” field (you could even use several fields), but what I usually use is a TimeStamp field added to each table and entity. That way I can always check whether the record has changed in the same way, using the same field.

Then, an OptimisticConcurrencyException is raised if the original row is not found, that is, if the statement affects zero rows because the WHERE clause no longer matches.

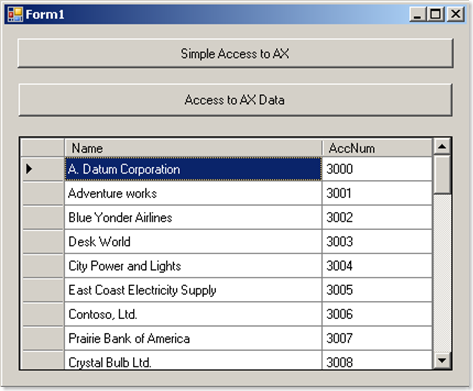

Here you can see that exception being raised when I modified the customer’s name in the database just before updating from the application using Entity Framework:

|

“Store update, insert, or delete statement affected an unexpected number of rows (0). Entities may have been modified or deleted since entities were loaded. Refresh ObjectStateManager entries.” |

Cool! This is great. When we get this exception we can decide whether we want the user to know about it, refresh the form and maybe start again, or we could show the differences, or we could even just catch the exception, log the problem in a trace and save the last operation anyway.

OK, but think! What goes wrong when we are using detached entities? Sure, you got it! For the EF context to know the original values, you’d need to use the same context from the very moment we started querying the database, through updating, and so on. But remember, we are in a scenario (N-Tier and N-Layered apps) where EF contexts have a short life, because we use stateless server objects and we detach the EF entity objects. When we attach the updated entity again, we do it on a completely new context, and that new context knows nothing about the original query and the original values! (If you want to see more about this, just read my first post.)
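To make those choices concrete for the connected case (one context used for both the query and the save), here is a minimal sketch. `SaveWithPolicy` and its `lastWriteWins` flag are hypothetical names used only for illustration; the EF calls themselves (`SaveChanges`, `Refresh`, `RefreshMode`) are the standard EF v1 API. It does not solve the detached-entity problem described above.

```csharp
using System.Data;           // OptimisticConcurrencyException
using System.Data.Objects;   // ObjectContext, RefreshMode

public static class ConcurrencyHandling
{
    // Hypothetical helper: saves pending changes and applies one of two
    // policies if the store reports a concurrency conflict.
    public static void SaveWithPolicy(ObjectContext context, object entity, bool lastWriteWins)
    {
        try
        {
            context.SaveChanges();
        }
        catch (OptimisticConcurrencyException)
        {
            if (lastWriteWins)
            {
                // Keep the client's values, reload the original values from
                // the database, and save again ("just save the last operation").
                context.Refresh(RefreshMode.ClientWins, entity);
                context.SaveChanges();
            }
            else
            {
                // Discard the client's changes, reload the current database
                // values, and let the caller tell the user to start again.
                context.Refresh(RefreshMode.StoreWins, entity);
                throw;
            }
        }
    }
}
```

Which branch is right depends on the application: overwriting silently is the simplest option, but it is exactly the behavior optimistic concurrency is meant to make visible.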

For instance, if we just set the concurrency mode to Fixed on any field and we use the code I showed in my first post about EF and data updates in N-Tier apps, it won’t work! Why? Because when the business class gets the updated data entity back, the context does not know the real original values. If we use the first method I explained in my first post (using the AttachUpdated() custom extension method and context.TryGetObjectByKey()), the WHERE clause is going to compare against the current database values (which are not the real original values), so a change made by another user goes undetected. If we use the second method I showed (using just SetAllModified()), it is even worse: the context does not know anything about the current database values, and it is going to place in the WHERE clause exactly the same value we want to update. So in this second case it will throw an exception whenever the concurrency field itself was edited!

So, what do we need in order to make this work? We need to “artificially” reconstruct the context as if it were the original one. I mean, we need to keep somewhere the original values we got the first time we queried the database, and attach them to the second short-lived context right before we apply the updated entity. Done that way, it works like a charm! 🙂

Let’s see the code. For the query business methods and the WCF Service, we don’t need to change anything when we are just querying; we return data to the client tier in the same manner. But when we get the data in the client tier (let’s say in a WPF, Silverlight or WinForms app), we need to keep that data in a safe place. I mean, we need to clone the entity so that, after the user has changed the fields in the form and pressed the Save button, we send the updated entity plus the original values, and the server components can re-create a context that knows how to handle optimistic concurrency.

But before getting deep into the server “update methods”, I want to point out another problem: Entity Framework lacks an entity “Clone()” method. The first option (a really bad one) would be to query the server WCF services twice so we get two data entities. But this approach is ugly because we lose performance, and there is also a small possibility that the data in the database has changed between the two queries. So, no way! We need to clone our detached entity on the client side!

OK, for that, I’m using my own custom method (a utility method in the client layers) which is based on memory stream copies.

You could find faster ways to clone EF detached entities, but the thing I like about this one is that it takes just a few lines of code.

For instance, Matthieu MEZIL has made an entity cloner based on Reflection:

http://msmvps.com/blogs/matthieu/archive/2008/05/31/entity-cloner.aspx

Take a look. It could be faster (I have not compared it), but it is a lot of code…, well, you can choose. 🙂
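For comparison, the memory-stream idea can be sketched in a few lines. This is an illustrative reconstruction, not the original utility method: `EntityCloner` and `CustomerDto` are hypothetical names, and the use of `DataContractSerializer` is an assumption (it should apply to the entities in this post, since they already travel through WCF as data contracts).

```csharp
using System.IO;
using System.Runtime.Serialization;

// Hypothetical stand-in for a detached entity, used only to show the call.
[DataContract]
public class CustomerDto
{
    [DataMember] public int Id { get; set; }
    [DataMember] public string FirstName { get; set; }
}

public static class EntityCloner
{
    // Deep-copies an object by serializing it into a MemoryStream and
    // deserializing a brand new instance from the same bytes.
    public static T Clone<T>(T source) where T : class
    {
        if (source == null) return null;

        var serializer = new DataContractSerializer(typeof(T));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, source);
            stream.Position = 0;   // rewind before reading the copy back
            return (T)serializer.ReadObject(stream);
        }
    }
}
```

On the client, the clone would be taken right after the query returns and before the form binds to the entity, for example `var original = EntityCloner.Clone(customer);`, so that both objects can be sent back on Save.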

Now we get to the fun stuff: let’s see how we handle the server code for updates and optimistic concurrency.

First of all, we need a slightly different WCF Service method, because we need to get two entities (original values and updated values):

    public void CustomerBll_UpdateCustomerEx(Customer newCustomer, Customer originalCustomer)

And then, our business class method, the “core” of this post!! 🙂

    public void UpdateCustomerOptimisticConcurrency(Customer detachedCustomer, Customer originalCustomer)
    //(CDLTLL)Apply property changes to all referenced entities in context
    // Apply entity properties changes to the context
    throw (e); }

Here we first tell the context what the original values in the database were when we first queried. Only then do we tell the context about our updated data, and we do it not just for our entity: we also apply changes to all referenced entities within our EF graph (using our custom extension method called context.ApplyReferencePropertyChanges). So when we finally call “context.SaveChanges()”, the EF will smoothly handle the “Optimistic Concurrency Updates and/or its Exception”, because it puts those original values in the WHERE clause of the final Update/Delete statement. Cool! 🙂
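Boiled down to standard EF v1 calls, the sequence described above can be sketched as follows. This is a simplified illustration, not the original listing: `UpdateWithOriginalValues` is a hypothetical helper, it works against any `ObjectContext`, and it leaves out the custom `ApplyReferencePropertyChanges` extension, so it only covers the scalar properties of a single entity.

```csharp
using System.Data;                       // OptimisticConcurrencyException
using System.Data.Objects;               // ObjectContext
using System.Data.Objects.DataClasses;   // IEntityWithKey

public static class DetachedUpdate
{
    // Hypothetical helper. Both entities must carry the same EntityKey,
    // which is the case when they come from the same query and were cloned.
    public static void UpdateWithOriginalValues(
        ObjectContext context, IEntityWithKey original, IEntityWithKey updated)
    {
        // 1. Attach the clone taken at query time. It enters the context as
        //    Unchanged, so its values become the context's original values.
        context.Attach(original);

        // 2. Copy the edited scalar values onto the attached entity. The
        //    properties that differ are marked as modified.
        context.ApplyPropertyChanges(original.EntityKey.EntitySetName, updated);

        // 3. The UPDATE now carries the original values of every
        //    ConcurrencyMode=Fixed property in its WHERE clause. If nobody
        //    changed the row it succeeds; otherwise SaveChanges throws
        //    OptimisticConcurrencyException, which the caller can handle.
        context.SaveChanges();
    }
}
```

The order matters: attaching the updated entity first would make its edited values look like the original ones, which is the failure mode described earlier in this post.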

FINAL THOUGHTS

It would be nice to have a way to hide the original values (a hidden cloned entity) inside the regular detached entity object. That would make it transparent for the developer working on the client layers…

You can also take a look at ENTITYBAG (by Danny Simmons). I’m not using it at all in the code I’ve been talking about.

Perseus: Entity Framework EntityBag

http://code.msdn.microsoft.com/entitybag/

EntityBag – Wrap-up and Future Directions

http://blogs.msdn.com/dsimmons/archive/2008/01/28/entitybag-wrap-up-and-future-directions.aspx

EntityBag Part II – Modes and Constructor

http://blogs.msdn.com/dsimmons/archive/2008/01/20/entitybag-part-ii-modes-and-constructor.aspx

EntityBag Modes with the Entity Framework (John Papa)

http://nypapa.com/all/entitybag-modes-with-the-entity-framework/

Coming to the Entity Framework: A Serializable EntityBag for n-Tier Deployment

http://oakleafblog.blogspot.com/2008/01/coming-to-entity-framework-serializable.html

The EntityBag concept is actually quite neat and it solves many of the points I talked about, but it also has several restrictions:

First of all, it requires us to run .NET and the EF on the client, and it also requires the code for your object model to be available on the client. So you lose the great discoverability and interoperability we have with basic Web Services (or advanced WCF wsHttp or basicHttp services, etc.), and I guess ENTITYBAG is not interoperable with Java or any non-.NET language.
