Introduction

In the law enforcement community, the number of media assets continues to grow by the day. From video captured by body-worn cameras to audio recordings of 911 dispatch calls and prison inmate phone calls, media assets are becoming critical to improving the quality of law enforcement services and the business processes necessary to keep our communities safe.

Law enforcement media presents some compelling scenarios for Azure Media Services, particularly with respect to media discovery and analysis. Azure Media Services provides a market-differentiated content extraction feature called the Azure Media Indexer, formerly known as the Microsoft Audio Video Indexing Service (MAVIS), that can be used to search inside audio and video files. This is a powerful capability and can serve as the core of a media indexing and analysis solution. Combining the Azure Media Indexer with rich analytics services within the Azure platform, such as Azure Machine Learning, Azure Stream Analytics, and Power BI, gives customers a comprehensive media solution that not only provides rich media playback capabilities but also allows for the creation of business solutions that enable organizations to use media assets to make business decisions or provide situational awareness.

I recently led a proof-of-concept engagement with a customer where we explored Azure Media Services in detail and produced a prototype that showcased the power and flexibility of the Azure platform in delivering a media indexing and analytics solution for law enforcement. I also engaged the services of Microsoft 2015 Data Analytics Partner of the Year, Neudesic, to support development of the analytical components of the solution. It was truly a team effort! I want to share a few lessons learned with you, dear reader, in case you encounter a similar project scenario and are considering the Microsoft Azure platform.

The Scenario

The customer, herein referred to as Contoso Sheriff Department, was looking to design and develop a solution that would enable it to better predict when and where crimes occur within its jurisdiction, in order to gain better situational awareness, improve response to criminal events, and optimize its distribution of officers to “trouble areas” to prevent future crimes from occurring.

The goal of the proof-of-concept was to build a media ingestion, search/discovery, and analytics solution hosted in Azure Government, and complemented by additional analytics services currently only available in Azure Public, to deliver a business solution that allows staff in the department to ingest and analyze audio content and that provides useful outputs of the analysis to help staff better predict future criminal activity.

Customer Data

The sample data provided consisted of audio recordings originating from the Contoso Sheriff Department. Characteristics of the data included the following:

- 15 TB of audio files (a little over 3.4 million files)

- Audio consists of Prison Communication System (PCS) calls between inmates and person(s) outside of the sheriff department (e.g. girlfriends/boyfriends/significant others, friends and family)

- During a PCS call, caller and callee are notified that the call is being recorded.

- Audio quality is poor. In some instances, caller or callee dialect is difficult to understand (for example: mumbling of words or lowered voice volume).

The Solution

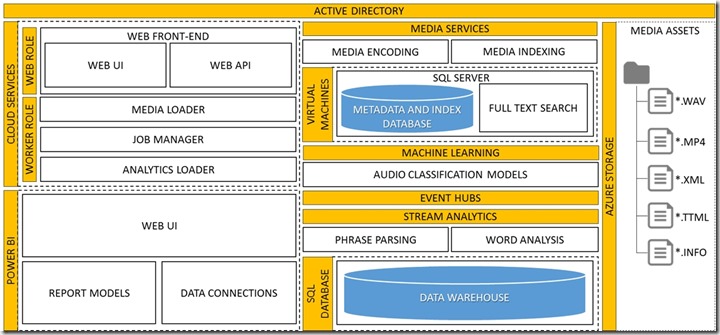

The solution needed to store the audio files in Azure Storage (block blobs), encode them for playback in the Azure Media Player (using the Azure Media Encoder), index them (using the Azure Media Indexer), perform analytics against the indexed output, and display that output via a business intelligence dashboard. Components of the solution included the following:

- ASP.NET MVC front end to be used to upload, search, listen to encoded audio files, and view audio captions as well as create/update metadata associated with uploaded files (e.g. Title, Description, Language, Date Uploaded, etc.)

- ASP.NET Web API for providing a RESTful interface for performing CRUD operations of audio files and associated metadata

- Azure Media Services for encoding and indexing of audio files

- Azure Machine Learning for pattern matching, identification, determination of slang and/or code words

- Azure Virtual Machines for storing metadata and search index of uploaded/processed audio files

- Azure SQL Database for hosting a data warehouse leveraged by analytics processing backend

- Azure Event Hubs/Stream Analytics for concurrent data stream processing and routing, recognition/detection of keywords and/or terms (based on output from Azure Media Indexer) and publishing into data warehouse

- Azure Active Directory for providing user authentication/authorization

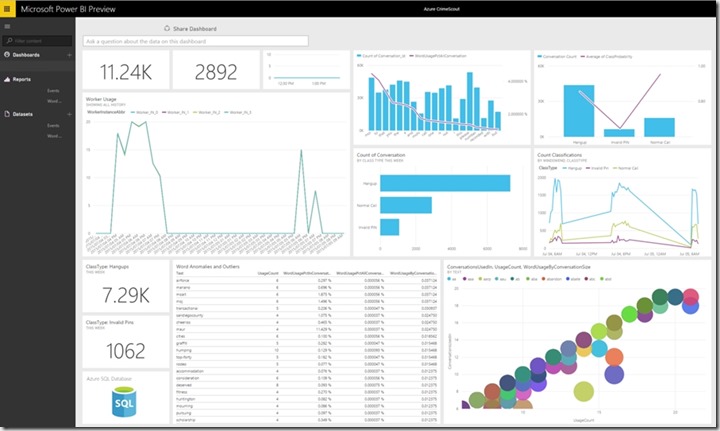

- Power BI to provide a self-service dashboard experience to display analysis results

The high-level architecture of such a solution including the solution components and where they sit as part of the Azure cloud environment is shown in the following diagram:

Media Ingestion/Processing Flow

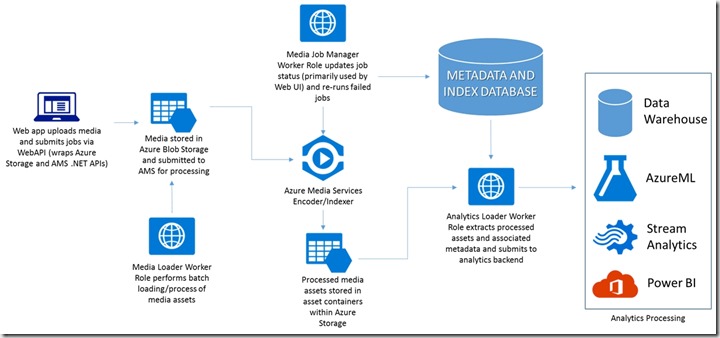

Media ingestion/processing leveraging Azure Media Services was straightforward. Media files (audio or video) can be uploaded to the solution via the web-based front end of the application, or in batch via an Azure Cloud Service worker role that can process large volumes of media files stored as block blobs in Azure Blob Storage.

The media ingestion/processing flow is depicted in the following diagram:

During media processing (e.g. encoding/indexing by Azure Media Services), the solution employed additional worker roles to track the processing status of media assets flowing through the system and to submit the processed assets to its analytics processing backend for data analysis and business intelligence. These worker roles relied on Azure Media Services job notifications delivered through Azure queues to determine when an encoding/indexing job had completed so that analytics processing could begin. To learn more about this technique, see the Azure Media Services documentation on checking job progress.
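As a rough illustration of the notification-driven hand-off described above, the sketch below decides from a queue message body whether a job has finished and analytics can begin. The JSON shape (`EventType`, `Properties.JobId`, `Properties.NewState`) and the `Finished` state name are assumptions made for illustration; check the message format your Media Services account actually emits. Reading the message from the Azure queue is deliberately left out.

```python
import json


def parse_job_notification(body):
    """Return (job_id, new_state) for a job-state-change message, else None.

    The field names used here are assumptions, not a documented contract.
    """
    message = json.loads(body)
    if message.get("EventType") != "JobStateChange":
        return None
    props = message.get("Properties", {})
    job_id = props.get("JobId")
    new_state = props.get("NewState")
    if job_id is None or new_state is None:
        return None
    return job_id, new_state


def ready_for_analytics(body):
    """True only when the message reports a successfully finished job."""
    parsed = parse_job_notification(body)
    return parsed is not None and parsed[1] == "Finished"


# Hypothetical message body, as a worker role might read it from the queue.
sample = json.dumps({
    "EventType": "JobStateChange",
    "Properties": {
        "JobId": "job-001",
        "OldState": "Processing",
        "NewState": "Finished",
    },
})
```

A worker role would call `ready_for_analytics` on each dequeued message and submit the job's output assets to the analytics backend only when it returns `True`.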

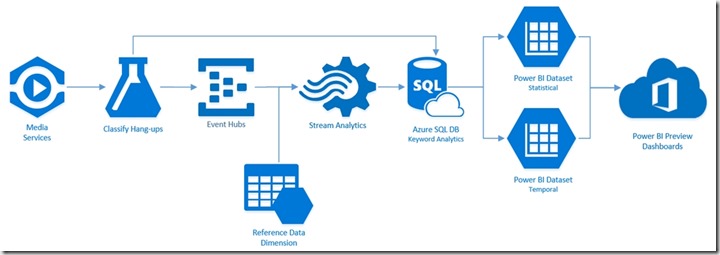

Analytics processing within the solution used Azure Machine Learning to execute several classification models against the processed media assets, using both the Multiclass Decision Forest and Multiclass Decision Jungle algorithms to classify each call. The classification types were defined as follows:

- Standard (Normal) Call – Call that represents a standard phone conversation that occurs between inmate and a person(s) outside of the jail

- Standard Hang-Up Call – Call that represents a classic hang-up scenario (e.g. Callee rejected the collect call)

- Invalid PIN used – Call that represents caller entering in an invalid PIN needed to initiate a collect call from the jail

The analytics processing flow is depicted in the following diagram:

After classification, the system then attempts to capture the probability that it classified the call in the correct category based on characteristics of the processed media assets. These characteristics are largely gleaned from the outputs of the media processing in Azure Media Services, which include:

- Closed Caption text files in Timed-Text Markup Language (TTML) and Synchronized Accessible Media Interchange (SAMI) format

- Keywords for a given media file generated in XML format

The output assets are interrogated by the analytics backend and a data warehouse is populated with the results of analytics processing through a combination of Azure Event Hubs and Azure Stream Analytics.
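To make the "interrogation" of caption output more concrete, here is a minimal sketch that reads caption segments from a TTML document and flags terms from a watchlist. The sample TTML, the watchlist terms, and the function names are all hypothetical; the real indexer output may use additional elements or attributes, and the actual solution performed this detection through Event Hubs and Stream Analytics rather than in-process code.

```python
import xml.etree.ElementTree as ET

# Namespace used by TTML documents.
TTML_NS = "{http://www.w3.org/ns/ttml}"

# Hypothetical caption file for illustration only.
SAMPLE_TTML = """<tt xmlns="http://www.w3.org/ns/ttml">
  <body>
    <div>
      <p begin="00:00:01.000" end="00:00:04.000">I will call you again tomorrow</p>
      <p begin="00:00:04.500" end="00:00:07.000">bring the package to the usual place</p>
    </div>
  </body>
</tt>"""


def caption_segments(ttml_text):
    """Yield (begin, end, text) for every <p> caption element."""
    root = ET.fromstring(ttml_text)
    for p in root.iter(TTML_NS + "p"):
        # Collapse whitespace so multi-line captions become one string.
        text = " ".join("".join(p.itertext()).split())
        yield p.get("begin"), p.get("end"), text


def find_terms(ttml_text, watchlist):
    """Return (begin, term) pairs for watchlist terms spoken in captions."""
    terms = [t.lower() for t in watchlist]
    hits = []
    for begin, _end, text in caption_segments(ttml_text):
        words = text.lower().split()
        for term in terms:
            if term in words:
                hits.append((begin, term))
    return hits
```

Calling `find_terms(SAMPLE_TTML, ["package", "tomorrow"])` returns one hit per caption segment, each paired with the segment's start time so the dashboard can link back to the position in the recording.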

All output of analytics processing is visualized through a Power BI-based “executive dashboard” that provides insight into the processed data via standard Power BI features/functionality for situational awareness. The screenshot below shows the Power BI dashboard (names redacted to protect the innocent).

Things to consider

When building solutions of this nature, there are some important considerations with respect to the architecture of the solution, the design and operations of the Azure Services upon which the solution was implemented, and general “gotchas” to look out for. I’ve summarized them below.

Azure Media Services Architecture

One important consideration when building solutions of this type is understanding the current architecture of Azure Media Services itself. A developer’s initial assumption going into such an effort, and one that can be a costly mistake, is that Azure Media Services can process a large volume of media data in a small amount of time with a reasonable set of computing resources. I mean, it is the cloud…right?

It’s common to have such an expectation when working with cloud-based technologies, but that shouldn’t be a dependency that you base your entire solution architecture around without understanding what constraints, if any, are imposed by the cloud services you consume.

For example, the scenario outlined earlier isn’t a workload that Azure Media Services typically targets; however, it is a growing scenario that we’re starting to see with a large number of customers. While Azure Media Services can certainly process this volume of data, careful analysis must be performed on the type of media content that will be processed to ensure optimal results, including:

- Inspection of media file size/duration and quality of the content contained

- Classification of what should or should not be counted as media requiring indexing. Oftentimes, you’ll find that a lot of media content can be classified as “throwaway” due to poor quality (for example, spoken voices in the file are unclear due to the dialect being used, a faulty recording device, or a host of other factors)
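A triage pass of this kind can be sketched as a simple filter over file metadata. The thresholds, field names, and the idea of using bytes per second as a crude quality proxy are all assumptions for illustration; real criteria would come from inspecting a sample of your own recordings.

```python
from dataclasses import dataclass


@dataclass
class AudioFile:
    name: str
    duration_seconds: float
    size_bytes: int


# Hypothetical thresholds; tune them against a sample of real recordings.
MIN_DURATION_SECONDS = 10.0   # shorter calls are likely hang-ups
MIN_BYTES_PER_SECOND = 1000   # very low data rates suggest unusable audio


def should_index(audio):
    """Return True when a file looks worth submitting for indexing."""
    if audio.duration_seconds < MIN_DURATION_SECONDS:
        return False
    bytes_per_second = audio.size_bytes / audio.duration_seconds
    return bytes_per_second >= MIN_BYTES_PER_SECOND


def partition(files):
    """Split files into (to_index, throwaway) lists."""
    to_index = [f for f in files if should_index(f)]
    throwaway = [f for f in files if not should_index(f)]
    return to_index, throwaway
```

Running `partition` before job submission keeps throwaway content from consuming indexing time and account quota.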

There are also constraints imposed on an Azure subscription, particularly within Azure Media Services, that can impact your solution architecture. A detailed listing of those constraints is available in the Azure documentation. Of those constraints, the key ones to highlight are:

- The number of assets per Azure Media Services account is capped at 1,000,000. If you have a large number of files to process, the problem is compounded by the media indexing process, which produces 4 outputs for every media file indexed and can cut into the asset limit very quickly. These outputs are the closed caption files in TTML and SAMI formats, the keyword file in XML format, and the Audio Indexing Blob (AIB).

- The number of jobs per Azure Media Services account is capped at 50,000

- The number of encoding units per Azure Media Services account is capped at 25

Therefore, it is important that you go through an analysis exercise, regardless of what media indexing technology you use, to determine what makes sense for indexing and what doesn’t.
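A back-of-the-envelope calculation shows why this analysis matters. The sketch below takes the figures from this post at face value (a 1,000,000 asset cap and 4 outputs per indexed file) and assumes, for illustration, that the source file and each output count as separate assets against that cap. The function names are hypothetical.

```python
ASSET_LIMIT_PER_ACCOUNT = 1_000_000  # cap quoted in this post
OUTPUTS_PER_INDEXED_FILE = 4         # outputs per indexed file, per this post


def assets_consumed(file_count, count_source=True):
    """Assets used if every file is indexed.

    Assumes each output (and optionally the source file) is one asset.
    """
    per_file = OUTPUTS_PER_INDEXED_FILE + (1 if count_source else 0)
    return file_count * per_file


def accounts_needed(file_count, count_source=True):
    """Smallest number of accounts that stays under the asset cap."""
    total = assets_consumed(file_count, count_source)
    # Ceiling division with integers, avoiding floating-point rounding.
    return -(-total // ASSET_LIMIT_PER_ACCOUNT)


total_files = 3_400_000  # roughly the size of the sample data set
```

Under these assumptions, indexing all 3.4 million files would consume 17,000,000 assets, or 17 accounts' worth of quota, which is why filtering out throwaway content first pays off.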

With respect to Azure Media Services, it is also important to note that the Azure Media Indexer is optimized for accuracy over performance. The Media Indexer architecture is designed around a concept of a shared processor pool as opposed to being reservable (via the Reserved Units functionality currently exhibited by the Azure Media Encoder, which has the responsibility of encoding media for playback). Processing time of media files indexed by the Azure Media Indexer can vary depending on the size and duration of the media file. Therefore, this solution is not intended for scenarios that have a real-time processing requirement or which require a fast turnaround of indexed output. A good rule of thumb is that indexing time is roughly 3x the duration of a media file. Therefore, if you have a media file that is roughly 1 hour in duration, you need to plan for processing time to be upwards of 3 hours to complete indexing.
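The 3x rule of thumb translates into a simple planning estimate. This is only a sketch of the arithmetic: the factor comes from the text above, the function names are hypothetical, and actual indexing time varies with file size, duration, and load on the shared processor pool.

```python
INDEXING_TIME_FACTOR = 3.0  # rule of thumb from the text: ~3x media duration


def estimated_indexing_hours(media_hours, factor=INDEXING_TIME_FACTOR):
    """Estimate indexing time for a single media file, in hours."""
    return media_hours * factor


def estimated_total_hours(durations_hours, factor=INDEXING_TIME_FACTOR):
    """Estimate cumulative indexing time for a batch of files, in hours."""
    return sum(estimated_indexing_hours(d, factor) for d in durations_hours)


one_hour_file = estimated_indexing_hours(1.0)         # 3.0 hours
small_batch = estimated_total_hours([1.0, 0.5, 0.25])  # 5.25 hours
```

The batch figure is cumulative processing time, not wall-clock time; how much of it overlaps depends on how many jobs the service actually runs concurrently.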

Regardless of your design pattern, you should be aware that as powerful as the Azure Media Indexer is, you need to allow time for it to perform its indexing task. There are optimizations that can be made to this process such as:

- Trimming “unwanted” content from the audio file prior to submitting for indexing

- Parallelization of media jobs submitted for indexing

However, at the end of the day, the Azure Media Indexer will execute its deep neural net (DNN) speech recognition algorithms to create the most accurate output possible, and as such, will take the necessary time it needs to do so.
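Parallel submission can be sketched with a bounded thread pool. `submit_indexing_job` below is a hypothetical stand-in that only fabricates a job identifier; in a real solution it would call the Media Services API, and the degree of parallelism would be tuned against your account's limits.

```python
from concurrent.futures import ThreadPoolExecutor


def submit_indexing_job(asset_name):
    """Hypothetical stand-in for the real job submission call."""
    return f"job-for-{asset_name}"


def submit_all(asset_names, max_parallel=4):
    """Submit indexing jobs with at most max_parallel in flight.

    Returns a dict mapping each asset name to its job identifier.
    """
    names = list(asset_names)
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        # pool.map preserves input order, so results line up with names.
        job_ids = list(pool.map(submit_indexing_job, names))
    return dict(zip(names, job_ids))
```

Bounding the pool keeps submission from racing ahead of what the service will process, while still overlapping the waiting time of independent jobs.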

Searching Media Files using SQL Server

As mentioned earlier, one of the outputs from the Azure Media Indexer is a blob known as an Audio Indexing Blob (AIB). This blob format is proprietary to Azure Media Services (more technical background on the AIB is available on the Microsoft Research website). The Azure Media Services team exposes the AIB for searching via SQL Server Full-Text Search. Enabling this capability requires installing an Azure Media Indexer IFilter add-on on a full SQL Server instance, which rules out Azure SQL Database and Azure Search because neither supports the installation or configuration of custom IFilters. To learn more about using the AIB with the Azure Media Indexer and SQL Server, see the Azure Media Indexer documentation.

The reliance upon a custom IFilter to search the Audio Indexing Blob can introduce scalability limitations if it is not accounted for when designing the SQL Server database architecture on Azure Virtual Machines. Your database architecture must be designed to accommodate a potentially massive amount of data and an equally massive volume of data transactions. SQL Server performance best practices apply in this context; for more information, see the guidance on optimizing SQL Server performance in Azure Virtual Machines.

Data Security and Privacy

When dealing with any data, security and privacy should be a top concern, especially in the case of media assets generated by law enforcement. We take data security and privacy very seriously. Security and privacy are embedded into the development of the Azure platform, and we go to great pains to ensure our customers’ data is safe. Visit our Azure Trust Center for more information on our position with respect to security and privacy.

In the scenario outlined earlier, all physical media files as well as output from Azure Media Services indexing and encoding are stored in blob storage within Azure Government, while any metadata captured that provides further context about the media files being indexed is stored in databases running in virtual machines provisioned within Azure Government. Azure Government is a unique offering at Microsoft in that it exhibits the following characteristics:

- Physically isolated datacenter and network

- Data, applications, and hardware reside in CONUS

- Geographical redundancy with datacenters located more than 500 miles apart

- Managed/Operated by screened US persons

- Strong commitment to upholding rigorous compliance requirements (to include Criminal Justice Information Services (CJIS), which carries high importance within the Justice & Public Safety community)

Azure Service Availability

The solution prototype produced from the proof-of-concept engagement makes use of a number of Azure services as part of its architecture. In order to leverage these services, the solution had to be developed and deployed across both an Azure Government and an Azure Public subscription. This was done to account for a few feature gaps between Azure Government and Azure Public. That said, the Azure Government team is diligently working to close the feature parity gap so that this kind of split deployment is no longer needed for lack of service availability within the Azure Government offering. The table below lists the services and technologies used in the development of the proof-of-concept solution.

| TECHNOLOGY | SUPPORTED IN AZURE PUBLIC? (Y/N) | SUPPORTED IN AZURE GOVERNMENT? (Y/N) |
| --- | --- | --- |
| ASP.NET MVC | N/A | N/A |
| ASP.NET WebAPI | N/A | N/A |
| Entity Framework | N/A | N/A |
| Azure Media Services | YES | YES |
| Azure Storage (Blobs) | YES | YES |
| Azure Virtual Machines | YES | YES |
| Azure Cloud Services | YES | YES |
| Azure Active Directory | YES | YES |
| Azure SQL Database | YES | YES |
| Azure Service Bus | YES | YES |
| Azure Event Hubs | YES | YES |
| Azure Machine Learning | YES | NOT YET |
| Azure Stream Analytics | YES | NOT YET |
| Microsoft Power BI | YES | NOT YET |

Summary

This post outlined a proof-of-concept solution focused on media indexing and analysis using Azure Media Services and a host of Azure Services provided by the Azure Platform. Hopefully you learned a bit from this post that will assist you in your future development of cloud-based media solutions.

Happy Developing!

Lamont Harrington

Principal Program Manager

Azure Engineering Customer Advisory Team

(cross-posted from www.lamontharringtonsblog.com)
