Generative AI is not just a technological advancement; it’s a paradigm shift that is redefining the landscape of customer interaction. Azure SQL Database stands at the forefront of this revolution, offering unparalleled opportunities to harness the power of “your data” in crafting state-of-the-art applications.

As we stand at the cusp of an AI renaissance, it’s clear that AI-enabled applications are becoming the new norm, from personal devices to the vast expanse of the cloud. Yet, it’s not the technology alone that sets you apart—it’s how you leverage it with your unique datasets. Your data is the key to delivering personalized experiences that your competitors simply cannot replicate. In this era, AI is the great equalizer, but your data is the ultimate differentiator.

Consider the innovative application pattern of Retrieval Augmented Generation (RAG). By creating vector embeddings from your data and employing similarity searches, coupled with the reasoning capabilities of a Large Language Model (LLM), you can craft experiences that resonate deeply with your customers’ needs.

Take inspiration from Walmart’s groundbreaking approach, as showcased in their CES 2024 Keynote [2]. They’ve transformed their shopping app into an intuitive platform where customers can effortlessly discover the perfect products for any occasion, like hosting a Superbowl party, with just a single search.

I thought it would be interesting to try building such an app. Should be easy, isn’t it? Prompt an LLM with your query and be done! Football season is long gone, but it is the season for the graduation parties, so I decided to look for “help me plan a graduation party”.

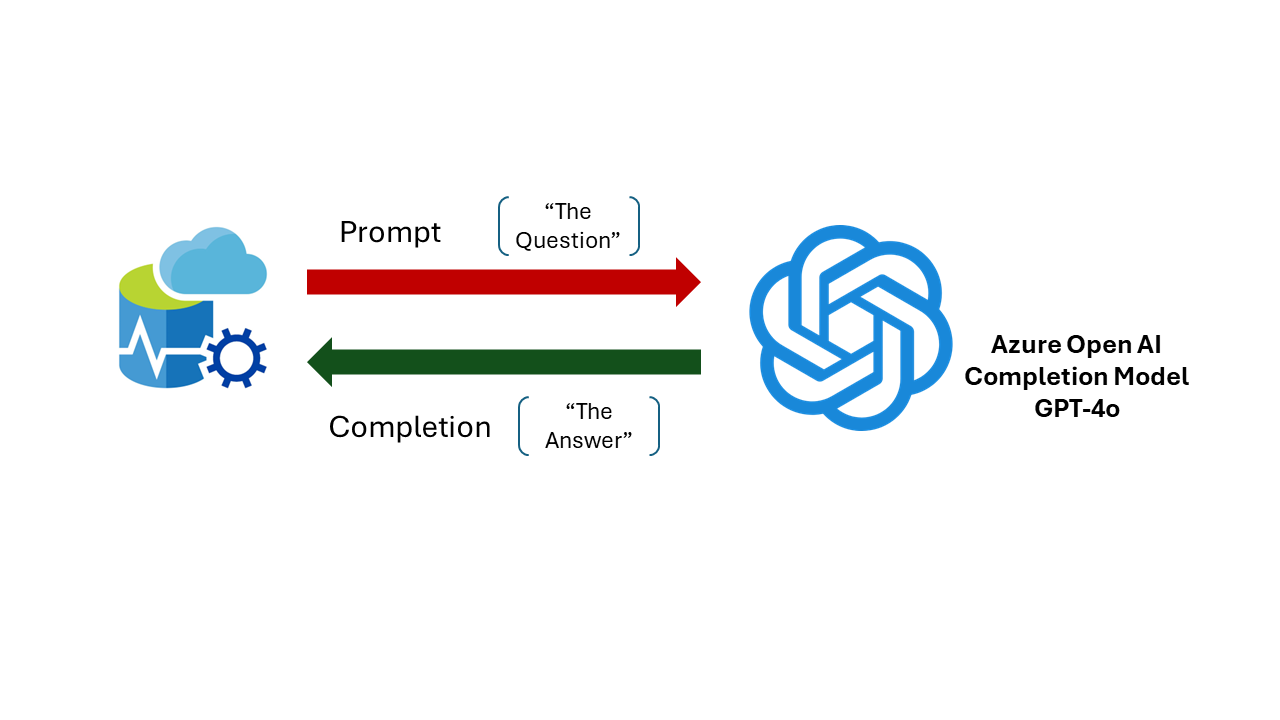

Being a database person, I set out to do this using T-SQL. We need to build a prompt with our search query, add some instructions to the LLM, and then send the prompt to an LLM to gather and synthesize the result for us. I created an Azure Open AI deployment for Completions API with GPT-4o, and used the simple yet powerful stored procedure sp_invoke_external_rest_endpoint from the T-SQL code to call the model.

declare @search_text nvarchar(max) = 'help me plan a high school graduation party' declare @llm_payload nvarchar(max); set @llm_payload = json_object( 'messages': json_array( json_object( 'role':'system', 'content':'You are an awesome AI shopping assistant tasked with helping users find appropriate items they are looking for the occasion.' ), json_object( 'role':'user', 'content': + @search_text + 'You answer needs to be a json object with the following format. { "answer": // the answer to the question, add a source reference to the end of each sentence. Source reference is the product Id. "products": // a comma-separated list of product ids that you used to come up with the answer. "thoughts": // brief thoughts on how you came up with the answer, e.g. what sources you used, what you thought about, etc. }' ) ), 'max_tokens': 800, 'temperature': 0.7, 'frequency_penalty': 0, 'presence_penalty': 0, 'top_p': 0.95, 'stop': null ); declare @retval int, @response nvarchar(max); exec @retval = sp_invoke_external_rest_endpoint @url = 'https://<endpoint>/openai/deployments/<deployment name>/chat/completions?api-version=2024-05-01-preview', -- Completions API @headers = '{"Content-Type":"application/json"}', @method = 'POST', @credential = <credential>, @timeout = 120, @payload = @llm_payload, @response = @response output; select @retval as 'Return Code', @response as 'Response'; select [key], [value] from openjson(( select t.value from openjson(@response, '$.result.choices') c cross apply openjson(c.value, '$.message') t where t.[key] = 'content' ))

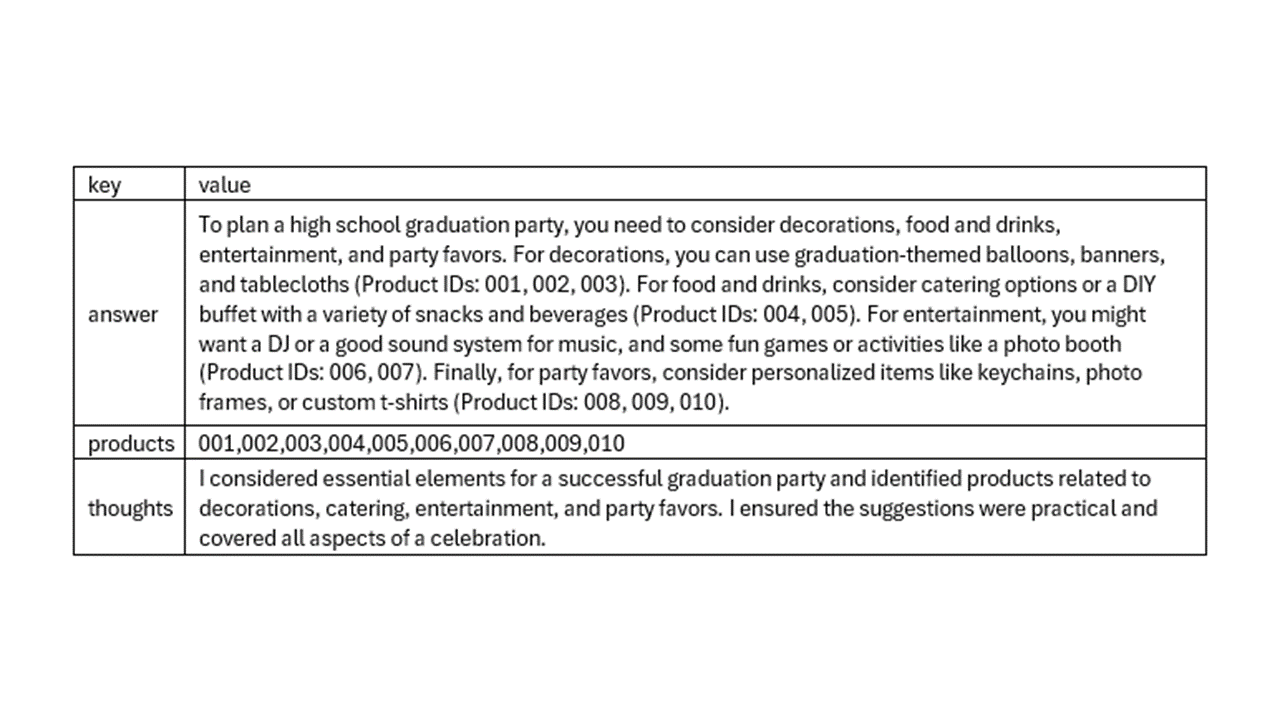

The response comes back as:

Observe the response from the LLM.

- It does give some good suggestions, such as decorations, food, drinks and even recommends having a DJ at the party. Nice!

- Note that the product IDs are just made up. The LLM lacks information about which set of specific products to consider for this query and provides rather generic answers.

While the generic answer is somewhat useful but doesn’t help further; because now I need to find out which store to go to pick up the balloons and the decorations, and which specific decoration items to pick for the occasion, and so on.

This approach (sending a prompt to an LLM with my query) does provide a search result, but nothing more than that. It doesn’t fulfil my quest.

What can a retailer like Walmart do better? It has all the data about its products. And, that’s where lies the differentiator. You can look into your products data and find applicable items for the occasion, and then use the reasoning power of the LLM on this subset of the data to provide you with the best information to fulfil your quest. We will do this in three steps:

Step 1: Get Products data

Step 2: Find products from this dataset that are applicable (in other words, “similar”) to the occasion you have in mind, using vector similarity search

Step 3: Use LLM to reason over the data, and synthesize a response using the products that are available to buy in the retailer catalog

Step 1: Get Products Data

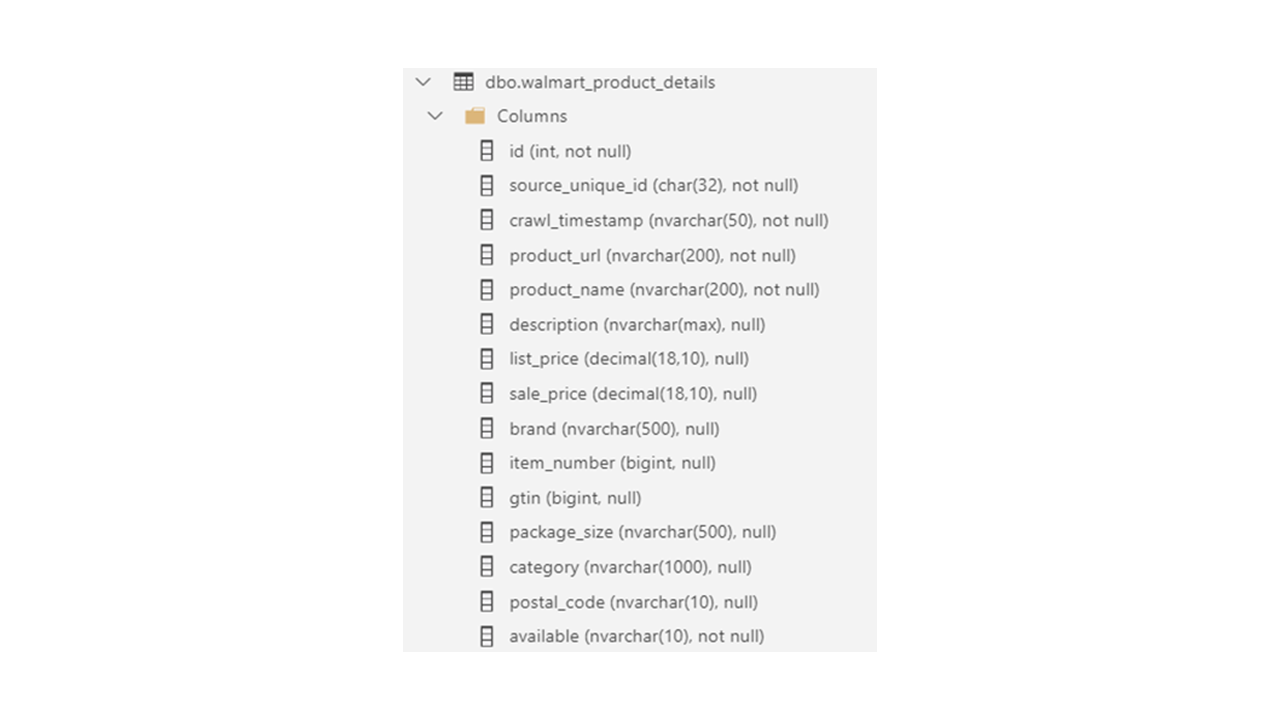

I found that a subset of Walmart products data is available on Kaggle [3]. It is a csv file with 30000 products, and 14 columns / attributes for each product. It is under CC0: Public Domain license.

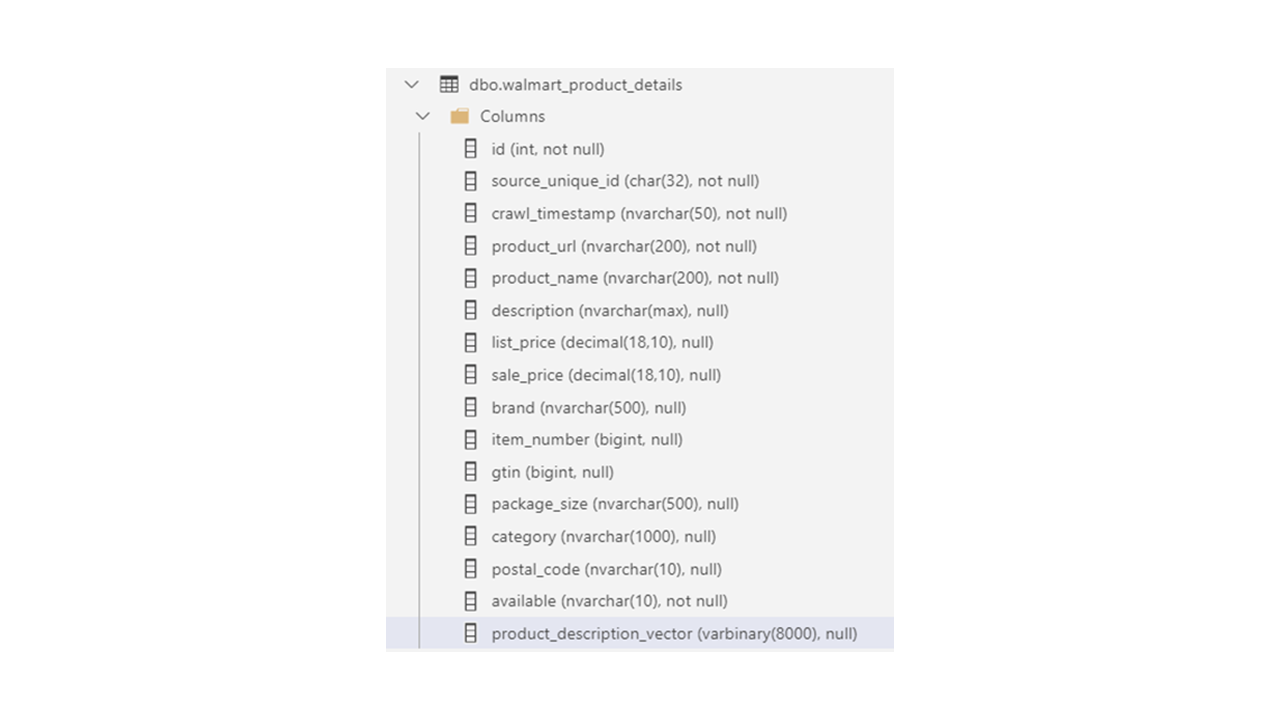

I downloaded the file, and loaded the data into a table in Azure SQL Database.

Step 2: Find applicable products

To find the products applicable to our occasion, we will use vector similarity search. The new vector capabilities introduced in Azure SQL Database is a game changer here. You don’t need to take your data to a specialized vector store to perform vector similarity search. Taking the data from your operational data store (Azure SQL Database, in this case) to a specialized vector store for performing vector similarity search will increase solution cost and complexity, not to mention the need to move data back and forth between two disparate systems, with all the security, compliance and performance implications that come with data movement.

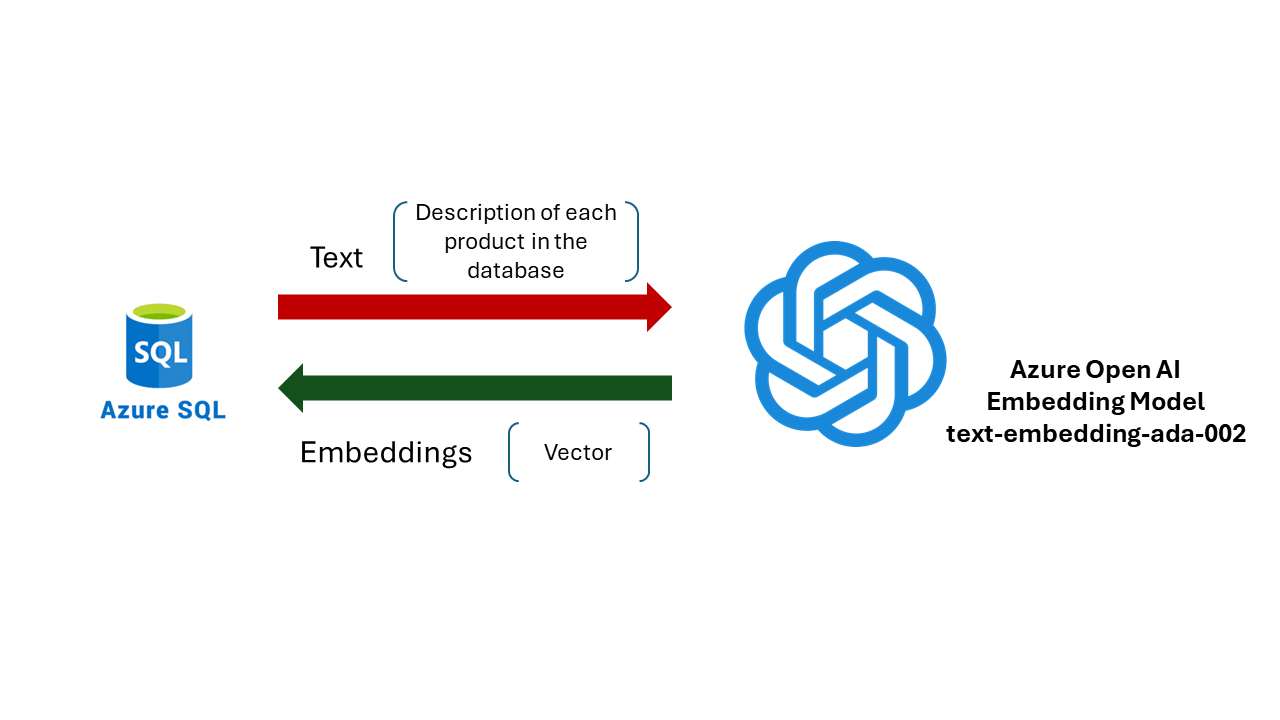

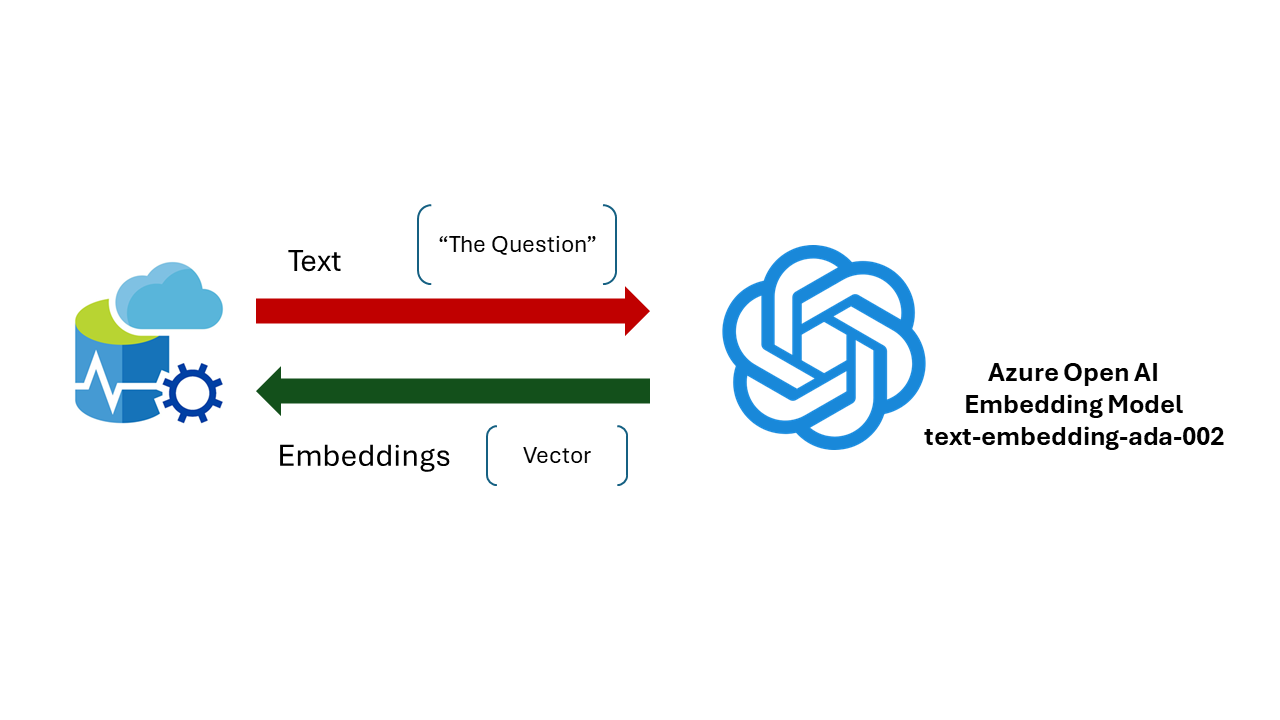

Step 2.1: Generate Embeddings on your data

The first step is to generate embeddings on the relevant columns of the table (for all the rows in the table) and store them in the database. We will use an Embeddings model of Azure Open AI (please note the difference between the two deployments of Azure Open AI — one for Completions API, and the other for Embeddings) to create embeddings.

[This is a one-time effort. You need to generate embedding for a data item once. If the data changes, you will need to recreate the embedding with the new data and update the corresponding embedding value. If new rows get inserted into the table, you will create embeddings for those new rows and store in the database.]

For our scenario, the most important information corresponding to a product’s relevance to an occasion are the “product_name” and the “description” columns. We will create vector embedding for the concatenated text of these two columns in each row, and store the embeddings alongside each row of the table.

To store vector embeddings, we will use a column of type varbinary(8000) (Please note, in the private preview release of the vector capability in Azure SQL Database, vector embeddings are stored in varbinary(8000) columns. This could change in future releases).

First, we will alter the table to add a column to store embeddings.

alter table [dbo].[walmart_product_details]

add product_description_vector varbinary(8000) null;

Next step is to create embeddings for each row in the description column, and store in the newly added embeddings column.

The stored procedure below takes a string (such as the description of a product), makes an API call to a large language model for embeddings, and then returns the embedding.

create or alter procedure dbo.create_embeddings ( @input_text nvarchar(max), @embedding varbinary(8000) output ) AS BEGINdeclare @url varchar(max) = 'https://<endpoint>/openai/deployments/<deployment name>/embeddings?api-version=2023-03-15-preview'; --Embeddings model declare @payload nvarchar(max) = json_object('input': @input_text); declare @response nvarchar(max); declare @retval int; -- Call to OpenAI to get the embedding of the search text begin try exec @retval = sp_invoke_external_rest_endpoint @url = @url, @method = 'POST', @credential = <credential>, @payload = @payload, @response = @response output; end try begin catch select 'SQL' as error_source, error_number() as error_code, error_message() as error_message return; end catch if (@retval != 0) begin select 'OPENAI' as error_source, json_value(@response, '$.result.error.code') as error_code, json_value(@response, '$.result.error.message') as error_message, @response as error_response return; end -- Parse the embedding returned by Azure Open AI declare @json_embedding nvarchar(max) = json_query(@response, '$.result.data[0].embedding'); -- Convert the JSON array to a vector and set return parameter set @embedding = JSON_ARRAY_TO_VECTOR(@json_embedding); END;

The key ingredient is the sp_invoke_external_rest_endpoint stored procedure, that enables you to call any REST endpoint (such as Azure Open AI or Open AI endpoints, PowerBI, Azure Functions, etc.) from within T-SQL code. Read more about it here.

We will call the create_embeddings stored procedure we just created, and create embeddings for each product description, one row at a time:

declare @i int = 1;

declare @text nvarchar(max);

declare @vector varbinary(8000);

while @i <= 30000

BEGIN

set @text = (SELECT isnull([product_name],'') + ': ' + isnull([Description],'') from dbo.walmart_product_details where id = @i);

exec dbo.create_embeddings @text, @vector output;

update [dbo].[walmart_product_details] set [product_description_vector] = @vector where id = @i;

set @i = @i + 1;

if @i % 100 = 0

begin

WAITFOR DELAY '00:00:05'; -- wait for 5 seconds, every 100 items (to allow for OpenAI API rate limiting)

end

END

Now we have a products table with data about each product and enriched with embedding vectors.

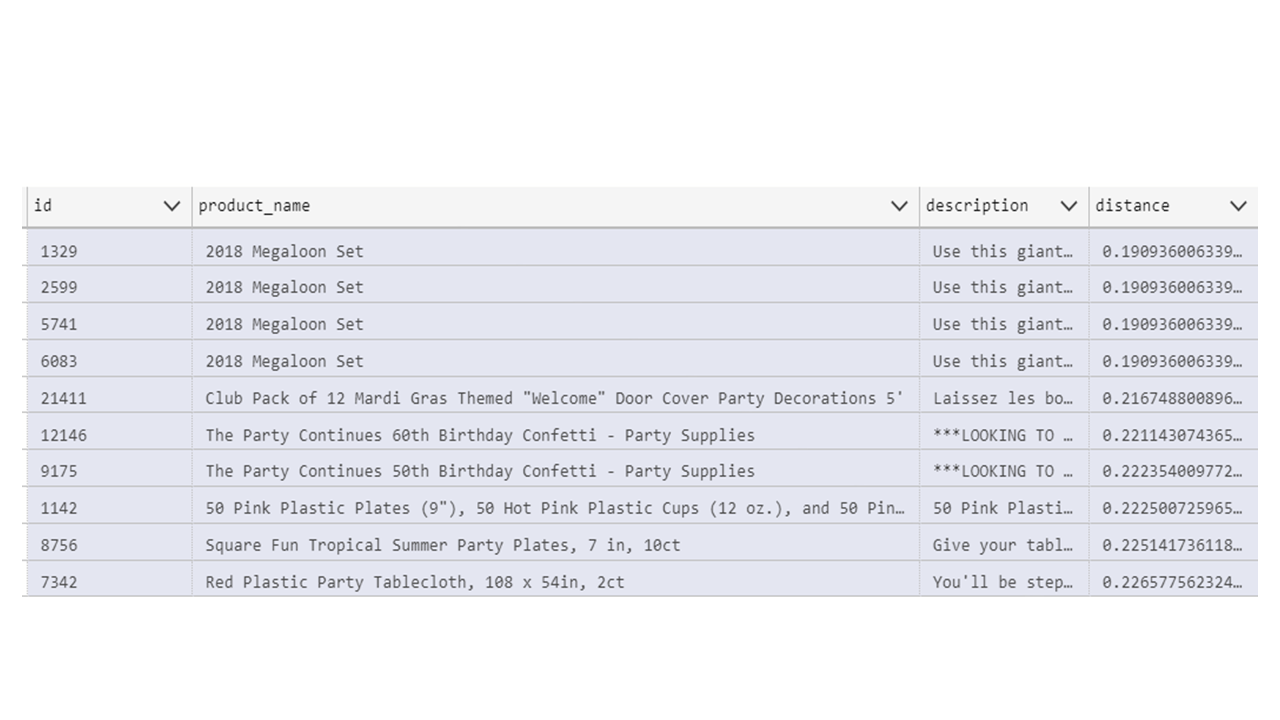

Step 2.2: Find products that are “similar” to our query

To find “similar” products, we will first create a vector embedding that represents our search text. We will do that using the create_embeddings stored procedure we created above.

Then, we will compare the vector embedding for our search text with the vector embeddings for the products in the table, using the vector distance function newly introduced in Azure SQL Database (Read more about the vector functions here. We used cosine distance in this example, you have other choices as well.). And pick the Top 10 products (we could pick top 20 or top 50 as well) that satisfy our search.

declare @search_text nvarchar(max) = 'help me plan a high school graduation party'

declare @search_vector varbinary(8000)

exec dbo.create_embeddings @search_text, @search_vector output;

SELECT TOP(10)

id, product_name, description,

vector_distance('cosine', @search_vector, product_description_vector) AS distance

FROM [dbo].[walmart_product_details]

ORDER BY distance

The results returned:

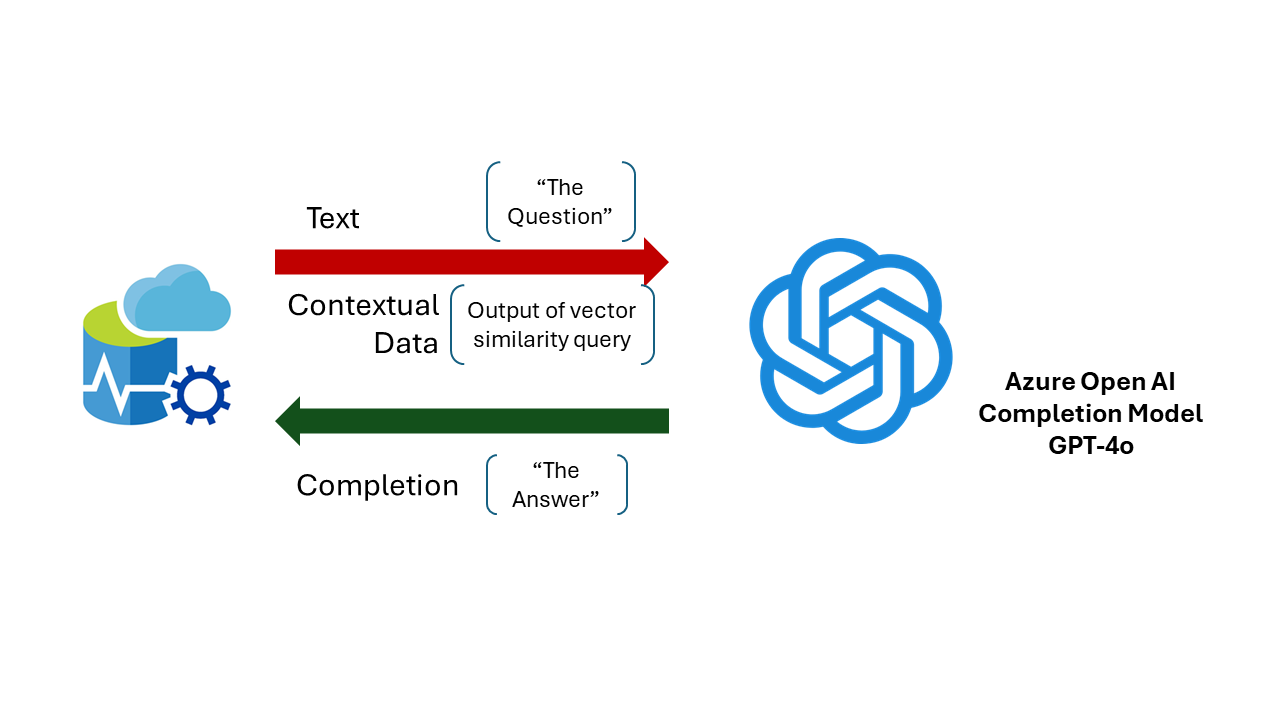

Step 3: Reason with your data and synthesize

Instead of returning the Top 10 rows we obtained above, we will use the LLM to reason over it and provide us with synthesized data for our query.

To do this, we will use the deployment Completions API with GPT-4o model we used at the beginning of this article, and pass our search query and the top 10 output we have obtained above. We will also pass to the LLM an explanation of what data will be provided and how it should be used and how it the LLM should behave. Again we will use the immensely powerful and useful stored procedure sp_invoke_external_rest_endpoint we used earlier.

declare @search_text nvarchar(max) = 'help me plan a high school graduation party'

declare @search_vector varbinary(8000)

exec dbo.create_embeddings @search_text, @search_vector output;

declare @search_output nvarchar(max);

select

@search_output = string_agg(cast(t.[id] as varchar(10)) +'=>' + t.[product_name] + '=>' + t.[description], char(13) + char(10))

from

(SELECT TOP(10)

id, product_name, description,

vector_distance('cosine', @search_vector, product_description_vector) AS distance

FROM [dbo].[walmart_product_details]

ORDER BY distance) as t

declare @llm_payload nvarchar(max);

set @llm_payload =

json_object(

'messages': json_array(

json_object(

'role':'system',

'content':'

You are an awesome AI shopping assistant tasked with helping users find appropriate items they are looking for the occasion.

You have access to a list of products, each with an ID, product name, and description, provided to you in the format of "Id=>Product=>Description".

When users ask for products for specific occasions, you can leverage this information to provide creative and personalized suggestions.

Your goal is to assist users in planning memorable celebrations using the available products.

'

),

json_object(

'role':'user',

'content': '## Source ##

' + @search_output + '

## End ##

Your answer needs to be a json object with the following format.

{

"answer": // the answer to the question, add a source reference to the end of each sentence. Source reference is the product Id.

"products": // a comma-separated list of product ids that you used to come up with the answer.

"thoughts": // brief thoughts on how you came up with the answer, e.g. what sources you used, what you thought about, etc.

}'

),

json_object(

'role':'user',

'content': + @search_text

)

),

'max_tokens': 800,

'temperature': 0.7,

'frequency_penalty': 0,

'presence_penalty': 0,

'top_p': 0.95,

'stop': null

);

declare @retval int, @response nvarchar(max);

exec @retval = sp_invoke_external_rest_endpoint

@url = 'https://<endpoint>/openai/deployments/<deployment name>/chat/completions?api-version=2024-05-01-preview', -- Completions API

@headers = '{"Content-Type":"application/json"}',

@method = 'POST',

@credential = <credential>,

@timeout = 120,

@payload = @llm_payload,

@response = @response output;

select @retval as 'Return Code', @response as 'Response';

select [key], [value]

from openjson((

select t.value

from openjson(@response, '$.result.choices') c cross apply openjson(c.value, '$.message') t

where t.[key] = 'content'

))

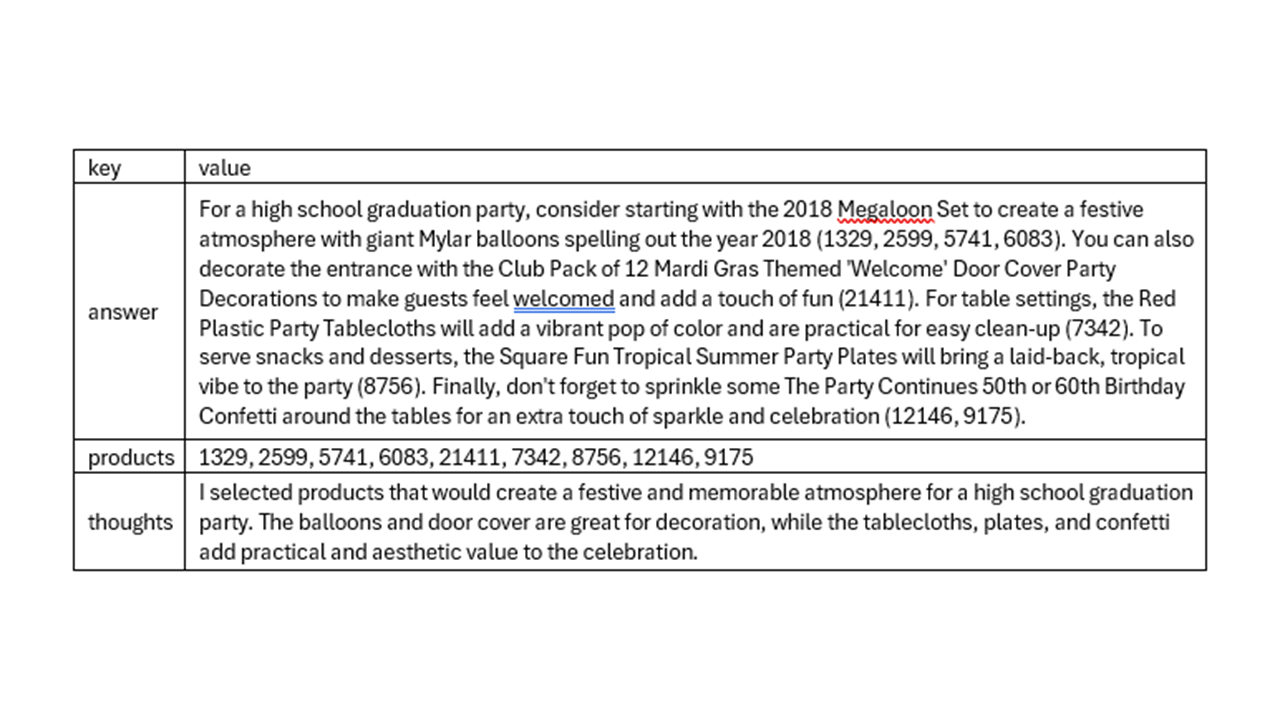

Observe the response from the LLM.

- It gives recommendations for specific products in the store.

- The product IDs are actual product ids from the database that you find in the store.

Using “your data” in the search definitely improved the relevance of the output and enhanced customer experience.

In summary, the fusion of Generative AI with Azure SQL Database is not just about building applications; it’s about creating experiences that are as unique as your data. It’s time to step into the future and redefine what’s possible with AI, and your business data. After all, AI is the equalizer, and your data is true differentiator!

Call To Action

- Sign up for the Private Preview of vector capabilities in Azure SQL Database: https://aka.ms/azuresql-vector-eap

- Apply the techniques learned here to your data

- Bonus exercises

- Filter the products that are only available in a store in your zipcode

- Generate a cost estimate for the all the items recommended by the application

- I used T-SQL to build this. If your favorite programming language is, say Python, you can build using Python as well. Try it out.

- I didn’t show the step to load the downloaded file into Azure SQL Database. It is an interesting exercise in itself. Try it out.

- Try this out for the next occasion for your family, say Father’s Day, or Grandma’s Birthday

- Check the SQL AI samples at aka.ms/sqlaisamples. The code in this article will be uploaded there soon.

References

[1] Walmart unveils new generative AI-powered capabilities for shoppers and associates

[2] Walmart Keynote at CES 2024! (The whole video is very exciting. If you want to skip to the part that inspired the example used in this blog, skip to 29:10).

[4] sp_invoke_external_rest_endpoint

[5] Vector Support in Azure SQL Database

Phenomenal job, Sanjay! Very clear, powerful use case and straight implementation. I’m going to implement and test Immediately!