Since the release of the vector preview, we’ve been working with many customers who are building AI solutions on Azure SQL and SQL Server, and one of the most common questions is how to support high-dimensional data, for example more than 2000 dimensions per vector. At the moment, the vector type supports “only” up to 1998 dimensions per embedding. One impression this limitation may give is that you cannot use the latest and greatest embedding model offered by OpenAI, and also available in Azure, text-embedding-3-large, as it returns 3072 dimensions.

Well, that’s not the case. I would even add that using such a high number of dimensions does not give you much benefit compared to the costs that come with it. Let me show you why.

Embedding dimensions and MTEB Benchmark

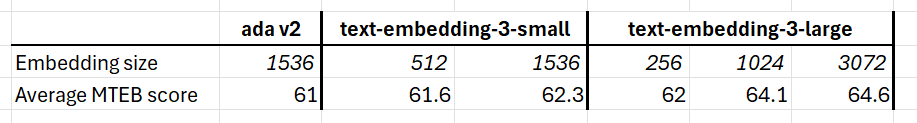

“MTEB is a massive benchmark for measuring the performance of text embedding models on diverse embedding tasks,” as stated here: https://huggingface.co/blog/mteb. Taking a look at the published leaderboard, and filtering it for the models available from OpenAI, just to compare something that can be easily used by everyone, you can see very interesting results:

As you can see, the average performance is very similar, and it is interesting to note that text-embedding-3-large can be asked to return only 256 dimensions instead of the default 3072 while still performing very well. Great performance with 1/12 of the resource usage. That’s quite a deal!

Setting Dimensions Count

As described in the OpenAI text-embedding-3 model release article, with those models “developers can shorten embeddings (i.e. remove some numbers from the end of the sequence) without the embedding losing its concept-representing properties by passing in the dimensions API parameter. For example, on the MTEB benchmark, a text-embedding-3-large embedding can be shortened to a size of 256 while still outperforming an unshortened text-embedding-ada-002 embedding with a size of 1536.”

Details can be found in the “Native support for shortening embeddings” section of the article “New embedding models and API updates” published by OpenAI in January 2024.
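To make the “remove some numbers from the end of the sequence” idea concrete, here is a minimal Python sketch of shortening a vector by hand: keep the first values and rescale the result to unit length so that cosine and dot-product comparisons still behave as expected. The function name `shorten_embedding` and the toy vector are hypothetical, and whether this reproduces exactly what the service returns when you pass `dimensions` is an assumption you should verify against real API output.

```python
import math


def shorten_embedding(embedding, dimensions):
    """Keep the first `dimensions` values and rescale them to unit length.

    Hypothetical helper for illustration only; not part of any SDK.
    """
    if not 0 < dimensions <= len(embedding):
        raise ValueError("dimensions must be between 1 and len(embedding)")

    # Truncate: drop everything after the first `dimensions` values.
    head = list(embedding[:dimensions])

    # Re-normalize: after truncation the vector is generally no longer unit length.
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # an all-zero vector cannot be normalized
    return [x / norm for x in head]


# Toy 4-dimensional vector standing in for a real embedding.
full = [3.0, 4.0, 12.0, 84.0]
short = shorten_embedding(full, 2)
print(len(short), short)
```

In practice it is simpler to let the model do the shortening through the `dimensions` parameter; the sketch is only meant to show why a shortened vector is cheaper to store and compare.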

To set the desired dimension count, you just have to pass the dimensions parameter in your payload. Here’s the sample code in T-SQL:

-- Text to turn into an embedding
declare @inputText nvarchar(max) = 'It''s fun to do the impossible.';

-- Request body: "dimensions" asks the model for a shortened embedding
declare @payload nvarchar(max) = json_object(
    'input': @inputText,
    'dimensions': 1024
);

declare @retval int, @response nvarchar(max);

exec @retval = sp_invoke_external_rest_endpoint
    @url = 'https://dm-open-ai-3.openai.azure.com/openai/deployments/text-embedding-3-large/embeddings?api-version=2023-03-15-preview',
    @method = 'POST',
    @credential = [https://dm-open-ai-3.openai.azure.com],
    @payload = @payload,
    @response = @response output;

-- The REST response body is returned under the "result" property
declare @re nvarchar(max) = json_query(@response, '$.result.data[0].embedding');

select cast(@re as vector(1024));

Please note that I’m using a DATABASE SCOPED CREDENTIAL, as explained in the sp_invoke_external_rest_endpoint documentation, to securely store and use Azure OpenAI API keys. Another, even better, option would be to use Managed Identities, so that you can forget about storing and protecting keys altogether.

Considerations on choosing the right model and dimension size

Setting 1024 dimensions seems to be a sweet spot for the text-embedding-3-large model: with 4 KB of space (1024 dimensions, each one using 4 bytes to store a single-precision float value) it gives pretty much the same performance as using 3072 dimensions, which would instead use 12 KB of space. More importantly, calculating the dot product or, even more so, the cosine distance will require far less computation, which in turn means less CPU usage and less power drain for practically the same results, as shown by the chart referenced in the OpenAI article mentioned before.
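The storage and compute figures in this section are simple arithmetic, and a short Python sketch makes the scaling easy to check. The helper names are hypothetical, the byte counts cover only the raw float values (any header the engine adds per vector is ignored), and the operation count is a rough model of a naive cosine distance rather than a measurement of how SQL Server actually computes it.

```python
BYTES_PER_FLOAT32 = 4  # single-precision float, as stated in the text


def vector_bytes(dimensions):
    """Raw payload size of one vector, ignoring any storage header."""
    return dimensions * BYTES_PER_FLOAT32


def cosine_multiply_adds(dimensions):
    """Rough cost model for a naive cosine distance.

    One dot product plus two squared norms: three multiply-adds per dimension.
    """
    return 3 * dimensions


for d in (256, 1024, 3072):
    kb = vector_bytes(d) / 1024
    print(f"{d:>5} dimensions: {vector_bytes(d):>6} bytes ({kb:g} KB), "
          f"~{cosine_multiply_adds(d)} multiply-adds per comparison")
```

Both quantities grow linearly with the dimension count, so going from 3072 to 1024 dimensions cuts storage and per-comparison work to a third, and going to 256 cuts them to a twelfth.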

It is important to understand that “latest and greatest” with embedding models might not be the best for your case, and deciding on the right model goes well beyond the simple dimension count. I strongly suggest taking a deeper look at the MTEB Leaderboard so that you can pick the best model taking all factors into account, from use case to dimension count through localization, resource usage, speed and quality. That way you can avoid overspending to get pretty much the same results.

Yes but…

But what if you really need more than 2K dimensions? We are aware of some fascinating use cases, particularly in machine learning workloads, where more than 10K dimensions are necessary. We’ve also received feedback about scenarios where each dimension value is just a bit (binary quantization). Additionally, some embedding models are even reaching up to 4K dimensions. We’re exploring all these options (and more) to ensure we prioritize correctly. Stay tuned for updates in 2025! If you have any feedback or use cases you’d like us to consider, please leave a comment below. Your input will help us shape vector support to provide the best balance between performance, ease of use, and practical application.

Great article, love the numbers as comparison. Thanks Davide!