Are you tired of spending countless hours building APIs from scratch? With Data API builder (DAB), you can create your API in just minutes! All you need to do is create a JSON configuration file to describe your database entities (tables, views, stored procedures, or collections) from your Azure SQL Database, SQL Server, Cosmos DB, PostgreSQL, or MySQL database.

Using containers with DAB provides a consistent, isolated, portable, and lightweight local development experience that makes team collaboration easier.

Once the local development is completed, one possible next step is to deploy the same solution consistently to Azure services such as Azure Container Apps and Azure SQL Database.

Quick recap

In Part 1 of our blog series, Build your APIs with DAB using Containers, we looked at how Data API builder (DAB) and containers work together in API development. DAB reads entities directly from a variety of databases and generates their CRUD operations automatically, while containers provide a portable, consistent, and lightweight local development environment that makes collaboration easier and smooths the later move to Azure services.

We explored several ways to run the DAB engine: the CLI for efficiency, the source code for full control, and containers for convenience. A Library demo app showed the setup in practice, with SQL Server 2022 storing the data and the DAB-Library container providing REST and GraphQL endpoints. We also outlined the prerequisites and setup steps, laying the foundation for the implementation shown in that post.

As Part 1 concludes, we set the stage for Part 2, where we will explore orchestrating a containerized DAB-enabled solution using Docker Compose.

Putting it all together with Docker Compose

Docker Compose provides a more convenient and maintainable way to define, manage, and run multi-container applications. By using a single YAML configuration file (docker-compose.yml), you encapsulate the configuration (dependencies, network, volumes) for both the SQL Server and DAB containers, making it easier to share, version control, and reproduce the entire environment.

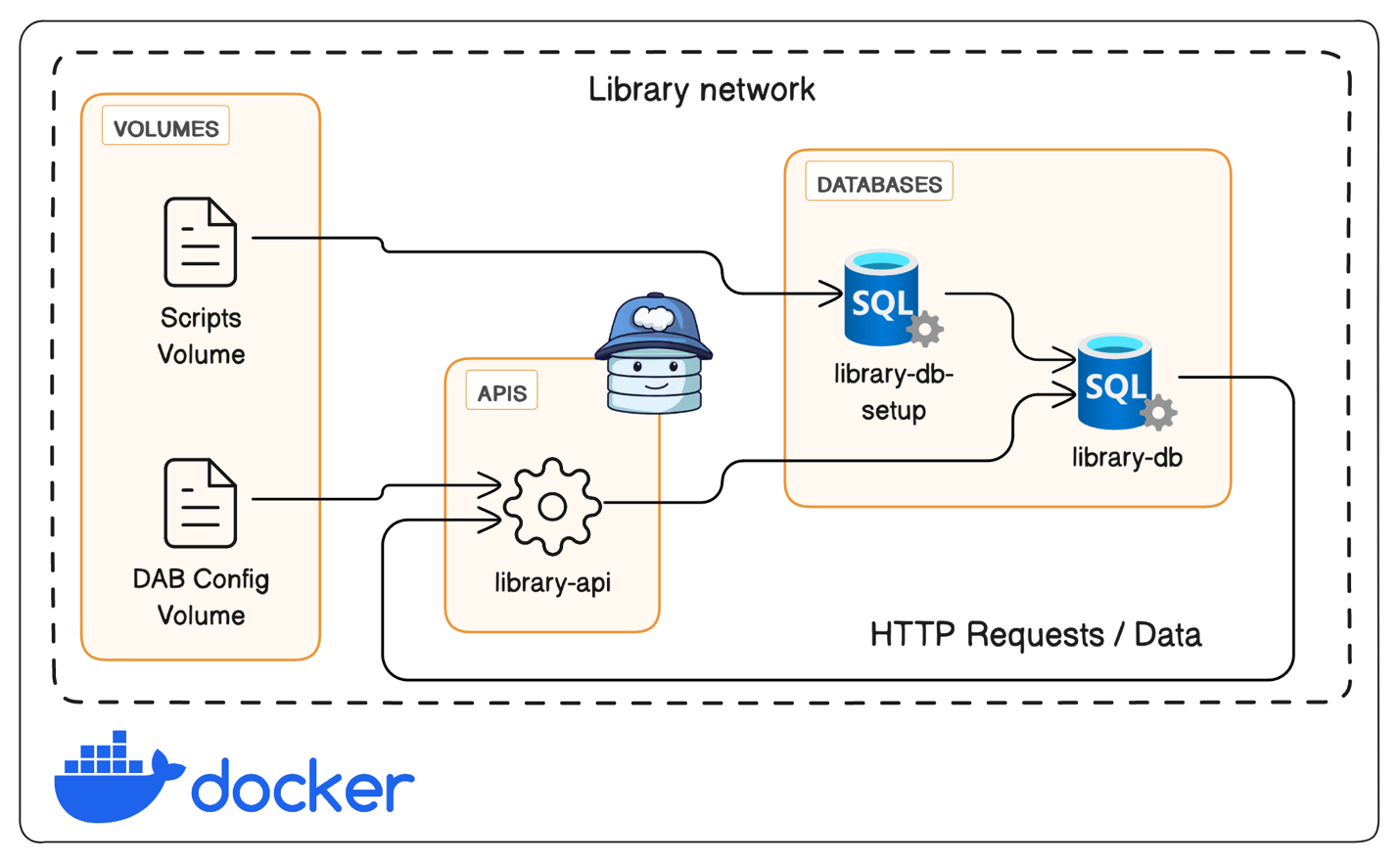

Before diving into the specifics of how the Library demo uses Docker Compose, let’s first examine the diagram below, which illustrates the container architecture for this follow-up blog post.

This diagram shows the architecture of a Dockerized application that runs within a network called library-network. The application consists of three main components: volumes, APIs, and databases. The volumes store data or files needed by the application, such as the SQL scripts and the configuration file for DAB. The API layer, built with Data API builder, handles HTTP requests (REST / GraphQL) and data operations inside this network. The database, running on Microsoft SQL Server, stores and manages the library data.

We deploy all these components as Docker containers, defining and configuring them as services in a Docker Compose file as follows:

```yaml
version: '3.8'

services:
  library-db:
    image: mcr.microsoft.com/mssql/server:2022-latest
    hostname: SQL-Library
    container_name: SQL-Library
    environment:
      ACCEPT_EULA: "Y"
      SA_PASSWORD: ${SA_PASSWORD}
    ports:
      - "1401:1433"
    networks:
      - library-network
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "/opt/mssql-tools/bin/sqlcmd -S localhost -U sa -P ${SA_PASSWORD} -Q 'SELECT 1' || exit 1"]
      interval: 10s
      retries: 10
      start_period: 10s
      timeout: 3s

  library-db-setup:
    image: sqlcmd-go-scratch
    hostname: SQL-Config
    container_name: SQL-Config
    volumes:
      - ./Scripts:/docker-entrypoint-initdb.d
    networks:
      - library-network
    depends_on:
      library-db:
        condition: service_healthy
    command: sqlcmd -S SQL-Library -U sa -P ${SA_PASSWORD} -d master -i docker-entrypoint-initdb.d/library.azure-sql.sql -e -r1

  library-api:
    image: mcr.microsoft.com/azure-databases/data-api-builder:latest
    container_name: DAB-Library
    volumes:
      - "./DAB-Config:/App/configs"
    ports:
      - "5001:5000"
    env_file:
      - "./DAB-Config/.env"
    networks:
      - library-network
    depends_on:
      library-db-setup:
        # Wait until the one-shot setup container exits with code 0,
        # not merely until it has started.
        condition: service_completed_successfully
    command: ["--ConfigFileName", "/App/configs/dab-config.json"]
    restart: unless-stopped

networks:
  library-network:
    driver: bridge
```

Let’s take a closer look at each service.

library-db

This service creates the SQL-Library container using Microsoft SQL Server 2022’s latest container image for Ubuntu 20.04. The ${SA_PASSWORD} reference in the environment section is populated from an environment variable at runtime: when you run docker compose up with SA_PASSWORD=<value> set in your shell (for example, as a prefix to the command), Compose substitutes that value into the configuration.

In this context, ${SA_PASSWORD} serves as a placeholder, so sensitive information such as the SQL Server system administrator (SA) password is never hard-coded in the Docker Compose file. Keeping the credential out of the file means it isn’t committed to version control along with the configuration and is supplied only when the containers start.
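If you’d rather not type the password on every run, Docker Compose also reads a file named .env in the project directory (next to docker-compose.yml) and uses its values for ${...} substitution. The sketch below generates such a file; the default password is only the demo placeholder used later in this post, and the .gitignore step assumes the project lives in a git repository.

```shell
#!/usr/bin/env bash
# Sketch: write a .env file that Docker Compose reads automatically
# for ${SA_PASSWORD} substitution. The default value is a placeholder.
set -euo pipefail

sa_password="${SA_PASSWORD:-P@ssw0rd!}"   # replace with your own password

# Unquoted heredoc delimiter, so ${sa_password} is expanded into the file.
cat > .env <<EOF
SA_PASSWORD=${sa_password}
EOF
chmod 600 .env   # readable and writable only by the current user

# Keep the secret out of version control (assumes a git repository).
grep -qxF '.env' .gitignore 2>/dev/null || echo '.env' >> .gitignore
```

With the file in place, a plain docker compose up -d picks up the password without the inline prefix. A value exported in your shell still takes precedence over the one in .env.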

To enable connectivity from the host, we map port 1401 on the host to port 1433 in the container, where the SQL Server instance listens for connections, so requests arriving at localhost:1401 are forwarded to port 1433 inside the container. The service also joins the Docker Compose network named library-network, so other services on that network reach it directly by its hostname and container port (SQL-Library on 1433), as the library-db-setup command does.

Finally, the health check lets Docker track whether this service is actually available to serve queries, not just whether its container is running. In this configuration, the health check uses the sqlcmd utility to run a lightweight query, SELECT 1, against the local SQL Server instance, authenticating with the SA credentials supplied through ${SA_PASSWORD}. If the command fails, meaning the server cannot be reached or cannot process the query, that check fails; after 10 consecutive failures (the retries setting), Docker marks the container as unhealthy.
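To watch this health check from the host, you can poll the status Docker records for the container. The helper below is a minimal sketch; wait_for_healthy is a hypothetical name, not part of Docker or DAB, and it relies only on docker inspect and the .State.Health.Status field that Docker fills in for containers defining a health check.

```shell
#!/usr/bin/env bash
# Sketch: poll a container's Docker health status until it reports "healthy".
# Usage: wait_for_healthy <container-name> [max-attempts] [sleep-seconds]
wait_for_healthy() {
  local container="$1"
  local attempts="${2:-30}"
  local delay="${3:-2}"
  local status i
  for ((i = 1; i <= attempts; i++)); do
    # Health data exists only when the container defines a healthcheck;
    # errors (e.g. unknown container) are treated as an empty status.
    status="$(docker inspect --format '{{.State.Health.Status}}' "$container" 2>/dev/null || true)"
    if [[ "$status" == "healthy" ]]; then
      echo "$container is healthy"
      return 0
    fi
    echo "waiting for $container (status: ${status:-unknown}, attempt $i/$attempts)"
    sleep "$delay"
  done
  echo "$container did not become healthy" >&2
  return 1
}
```

For example, wait_for_healthy SQL-Library 30 2 checks every two seconds for up to a minute, mirroring what the library-db-setup service waits for through condition: service_healthy.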

library-db-setup

This service was designed to execute the SQL scripts that initialize the database without needing a full SQL Server instance in its container. To accomplish this goal, I created a custom container image named sqlcmd-go-scratch. Unlike traditional SQL Server images, which include the complete database engine and additional features, this custom image contains only the sqlcmd utility. Because it is built on the empty scratch base image rather than a full operating system, it omits unnecessary components and keeps the container’s footprint small.

This service’s command initializes the library database. The local ./Scripts folder is mounted into the container at /docker-entrypoint-initdb.d, so on startup sqlcmd connects to the SQL-Library container with the provided connection parameters and executes the script at docker-entrypoint-initdb.d/library.azure-sql.sql. The -e flag echoes each statement as it runs, and -r1 redirects error-message output to stderr.

The service depends on the library-db service, which is the main database service for the library-network. It uses the condition: service_healthy option to specify that it should only start after the library-db service passes its health check test.

The library-db-setup service runs the command only once, and then exits. It does not need to be restarted or kept running, as its only purpose is to initialize the library database.
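Because this container is expected to stop, a quick way to confirm that initialization worked is to inspect its final state and exit code. The function below is a hypothetical helper (check_setup_exit is our name); the default container name SQL-Config comes from the compose file, and it uses only the .State.Status and .State.ExitCode fields reported by docker inspect.

```shell
#!/usr/bin/env bash
# Sketch: confirm the one-shot setup container finished successfully.
# Exit code 0 means sqlcmd ran the initialization script without errors.
check_setup_exit() {
  local container="${1:-SQL-Config}"
  local state code
  state="$(docker inspect --format '{{.State.Status}}' "$container")" || return 2
  code="$(docker inspect --format '{{.State.ExitCode}}' "$container")" || return 2
  if [[ "$state" != "exited" ]]; then
    echo "$container is still $state"
    return 1
  fi
  if [[ "$code" == "0" ]]; then
    echo "$container completed successfully"
    return 0
  fi
  echo "$container failed with exit code $code; check: docker logs $container" >&2
  return 1
}
```

If the check fails, docker logs SQL-Config shows the statements echoed by -e together with any SQL errors.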

library-api

The library-api service provides access to the library’s API layer over HTTP, through REST and GraphQL endpoints. It uses the latest Data API builder (DAB) container image, which requires a configuration file that sets the database connection string and defines the API entities. To that end, this service uses both the DAB configuration file and the .env file located in the ./DAB-Config directory. The directory is mounted as a volume at /App/configs, so the DAB engine running in the container can read its configuration, while the .env file supplies the container’s environment variables through env_file.
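The post doesn’t show the contents of ./DAB-Config/.env, so the sketch below is an assumption: it writes the connection string into a hypothetical DATABASE_CONNECTION_STRING variable, which dab-config.json would then reference with DAB’s @env('DATABASE_CONNECTION_STRING') syntax. The database name Library and the TrustServerCertificate setting are also assumptions about this demo. Note that inside library-network the API reaches SQL Server through the hostname SQL-Library on container port 1433, not the host port 1401.

```shell
#!/usr/bin/env bash
# Sketch: generate ./DAB-Config/.env for the library-api service.
# DATABASE_CONNECTION_STRING is a hypothetical variable name; dab-config.json
# would need to reference it as "@env('DATABASE_CONNECTION_STRING')".
set -euo pipefail

sa_password="${SA_PASSWORD:-P@ssw0rd!}"   # placeholder; must match library-db
mkdir -p DAB-Config

# Services on library-network connect via the SQL-Library hostname and the
# container port 1433; the host-side mapping (1401) is not involved here.
cat > DAB-Config/.env <<EOF
DATABASE_CONNECTION_STRING=Server=SQL-Library,1433;Database=Library;User ID=sa;Password=${sa_password};TrustServerCertificate=True
EOF
```

Generating the file from the same SA_PASSWORD value used by docker compose up keeps the API’s credentials in sync with the database container.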

Furthermore, we establish a clear dependency with the library-db-setup service to ensure the database is fully initialized before the API service starts. As explained before, this other service is responsible for setting up the database schema and seeding initial data, ensuring that the API service operates on a fully functional database environment when the DAB engine starts.

In terms of connectivity, this service exposes port 5000 inside the container, which is the default port for DAB. To enable external access, the service maps this port to port 5001 on the host machine, so users can reach the library’s REST and GraphQL endpoints at localhost:5001.
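To check the port mapping from the host, you can request each REST endpoint and look only at the HTTP status code. The function below is a minimal sketch; check_endpoint and BASE_URL are hypothetical names, and BASE_URL defaults to the localhost:5001 address produced by the "5001:5000" mapping.

```shell
#!/usr/bin/env bash
# Sketch: smoke-test DAB through the host port mapping (5001 -> 5000).
BASE_URL="${BASE_URL:-http://localhost:5001}"

check_endpoint() {
  local path="$1"
  local code
  # -o /dev/null discards the body; -w prints only the HTTP status code.
  code="$(curl -s -o /dev/null -w '%{http_code}' "${BASE_URL}${path}")"
  if [[ "$code" == "200" ]]; then
    echo "OK   ${path}"
  else
    echo "FAIL ${path} (HTTP ${code})" >&2
    return 1
  fi
}
```

For example, check_endpoint /api/Book && check_endpoint /api/Author should print two OK lines once the stack is up; a status of 000 means nothing answered on port 5001.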

Overall, the library-api service encapsulates the essential components required to provide a robust and accessible API interface for interacting with the library’s database within the Docker Compose architecture.

Initiating Docker Containers with Docker Compose

Now that we understand the basics of each service defined in the Library demo’s Docker Compose file, let’s start all the services as follows:

```shell
SA_PASSWORD=P@ssw0rd! docker compose up -d
```

This command deploys the Docker containers as specified in the docker-compose.yml file. Because SA_PASSWORD=P@ssw0rd! prefixes the command, the variable is set for this invocation, and Compose substitutes it wherever ${SA_PASSWORD} appears, which configures the SA password for the SQL Server instance.

The docker compose up -d command starts the services defined in the configuration file, and the -d flag runs the containers in detached mode, in the background, so your terminal stays free while the services keep running.

After running the Docker Compose command, you’ll see that the network and all containers are reported with the “Created” status, except for the SQL-Library container, which is shown as “Started”.

This behavior is intentional: the library-db service includes a health check, and the other containers wait for it, directly or indirectly, before starting. The health check signals that the SQL Server engine inside the container is running and ready to accept connections.

After a few seconds, once the health check passes, the status of the SQL-Library service changes to “Healthy”. At the same time, the SQL-Config container changes to “Started”, which means that deploying the schema and loading data into the Library database in the SQL-Library container has begun.

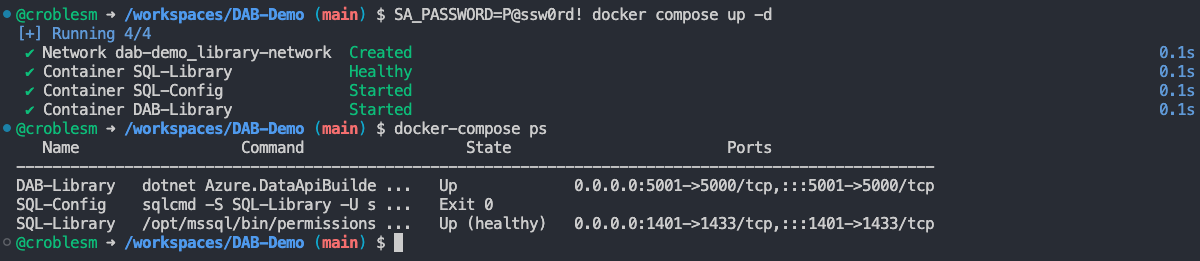

Once the database initialization process is completed, you will get your prompt back. You can check the status of all services at any time with the docker compose ps -a command; the -a flag includes stopped containers such as SQL-Config.

Note that the DAB-Library and SQL-Library containers are up and running, while the SQL-Config container has exited with code 0. This indicates that the container’s command completed without errors; in this case, that command ran the SQL script that creates the Library database, defines its schema, and loads its data.

Testing REST and GraphQL endpoints

As we did in Part 1, now that our Docker Compose services are ready, we can run a series of tests from the command line or a web browser to make sure our REST and GraphQL endpoints respond as expected.

Here are a few examples of how to test both endpoints from the command line using curl:

```shell
# Testing DAB health
curl -v http://localhost:5001/

# Testing Book and Author REST endpoints
curl -s http://localhost:5001/api/Book | jq .
curl -s http://localhost:5001/api/Author | jq .

# Testing DAB REST endpoints with jq filters
curl -s 'http://localhost:5001/api/Book?$first=2' | jq '.value[] | {id, title}'
curl -s 'http://localhost:5001/api/Author' | jq '.value[1] | {id, first_name, last_name}'

# Testing DAB GraphQL endpoint
curl -s -X POST -H "Content-Type: application/json" -d '{"query": "{ books(first: 2, orderBy: {id: ASC}) { items { id title } } }"}' http://localhost:5001/graphql | jq .
```
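Typing the JSON body by hand for every GraphQL query gets tedious, so a small wrapper can build it for you. dab_graphql below is a hypothetical helper, and GRAPHQL_URL defaults to the endpoint used above. It embeds the query into the JSON body without escaping, so it only works for queries without double quotes or backslashes, like the one above; jq -n --arg q "$query" '{query: $q}' would escape arbitrary queries safely at the cost of requiring jq inside the helper.

```shell
#!/usr/bin/env bash
# Sketch: send a GraphQL query to DAB and print the raw JSON response.
# Limitation: the query is inserted into the JSON body as-is, so it must
# not contain double quotes or backslashes.
GRAPHQL_URL="${GRAPHQL_URL:-http://localhost:5001/graphql}"

dab_graphql() {
  local query="$1"
  curl -s -X POST \
    -H "Content-Type: application/json" \
    -d "{\"query\": \"${query}\"}" \
    "$GRAPHQL_URL"
}
```

For example, dab_graphql '{ authors(first: 2, orderBy: {id: ASC}) { items { id first_name last_name } } }' | jq . should list two authors, assuming DAB exposes the Author entity as authors (its default plural) with the same field names returned by the REST endpoint.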

With our REST and GraphQL endpoints tested, we have a working API layer on top of the Library database. Let’s move on to the conclusion, where we’ll sum up our findings and look ahead to what’s next with Data API builder and Docker Compose.

Conclusion

In this second part of our blog series, we examined the seamless integration of Data API Builder (DAB) with Docker Compose, facilitating rapid API development.

With Docker Compose, we saw firsthand how orchestrating containers simplifies deployment while keeping the environment reproducible and easy to manage. We also kept sensitive data, such as the SA password, out of the configuration files by passing it through environment variables, which adds an extra layer of protection.

Looking ahead, our journey will venture into advanced deployment strategies encompassing Dev Containers and Azure services. These topics aim to provide valuable insights for both seasoned developers and newcomers to containerized workflows, offering practical guidance for optimizing API development with DAB and containers.

Stay tuned as we continue to unravel the possibilities and intricacies of modern API development in our upcoming articles!
