Guest Post

Larry Silverman is the Chief Technology Officer at TrackAbout, Inc. (a Datacor company) and a long-time user of Azure SQL Database and SQL Server. In this blog post Larry shares a novel open-source solution which addresses a core need for their business. Thanks, Larry!

TrackAbout is a worldwide provider of SaaS applications for tracking reusable, durable, physical assets like chemical containers and gas cylinders. With over 22 million physical assets tracked across 350 customers, each with their own Azure SQL database, optimizing our infrastructure for both cost and performance is a critical, ongoing mission.

Our journey with Microsoft technologies goes back to our founding in 2002. We started by racking our own servers, moved to managed hosting, and finally migrated to Azure in 2016 to gain more control and scalability. A core part of our architecture today is Azure SQL Database elastic pools.

When Azure SQL Hyperscale elastic pools were announced, we were intrigued. The promise of scaling up and down in just a few minutes seemed ideal for our largest, most demanding customers. It offered potential improvements in both performance and cost. However, we discovered a key limitation: Microsoft didn’t provide built-in autoscaling for Hyperscale elastic pools.

That limitation led us to create AutoScaler, the open-source solution we’re sharing with you today. This post will walk you through our approach for auto-scaling Hyperscale elastic pools and show how you can use it to optimize your own Azure SQL Hyperscale deployments.

The Solution: The AutoScaler Azure Function

The AutoScaler is a timer-triggered Azure Function written in C#. It’s designed to be lightweight and efficient. A single instance can manage multiple elastic pools on the same Azure SQL Server, or you can run multiple instances of the function for different servers. You can find the code in our GitHub repo.

Key Features at a Glance

- Multi-Pool Management: A single instance of the AutoScaler can manage multiple elastic pools on a single Azure SQL Server.

- Configurable Scaling Logic: Tailor the scaling behavior to your specific workload patterns. You can skip steps to scale up faster if desired.

- Hysteresis-based Scaling: Avoids erratic scaling with a smart, two-window approach.

- Comprehensive Logging: Detailed logging provides a clear view of the AutoScaler’s actions. Optional logging to a SQL table is also available.

- Managed Identity Support: Securely connect to your Azure SQL resources using Azure Managed Identities.

How It Works: Metrics and Logic

Metrics Monitored

Instead of requiring a separate database to store metrics, the AutoScaler makes decisions using the historical data found in the sys.dm_elastic_pool_resource_stats view. This view provides about 40 minutes of historical readings, which is plenty of data to make informed scaling decisions. Scaling commands are automatically recorded in the Azure SQL server’s sys.dm_operation_status view, and also in the Azure Activity Log. We also provide an option to log scaling decisions and the data used to make those decisions to a SQL table for long-term analysis.

Based on our nine years of experience running elastic pools, we chose to monitor these four key metrics for our workload:

- Average CPU Percentage

- SQL Instance CPU Percentage

- Worker Percentage

- Data IO Percentage

Your workload might be different from ours. The function is extensible, so you could, for example, add monitoring for Log IO Percentage if that’s a bottleneck for your application.

Hysteresis-Based Scaling Logic

The core of the AutoScaler is its hysteresis-based scaling logic, which prevents “thrashing” (rapidly scaling up and down). We delay scaling decisions until metrics have remained above or below a threshold for a sustained period.

To do this, we measure averages over two sliding windows:

- A short window (e.g., 5 minutes) to detect sudden spikes.

- A long window (e.g., 15–30 minutes) to confirm a sustained change in load.
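As a rough illustration of the two-window measurement, the sketch below averages one metric over a short and a long lookback. It is written in Python for brevity and is not taken from the AutoScaler's C# source; the `Sample` type, the `window_average` helper, and the 5- and 30-minute windows are assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import fmean
from typing import Optional, Sequence


@dataclass(frozen=True)
class Sample:
    """One reading of a single metric (hypothetical shape, not the project's type)."""
    end_time: datetime
    value: float  # percentage, 0-100


def window_average(samples: Sequence[Sample], now: datetime,
                   window: timedelta) -> Optional[float]:
    """Average the readings whose end_time falls in (now - window, now].

    Returns None when the window holds no readings, so the caller can
    decline to make a scaling decision on missing data.
    """
    cutoff = now - window
    values = [s.value for s in samples if cutoff < s.end_time <= now]
    return fmean(values) if values else None


# Example: 30 one-minute readings, quiet at 10% until a spike to 90%
# over the most recent five minutes.
now = datetime(2025, 1, 1, 12, 0)
history = [Sample(now - timedelta(minutes=i), 90.0 if i < 5 else 10.0)
           for i in range(30)]

short_avg = window_average(history, now, timedelta(minutes=5))
long_avg = window_average(history, now, timedelta(minutes=30))
```

In this example the short window sees only the spike (an average of 90), while the long window is still dominated by the quiet period (roughly 23), which is exactly the case where a scaler would wait rather than act.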

When deciding whether to scale up, we check if a metric has breached its high threshold in the short window. But we don’t act immediately. We wait to see if the long-window average also exceeds the threshold. If only the short window is high, the scaler waits, as it might be a temporary burst.

When deciding whether to scale down, we require that all four metrics stay at or below their “low” thresholds across both the short and long windows before we reduce capacity.

This logic can be summarized as follows:

- Scale Up if, for any one of the four metrics, both the short-window and long-window averages are at or above that metric’s high threshold.

- Scale Down if, for all four metrics, both the short-window and long-window averages are at or below that metric’s low threshold.
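The two rules above can be expressed as a small decision function. This is a minimal Python sketch of the rule as stated in this post, not the AutoScaler's actual C# code; the type names, the metric keys, and the 30/75 thresholds are hypothetical values picked for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Mapping


class Decision(Enum):
    SCALE_UP = "scale_up"
    SCALE_DOWN = "scale_down"
    HOLD = "hold"


@dataclass(frozen=True)
class Thresholds:
    low: float
    high: float


@dataclass(frozen=True)
class WindowAverages:
    short: float
    long: float


def decide(averages: Mapping[str, WindowAverages],
           thresholds: Mapping[str, Thresholds]) -> Decision:
    if not averages:
        return Decision.HOLD  # no data, no change

    # Scale up: any single metric is at or above its high threshold in BOTH windows.
    if any(a.short >= thresholds[name].high and a.long >= thresholds[name].high
           for name, a in averages.items()):
        return Decision.SCALE_UP

    # Scale down: every metric is at or below its low threshold in BOTH windows.
    if all(a.short <= thresholds[name].low and a.long <= thresholds[name].low
           for name, a in averages.items()):
        return Decision.SCALE_DOWN

    return Decision.HOLD


# Hypothetical thresholds; the same pair is reused for every metric here.
limits = {name: Thresholds(low=30.0, high=75.0)
          for name in ("avg_cpu", "instance_cpu", "workers", "data_io")}
calm = {name: WindowAverages(short=10.0, long=12.0) for name in limits}

# A brief CPU burst: hot in the short window only.
burst = {**calm, "avg_cpu": WindowAverages(short=90.0, long=40.0)}
# Sustained CPU load: hot in both windows.
sustained = {**calm, "avg_cpu": WindowAverages(short=90.0, long=80.0)}
```

With these inputs, `decide(burst, limits)` holds, `decide(sustained, limits)` scales up, and `decide(calm, limits)` scales down. The gap between the low and high thresholds is what gives the hysteresis: a pool sitting between the two neither grows nor shrinks.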

You can configure much of this, including the high/low thresholds for each metric, the lookback windows, and the floor/ceiling vCore levels to ensure you stay within your budget.
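To make the floor, ceiling, and step-skipping ideas concrete, here is a hedged Python sketch of how a target pool size might be chosen. The `target_vcores` function, its parameters, and the `LADDER` of sizes are assumptions for illustration only; they are not the AutoScaler's settings or code, and the sizes available to you depend on your hardware series.

```python
from typing import Sequence

# Illustrative ladder of pool sizes in vCores; check the current Azure
# documentation for the sizes your hardware series actually offers.
LADDER = (4, 6, 8, 10, 12, 14, 16, 18, 20, 24, 32, 40, 80)


def target_vcores(current: int, direction: int, floor: int, ceiling: int,
                  steps: int = 1, ladder: Sequence[int] = LADDER) -> int:
    """Pick the next pool size, never leaving the [floor, ceiling] range.

    direction: +1 to scale up, -1 to scale down, 0 to stay put.
    steps: how many rungs to move at once (more than 1 skips sizes).
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    allowed = sorted(v for v in ladder if floor <= v <= ceiling)
    if direction > 0:
        candidates = [v for v in allowed if v > current]
    elif direction < 0:
        candidates = [v for v in reversed(allowed) if v < current]
    else:
        candidates = []
    if not candidates:
        return current  # already at the bound, or no change requested
    # Move `steps` rungs, stopping at the last rung inside the bounds.
    return candidates[min(steps, len(candidates)) - 1]
```

For example, with a floor of 6 and a ceiling of 24, `target_vcores(8, +1, 6, 24)` returns 10, the same call with `steps=2` returns 12, and a scale-up request at 24 vCores returns 24 because the ceiling has been reached.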

Getting Started

We’ve tried to make it as easy as possible to get up and running. The project’s GitHub repository has all the details, but here’s the gist:

- Deploy the Azure Function: The core of the AutoScaler. There’s a GitHub Actions workflow in the repo which demonstrates how to deploy.

- Configure Your Settings: Set up your connection strings, thresholds, and other parameters in the Function’s application settings.

- Set Permissions: Ensure the Function’s managed identity has the necessary permissions to monitor and scale your elastic pools.

We strongly encourage you to explore the docs, code and unit tests, and run your own load test to get comfortable with how the AutoScaler behaves in your environment. There are load testing instructions in the repo.

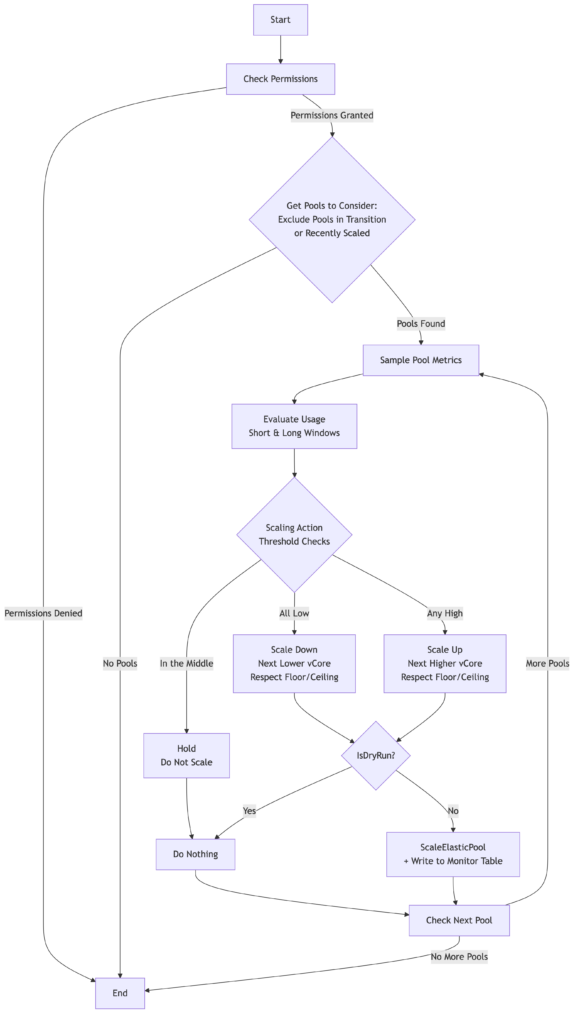

Logic Flowchart

The following flow chart illustrates the logic of the autoscaler (click to enlarge) each time the timer trigger fires.

Warning

AutoScaler works by invoking the standard Azure SQL elastic pool scaling APIs. When the pool scales, client connections are dropped. Your client code must be built for resiliency to handle this disconnect and retry interrupted transactions. Refer to the documentation for guidance on how to do this correctly. There are libraries that can help.
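As a generic illustration of what that resiliency can look like, the Python sketch below retries a unit of work with exponential backoff and jitter. It is an assumption-laden example, not guidance from the AutoScaler project: `TransientConnectionError` is a hypothetical stand-in for whatever error your database driver raises when a connection is dropped, and the attempt count and delays are arbitrary. In production you would more likely rely on an established retry library for your platform.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientConnectionError(Exception):
    """Hypothetical stand-in for a driver's 'connection dropped' error."""


def run_with_retries(operation: Callable[[], T], max_attempts: int = 5,
                     base_delay: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `operation`, retrying on transient connection failures.

    `operation` should open its own connection and run the whole
    transaction, so that a retry starts again from a clean state.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientConnectionError:
            if attempt == max_attempts:
                raise  # out of attempts; surface the error to the caller
            # Exponential backoff with jitter: 0.5s, 1s, 2s, ... plus noise.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay))
    raise ValueError("max_attempts must be at least 1")


# Example: an operation that fails twice (as if the pool were mid-scale)
# and then succeeds. Delays are recorded instead of slept.
calls = {"count": 0}


def flaky() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientConnectionError("connection dropped")
    return "committed"


waits: list = []
result = run_with_retries(flaky, sleep=waits.append)
```

The key design point is that the retried unit is the entire transaction, not a single statement, since a dropped connection rolls back any work that was in flight.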

Disclaimer

As with any cloud resource, Azure SQL elastic pools can incur very high costs if not used appropriately. You are solely responsible for any costs you incur by using this code. We strongly recommend that you study the project, review the unit tests, and run your own load tests to ensure the AutoScaler behaves in a manner you are comfortable with before deploying to production.

Join the Community

This project was inspired by Microsoft’s own past work on autoscaling, and we’re thrilled to contribute back to the community. We welcome your feedback, suggestions, and contributions. Check out the project on GitHub, and let’s work together to make it even better.

Happy Scaling,

Larry Silverman, Chief Technology Officer, TrackAbout, Inc.
