The RAG (Retrieval Augmented Generation) pattern, which is commonly discussed today, is based on the foundational idea that the retrieval part is done using vector search. This ensures that all the most relevant information available to answer the given question is returned and then fed to an LLM to generate the final answer.

While vector search is great for its specific use case – semantic search, which is approximate by nature – it fails when it comes to searching for precise questions, like “show me the latest 10 code samples” as that request can – and should – be answered precisely without the need for vector search.

Users are becoming more accustomed to working with AI on a daily basis. They are also starting to expect the AI to “understand” when a semantic answer is most appropriate or when a precise search is the right approach. RAG needs to be improved in order make that possible. Let’s see an option that can be implemented right away.

Hybrid (or Agentic) RAG

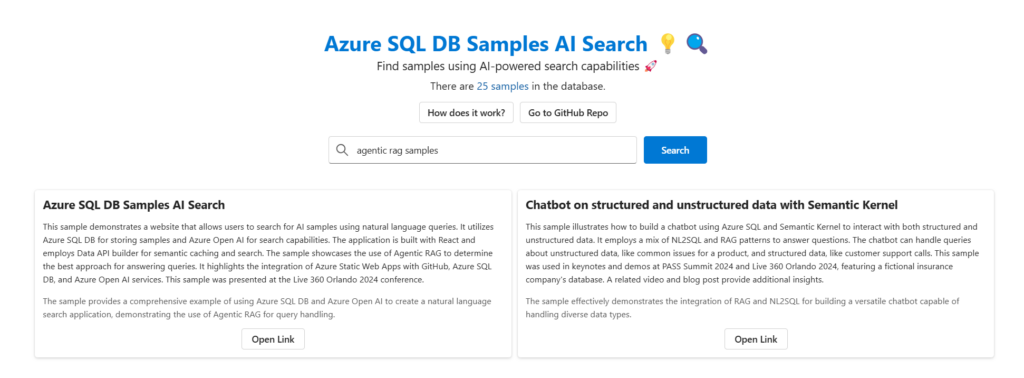

The idea is to improve the retrieval part so that it will not be limited to vector search only. I’ve been calling this technique “Hybrid RAG”, but since AI Agents are becoming popular and common, you may just want to put it in the “Agentic RAG” bucket, as you’ll learn in the next paragraphs. Name aside, to showcase the technique I’ll be using a sample demo I have created that allows you to search the samples using SQL and AI that we have been created in the last year. Try it out right away, by asking the website I’ve put together to show you all the samples related to the Agentic RAG that we have created so far. You can either ask for something that requires a semantic search:

https://ai.awesome.azuresql.dev/?q=agentic rag samples

or something precise like “show me the latest 5 samples created”, and the AI model will figure out the best way to answer. Then, it will return a list of examples along with an explanation of why they are returned.

Orchestrate the best approach

The first improvement needed is for the RAG pattern to determine if the question requires a semantic search or if a regular SQL query will suffice. To do that a LLM model like GPT is needed, as it will act as the orchestrator of the whole pattern. It must be given information about what is the database schema and what kind of data is available in there, so that it can figure out if that is enough to answer the question or it need to run do a semantic search. Here’s the prompt I’m using for the website mentioned above:

You are a SQL Server database assistant. You answer the questions providing the correct T-SQL query to get the result.

This is the database table you can use:

create table dbo.samples

(

[id] int identity primary key,

[created_on] datetime2(0) not null,

[updated_on] datetime2(0) not null

)

The use question is provided in the next message. If the user question cannot be answered using the dbo.samples table and using a T-SQL query only, you should respond with an empty string.

The generated T-SQL query must return a JSON document using the FOR JSON AUTO statement. Return the top 10 results if you can. Do not use semicolon to terminate the T-SQL statement.

You can generate only SELECT statements. If the user is asking something that will generate INSERT, UPDATE, DELETE, CREATE, ALTER or DROP statement, refuse to generate the query.

The outcome of this initial AI call is either a SQL query or nothing, if the answer cannot be provided with the given schema. The schema is very limited on purpose to make sure that only very precise questions, that perfectly fit the given schema, can be generated. If a question needs to look into sample descriptions, notes or properties, it should be not answered using a SQL query, but it should be answered using a semantic search, to provide a better search experience.

To make it simple to understand if the result is a SQL query or not, I’m using ai AI model that can return structured output, so that the result is well-know and well-defined JSON that can be easily parsed for the SQL query to be executed. The requested JSON has the following schema and instructions:

"type": "object",

"properties": {

"response_type": {

"type": "string",

"description": "SQL if a SQL query is provided, NONE if no SQL query is provided"

},

"sql_query": {

"type": "string",

"description": "SQL query to get the result"

}

},With the provided structured result, it is now possible to decide if vector search is needed or the provided query just needs to be executed.

Retrieve using vector search…

If the result came back without a SQL query to run, then it means that vector search is needed. There’s nothing really new here. The regular RAG pattern applies so the code will:

- Convert the user query into an embedding calling the proper AI embedding model

- Run vector search against all the sample information stored in the database, already vectorized (more on this in a future post as defining the “right” way to vectorize data requires a post on its own)

- Return the most similar samples

with SQL is as easy as running simple query:

select top(@k)

s.id, [name], [description], [url], [notes], [details],

least(

vector_distance('cosine', e.[embedding], @qv),

vector_distance('cosine', ne.[embedding], @qv),

vector_distance('cosine', de.[embedding], @qv)

) as distance_score

into

#s

from

dbo.samples s

inner join

dbo.samples_embeddings e on e.id = s.id

left join

dbo.samples_notes_embeddings ne on e.id = ne.id

left join

dbo.samples_details_embeddings de on e.id = de.id

order by

distance_score asc;

The embeddings for the sample description, notes and details are stored each one in its own table and then the closest match is taken using the least operator. The result is now ready to be sent to a GPT model again for the “augmented-generation” part of the RAG pattern.

…or retrieve using the generated SQL query

If the orchestrator came back with a SQL query, the is just a matter of executing it and returning the result in a JSON format so that it can be easily passed to the LLM that will take care of the “Augemented-Generation” part of the RAG pattern.

Of course it is always good to make sure that the generated query contains only a select statement and nothing else, just to be on the safe side. For even better security execution of generated SQL query could be done using a very limited user so that even if something malicious is included in the query text, no harm will be done. Make sure to check out the following security features:

Augmented-Generation: apply the rest of the pattern

Once the retrieval part is done, a JSON with all the potential in-scope results is send to the chosen LLM along with the prompt and the original user query. Nothing new on this side of the pattern. Here’s the prompt:

You as a system assistant who helps users find code samples the user can use to learn the topic they are interested in.

Samples are provided in an assistant message using a JSON Array with the following format: [{id, name, description, note, details, similiarity_score}].

Put in sample_summary output property a markdown short summary of the sample using the provided description, notes, and details.

Use only the provided samples to help you answer the question. Use only the information available in the provided JSON to answer the question.

Make sure to use details, notes, and description that are provided in each sample are used only with that sample.

If there are related links or repos in the details of a sample that is included in the answer, include them in the short summary.

Include links only if they are related to the sample and if they are available in the note or details of that sample.

If the question cannot be answered by the provided samples, you must say that you don't know.

If asked question is about topics you don't know, answer that you don't know.

If no samples are provided, say that you cannot answer as no samples have been found.

The samples to which the prompt refers are attached as JSON, that is now well understood by LLMs. To make it easier to process the LLM response using code, a structured output is needed again, so that results can be joined back with the data in the database and generate the final resulset:

select

s.id,

sr.result_position,

s.[name],

s.[description],

sr.sample_summary,

sr.thoughts,

s.[url],

s.distance_score

from

openjson(@response, '$.result.choices[0].message') with (

content nvarchar(max) '$.content'

) m

cross apply

openjson(m.content, '$.samples') with (

id int,

result_position int,

sample_summary nvarchar(max),

thoughts nvarchar(max)

) as sr

inner join

dbo.samples as s on s.id = sr.id

order by

sr.result_positionTo avoid overspending a layer of semantic caching is added, so that similar questions can be answered without having to make LLM calls. The final workflow is the following:

Try it out!

The sample is deployed here, https://ai.awesome.azuresql.dev/ as mentioned before. Also, the full source code is available. Please keep in mind that the whole example is using free tiers for pretty much everything, so you might get throttled if there are too many concurrent requests. You’ll get a 500 error. Just retry, or deploy everything in your subscription so that you don’t have to share resources with anyone else it will be much less likely that you’ll get throttled.

Future Improvements

There are a few clear improvements that can be made to the sample. It has been intentionally kept simple to serve as a starting point rather than comprehensive, production-ready solution. The goal is to provide you with a jumpstart, allowing you to apply the same concepts to your own data.

The first improvement is to use an orchestration framework like Semantic Kernel so that you don’t have to do everything in T-SQL. That will give you more options and flexibility, but it will also make your solution much more complex and not as easy applicable to existing applications. An example of what you can get is described and showcased in the “Ultimate Chabot” example (make sure to read the generated descriptions to find the links to the blog post and the related video!)

The second improvement would be to try to teach the LLM model how to generate embeddings and use the new vector functions so that, in theory, everything can be answered using NL2SQL. With this approach the LLM model would autonomously use AI-focused features, like new VECTOR_DISTANCE function to do similarity search and apply predicates at once. That would enable to solution to answer questions like “return all the samples on hybrid RAG that have been published in 2025″, which – today – can be solved by a query like

DECLARE @qv VECTOR(1536);

EXEC dbo.get_embeddings 'hybrid rag', @qv OUTPUT;

SELECT TOP(10)

se.id,

VECTOR_DISTANCE('cosine', description_embedding, @qv) as distance

FROM

dbo.samples_with_embeddings AS se

WHERE

YEAR(created_on) = 2025

ORDER BY

distanceToday you can have such result using a framework like Semantic Kernel as some grease elbow; it would be great to have everything available in T-SQL. That would simplify solution and architecture *a lot*.

Conclusion

In conclusion, the evolution of the RAG pattern into what we now call “Hybrid RAG” or “Agentic RAG” represents a significant advancement in the way we handle information retrieval and generation. By integrating both vector search and precise SQL queries, we can ensure that users receive the most accurate and relevant answers to their queries. This approach not only enhances the user experience but also sets a new standard for AI-driven search and retrieval systems.

The implementation of an orchestrator, such as a GPT model, to determine the appropriate retrieval method is a key innovation. It allows the system to intelligently decide whether a semantic search or a precise SQL query is needed, thereby optimizing the retrieval process. Additionally, the use of structured outputs and semantic caching further improves the efficiency and reliability of the system.

As AI continues to evolve, the potential for further improvements in the RAG pattern is immense. By leveraging frameworks like Semantic Kernel and exploring new ways to generate embeddings, we can push the boundaries of what is possible with AI-driven search and retrieval. The journey of enhancing RAG is just beginning, and the future holds exciting possibilities for making AI even more intuitive and powerful.

Happy coding!

0 comments