Kubernetes is designed to support an open platform to support a diverse variety of containerized workloads including both stateless and stateful and data-processing workloads. Technically any application can be containerized, it can be up and running in Kubernetes. As a cloud-native platform, Kubernetes is available to deploy cloud-native applications in any language of your choice. Working with BDC, you get more benefits on the top of Kubernetes.

This article will walk you through how to develop and deploy apps to Big Data Clusters ( BDC ).

BDC Native versus Kubernetes Native

Applications deployed on BDC are not only to benefit from numerous advantages, such as the computational power of the cluster but can also access massive data that is available on the cluster. This can be dramatically beneficial to increase the performance of the applications since it sits in the same area where the data lives. The natively supported application runtimes on SQL Server Big Data Clusters are as the following:

- Python – One of the most popular general programming languages for various personas for instance Data Engineers, Data Scientists, and DevOps engineers. It supports numerous scenarios such as data wrangling, automation, prototyping, to some extent. It also increasingly used to program enterprise-grade applications working in conjunction with web development frameworks such as Flask and Django to address different business requirements.

- R – Another popular programming language for Data Engineers and Data Scientists. Compared to Python, R is a programming language with more a specific focus on statistical computing and graphics.

- SQL Server Integration Services (SSIS) – high-performance data integration solutions for building and debugging ETL packages, it uses Data Transformation Services Package File Format (DTSX) which is an XML-based file format that stores the instructions for the processing of migrating data between databases and the integration of external data sources.

- MLeap – is a common serialization format and provides everything needed to execute and serialize SparkML pipelines and others that can then be loaded at runtime to process ML scoring tasks in near real-time and close to the data.

Find those of samples of entre level application that can be deployed in BDC on Github.

Develop and Deploy Application on BDC

One of the coolest things while working with BDC is we can start application development by creating an app skeleton by using azdata app init command which provides a scaffold with the relevant artifacts that are required for deploying an app. For instance, to start a python application, we can use the following command:

azdata app init --name hello --version v1 --template python

It creates a folder structure as the following:

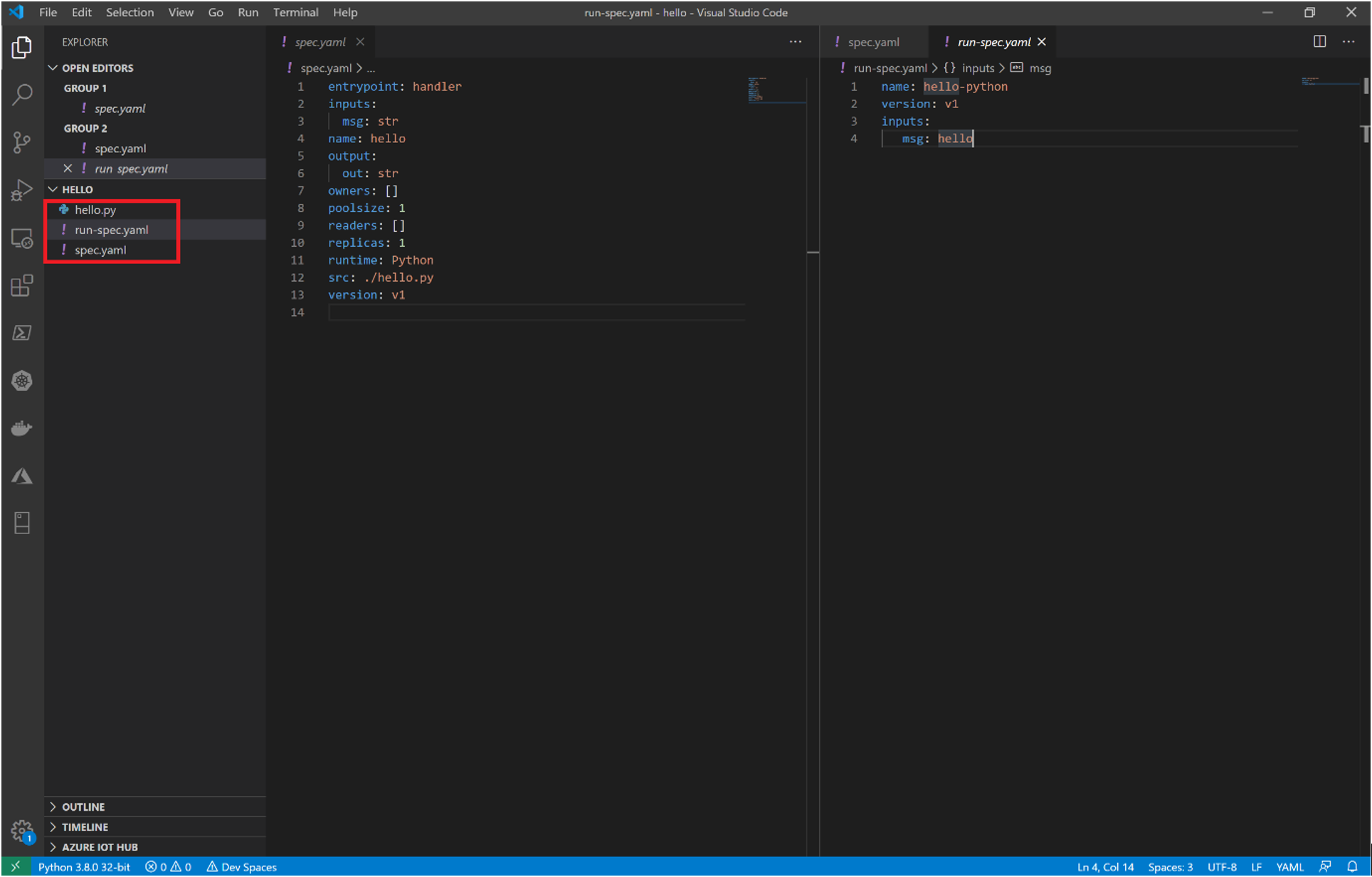

- hello.py where your python code lives. It can be another particular type of language kernel. It supports Python and R by far.

- run-spec.yaml contains everything app consumption definition given to the VS Code extension client. To know more about how to work with VS Code App Deploy extension, check here.

- spec.yaml contains everything the controller needs to know to successfully deploy the application in BDC.

If you open this folder with VS Code or any other IDE of your choice. You’d see a screenshot as the following screenshot:

Then we’ll deploy the sample application by using azdata app create command, and then specify the folder path to your spec.yaml file. In case you’re on Linux OS, you can use pwd command to get your current path :

azdata app create –-spec /path/to/your/spec.yaml

If you’re already in the folder where you have your spec.yaml file, use the following command:

azdata app create --spec .

After executing this command, you can expect the following output which means you have deployed an application successfully.

![]()

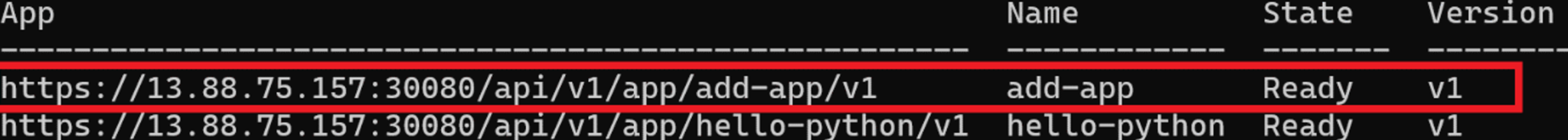

Let’s check all applications deployed in BDC using azdata app list command, notice that sqlbigdata is the name of your BDC cluster which is also the name of the namespace at this time:

azdata app list

If you have a similar output as follows with Ready state which means you have successfully deployed the application in BDC and it’s ready to be consumed via a respective endpoint.

Next Steps

Next article we’ll walk through how to develop and deploy Apps to SQL Server Big Data Clusters (BDC), please check: Working with big data analytics solution in Kubernetes Part 3 – Run and Monitor Apps in SQL Server Big Data Clusters (BDC). Let’s stay tuned!

0 comments