The Azure SDK team was formed in 2018 to develop a new generation of Azure client libraries, written in language-idiomatic ways that respect each ecosystem’s expectations for API design, dependencies, and other best practices. In 2020, we released a new Event Hubs client library built using these new Azure SDK design guidelines. Since then, we’ve received hundreds of pieces of customer feedback, which directly resulted in numerous improvements. Some of that feedback concerned the library’s reliability and performance compared to the legacy Event Hubs client library. Over the last two years, we devoted ourselves to debugging and fixing those reliability and performance issues. This blog post covers some of our efforts to drastically improve the reliability and performance of the Event Hubs library for Java.

Reliability

One of the core themes we investigated was reliability. A typical GitHub issue was “My EventProcessorClient stopped receiving events”. Customers would see the same symptom and similar exception call stacks, but the underlying causes differed.

Unlike other Azure services that expose REST endpoints, Event Hubs (and Service Bus) clients communicate with their respective Azure services using the AMQP protocol. AMQP requires a persistent, stateful connection that is used for the lifetime of the client. The “AMQP 1.0 in Azure Service Bus and Event Hubs protocol guide” provides additional information for readers interested in the specifics. The state of an AMQP connection can become corrupted through many different paths, leaving the client in a bad state that surfaces as, for example, “My processor stopped receiving events”. We relied heavily on customer logs to find the root cause of many of our reliability issues. These logs allowed us to observe each state in the client library’s recovery process and see where it failed or hung. We acknowledge that the logs customers have to collect are verbose, and we are continuously making improvements so that the state of the AMQP state machine can be understood without so much overhead. Our messaging SDKs support distributed tracing with OpenTelemetry, and we’re evaluating metrics support.

During this period, we fixed 34 bugs in the Azure Core library for AMQP (azure-core-amqp) and Azure Event Hubs client library (azure-messaging-eventhubs). Of these 34 bugs, 22 were reliability issues.

The gap between what customers experienced and what our integration tests exercised propelled us to create a stress/reliability suite that emulates the workload and flow of an existing enterprise customer. Before, customers would find that their applications hung after several days. With all of our improvements, our stress/reliability infrastructure, based on that enterprise customer’s workload of 330 million events/day, has continued running uninterrupted for the last few months!

Performance

Improving the Event Hubs client library was a complex journey, and it was much like peeling an onion. Fixing one issue often uncovered another! Additionally, we found that a couple of our fixes regressed the performance of our library. Customers rightfully expected our unified client library to be more performant than the legacy client library it replaced. We onboarded the library to our unified performance framework to provide objective data on improvements. Our next steps are to automate the performance runs to ensure regressions don’t occur.

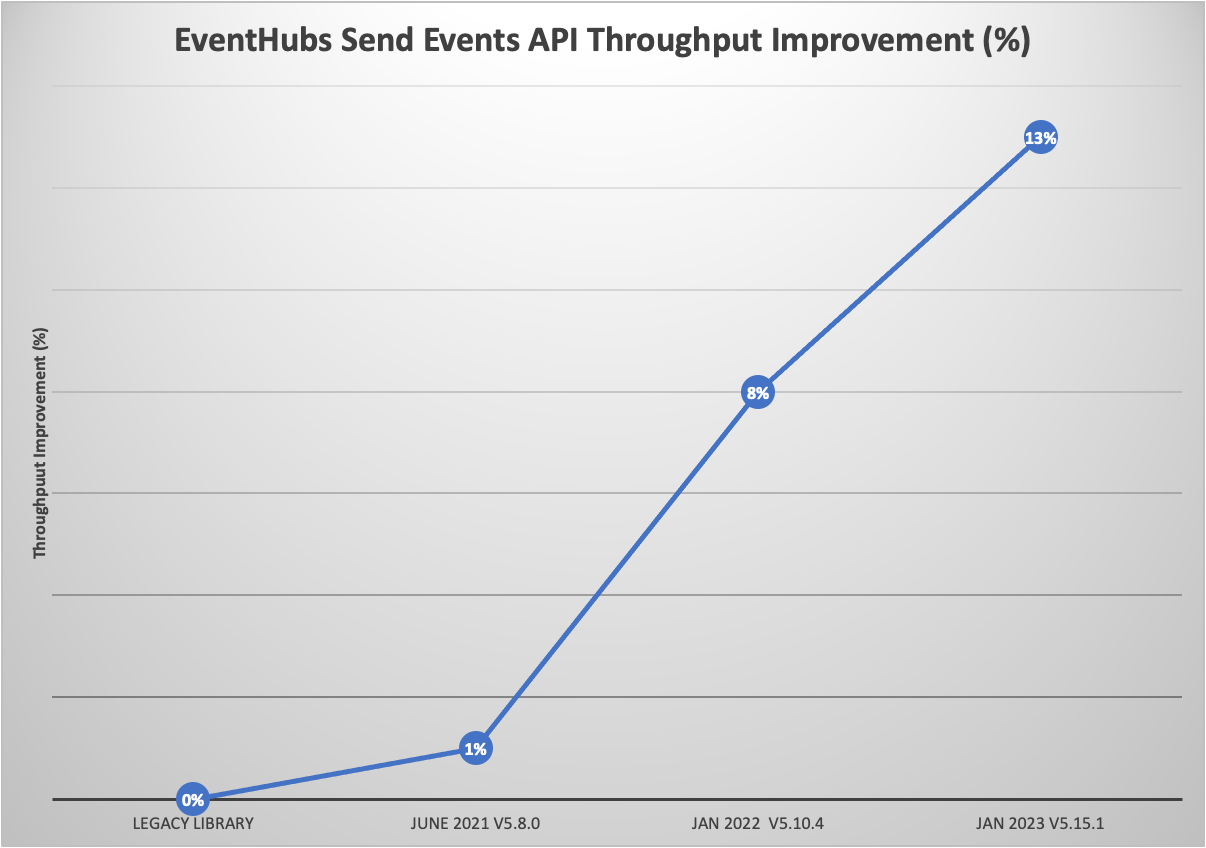

Publish events

Publishing events is a core scenario for customers. With our improvements, our library’s publish throughput is 13% higher than the legacy library’s.

| Library version | Throughput (messages/s) | Throughput improvement |
|---|---|---|
| Legacy library | 29,474 | 0% |
| June 2021 V5.8.0 | 29,646 | 1% |
| Jan 2022 V5.10.4 | 31,793 | 8% |
| Jan 2023 V5.15.1 | 33,191 | 13% |
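
The “Throughput improvement” column appears to be each version’s throughput relative to the legacy baseline, rounded to a whole percent. The following is a minimal sketch of that arithmetic under that assumption; the class and method names are illustrative and are not part of the Event Hubs library.

```java
// Illustrative only: reproduces the "Throughput improvement" column,
// assuming improvement = (measured - baseline) / baseline, rounded to a whole percent.
final class ThroughputImprovement {

    /** Relative change versus the baseline as a whole-number percentage (higher is better). */
    static long improvementPercent(double baseline, double measured) {
        return Math.round((measured - baseline) / baseline * 100.0);
    }

    public static void main(String[] args) {
        double legacy = 29_474; // legacy library, messages/s (from the table)
        String[] versions = {"V5.8.0", "V5.10.4", "V5.15.1"};
        double[] measured = {29_646, 31_793, 33_191};

        for (int i = 0; i < versions.length; i++) {
            // Yields 1, 8, and 13 for the three rows in the table.
            System.out.printf("%s: %d%%%n", versions[i], improvementPercent(legacy, measured[i]));
        }
    }
}
```

The same formula is consistent with the consume numbers later in this post: going from 6,276 to 19,572 messages/s works out to 212%.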

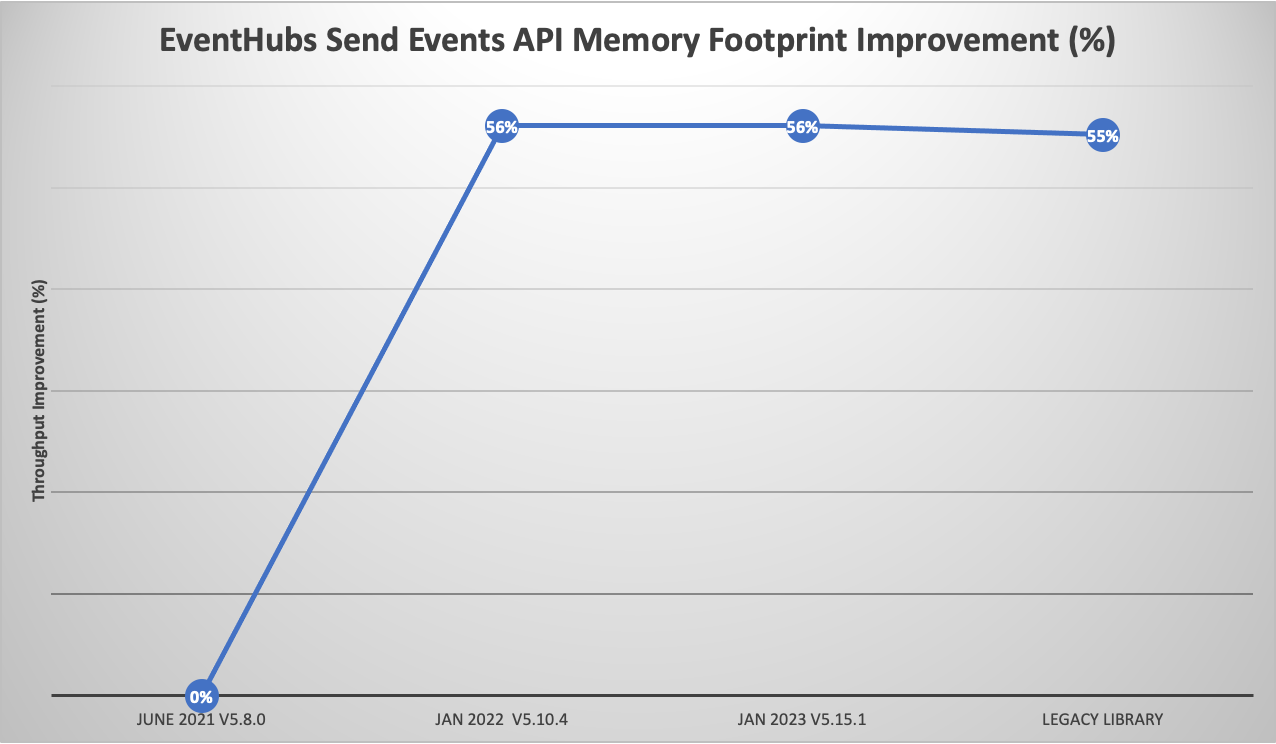

Memory leaks and usage were another focus of our reliability push. Since its first release, our library’s memory usage has decreased significantly, matching the legacy library’s usage.

| Library version | Peak memory allocation (GiB) | Memory footprint improvement |
|---|---|---|
| Legacy library | 6.63 | 0% |
| June 2021 V5.8.0 | 14.8 | -123% |
| Jan 2022 V5.10.4 | 6.5 | 2% |
| Jan 2023 V5.15.1 | 6.5 | 2% |

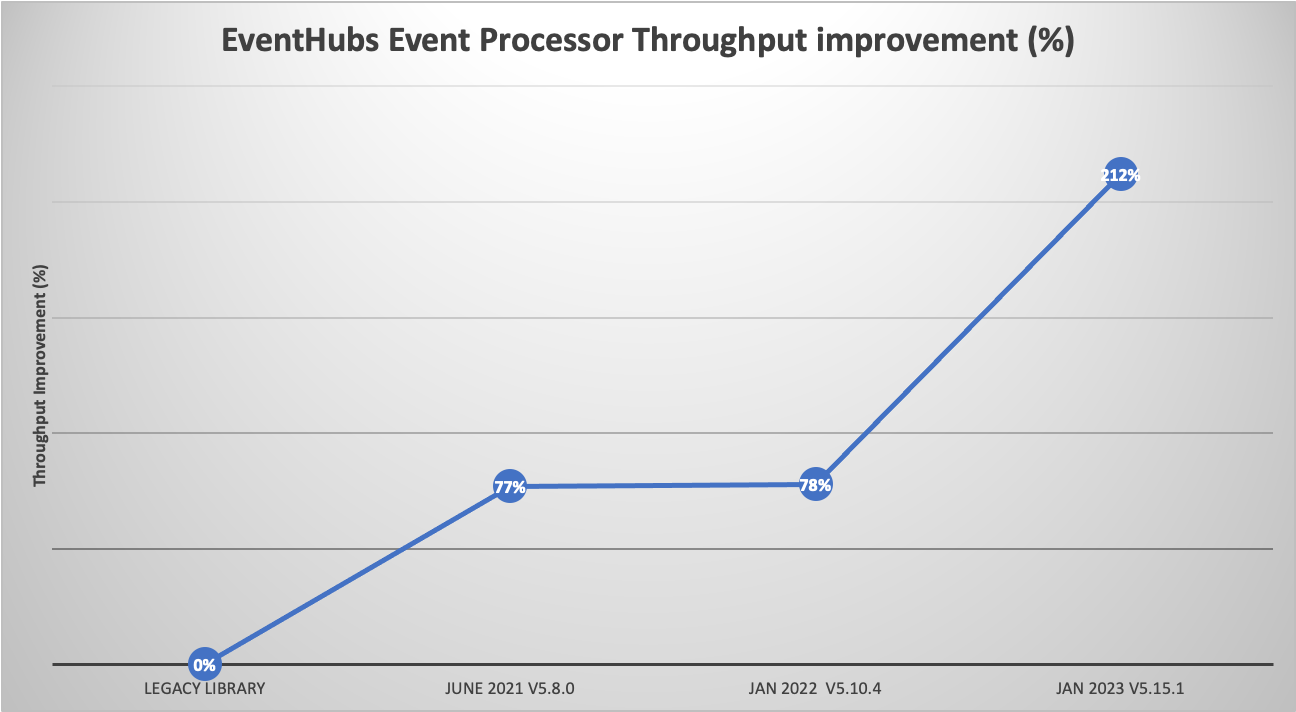

Consume events

Consuming events at a high rate is another scenario we have improved. Our customers send and receive millions of events daily, so we must ensure our library meets their expectations.

| Library version | Throughput (messages/s) | Throughput improvement |
|---|---|---|
| Legacy library | 6,276 | 0% |
| June 2021 V5.8.0 | 11,135 | 77% |
| Jan 2022 V5.10.4 | 11,153 | 78% |
| Jan 2023 V5.15.1 | 19,572 | 212% |

Note: The throughput of the legacy library is around 6,000 events/s because the legacy library caps the number of EventData in an EventDataBatch to 8,000.

We love customer feedback

We’ve learned quite a bit since the first release of azure-messaging-eventhubs. A few of our takeaways include multi-threading considerations, managing disposable resources, error scenarios, and nuances about our new async stack, built using Project Reactor. A few questions we ask ourselves are:

- What happens if an error occurs in the async operation (via Mono/Flux)? Is it recoverable? What is the recovery path?

- Do we eagerly create this resource/data structure? When should we dispose of it?

- Will multiple threads access this method or class? How do we handle the concurrent operations: with lockless drain loops, synchronized methods/classes, or locks?
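
To make the “lockless drain loop” option in the last question concrete, here is a minimal sketch of the general pattern using only the JDK. It is not taken from the library’s source; the class name `DrainLoopSketch` and the `wip` (“work in progress”) counter name are illustrative assumptions. Any thread may enqueue an item, but only the thread that moves the counter from zero drains the queue, so the handler never runs concurrently and no lock is held.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

// Illustrative sketch of a lock-free drain loop; names are hypothetical.
final class DrainLoopSketch<T> {
    private final Queue<T> queue = new ConcurrentLinkedQueue<>();
    private final AtomicInteger wip = new AtomicInteger(); // pending drain requests
    private final Consumer<T> handler;

    DrainLoopSketch(Consumer<T> handler) {
        this.handler = handler;
    }

    /** Safe to call from any thread. */
    void offer(T item) {
        queue.add(item);
        drain();
    }

    private void drain() {
        // Only the caller that moves wip from 0 to 1 enters the loop.
        // Everyone else has just recorded that more work arrived and returns.
        if (wip.getAndIncrement() != 0) {
            return;
        }
        int missed = 1;
        do {
            T item;
            while ((item = queue.poll()) != null) {
                handler.accept(item); // runs on one thread at a time
            }
            // Subtract the requests already handled. A non-zero result means
            // other threads offered items in the meantime, so loop again.
            missed = wip.addAndGet(-missed);
        } while (missed != 0);
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger handled = new AtomicInteger();
        DrainLoopSketch<Integer> loop = new DrainLoopSketch<>(i -> handled.incrementAndGet());

        Runnable producer = () -> {
            for (int i = 0; i < 1_000; i++) {
                loop.offer(i);
            }
        };
        Thread a = new Thread(producer);
        Thread b = new Thread(producer);
        a.start();
        b.start();
        a.join();
        b.join();

        System.out.println("handled = " + handled.get()); // every offered item is handled once
    }
}
```

The trade-off compared with a synchronized method is that producers never block: a thread that loses the race simply hands its item to whichever thread is currently draining. The cost is that one thread may end up doing the handling work on behalf of the others.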

Summary

There have been several improvements to the Event Hubs client library in the last few years. We improved its reliability and performance and created stress tests to ensure the quality of our product.

- Publishing events is 13% faster than the legacy client library.

- Consuming events is 212% faster than the legacy client library.

- Memory footprint matches the legacy client library.

We’re constantly improving our library using customer feedback. Don’t hesitate to provide feedback via our GitHub repository.
