This post was written by guest blogger Paul Michaels.

I’ve previously written here about using Azure Service Bus in the Wild. One comment following that post relates to the speed implications of larger messages and the message size limits. In this post, we’ll delve into that in more detail. We’ll analyze the exact limits around message sizes, along with the cost and speed implications of increasing that size. We’ll also explore the implications of sending and receiving a high volume of messages.

Best practice

Before we explore the size and speed limits, let’s discuss best practice. This post explains how you might mitigate some of the effects of large messages, and how you can improve throughput for very large batches of messages. However, it isn’t advocating large messages or batches as a good strategy.

I created an imaginary sales order while running tests for this post. The order had around 10 properties on the header and 10 per line. Serialized as JSON into a single message, an order with 100 lines came to about 16 KB, and one with 500 lines to about 80 KB. To replicate some of the sizes we’re talking about in this post, I needed to send hundreds of orders, each with thousands of lines and each in a single message. I’m not saying these situations are rare. But when they occur, the best way to correct them may be to look at your data first.

Size: Message size limits and cost implications

Let’s start with some hard limits. Azure Service Bus has three tiers: Basic, Standard, and Premium. The Basic tier doesn’t support topics, so we’ll ignore it in this post.

| Tier | Cost (10 M operations / month) | Max message size |
|---|---|---|
| Standard | ~ $10 – $15 | 256 KB |
| Premium | ~ $650 – $700 | 100 MB |

The two pricing models differ. Standard is, essentially, Pay-As-You-Go: you pay per operation, so the cost rises with the number of messages. Premium doesn’t increase with the number of messages; instead, you pay for each hour the service is running (per messaging unit). This means that, for a fixed number of messaging units, the Premium tier won’t exceed the monthly figure however many messages you send.

The preceding table isn’t intended to be an exact cost chart. For exact costs, see the pricing details and the quotas.

Starting with the Standard tier, the limit for a message is 256 KB. If what you’re sending is a JSON-serialized payload (and I’d guess that would be the case for most readers), then that’s a fairly large limit. To put that into context, the sales order that I mentioned above needed to be around 1,500 lines before it came close to that limit.
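If you want to check where your own payloads sit relative to that limit, one approach is to serialize a representative message and measure its bytes. The sketch below is a minimal example assuming .NET 5 or later and System.Text.Json; the `SalesOrder` and `OrderLine` records, their property names and values, and the `MessageSizing` helper are all hypothetical, not the order I tested with. It measures only the body: as I understand it, the 256-KB limit applies to the whole message, including headers and application properties, so leave some headroom.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hypothetical order shape: 10 properties on the header and 10 per line.
public record OrderLine(int LineNumber, string Sku, string Description, int Quantity,
    decimal UnitPrice, decimal Discount, decimal TaxRate, string Warehouse, string Unit, string Notes);

public record SalesOrder(string OrderId, string CustomerId, DateTime OrderDate, string Currency,
    string ShippingMethod, string BillingAddress, string ShippingAddress, string Status,
    string SalesRep, List<OrderLine> Lines);

public static class MessageSizing
{
    // Standard tier maximum message size from the table above.
    public const int StandardTierMaxBytes = 256 * 1024;

    // Builds an order with `lineCount` identical-looking lines (made-up values).
    public static SalesOrder CreateOrder(int lineCount) => new(
        "SO-0001", "CUST-042", new DateTime(2024, 1, 15), "GBP", "Courier",
        "1 High Street, Anytown", "2 Low Road, Othertown", "Open", "A. Person",
        Enumerable.Range(1, lineCount)
            .Select(i => new OrderLine(i, $"SKU-{i:D5}", "Widget, blue, large", 3,
                12.50m, 0.05m, 0.20m, "WH-01", "Each", ""))
            .ToList());

    // Size in bytes of the order serialized as UTF-8 JSON (what the message body would carry).
    public static int SerializedSize(SalesOrder order) =>
        JsonSerializer.SerializeToUtf8Bytes(order).Length;

    // Smallest line count, in steps of `step`, at which the serialized order exceeds `limitBytes`.
    public static int LinesToExceed(int limitBytes, int step = 100)
    {
        int lines = step;
        while (SerializedSize(CreateOrder(lines)) <= limitBytes)
        {
            lines += step;
        }
        return lines;
    }
}
```

Because the shape and values are invented, the line count this finds won’t necessarily match the ~1,500 lines I saw; the point is the method, not the number.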

There are times when you may wish to transmit binary information, such as images. It’s also possible that your sales order is bigger than the one I have here. If you had 100 properties per order, and the same for each line, then a much smaller order could exceed this limit. Again, it’s worth considering whether absolutely all of this information is required, or even whether it can be compressed.

Speed: How bigger messages affect speed

I tried various message sizes while researching this post. Unsurprisingly, the larger the message, the longer it takes to transmit. My broadband connection isn’t especially fast: a 70-MB file usually took just under a minute to send, and a 5-MB file took around 5 seconds. I don’t think these figures will shock anyone. You might see an improvement if your broadband is better than mine, although I suspect that, at some point, Azure itself will throttle you.

Large messages can also cause problems in other parts of the system. They take longer to serialize and deserialize, and when something goes wrong, the cause is harder to find. For example, if a field you expect to be populated is null, that may be easy to spot in a payload measured in KB, but much less so in one measured in MB. However, the main issue that large messages present is timeouts.
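To connect these measurements to timeouts, here’s a back-of-the-envelope sketch. It assumes throughput stays roughly constant, ignores latency and throttling, and treats “5 MB” as 5 × 1024 × 1024 bytes; `SendTimeEstimate` is a hypothetical helper, not part of any SDK.

```csharp
using System;

public static class SendTimeEstimate
{
    // Throughput implied by one measured send (for example, ~5 MB in ~5 seconds).
    public static double BytesPerSecond(long measuredBytes, TimeSpan measuredTime) =>
        measuredBytes / measuredTime.TotalSeconds;

    // Estimated time to send `messageBytes` at that throughput (no latency or throttling).
    public static TimeSpan EstimateSendTime(long messageBytes, double bytesPerSecond) =>
        TimeSpan.FromSeconds(messageBytes / bytesPerSecond);

    // Largest message that should still finish within `timeout` at that throughput.
    public static long MaxBytesWithin(TimeSpan timeout, double bytesPerSecond) =>
        (long)(timeout.TotalSeconds * bytesPerSecond);
}
```

With the roughly 1 MB per second implied by my 5-MB test, anything above about 60 MB would exceed a one-minute time limit, and a 100-MB message (the Premium maximum) would take around 100 seconds. Your own figures will differ; my 70-MB send, for instance, implies a slightly faster rate.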

Timeout

In the Azure Service Bus library, sending a message has a built-in time limit. The default is one minute, and it’s easy enough to change:

```csharp
using System;
using Azure.Messaging.ServiceBus;

var options = new ServiceBusClientOptions
{
    RetryOptions = new ServiceBusRetryOptions
    {
        // Maximum time to wait for a single attempt (such as one send) to complete.
        TryTimeout = TimeSpan.FromMinutes(5)
    }
};

// `connectionString` and `topicName` are assumed to be defined elsewhere.
await using var serviceBusClient = new ServiceBusClient(connectionString, options);
var sender = serviceBusClient.CreateSender(topicName);
```

Note that, although the examples in this post create the Service Bus client each time, best practice is to create it once and reuse it as a singleton.

The timeout here applies to the send time for each message (that is, the time between the request being made and a response being received). Receiving a message is slightly different. When you receive a message, you have two options (or modes): you can Receive and Delete or Peek-Lock.

Receive and Delete

The principle behind this method is that you confirm the message has been received as you accept it from Service Bus. As an analogy, imagine a restaurant. Once your food is ready, the server will bring it to your table. As they pick up the plate, they’re taking that plate from a queue. You can imagine multiple dishes waiting to be served. In our Receive and Delete scenario, before they pick up the plate, they would burn the original order ticket, and then take the food to the table. Let’s imagine that, when the food gets to the table, the customers claim they have been given the wrong order. In a Receive and Delete scenario, the server just throws the plates in the bin and wanders away. The message has been consumed. If there’s a problem after it’s received, the message is lost. However, the person serving the food is now serving the next customer. Essentially, you trade reliability for speed.

Peek-Lock

Peek-Lock is almost the reverse of Receive and Delete. Returning to our restaurant analogy, Peek-Lock is closer to how a restaurant normally operates. The server keeps the original order ticket and takes it to the table. Once the order is accepted at the table, the server returns with the ticket. While the food is on its way to the table, it’s locked, so a second member of staff can’t pick it up and deliver it as well. In Service Bus terms, a message received under Peek-Lock must be explicitly confirmed (completed) before it’s removed from Service Bus, and it stays locked (the lock in Peek-Lock) until that confirmation occurs or the lock expires.
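To make the difference concrete, here’s a toy, in-memory model of the two modes, assuming .NET 5 or later. It’s for illustration only: `ToyQueue` and `ToyReceiveMode` are hypothetical types, not the Service Bus SDK, and the model ignores everything except what happens to a message when processing fails after it’s received.

```csharp
using System;
using System.Collections.Generic;

// Toy model of the two receive modes (NOT the Service Bus API).
public enum ToyReceiveMode { ReceiveAndDelete, PeekLock }

public sealed class ToyQueue
{
    private readonly Queue<string> _available = new();

    // Messages handed out under Peek-Lock, with the time each lock expires.
    // Keyed by message text, so this toy assumes message texts are unique.
    private readonly Dictionary<string, DateTime> _locked = new();

    public void Send(string message) => _available.Enqueue(message);

    public string? Receive(ToyReceiveMode mode, DateTime now, TimeSpan lockDuration)
    {
        ReturnExpiredLocks(now);
        if (_available.Count == 0) return null;

        string message = _available.Dequeue();
        if (mode == ToyReceiveMode.PeekLock)
        {
            // Peek-Lock: the broker keeps the "order ticket" until we complete or the lock expires.
            _locked[message] = now + lockDuration;
        }
        // Receive and Delete: the broker has already forgotten the message.
        return message;
    }

    // Explicit confirmation: removes a locked message for good.
    public void Complete(string message) => _locked.Remove(message);

    // Locked messages whose lock has expired become available to receivers again.
    private void ReturnExpiredLocks(DateTime now)
    {
        var expired = new List<string>();
        foreach (var (message, expiresAt) in _locked)
        {
            if (expiresAt <= now) expired.Add(message);
        }
        foreach (string message in expired)
        {
            _locked.Remove(message);
            _available.Enqueue(message);
        }
    }
}
```

In Receive and Delete mode, a message that’s received but never processed is simply gone. In Peek-Lock mode, it reappears once its lock expires, and only `Complete` removes it.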

How a timeout can occur

This is where a timeout can occur when receiving a message. Imagine you have a modestly large message; say it’s 10 MB for the sake of argument. It takes 10 seconds to receive and another second to process, so that’s 11 seconds before we can acknowledge it. When a message is received, a lock is taken on it, with a default lock duration of 30 seconds. Now suppose six of these messages are locked at the same moment (for example, because they were received as a batch), and that our system can process only two messages at a time. That number is low for illustration, but every system has some limit on how many messages it can process simultaneously; it’s just usually higher than two.

The following table shows what can happen here.

| Message number | Time lock is taken | Time message is confirmed |
|---|---|---|
| 1 | 10:00:00 | 10:00:11 |
| 2 | 10:00:00 | 10:00:11 |
| 3 | 10:00:00 | 10:00:22 |
| 4 | 10:00:00 | 10:00:22 |
| 5 | 10:00:00 | 10:00:33 |
| 6 | 10:00:00 | 10:00:33 |

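In this case, messages 5 and 6 aren’t confirmed until 33 seconds after their locks were taken. That’s longer than the 30-second lock duration, so their locks expire before processing finishes.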
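The table can be reproduced with a small simulation. This is a sketch under the same simplifying assumptions as the example (every message locked at the same instant, a fixed time per message, a fixed number processed at once); `LockExpirySimulation` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;

public static class LockExpirySimulation
{
    // All messages are locked at t = 0, `concurrency` are handled at a time, and each takes
    // `secondsPerMessage` to receive and process. Returns when each message is confirmed
    // (seconds after its lock was taken) and whether its lock expired first.
    public static List<(int Message, double ConfirmedAtSeconds, bool LockExpired)> Run(
        int messageCount, int concurrency, double secondsPerMessage, double lockSeconds)
    {
        if (concurrency < 1) throw new ArgumentOutOfRangeException(nameof(concurrency));

        var results = new List<(int Message, double ConfirmedAtSeconds, bool LockExpired)>();
        for (int i = 0; i < messageCount; i++)
        {
            // Messages are processed in waves of `concurrency`; wave 1 finishes at secondsPerMessage.
            int wave = i / concurrency + 1;
            double confirmedAt = wave * secondsPerMessage;
            results.Add((i + 1, confirmedAt, confirmedAt > lockSeconds));
        }
        return results;
    }
}
```

Calling `LockExpirySimulation.Run(6, 2, 11, 30)` gives confirmation times of 11, 11, 22, 22, 33, and 33 seconds, with only messages 5 and 6 flagged as having expired locks.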

Speed: How dealing with large quantities of messages affects speed

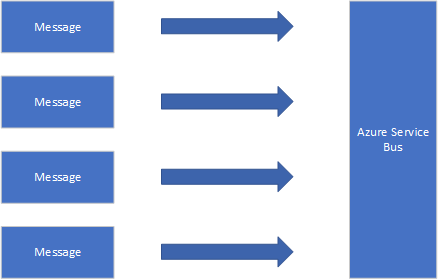

It stands to reason that if a single message takes a second, then 10 messages would take 10 seconds. If you’re trying to send 100 messages, you’re going to exceed a minute. The problem is one of accumulated time, as shown in the image below:

The same is true when receiving messages, with the added risk we saw earlier in Peek-Lock mode: the locks may expire before processing of the batch has completed.

Methods to improve and mitigate speed issues

With all the issues mentioned above, we’re dealing with sheer numbers or size causing timeouts at some stage in the process. We can’t increase the speed of transmission. At least, we can’t do that by changing the software. We’re left with the following options:

- Reduce the message size.

- Reduce the number of calls across the network.

- Reduce the number of messages.

- Increase the timeout period, so that the time taken no longer causes timeouts.

- Increase the number of receivers.

Let’s discuss each, and see what the options are.

Reduce the message size

Let’s assume you have a non-negotiable amount of data that needs to be transmitted: for example, a sales order with hundreds of properties and thousands of lines. Maybe there’s some image data in there, too.

One option is to write the details of the sales order to a data store such as Cosmos DB. The message that’s sent can then be reduced to a pointer to the order in Cosmos DB.
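This approach is often called the Claim Check pattern. Here’s a minimal sketch of its shape, assuming .NET 5 or later and an in-memory dictionary as a stand-in for Cosmos DB (or any other store). `InMemoryPayloadStore`, `PayloadPointer`, and `ClaimCheck` are hypothetical names; in a real system, the store would be shared between sender and receiver, and you’d also need to decide when stored payloads get deleted.

```csharp
using System;
using System.Collections.Concurrent;
using System.Text.Json;

// Stand-in for an external store such as Cosmos DB (in-memory only, for illustration).
public sealed class InMemoryPayloadStore
{
    private readonly ConcurrentDictionary<string, byte[]> _items = new();

    public string Save(byte[] payload)
    {
        string id = Guid.NewGuid().ToString("N");
        _items[id] = payload;
        return id;
    }

    // Throws KeyNotFoundException if the payload was never stored (or has been removed).
    public byte[] Load(string id) => _items[id];
}

// The small message body that travels through Service Bus instead of the payload.
public record PayloadPointer(string PayloadId);

public static class ClaimCheck
{
    // Sender side: store the large payload and return a tiny JSON body that points to it.
    public static byte[] CreatePointerMessage(InMemoryPayloadStore store, byte[] largePayload) =>
        JsonSerializer.SerializeToUtf8Bytes(new PayloadPointer(store.Save(largePayload)));

    // Receiver side: read the pointer from the message body and fetch the real payload.
    public static byte[] ResolvePayload(InMemoryPayloadStore store, byte[] messageBody)
    {
        PayloadPointer pointer = JsonSerializer.Deserialize<PayloadPointer>(messageBody)
            ?? throw new InvalidOperationException("Message body is not a payload pointer.");
        return store.Load(pointer.PayloadId);
    }
}
```

A 1-MB payload becomes a message body of a few dozen bytes, at the cost of an extra read from the store on the receive side.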

A second option is to compress the message, as mentioned earlier. Your receiver needs to be aware of this compression, which introduces a form of coupling that you may be uncomfortable with.
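Here’s a minimal sketch of compression using `GZipStream` from the .NET base library. One way to limit the coupling is for the sender to state that the body is compressed, so the receiver only decompresses when told to. The sketch models that as a hypothetical `contentEncoding` string; in Service Bus, you might carry it in an application property on the message. `PayloadCompression` is an invented helper name.

```csharp
using System.IO;
using System.IO.Compression;

public static class PayloadCompression
{
    // Hypothetical marker the sender attaches and the receiver checks.
    public const string GzipEncoding = "gzip";

    public static byte[] Compress(byte[] data)
    {
        using var output = new MemoryStream();
        using (var gzip = new GZipStream(output, CompressionLevel.Optimal, leaveOpen: true))
        {
            gzip.Write(data, 0, data.Length);
        } // Disposing the GZipStream flushes the final compressed block into `output`.
        return output.ToArray();
    }

    public static byte[] Decompress(byte[] compressed)
    {
        using var input = new MemoryStream(compressed);
        using var gzip = new GZipStream(input, CompressionMode.Decompress);
        using var output = new MemoryStream();
        gzip.CopyTo(output);
        return output.ToArray();
    }

    // Receiver side: only decompress when the sender said the body is compressed.
    public static byte[] ReadBody(byte[] body, string? contentEncoding) =>
        contentEncoding == GzipEncoding ? Decompress(body) : body;
}
```

JSON with many repeated property names tends to compress well; binary data that’s already compressed, such as most image formats, may barely shrink.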

Reduce the number of calls across the network

There are many ways to reduce network calls for both sending and receiving. Let’s start with sending.

Batch sending

One method to prevent incurring the latency of multiple calls is to send messages in a single call. The following code will send 100 messages in a single call:

```csharp
using System.Collections.Generic;
using Azure.Messaging.ServiceBus;

await using var serviceBusClient = new ServiceBusClient(connectionString);
var sender = serviceBusClient.CreateSender("bulk-send");

List<ServiceBusMessage> serviceBusMessages = new();
for (int i = 1; i <= 100; i++)
{
    string messageText = $"Test{i}";
    serviceBusMessages.Add(new ServiceBusMessage(messageText));
}

// All 100 messages go to Service Bus in a single call.
await sender.SendMessagesAsync(serviceBusMessages);
```

This batching approach doesn’t get around the message size limit. That is, the messages sent above must, combined, fit within the 256-KB limit. There’s an alternative way to structure this code that mitigates this issue slightly:

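```csharp
// Continues from the previous example: `sender` already exists, and `count` is the number of messages to send.
ServiceBusMessageBatch serviceBusMessageBatch = await sender.CreateMessageBatchAsync();
try
{
    for (int i = 1; i <= count; i++)
    {
        var message = new ServiceBusMessage($"Test{i}");

        if (!serviceBusMessageBatch.TryAddMessage(message))
        {
            // The batch is full: send it and start a new one.
            await sender.SendMessagesAsync(serviceBusMessageBatch);
            serviceBusMessageBatch.Dispose();
            serviceBusMessageBatch = await sender.CreateMessageBatchAsync();

            // Add the message that didn't fit; if it doesn't fit an empty batch either, it's too large.
            if (!serviceBusMessageBatch.TryAddMessage(message))
            {
                throw new InvalidOperationException($"Message {i} is too large to fit in a batch.");
            }
        }
    }

    // Send whatever is left in the final, partially filled batch.
    if (serviceBusMessageBatch.Count > 0)
    {
        await sender.SendMessagesAsync(serviceBusMessageBatch);
    }
}
finally
{
    serviceBusMessageBatch.Dispose();
}
```

This version sends as many batches as it needs, so the total can exceed 256 KB, although each batch (and each message) must still fit within the limit. We can do the same on the receive side: receive a chunk of messages in a single call. For example: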

```csharp
await using var serviceBusClient = new ServiceBusClient(connectionString);
await using var receiver = serviceBusClient.CreateReceiver("bulk-send", "sub1");

// Returns up to 200 messages in one call (it may return fewer).
var messages = await receiver.ReceiveMessagesAsync(maxMessages: 200);

foreach (var message in messages)
{
    // Renew the lock so it doesn't expire while earlier messages in the batch are handled.
    await receiver.RenewMessageLockAsync(message);
    Console.WriteLine(message.Body.ToString());

    // In Peek-Lock mode (the default), complete each message once it's processed.
    // await receiver.CompleteMessageAsync(message);
}
```

For both of these mechanisms, there’s a price to pay, but more so for receiving. The issue is that the operation may fail. Arguably, if a send fails, you can run it again. The worst-case scenario is duplicated messages, which aren’t difficult to deal with in most cases.

On the receive side, and specifically for Receive and Delete, there’s a much larger problem. If the operation fails after you’ve received the messages (for example, after the call to ReceiveMessagesAsync), the remaining messages may be lost. In a Peek-Lock scenario, these messages would eventually return to the queue once their locks expire.

Azure Service Bus also supports prefetching, configured through the PrefetchCount option. The idea is that the Service Bus client reads ahead by maintaining a cached buffer of messages. This mechanism has all the same issues as batch receiving. Your messages run the risk of one of the following:

- Being lost (in Receive and Delete mode) if there’s an issue with the code.

- Timing out (in Peek-Lock mode) where you don’t process the messages fast enough due to volume.
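In Peek-Lock mode, prefetched messages are locked when they’re fetched (as I understand it, the lock clock starts then, not when your code picks them up). A rough way to size the buffer is therefore to ask how many messages you can finish within one lock duration. The sketch below is a rule-of-thumb calculation under the same simplifying assumptions as the earlier lock table (constant processing time, fixed concurrency); `PrefetchSizing` is a hypothetical helper, and the figure it gives covers all locked-but-unfinished messages, including those already being processed.

```csharp
using System;

public static class PrefetchSizing
{
    // Rough upper bound on how many messages can be locked ahead of processing without the
    // last one's lock expiring: (processing waves that fit in one lock) x (messages per wave).
    public static int MaxSafePrefetch(TimeSpan lockDuration, TimeSpan timePerMessage, int concurrency)
    {
        if (timePerMessage <= TimeSpan.Zero) throw new ArgumentOutOfRangeException(nameof(timePerMessage));
        if (concurrency < 1) throw new ArgumentOutOfRangeException(nameof(concurrency));

        // Integer division: only complete waves count.
        long wavesWithinLock = lockDuration.Ticks / timePerMessage.Ticks;
        return (int)(wavesWithinLock * concurrency);
    }
}
```

With the earlier numbers (a 30-second lock, 11 seconds per message, two at a time), it returns 4, which matches the table: messages 5 and 6 are the ones whose locks expire.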

Reduce the number of messages

Another possibility is to group messages together. Grouping enables more data to be transmitted at one time, but the data is then treated as a single message. This approach is generally bad, unless you know that the data would always live and die together. For example, grouping two orders together means that if one order were dead-lettered, the other would be stuck with it, despite potentially being fine. On the other hand, if you’re creating a customer, it may make sense to send the customer address in the same message.

Increase the timeout period, so that the time taken no longer causes timeouts

We saw earlier how the timeout on a send can be adjusted. You can also renew the lock (for Peek-Lock):

```csharp
// `receiver` is a ServiceBusReceiver in Peek-Lock mode, created as in the earlier examples.
var messages = receiver.ReceiveMessagesAsync();

await foreach (var message in messages)
{
    // Reset the lock's expiry before starting potentially slow processing.
    await receiver.RenewMessageLockAsync(message);

    // Message processing
    Console.WriteLine(message.Body.ToString());

    // Be sure to complete the message once processing has finished.
    // await receiver.CompleteMessageAsync(message);
}
```

The code above is a variation on the earlier batch receive: it streams messages as they arrive and refreshes the lock on each one before processing it. Additionally, if you’re using the ServiceBusProcessor, which allows event-based receipt of messages, you can set the MaxAutoLockRenewalDuration property, and the processor will renew locks for you until that duration expires.
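Renewing once only buys one more lock period. If processing itself can outlast the lock duration, the lock needs renewing periodically while the work runs. Here’s a minimal sketch of that idea using only the .NET base library: `ProcessWithLockRenewalAsync` is a hypothetical helper, not part of the SDK, and `renewAsync` is a placeholder where you’d call something like `receiver.RenewMessageLockAsync(message)`. Pick a renewal interval comfortably shorter than the lock duration.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class LockRenewal
{
    // Runs `processAsync` while calling `renewAsync` every `renewInterval` until processing ends.
    public static async Task ProcessWithLockRenewalAsync(
        Func<CancellationToken, Task> processAsync,
        Func<CancellationToken, Task> renewAsync,
        TimeSpan renewInterval,
        CancellationToken cancellationToken = default)
    {
        if (renewInterval <= TimeSpan.Zero) throw new ArgumentOutOfRangeException(nameof(renewInterval));

        using var renewalCts = CancellationTokenSource.CreateLinkedTokenSource(cancellationToken);

        Task renewalLoop = Task.Run(async () =>
        {
            try
            {
                while (true)
                {
                    await Task.Delay(renewInterval, renewalCts.Token);
                    await renewAsync(renewalCts.Token);
                }
            }
            catch (OperationCanceledException) when (renewalCts.IsCancellationRequested)
            {
                // Expected: processing finished (or the caller cancelled), so stop renewing.
            }
        });

        try
        {
            await processAsync(cancellationToken);
        }
        finally
        {
            // Stop renewing; a failed renewal (other than cancellation) surfaces here.
            renewalCts.Cancel();
            await renewalLoop;
        }
    }
}
```

Once processing completes, the renewal loop is cancelled and awaited, so no renewals happen after the message has been dealt with.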

The default lock time is 30 seconds at the time of writing. The lock duration can be changed at the queue or subscription level, either via the Azure portal or with the ServiceBusAdministrationClient:

```csharp
using System;
using Azure.Messaging.ServiceBus.Administration;

var serviceBusAdministrationClient = new ServiceBusAdministrationClient(connectionString);
var sub = await serviceBusAdministrationClient.GetSubscriptionAsync("topic-name", "sub1");

// `newDuration` is the desired lock duration in seconds (assumed to be defined elsewhere).
sub.Value.LockDuration = TimeSpan.FromSeconds(newDuration);

await serviceBusAdministrationClient.UpdateSubscriptionAsync(sub.Value);
```

The lock duration can be at most 5 minutes and, obviously, if messages are being locked for too long, it will affect the scalability of the application.

Increase the number of receivers

One option that’s always available to improve the speed of receiving messages is to scale out your receiver; that is, to have more individual processes that can take messages from the queue or subscription. If you have 100 messages to process, one processor may take 100 seconds, but 100 processors may take one. If you’re using something like an Azure Function, you can have that automatically scale out for you.
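Scaling out doesn’t have to mean more processes; a single receiver can also process a received batch concurrently, up to a limit. The sketch below caps concurrency with a `SemaphoreSlim`; `BoundedParallelProcessing` and `ProcessAllAsync` are hypothetical names, and `handler` stands in for your message processing. If you’re using ServiceBusProcessor, its MaxConcurrentCalls option gives you this behavior without extra code, and on .NET 6 or later, `Parallel.ForEachAsync` with `MaxDegreeOfParallelism` is another option.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class BoundedParallelProcessing
{
    // Processes every item, with at most `maxConcurrency` handlers running at once.
    public static async Task ProcessAllAsync<T>(
        IEnumerable<T> items, Func<T, Task> handler, int maxConcurrency)
    {
        if (maxConcurrency < 1) throw new ArgumentOutOfRangeException(nameof(maxConcurrency));

        using var gate = new SemaphoreSlim(maxConcurrency);
        List<Task> tasks = items.Select(async item =>
        {
            await gate.WaitAsync();   // wait for a free slot
            try
            {
                await handler(item);
            }
            finally
            {
                gate.Release();       // free the slot for the next item
            }
        }).ToList();

        await Task.WhenAll(tasks);
    }
}
```

Concurrency only helps when the messages are independent of each other; messages that must be processed in order need a different approach.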

Summary and caveats

In this post, we’ve explored the edges of Azure Service Bus in terms of size and quantity. The ideal situation is that you keep messages relatively small in size and quantity. In most cases, the information that you need can easily fit into the limit for the service’s Basic or Standard tiers.

If you reach for mechanisms and suggestions in this blog post, first question whether you really need the size, or quantity, of messages that necessitates them.

We use another option, which is to process each batch of messages in parallel. Since we are using .NET Framework 4.8, we need to use the Async.Enumerable library (https://github.com/Dasync/AsyncEnumerable):

<code>

We have a high parallel count since in general our messages are lightweight in CPU - adjust parallelism to taste.

Since 99.9% of our messages are independent we don't get collisions, though it can occur (for example, processing two actions on the same database item). Note also that this does not handle messages that require serial processing, though sessions may help, which we have not explored. We have avoided sessions to reduce complexity...

Thanks for your comment; this is a good idea. I’m guessing that we’re talking here about batch receiving, and then processing the result in parallel. This makes sense, although I suppose it depends on the receiver and the nature of the message. As you said, in your case, the processing is completely independent.