Analyzing recorded audio, video, and chat history, as well as average conversation time, can provide valuable insights. Call centers, which are a company's hub for customer communication, are a natural source of this kind of data. This article shows how the new Azure SDK client libraries for .NET (Cosmos DB, Text Analytics, Form Recognizer, and Storage Blobs) were used in a real project: a call center conversation analyzer.

Business case

My team at Predica and I needed a solution that would provide insights from the recorded audio, video, and chat history of a call center owned by one of our clients. We wanted to know how many customers were satisfied and which topics came up most often. That is why we decided to build a call center conversation analysis solution in the Azure cloud, using services such as Azure Storage, Azure Functions, Azure Cognitive Services, Azure Media Services Video Indexer, and Azure Cosmos DB.

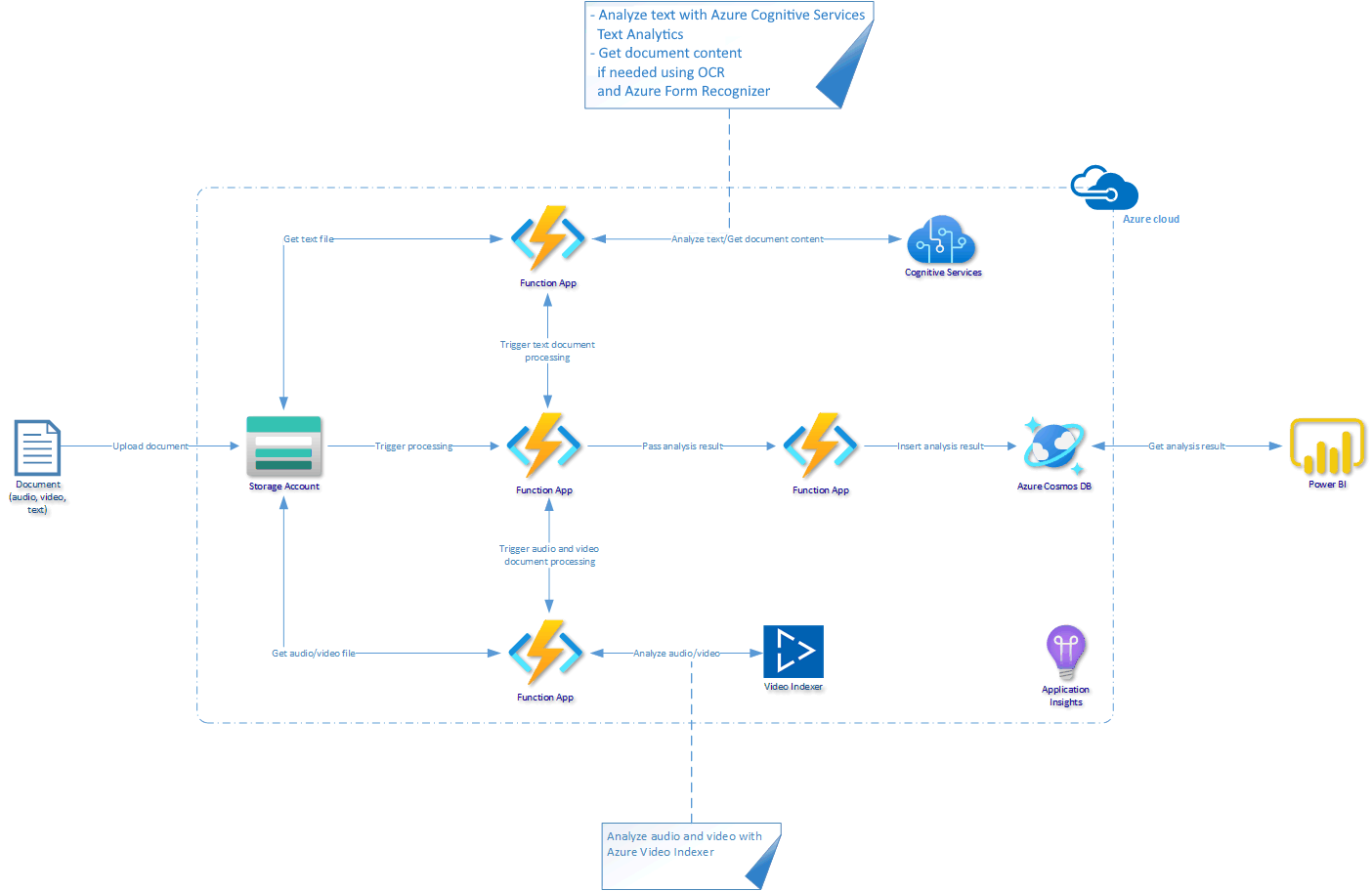

Solution architecture

The diagram below presents the implemented solution, which enables uploading PDF files with conversation history, or audio and video files of recorded conversations.

These services were used:

- Azure Storage Account (Blob Storage) – to store text, audio, and video files for further analysis

- Azure Durable Functions – to orchestrate the analysis flow

- Azure Cognitive Services Text Analytics – to analyze text and extract sentiment and topics

- Azure Cognitive Services Form Recognizer – to scan PDF documents with OCR

- Azure Video Indexer – to analyze audio and video content

- Azure Cosmos DB – to store analysis results as JSON documents

- Power BI – to visualize the collected data in the form of a report

- Azure Application Insights – to monitor the solution and discover issues

Why we chose these services

Before we discuss the implementation in detail, it is worth explaining why we decided to use the services listed above.

Azure Storage Account

Azure Storage Account (Blob Storage in this case) was the natural place to store the source documents. Azure Blob Storage is a cost-efficient and secure service for storing many types of files; in our solution, these included not only text documents but also audio and video recordings.

Azure Durable Functions

Initially, we considered hosting an API in Azure App Service (Web App) that would be responsible for orchestration. After some discussion, we decided to go with Azure Durable Functions instead, for two simple reasons. First, we wanted to run the analysis flow only when a new file was uploaded to Azure Blob Storage, and Azure Functions are ideal as event handlers. Second, cost: on the Consumption plan, the first million executions each month are free.
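The event-driven flow described above could be wired up roughly as follows. This is a minimal sketch, not the project's actual code: it assumes the in-process Azure Functions model with Durable Functions 2.x (the Microsoft.Azure.WebJobs.Extensions.DurableTask package), a hypothetical `input` container, and hypothetical function names (`StartConversationAnalysis`, `ConversationAnalysisOrchestrator`, `AnalyzeFileActivity`).

```csharp
using System.IO;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Extensions.Logging;

// Hypothetical function set; names and the "input" container are placeholders
public static class ConversationAnalysisFunctions
{
    // Starter: runs when a new blob lands in the "input" container
    [FunctionName("StartConversationAnalysis")]
    public static async Task StartConversationAnalysis(
        [BlobTrigger("input/{name}")] Stream blob,
        string name,
        [DurableClient] IDurableOrchestrationClient starter,
        ILogger log)
    {
        // Pass only the blob name; activities read the file themselves
        string instanceId = await starter.StartNewAsync("ConversationAnalysisOrchestrator", null, name);
        log.LogInformation("Started orchestration {InstanceId} for blob {BlobName}", instanceId, name);
    }

    // Orchestrator: must be deterministic, so it only schedules activities
    [FunctionName("ConversationAnalysisOrchestrator")]
    public static async Task<string> RunOrchestrator(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        string blobName = context.GetInput<string>();
        return await context.CallActivityAsync<string>("AnalyzeFileActivity", blobName);
    }

    // Activity: placeholder for the OCR, Text Analytics, Video Indexer and Cosmos DB steps
    [FunctionName("AnalyzeFileActivity")]
    public static Task<string> AnalyzeFile([ActivityTrigger] string blobName, ILogger log)
    {
        log.LogInformation("Analyzing {BlobName}", blobName);
        return Task.FromResult($"analyzed:{blobName}");
    }
}
```

Keeping the orchestrator free of I/O matters because Durable Functions replays orchestrator code; calls to external services belong in activity functions.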

Azure Cognitive Services Text Analytics

Many Azure Cognitive Services are available. We needed one that could analyze the content of text documents and return a sentiment value, because writing our own processor to detect emotions, for instance, is far from trivial. Our goal was to analyze conversation transcripts and discover insights related to customer satisfaction.

Azure Cognitive Services Form Recognizer

Form Recognizer is a great service that provides an easy way to extract text, key/value pairs, and tables from documents, forms, receipts, and business cards. Initially, we planned to use the Azure Computer Vision API to scan documents with OCR, but in the end we chose Form Recognizer. The reason was simple: in the future, we also plan to scan customer satisfaction survey forms, and Form Recognizer will make it much easier to extract their content and validate specific values.

Azure Video Indexer

Azure Video Indexer is a powerful tool for video analysis. With it, we were able to process audio and video files and extract information about customer satisfaction using its sentiment analysis feature. This is only a small part of Video Indexer's capabilities: it can also produce translations, detect the spoken language automatically, and detect the faces of people in the video.

Azure Cosmos DB

There are a few different databases available in the Azure cloud. Azure SQL, the most popular one, was our first choice when designing the system's architecture: it offers a clear structure and easy, secure access. Later, however, we realized that our data model was not fixed. We planned to extend it in the future and wanted to stay flexible when it came to the data schema. This is why we decided to switch to Azure Cosmos DB. As a non-relational database, it was a perfect match. It also offers geo-replication and an easy way to manage data using the Azure Cosmos DB SDK.

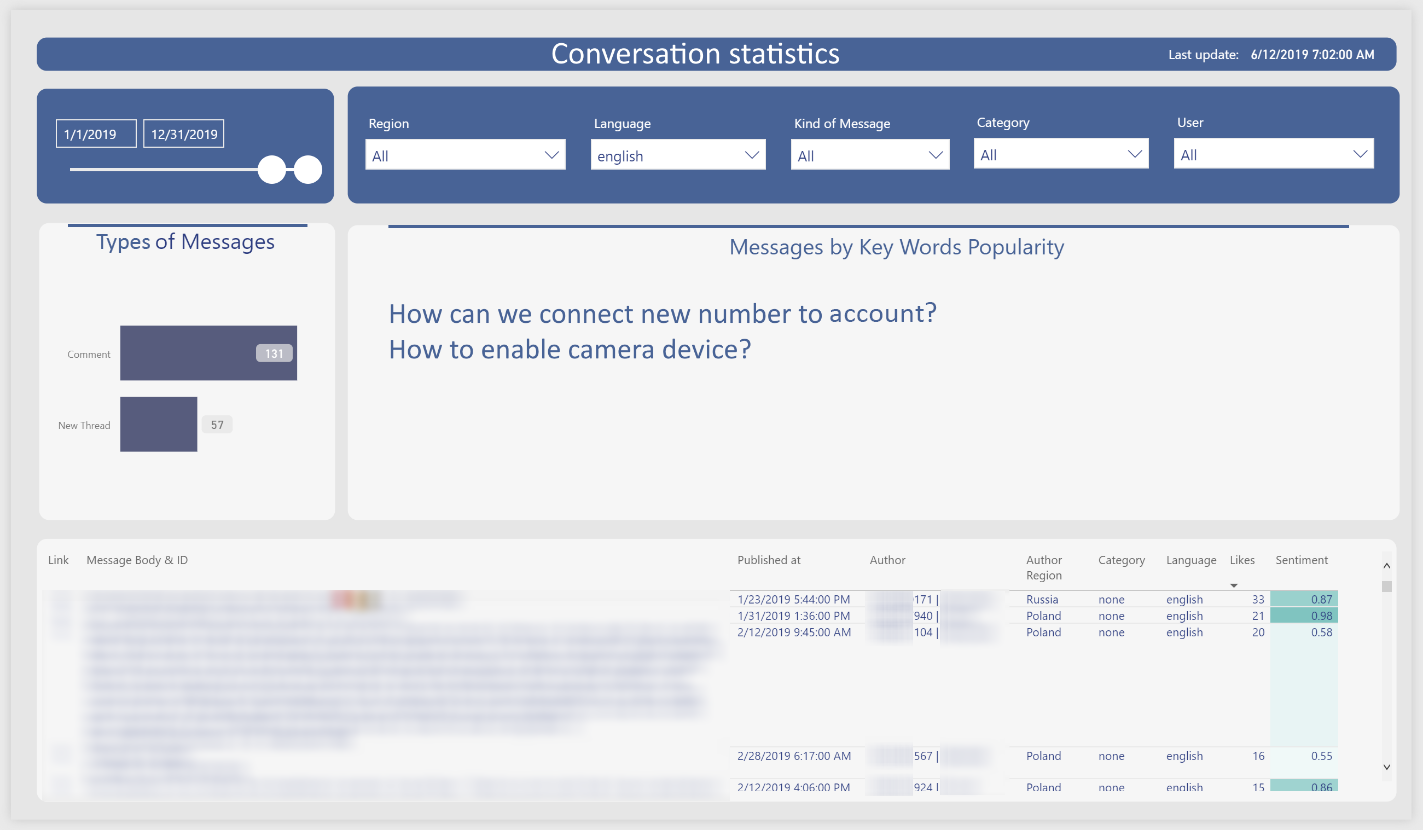

Power BI

To visualize the results, we used Power BI. With this easy-to-use tool, we were able to create dashboards showing information about customer satisfaction.

Azure Application Insights

Monitoring the solution and resolving issues is very important. We wanted to be sure that if an issue occurred, we would be able to identify and resolve it quickly. This is why we integrated Azure Application Insights into our solution.

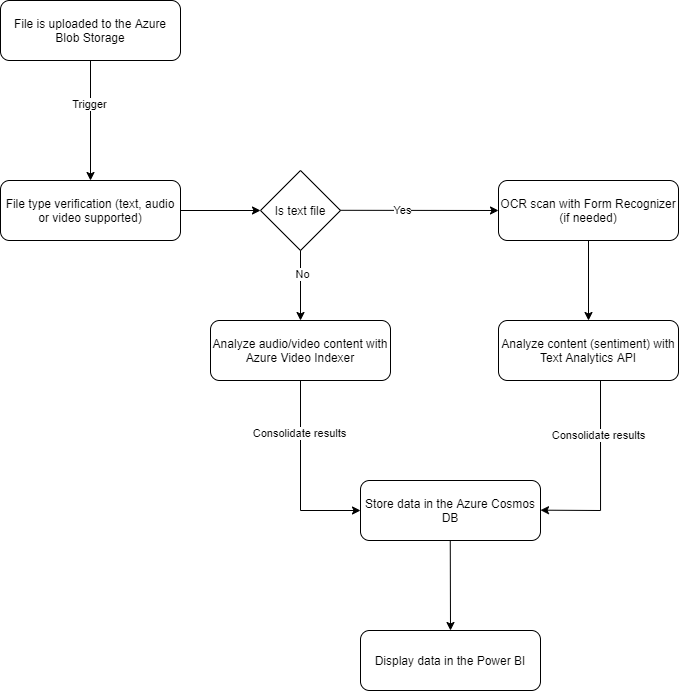

Data flow

The diagram below presents the data flow in our solution:

Now let’s talk about some implementation details.

Azure Cosmos DB for .NET in action

Once text, audio, and video files have been analyzed by the different Azure cloud services, we store the analysis results in Azure Cosmos DB. This lets us connect to the data easily from Power BI and extend the data model in the future. The code snippet below shows how we implemented a service class that uses a CosmosClient instance from the new Azure Cosmos DB client library for .NET:

```csharp
using System.Threading.Tasks;
using Azure.Cosmos;
using Microsoft.Extensions.Logging;

public sealed class CosmosDbDataService<T> : IDataService<T> where T : class, IEntity
{
    private readonly ICosmosDbDataServiceConfiguration _dataServiceConfiguration;
    private readonly CosmosClient _client;
    private readonly ILogger<CosmosDbDataService<T>> _log;

    //Shortened for brevity

    private CosmosContainer GetContainer()
    {
        var database = _client.GetDatabase(_dataServiceConfiguration.DatabaseName);
        var container = database.GetContainer(_dataServiceConfiguration.ContainerName);
        return container;
    }

    public async Task<T> AddAsync(T newEntity)
    {
        try
        {
            CosmosContainer container = GetContainer();
            ItemResponse<T> createResponse = await container.CreateItemAsync(newEntity);
            return createResponse.Value;
        }
        catch (CosmosException ex)
        {
            // Pass the exception itself so the stack trace reaches the logs
            _log.LogError(ex, "New entity with ID: {EntityId} was not added successfully", newEntity.Id);
            if (ex.ErrorCode != "404")
            {
                throw;
            }
            return null;
        }
    }
}
```

To follow lifetime management best practices, the CosmosClient type is registered as a singleton in the IoC container.
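Cosmos DB requires every item to carry an identifier in a property named exactly `id`. The sketch below shows an entity that satisfies this. It assumes that `IEntity` exposes a string `Id` and that items are serialized with System.Text.Json; if the client is configured with a different serializer or naming policy, the attribute would differ. `ConversationAnalysisDocument` and its properties are hypothetical, not the project's actual model.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json.Serialization;

// Hypothetical shape of the IEntity contract used by CosmosDbDataService<T>
public interface IEntity
{
    string Id { get; set; }
}

// Hypothetical analysis document; new optional properties can be added later
// without a schema migration, which is the flexibility that motivated Cosmos DB
public sealed class ConversationAnalysisDocument : IEntity
{
    // Cosmos DB expects the item identifier in a property named "id"
    [JsonPropertyName("id")]
    public string Id { get; set; } = Guid.NewGuid().ToString();

    [JsonPropertyName("fileName")]
    public string FileName { get; set; }

    [JsonPropertyName("sentimentValues")]
    public List<string> SentimentValues { get; set; } = new List<string>();
}
```

Serialized with System.Text.Json, an instance produces a document whose key is the lowercase `id` that Cosmos DB looks for.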

Azure Storage Blobs for .NET SDK in action

As mentioned at the beginning of the post, we used Azure Media Services Video Indexer to analyze the content of audio and video files. To let Video Indexer access files stored in Azure Storage, we had to append a Shared Access Signature (SAS) token to the URL that points to the blob. We created our own StorageService using a BlobServiceClient instance from the new Azure Storage Blobs client library for .NET. The snippet below shows its blob download method; the GenerateSasTokenForContainer method, which generates the SAS tokens, is not included in it:

```csharp
using System.IO;
using System.Threading.Tasks;
using Azure;
using Azure.Storage.Blobs;
using Microsoft.Extensions.Logging;

public class StorageService : IStorageService
{
    private readonly IStorageServiceConfiguration _storageServiceConfiguration;
    private readonly BlobServiceClient _blobServiceClient;
    private readonly ILogger<StorageService> _log;

    //Shortened for brevity

    public async Task DownloadBlobIfExistsAsync(Stream stream, string blobName)
    {
        try
        {
            var container = await GetBlobContainer();
            var blobClient = container.GetBlobClient(blobName);
            await blobClient.DownloadToAsync(stream);
        }
        catch (RequestFailedException ex)
        {
            _log.LogError(ex, "Cannot download document {BlobName}", blobName);
            // ErrorCode holds a service code such as "BlobNotFound"; the HTTP status is in Status
            if (ex.Status != 404)
            {
                throw;
            }
        }
    }
}
```
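The snippet above only includes the download method. A method like `GenerateSasTokenForContainer` (called later in `TextFileProcessingService`) could look roughly like the sketch below, assuming the service has the storage account name and key available. The `SasTokenSketch` class, its parameters, and the chosen permission and lifetime are hypothetical; `BlobSasBuilder` and `StorageSharedKeyCredential` come from the Azure.Storage.Blobs package.

```csharp
using System;
using Azure.Storage;
using Azure.Storage.Sas;

public static class SasTokenSketch
{
    // Hypothetical helper: returns a read-only SAS token (without a leading '?')
    // that covers every blob in the given container for a limited time
    public static string GenerateReadOnlyContainerSas(
        string accountName, string accountKey, string containerName, TimeSpan validFor)
    {
        var sasBuilder = new BlobSasBuilder
        {
            BlobContainerName = containerName,
            Resource = "c",                                  // "c" = container-level SAS
            StartsOn = DateTimeOffset.UtcNow.AddMinutes(-5), // small buffer for clock skew
            ExpiresOn = DateTimeOffset.UtcNow.Add(validFor)
        };
        // Read is enough for an external service to fetch the file
        sasBuilder.SetPermissions(BlobContainerSasPermissions.Read);

        // Signing happens locally with the account key; no request is sent to Azure
        var credential = new StorageSharedKeyCredential(accountName, accountKey);
        return sasBuilder.ToSasQueryParameters(credential).ToString();
    }
}
```

The returned string has no leading `?`, which matches how the token is appended later as `{inputFileData.FilePath}?{sasToken}`. Read-only permission and a short lifetime limit what an external service can do with the URL. If the app authenticates with Azure AD rather than an account key, a user delegation SAS, signed with a key from `BlobServiceClient.GetUserDelegationKeyAsync`, avoids handling the account key at all.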

As with CosmosClient, we followed lifetime management best practices and registered our BlobServiceClient instance as a singleton in the IoC container.
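Because Video Indexer fetches the file itself, the blob URL (including its SAS token) is passed as a query-string value, so it has to be URL-encoded; otherwise the `&` and `=` characters of the SAS would be read as separate parameters. The sketch below builds such a request URL. It assumes the Video Indexer classic REST API's Upload Video operation (`POST https://api.videoindexer.ai/{location}/Accounts/{accountId}/Videos` with `name`, `privacy`, `videoUrl`, and `accessToken` query parameters) and an account access token obtained separately. The `VideoIndexerUrlSketch` class and the sample values are hypothetical, so check the parameters against the current Video Indexer API reference.

```csharp
using System;

public static class VideoIndexerUrlSketch
{
    private const string ApiUrl = "https://api.videoindexer.ai";

    // Hypothetical helper: builds the Upload Video request URL for a blob reachable
    // through a SAS URL. Every value is escaped because the blob URL itself
    // contains '?', '&' and '=' from the SAS token.
    public static string BuildUploadUrl(string location, string accountId, string accessToken,
                                        string videoName, string blobUrlWithSas)
    {
        string query =
            $"name={Uri.EscapeDataString(videoName)}" +
            "&privacy=Private" +
            $"&videoUrl={Uri.EscapeDataString(blobUrlWithSas)}" +
            $"&accessToken={Uri.EscapeDataString(accessToken)}";

        return $"{ApiUrl}/{Uri.EscapeDataString(location)}/Accounts/{Uri.EscapeDataString(accountId)}/Videos?{query}";
    }
}
```

The resulting URL can then be sent with a plain `HttpClient` POST request.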

Azure AI Text Analytics and Form Recognizer .NET SDK in action

Since chat history was available as text documents, we used Form Recognizer to extract their text content with OCR and Azure Cognitive Services Text Analytics to analyze that content. One of the great features of Text Analytics is sentiment analysis: after scanning a chat conversation, we get sentiment analysis results that are displayed on our Power BI dashboard. To process text files, we created the TextFileProcessingService class, which uses a TextAnalyticsClient instance from the new Text Analytics client library for .NET:

```csharp
using System;
using System.Threading.Tasks;
using Azure;
using Azure.AI.TextAnalytics;
using Microsoft.Extensions.Logging;

public class TextFileProcessingService : ITextFileProcessingService
{
    private readonly TextAnalyticsClient _textAnalyticsClient;
    private readonly IStorageService _storageService;
    private readonly IOcrScannerService _ocrScannerService;
    private readonly ILogger<TextFileProcessingService> _log;

    //Shortened for brevity

    public async Task<FileAnalysisResult> AnalyzeFileContentAsync(InputFileData inputFileData)
    {
        if (inputFileData.FileContentType == FileContentType.PDF)
        {
            // Append a SAS token so that Form Recognizer can read the PDF from Blob Storage
            var sasToken = _storageService.GenerateSasTokenForContainer();
            inputFileData.FilePath = $"{inputFileData.FilePath}?{sasToken}";

            // Run OCR on the PDF and get its text content
            var textFromTheInputDocument = await _ocrScannerService.ScanDocumentAndGetResultsAsync(inputFileData.FilePath);

            try
            {
                DocumentSentiment sentimentAnalysisResult = await _textAnalyticsClient.AnalyzeSentimentAsync(textFromTheInputDocument);
                var fileAnalysisResult = new FileAnalysisResult();
                fileAnalysisResult.SentimentValues.Add(sentimentAnalysisResult.Sentiment.ToString());
                return fileAnalysisResult;
            }
            catch (RequestFailedException ex)
            {
                _log.LogError(ex, "An error occurred when analyzing sentiment with {Client} service", nameof(TextAnalyticsClient));
                // Rethrow instead of falling through to the misleading ArgumentException below
                throw;
            }
        }

        throw new ArgumentException("Input file should be either TXT or PDF file.");
    }
}
```

Note that here, again, we use the StorageService instance to get a Shared Access Signature, which makes it possible for Form Recognizer to access the PDF file stored in Blob Storage; Text Analytics itself only receives the extracted text. As before, following lifetime management best practices, we registered the TextAnalyticsClient instance as a singleton in the IoC container.
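Long chat transcripts can exceed the Text Analytics per-document size limit, in which case a single `AnalyzeSentimentAsync` call fails. One way to handle this, sketched below under stated assumptions, is to split the OCR output on line boundaries and send the pieces with `AnalyzeSentimentBatchAsync`. The limits used here (5,120 characters per document, 10 documents per sentiment request) are assumptions to check against the current Text Analytics data limits, and the `SentimentChunkingSketch` class is hypothetical, not part of the project.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using Azure;
using Azure.AI.TextAnalytics;

public static class SentimentChunkingSketch
{
    // Assumed limits; verify them against the current service documentation
    public const int MaxDocumentLength = 5120;
    public const int MaxDocumentsPerRequest = 10;

    // Splits text on line boundaries into chunks of at most maxLength UTF-16 code units.
    // string.Length is never smaller than the number of text elements the service counts,
    // so the limit is respected; lines longer than maxLength are cut into fixed-size pieces.
    public static List<string> SplitIntoChunks(string text, int maxLength = MaxDocumentLength)
    {
        IEnumerable<string> pieces = text
            .Split('\n')
            .Select(line => line.TrimEnd('\r'))
            .SelectMany(line => Enumerable
                .Range(0, Math.Max(1, (line.Length + maxLength - 1) / maxLength))
                .Select(i => line.Substring(i * maxLength, Math.Min(maxLength, line.Length - i * maxLength))));

        var chunks = new List<string>();
        var current = new StringBuilder();
        foreach (string piece in pieces)
        {
            // +1 accounts for the '\n' separator re-inserted between pieces
            if (current.Length > 0 && current.Length + 1 + piece.Length > maxLength)
            {
                chunks.Add(current.ToString());
                current.Clear();
            }
            if (current.Length > 0)
            {
                current.Append('\n');
            }
            current.Append(piece);
        }
        if (current.Length > 0)
        {
            chunks.Add(current.ToString());
        }
        return chunks;
    }

    // Sends the chunks in batches; returns one label per chunk, skipping chunks with errors
    public static async Task<List<string>> AnalyzeLongTextAsync(TextAnalyticsClient client, string text)
    {
        List<string> chunks = SplitIntoChunks(text);
        var labels = new List<string>();
        for (int i = 0; i < chunks.Count; i += MaxDocumentsPerRequest)
        {
            IEnumerable<string> batch = chunks.Skip(i).Take(MaxDocumentsPerRequest);
            Response<AnalyzeSentimentResultCollection> response = await client.AnalyzeSentimentBatchAsync(batch);
            foreach (AnalyzeSentimentResult result in response.Value)
            {
                if (!result.HasError)
                {
                    labels.Add(result.DocumentSentiment.Sentiment.ToString());
                }
            }
        }
        return labels;
    }
}
```

How per-chunk labels are combined into one value per conversation (majority vote, worst case, and so on) is a product decision; the sketch simply returns all of them, which fits the list-valued `SentimentValues` property.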

We used the Form Recognizer SDK to run OCR on the PDF documents and extract their text content:

```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using Azure.AI.FormRecognizer;
using Azure.AI.FormRecognizer.Models;
using Microsoft.Extensions.Logging;

public class OcrScannerService : IOcrScannerService
{
    private readonly FormRecognizerClient _formRecognizerClient;
    private readonly ILogger<OcrScannerService> _log;

    //Shortened for brevity

    public async Task<string> ScanDocumentAndGetResultsAsync(string documentUrl)
    {
        // Start the long-running content recognition and wait until it completes
        FormPageCollection formPages = await _formRecognizerClient
            .StartRecognizeContentFromUriAsync(new Uri(documentUrl))
            .WaitForCompletionAsync();

        // Concatenate the recognized lines of every page, one line per row
        var sb = new StringBuilder();
        foreach (FormPage page in formPages)
        {
            foreach (FormLine line in page.Lines)
            {
                sb.AppendLine(line.Text);
            }
        }

        return sb.ToString();
    }
}
```

Here too, we followed best practices and registered the FormRecognizerClient instance as a singleton in the IoC container.
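Registering all four clients as singletons could look roughly like the sketch below. It assumes an in-process Azure Functions app with the Microsoft.Azure.Functions.Extensions package, the Azure.Storage.Blobs 12.x, Azure.AI.TextAnalytics 5.x and Azure.AI.FormRecognizer 3.x packages, and, for Cosmos DB, the preview Azure.Cosmos package used in this article with a connection-string constructor. The `CallCenterAnalyzer` namespace, the `Startup` class, and all app setting names are hypothetical.

```csharp
using System;
using Azure;
using Azure.AI.FormRecognizer;
using Azure.AI.TextAnalytics;
using Azure.Cosmos;
using Azure.Storage.Blobs;
using Microsoft.Azure.Functions.Extensions.DependencyInjection;
using Microsoft.Extensions.DependencyInjection;

[assembly: FunctionsStartup(typeof(CallCenterAnalyzer.Startup))]

namespace CallCenterAnalyzer
{
    // Hypothetical startup class; the setting names are placeholders
    public class Startup : FunctionsStartup
    {
        public override void Configure(IFunctionsHostBuilder builder)
        {
            // Azure SDK clients are thread-safe, so one shared instance per client type
            builder.Services.AddSingleton(_ =>
                new CosmosClient(Setting("CosmosDbConnectionString")));
            builder.Services.AddSingleton(_ =>
                new BlobServiceClient(Setting("StorageConnectionString")));
            builder.Services.AddSingleton(_ =>
                new TextAnalyticsClient(new Uri(Setting("TextAnalyticsEndpoint")),
                                        new AzureKeyCredential(Setting("TextAnalyticsKey"))));
            builder.Services.AddSingleton(_ =>
                new FormRecognizerClient(new Uri(Setting("FormRecognizerEndpoint")),
                                         new AzureKeyCredential(Setting("FormRecognizerKey"))));
        }

        // Reads an app setting, which the Functions host exposes as an environment variable
        private static string Setting(string name) =>
            Environment.GetEnvironmentVariable(name)
            ?? throw new InvalidOperationException($"Missing app setting '{name}'.");
    }
}
```

Because factories are registered, each client is created once, on first resolution, and then shared across function invocations. The `AddAzureClients` builder from Microsoft.Extensions.Azure is an alternative for the Storage, Text Analytics, and Form Recognizer clients.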

Reporting collected data

Below is a fragment of the Power BI dashboard with the collected data:

Full project available on GitHub

The project described in this article is available on my GitHub under this link. If you would like to see the implementation details or experiment with the project yourself, feel free to fork the repository. If you want to learn more about the Text Analytics SDK, see the Text Analytics service documentation; if you are interested in lifetime management, refer to the Lifetime management for Azure SDK .NET clients blog post.

Azure SDK Blog Contributions

Thank you for reading this Azure SDK blog post! We hope that you learned something new, and we welcome you to share this post. We are open to Azure SDK blog contributions. Please contact us at azsdkblog@microsoft.com with your topic and we'll get you set up as a guest blogger.

Azure SDK Links

- Azure SDK Website: aka.ms/azsdk

- Azure SDK Intro (3 minute video): aka.ms/azsdk/intro

- Azure SDK Intro Deck (PowerPoint deck): aka.ms/azsdk/intro/deck

- Azure SDK Releases: aka.ms/azsdk/releases

- Azure SDK Blog: aka.ms/azsdk/blog

- Azure SDK Twitter: twitter.com/AzureSDK

- Azure SDK Design Guidelines: aka.ms/azsdk/guide

- Azure SDKs & Tools: azure.microsoft.com/downloads

- Azure SDK Central Repository: github.com/azure/azure-sdk

- Azure SDK for .NET: github.com/azure/azure-sdk-for-net

- Azure SDK for Java: github.com/azure/azure-sdk-for-java

- Azure SDK for Python: github.com/azure/azure-sdk-for-python

- Azure SDK for JavaScript/TypeScript: github.com/azure/azure-sdk-for-js

- Azure SDK for Android: github.com/Azure/azure-sdk-for-android

- Azure SDK for iOS: github.com/Azure/azure-sdk-for-ios

- Azure SDK for Go: github.com/Azure/azure-sdk-for-go

- Azure SDK for C: github.com/Azure/azure-sdk-for-c

- Azure SDK for C++: github.com/Azure/azure-sdk-for-cpp

Is it possible to do this with a live conversation between an agent and a customer? This could help document the conversation instead of the agent typing in the details.

Thank you for the comment. Just to clarify: are you asking about real-time conversation analysis, or about recording the conversation between the agent and the customer?

This solution analyzes text, audio, and video files once conversations have ended. Of course, you could extend it to analyze conversations in real time; to pass the real-time data, you could use SignalR Service, for instance.
