Until recently, building real-time voice AI for production was challenging. Developers faced hurdles such as managing audio streams and ensuring low-latency performance.

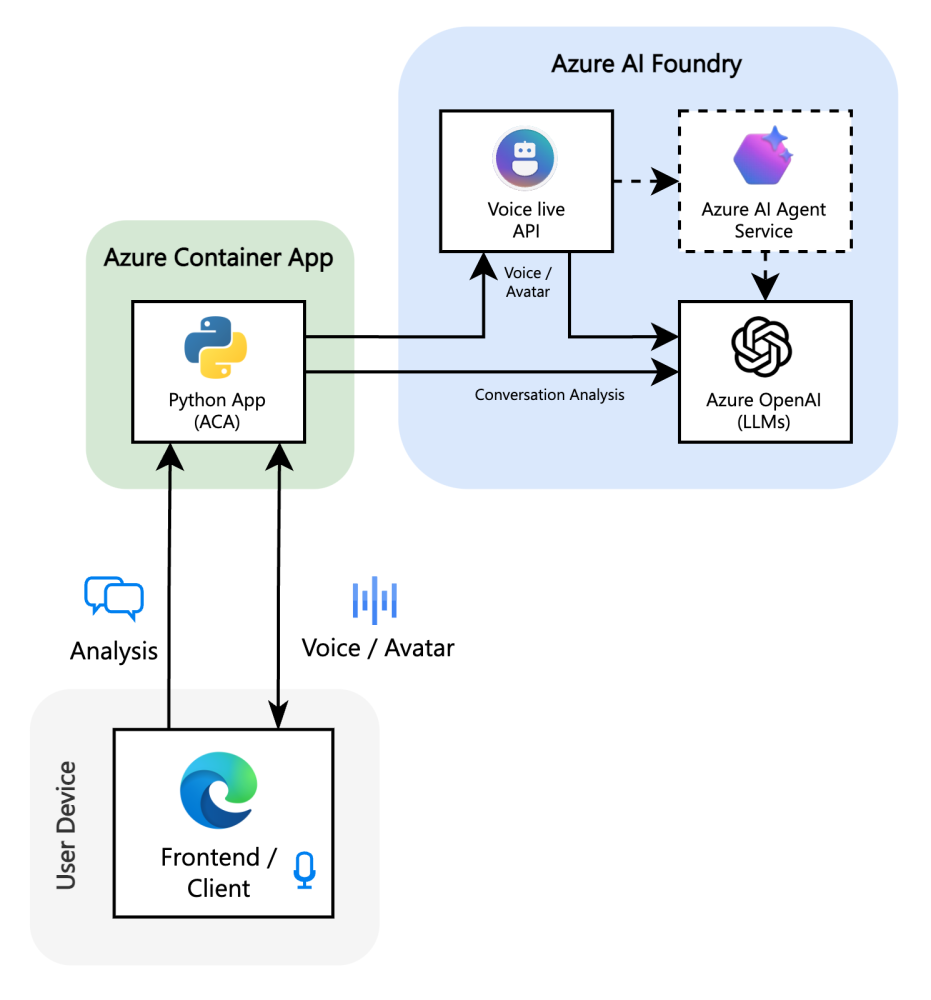

That landscape is changing. The general availability of the Azure Voice Live API marks a turning point, providing a unified abstraction layer that simplifies the development of real-time voice and avatar experiences. This shift inspired me to build a reference implementation called the AI Sales Coach to demonstrate how these new capabilities can be applied to solve a practical business challenge: skill development.

A Practical Application: The AI Sales Coach

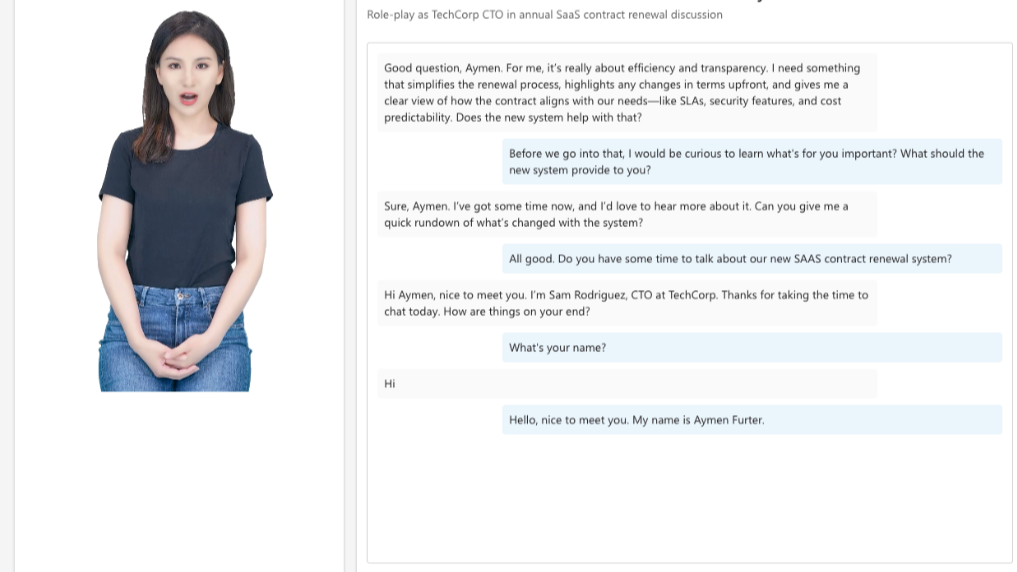

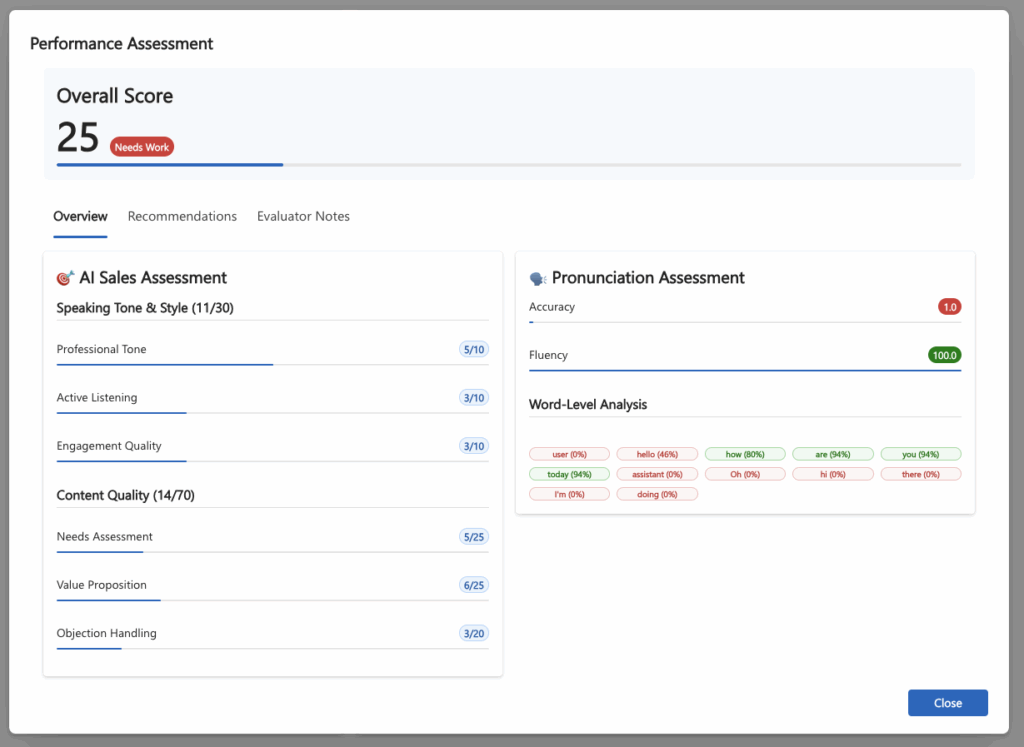

Sales training is a universal need, making it an ideal use case. The AI Sales Coach application simulates sales conversations, allowing a user to select a scenario and engage with an AI-powered virtual customer, complete with a voice and a lifelike avatar. A sales professional can practice their pitch, handle objections, and navigate a realistic dialogue. Once the simulation ends, the application provides a performance analysis, turning the experience into a powerful learning tool.

Session Configuration

When the connection to the Voice Live API is opened, the backend constructs a session configuration message. This message sets up the modalities, voice, avatar, and audio settings.

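```python
from typing import Any, Dict

# `config` is the backend's settings mapping, defined elsewhere in the application.


def _build_session_config(self) -> Dict[str, Any]:
    """Build the base session configuration."""
    return {
        "type": "session.update",
        "session": {
            # Exchange both text and audio with the model.
            "modalities": ["text", "audio"],
            # Semantic voice activity detection decides when the user has finished speaking.
            "turn_detection": {"type": "azure_semantic_vad"},
            # Server-side cleanup of the incoming microphone audio.
            "input_audio_noise_reduction": {"type": "azure_deep_noise_suppression"},
            "input_audio_echo_cancellation": {"type": "server_echo_cancellation"},
            # Avatar character and pose rendered alongside the voice.
            "avatar": {
                "character": "lisa",
                "style": "casual-sitting",
            },
            # Voice name and type come from application configuration.
            "voice": {
                "name": config["azure_voice_name"],
                "type": config["azure_voice_type"],
            },
        },
    }
```

This message is sent as the first step in establishing the conversation.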

- `modalities` defines whether text, audio, or both are active.
- `turn_detection` specifies how the system decides when the speaker has finished.
- Noise reduction and echo cancellation improve input audio quality.
- `avatar` and `voice` personalize the agent’s presence.
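Because `session.update` has to go out before any other event, you can make that ordering explicit by wrapping the connection in a small helper. The sketch below assumes a generic `send` callable that transmits one JSON text frame, such as a WebSocket client's send method. `open_conversation` and `FakeConnection` are hypothetical names used only for illustration. They are not part of the sample repository or any Azure SDK.

```python
import json
from typing import Any, Callable, Dict, List


def open_conversation(send: Callable[[str], None], session_config: Dict[str, Any]) -> None:
    """Send the session configuration as the very first message (hypothetical helper)."""
    if session_config.get("type") != "session.update":
        raise ValueError("the first message must be a session.update event")
    # Assumption: events travel as JSON-encoded text frames over the connection.
    send(json.dumps(session_config))


class FakeConnection:
    """Hypothetical stand-in for a WebSocket connection that records outgoing frames."""

    def __init__(self) -> None:
        self.sent: List[str] = []

    def send(self, frame: str) -> None:
        self.sent.append(frame)


conn = FakeConnection()
open_conversation(conn.send, {
    "type": "session.update",
    "session": {"modalities": ["text", "audio"], "turn_detection": {"type": "azure_semantic_vad"}},
})
print(json.loads(conn.sent[0])["type"])  # session.update
```

The fake connection makes the ordering testable without a network. In a real backend you would pass the send method of whichever WebSocket client you use.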

Adding Local Agent Behavior

Session settings alone do not define the agent’s behavior. The backend also injects the model, instructions, temperature, and maximum output tokens into the session.

```python
from typing import Any, Dict


def _add_local_agent_config(self, config_message: Dict[str, Any], agent_config: Dict[str, Any]) -> None:
    """Add local agent configuration to session config."""
    session = config_message["session"]
    # Fall back to the default model deployment if the agent does not name one.
    session["model"] = agent_config.get("model", config["model_deployment_name"])
    # Role-play prompt that defines how the agent behaves.
    session["instructions"] = agent_config["instructions"]
    # Sampling temperature: higher values give more varied responses.
    session["temperature"] = agent_config["temperature"]
    # Upper bound on the tokens generated per response.
    session["max_response_output_tokens"] = agent_config["max_tokens"]
```

With this, we can dynamically swap models (e.g., GPT-4o vs. GPT-5), adjust creativity with temperature, and add prompt instructions that define how the AI should behave.
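The following standalone sketch shows how one base message can drive different agents. It illustrates the merge step and is not the sample's code. `add_agent_config`, `DEFAULT_MODEL`, and both agent dictionaries are hypothetical. Unlike the method above, it returns a copy instead of mutating the message, so a single base configuration can be reused across scenarios.

```python
from typing import Any, Dict

# Hypothetical default deployment name; the sample reads config["model_deployment_name"].
DEFAULT_MODEL = "gpt-4o"


def add_agent_config(config_message: Dict[str, Any], agent_config: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the session.update message with agent behavior merged in."""
    session = dict(config_message["session"])  # shallow copy keeps the base message untouched
    session["model"] = agent_config.get("model", DEFAULT_MODEL)
    session["instructions"] = agent_config["instructions"]
    session["temperature"] = agent_config["temperature"]
    session["max_response_output_tokens"] = agent_config["max_tokens"]
    return {**config_message, "session": session}


base = {"type": "session.update", "session": {"modalities": ["text", "audio"]}}

# Two hypothetical agents: one pins a model, the other falls back to the default.
skeptical_buyer = {"model": "gpt-5", "instructions": "You are a skeptical IT buyer.",
                   "temperature": 0.8, "max_tokens": 200}
friendly_buyer = {"instructions": "You are a friendly procurement lead.",
                  "temperature": 0.6, "max_tokens": 150}

a = add_agent_config(base, skeptical_buyer)
b = add_agent_config(base, friendly_buyer)
print(a["session"]["model"], b["session"]["model"])  # gpt-5 gpt-4o
```

Returning a new dictionary trades a little copying for safety. Two concurrent sessions cannot accidentally share one agent's instructions.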

The Instructions

The instructions enforce conversational realism, ensuring the AI responds like a real person in a role-play setting.

```python
BASE_INSTRUCTIONS = """
CRITICAL INTERACTION GUIDELINES:
- Keep responses SHORT and conversational (3 sentences max, as if speaking on phone)
- ALWAYS stay in character, never break role
- Simulate natural human speech patterns with pauses, um, well, occasional hesitation
- Respond as a real person would in this business context
- Use natural phone conversation style, direct but personable
- Show genuine emotions and reactions appropriate to the situation
- Ask follow-up questions to keep the conversation flowing naturally
- Avoid overly formal or robotic language
"""
```

These are appended to the role-play scenario instructions to guide the AI toward natural, in-character responses.
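Here is a minimal sketch of that composition step, assuming plain string concatenation with the scenario prompt first and the shared guidelines after it. `build_agent_instructions` and the scenario text are hypothetical, and `BASE_INSTRUCTIONS` is abbreviated.

```python
# Abbreviated copy of the guidelines above, for illustration.
BASE_INSTRUCTIONS = """
CRITICAL INTERACTION GUIDELINES:
- Keep responses SHORT and conversational (3 sentences max, as if speaking on phone)
- ALWAYS stay in character, never break role
"""


def build_agent_instructions(scenario_instructions: str, base: str = BASE_INSTRUCTIONS) -> str:
    """Hypothetical helper: append the shared guidelines to a scenario-specific prompt."""
    return scenario_instructions.strip() + "\n\n" + base.strip()


# Hypothetical scenario text.
scenario = ("You are Dana, a CFO at a mid-sized retailer evaluating a new analytics platform. "
            "You are worried about cost.")
instructions = build_agent_instructions(scenario)
print(instructions)
```

The resulting string is what would populate `agent_config["instructions"]` before it is merged into the session.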

Feedback System

At the end of the conversation, the full transcript is sent to a GPT-4o model for evaluation. This “LLM-as-judge” pattern allows us to display a detailed scorecard covering key competencies.
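The judging step can be sketched as two pure functions. One turns the transcript into chat messages for the judge model, and the other validates the JSON reply. This is an assumed shape, not the sample's implementation. The competency names, the 1 to 10 scale, the speaker labels, and the JSON schema are all hypothetical. The actual call to the GPT-4o deployment is omitted, and `canned` stands in for the model's reply.

```python
import json
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical rubric; the sample's actual competencies may differ.
COMPETENCIES = ["discovery", "objection_handling", "value_articulation", "closing"]
# In the simulation the human plays the salesperson and the AI plays the customer.
SPEAKERS = {"user": "Salesperson", "assistant": "Customer"}


def build_judge_messages(transcript: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Build chat messages asking a judge model to score a role-play transcript."""
    dialogue = "\n".join(f"{SPEAKERS[t['role']]}: {t['text']}" for t in transcript)
    system = (
        "You are a sales coach. Score the Salesperson from 1 to 10 on each competency "
        f"({', '.join(COMPETENCIES)}). Reply with JSON only: "
        '{"scores": {"<competency>": <int>}, "feedback": "<string>"}'
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": dialogue}]


@dataclass
class Scorecard:
    scores: Dict[str, int]
    feedback: str

    @property
    def overall(self) -> float:
        return sum(self.scores.values()) / len(self.scores)


def parse_scorecard(raw: str) -> Scorecard:
    """Parse the judge's JSON reply; raises KeyError or ValueError if it is malformed."""
    data = json.loads(raw)
    scores = {name: int(data["scores"][name]) for name in COMPETENCIES}
    if any(not 1 <= s <= 10 for s in scores.values()):
        raise ValueError("scores must be between 1 and 10")
    return Scorecard(scores=scores, feedback=data["feedback"])


transcript = [
    {"role": "user", "text": "Hi, I'd like to show you our analytics platform."},
    {"role": "assistant", "text": "Um, we already have a vendor."},
]
messages = build_judge_messages(transcript)
canned = ('{"scores": {"discovery": 6, "objection_handling": 7, "value_articulation": 8, '
          '"closing": 5}, "feedback": "Ask more discovery questions early."}')
card = parse_scorecard(canned)
print(card.overall)  # 6.5
```

Validating the reply before rendering it means a malformed judge response fails loudly instead of producing a half-empty scorecard.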

The Manifestation of AI

A key feature of the sample implementation is the avatar. This is not a gimmick; it addresses a fundamental design question we must now ask about AI: How should it manifest?

In a sales simulation, giving the AI a face makes the interaction more personal and realistic, improving the training’s effectiveness. However, an avatar is not always the right choice. In a high-stress customer support scenario, it might be distracting or inappropriate. This project highlights the need to be intentional about the “body” we give our AI, tailoring its manifestation to the specific use case and the user’s emotional state.
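One way to act on this is to make the avatar a per-use-case setting rather than a fixed part of the session. The sketch below assumes that leaving the `avatar` block out of `session.update` produces a voice-only session. `session_for_use_case` and the use-case labels are hypothetical.

```python
from typing import Any, Dict

# Hypothetical labels; which use cases get a face is a product decision, not an API rule.
AVATAR_USE_CASES = {"sales_roleplay", "interview_practice"}


def session_for_use_case(use_case: str) -> Dict[str, Any]:
    """Build a session.update message, attaching an avatar only where a face helps."""
    session: Dict[str, Any] = {
        "modalities": ["text", "audio"],
        "turn_detection": {"type": "azure_semantic_vad"},
    }
    if use_case in AVATAR_USE_CASES:
        # Same avatar settings as the sample's session configuration.
        session["avatar"] = {"character": "lisa", "style": "casual-sitting"}
    # Otherwise the avatar block is omitted (assumed to yield a voice-only session).
    return {"type": "session.update", "session": session}


print("avatar" in session_for_use_case("sales_roleplay")["session"])      # True
print("avatar" in session_for_use_case("support_escalation")["session"])  # False
```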

The Azure Voice Live API is the core of the system, handling the real-time, speech-to-speech conversation and avatar simulation. It serves as an abstraction layer, allowing different voice and language models to be used as the underlying reasoning engine without rewriting the application.

Final Thoughts

We have moved from text-based assistants to fully interactive, voice-driven experiences that can collaborate in increasingly human-like ways. The technology to build these systems is no longer a future concept; it’s here and ready to be deployed.

This project is a demonstration of what is now possible. I encourage you to explore the repository, envision how these capabilities could be used in your own organization, and start building. For a deeper dive into the Azure Voice Live API, check the official documentation.

The full code for this technology demonstrator is available on GitHub. You can deploy it to your own Azure subscription in minutes using the Azure Developer CLI (azd up).

GitHub Repository: https://github.com/Azure-Samples/voicelive-api-salescoach
