Learn how to deploy a self-hosted AI assistant that leverages Microsoft Learn content via the Model Context Protocol (MCP) and Azure OpenAI. It’s fast, secure, and ready for developer use in real-world apps.

Prerequisite

This guide assumes you already have an Azure OpenAI resource provisioned in your subscription, with a deployed model (e.g., gpt-4, gpt-4.1, or gpt-35-turbo). If not, follow the Azure OpenAI Quickstart before proceeding.

The goal

Imagine being able to ask Microsoft Learn, “How do I monitor AKS workloads?” or “What are best practices for Azure Bicep deployments?” and instantly get a precise, summarized answer powered by GPT-4.1 and grounded in official Microsoft Learn documentation.

This blog post walks through deploying a production-ready AI assistant, using:

- The Microsoft Learn MCP server, which exposes documentation over the Model Context Protocol (MCP)

- Azure OpenAI (GPT-4.1 Mini deployment)

- Azure Container Apps for secure, serverless hosting

- Docker and Azure Container Registry (ACR) for packaging and image delivery

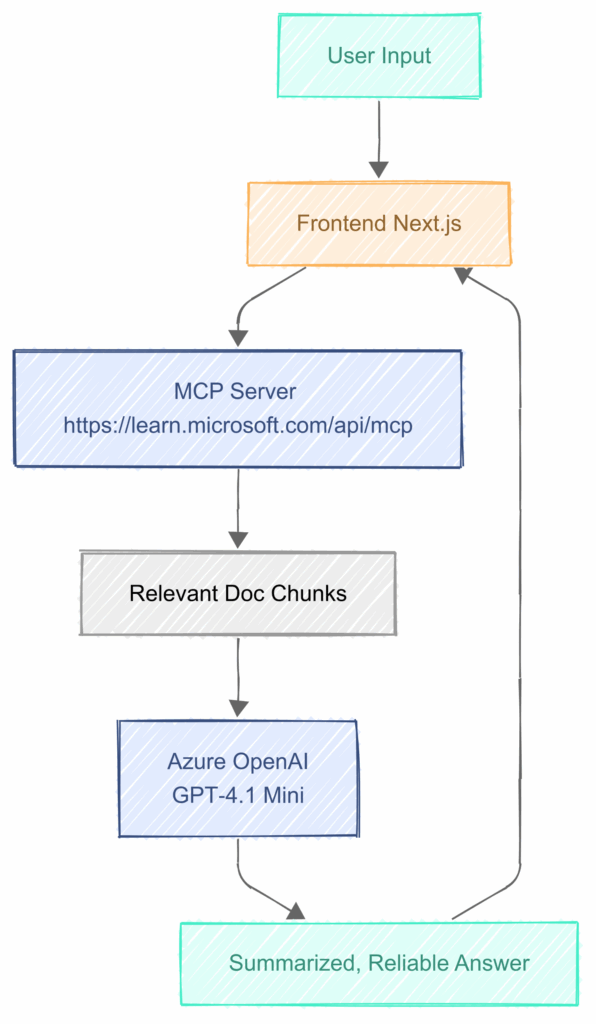

Architecture overview

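The flow is based on the Model Context Protocol (MCP), an open standard that lets LLM applications and copilots call external tools and data sources. A user's question goes to the Next.js backend, which queries the Microsoft Learn MCP server for relevant documentation and then sends the question plus that context to Azure OpenAI.

The result: an agent that’s grounded in official content and capable of serving precise answers to developers.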

Deploying your own instance

Let’s walk through building and deploying your own instance of mslearn-mcp-chat, a lightweight web app powered by Next.js.

Step 1: Clone the project

```bash
git clone https://github.com/passadis/mslearn-mcp-chat.git
cd mslearn-mcp-chat
```

Step 2: Auto-discover Azure OpenAI config

If you’ve already deployed an Azure OpenAI resource and a GPT-4 deployment, you can auto-discover the endpoint, key, and deployment name and generate .env.local like this:

```bash
#!/bin/bash

# Get resource group and AOAI resource name
RESOURCE_GROUP=$(az cognitiveservices account list \
  --query "[?kind=='OpenAI'].resourceGroup" -o tsv | head -n1)

AOAI_RESOURCE_NAME=$(az cognitiveservices account list \
  --query "[?kind=='OpenAI'].name" -o tsv | head -n1)

# Get endpoint
AOAI_ENDPOINT=$(az cognitiveservices account show \
  --name "$AOAI_RESOURCE_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --query "properties.endpoint" -o tsv)

# Get API key
AOAI_KEY=$(az cognitiveservices account keys list \
  --name "$AOAI_RESOURCE_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --query "key1" -o tsv)

# Get deployment name (adjust model name filter if needed)
DEPLOYMENT_NAME=$(az cognitiveservices account deployment list \
  --name "$AOAI_RESOURCE_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --query "[?contains(properties.model.name, 'gpt-4')].name" -o tsv | head -n1)

# Write to .env.local
cat <<EOF > .env.local
AZURE_OPENAI_KEY=$AOAI_KEY
AZURE_OPENAI_ENDPOINT=$AOAI_ENDPOINT
AZURE_OPENAI_DEPLOYMENT_NAME=$DEPLOYMENT_NAME
EOF

echo ".env.local created"
cat .env.local
```

Step 3: Export for CLI usage

Because the shell doesn’t load .env.local automatically, create a temporary exported version and source it:

```bash
sed 's/^/export /' .env.local > .env.exported
source .env.exported
```

Now you can use those variables in your az commands.
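The sed approach works for the simple KEY=VALUE lines that Step 2 writes. If discovery came back empty (for example, no deployment matched the `gpt-4` filter), later commands fail in confusing ways, so it can help to check the values up front. Below is a minimal sketch, assuming bash or a POSIX sh and values without spaces or quotes; `load_env_file` and `require_vars` are hypothetical helper names, not part of the repo. It uses `set -a` so every variable assigned while sourcing is exported, which avoids the intermediate file.

```shell
# Hypothetical helper: source an env file with auto-export enabled.
load_env_file() {
  local f
  if [ ! -f "$1" ]; then
    echo "env file not found: $1" >&2
    return 1
  fi
  # "." searches PATH for bare names, so force a path with a slash
  case "$1" in
    */*) f="$1" ;;
    *) f="./$1" ;;
  esac
  set -a          # export every variable assigned from here on
  . "$f"
  set +a
}

# Hypothetical helper: fail if any named variable is unset or empty.
require_vars() {
  local _var _value _missing=0
  for _var in "$@"; do
    eval "_value=\${$_var:-}"
    if [ -z "$_value" ]; then
      echo "missing value for $_var" >&2
      _missing=1
    fi
  done
  return "$_missing"
}
```

Usage: `load_env_file .env.local && require_vars AZURE_OPENAI_KEY AZURE_OPENAI_ENDPOINT AZURE_OPENAI_DEPLOYMENT_NAME` before moving on to Step 4.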

Step 4: Create Azure resources
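Registry names must be globally unique across Azure and may contain only alphanumeric characters (5 to 50), so `acrmcpchat` may already be taken by someone else. Here is a small sketch for generating a candidate name with a random suffix; `is_valid_acr_name` and `make_acr_name` are hypothetical helpers, and restricting to lowercase is a conservative choice so the name matches the lowercase login server used in the docker commands.

```shell
# Conservative check: 5-50 characters, lowercase letters and digits only.
is_valid_acr_name() {
  case "$1" in
    '' | *[!a-z0-9]*) return 1 ;;
  esac
  [ "${#1}" -ge 5 ] && [ "${#1}" -le 50 ]
}

# Append a random 0-65535 suffix read from /dev/urandom to a base name.
make_acr_name() {
  local suffix name
  suffix=$(od -An -N2 -tu2 /dev/urandom | tr -d ' \n')
  name="$1$suffix"
  if is_valid_acr_name "$name"; then
    printf '%s\n' "$name"
  else
    echo "invalid ACR name: $name" >&2
    return 1
  fi
}
```

Usage: `ACR_NAME=$(make_acr_name acrmcpchat)`, then `az acr check-name --name "$ACR_NAME" --query nameAvailable -o tsv` prints `true` when the name is free. If you use a generated name, substitute it for `acrmcpchat` in the commands that follow.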

```bash
az group create --name rg-mcp-chat --location eastus

az acr create --name acrmcpchat \
  --resource-group rg-mcp-chat \
  --sku Basic --admin-enabled true
```

Step 5: Dockerize and push the image

Create a Dockerfile

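```dockerfile
# Node.js 20 base image
FROM node:20

WORKDIR /app

# Copy the source, install dependencies, and build the Next.js app
COPY . .
RUN npm install && npm run build

# npm start (typically next start) listens on port 3000 by default
EXPOSE 3000

CMD ["npm", "start"]
```

Build & Push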
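Before building, note that the Dockerfile’s `COPY . .` copies everything in the project folder, including the `.env.local` (and `.env.exported`) files holding your API key. Since Step 7 passes those values to the container as environment variables, a `.dockerignore` can keep them, along with local `node_modules` and build output, out of the image. A minimal sketch, assuming the app only needs these values at runtime; `write_dockerignore` is a hypothetical helper, and it overwrites any existing `.dockerignore`, so merge by hand if the repo already ships one.

```shell
# Hypothetical helper: write a .dockerignore into the given directory.
write_dockerignore() {
  local dir="${1:-.}"
  cat > "$dir/.dockerignore" <<'EOF'
# Local secrets: supplied as Container Apps env vars instead
.env.local
.env.exported
# Recreated inside the image by npm install / npm run build
node_modules
.next
.git
EOF
}
```

Usage: run `write_dockerignore .` in the project root, then build as below.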

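```bash
# Container Apps runs linux/amd64 images; --platform matters when building on ARM machines such as Apple silicon
docker build --platform linux/amd64 -t acrmcpchat.azurecr.io/mcp-chat:latest .
az acr login --name acrmcpchat
docker push acrmcpchat.azurecr.io/mcp-chat:latest
```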

Step 6: Create a Container App Environment

```bash
az containerapp env create \
  --name env-mcp-chat \
  --resource-group rg-mcp-chat \
  --location eastus
```

Step 7: Deploy the Container App

```bash
az containerapp create \
  --name mcp-chat-app \
  --resource-group rg-mcp-chat \
  --environment env-mcp-chat \
  --image acrmcpchat.azurecr.io/mcp-chat:latest \
  --registry-server acrmcpchat.azurecr.io \
  --cpu 1.0 --memory 2.0Gi \
  --target-port 3000 \
  --ingress external \
  --env-vars \
    AZURE_OPENAI_KEY="$AZURE_OPENAI_KEY" \
    AZURE_OPENAI_ENDPOINT="$AZURE_OPENAI_ENDPOINT" \
    AZURE_OPENAI_DEPLOYMENT_NAME="$AZURE_OPENAI_DEPLOYMENT_NAME"
```

Step 8: Get the public URL

```bash
az containerapp show \
  --name mcp-chat-app \
  --resource-group rg-mcp-chat \
  --query properties.configuration.ingress.fqdn \
  --output tsv
```

The command prints the app’s hostname. Open it over HTTPS in your browser and try questions like:

- “What’s the best way to deploy Azure Functions using Bicep?”

- “How does Azure Policy work with management groups?”

- “What’s the difference between vCore and DTU in Azure SQL?”
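A newly created revision can take a little while to start serving, so the first browser request may fail. If you script the deployment, a small polling loop can wait for the app first. This is a sketch under assumptions: `wait_for_app` is a hypothetical helper, it expects the root page to answer with HTTP 200, and it checks every 5 seconds.

```shell
# Hypothetical helper: poll https://<fqdn> until it returns HTTP 200.
# $1 = FQDN printed by Step 8, $2 = max attempts (default 10).
wait_for_app() {
  local url="https://$1" max="${2:-10}" attempt=1 code
  while [ "$attempt" -le "$max" ]; do
    # curl prints 000 when it cannot connect; || true keeps set -e shells running
    code=$(curl -s -o /dev/null --max-time 10 -w '%{http_code}' "$url" || true)
    if [ "$code" = "200" ]; then
      echo "$url is up"
      return 0
    fi
    # Wait before the next attempt, but not after the last one
    if [ "$attempt" -lt "$max" ]; then sleep 5; fi
    attempt=$((attempt + 1))
  done
  echo "$url did not return 200 after $max attempts" >&2
  return 1
}
```

Usage: `wait_for_app "$(az containerapp show --name mcp-chat-app --resource-group rg-mcp-chat --query properties.configuration.ingress.fqdn --output tsv)"`.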

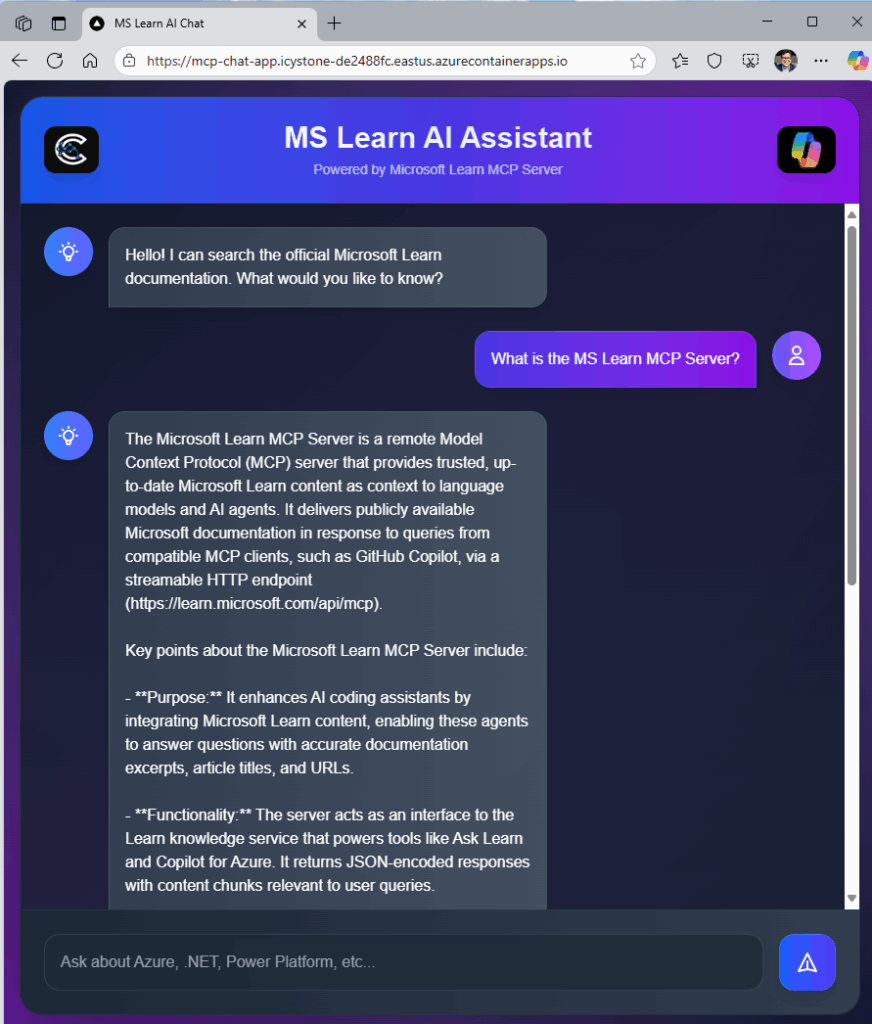

Here’s how the app looks when running:

Why Model Context Protocol (MCP) matters

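MCP is an open, JSON-RPC-based protocol that lets AI assistants call external tools. The Microsoft Learn MCP server uses it to expose a `microsoft_docs_search` tool, which grounds responses in official sources such as Microsoft Learn by returning text fragments (chunks) for use in RAG pipelines or summarization prompts.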

Sample MCP request payload (a JSON-RPC `tools/call`):

```json
{
  "jsonrpc": "2.0",
  "id": "chat-123",
  "method": "tools/call",
  "params": {
    "name": "microsoft_docs_search",
    "arguments": {
      "question": "How do I deploy AKS with Bicep?"
    }
  }
}
```

The MCP server responds with matching documentation chunks. The assistant places them in a system message and sends that context, together with the user’s question, to Azure OpenAI for synthesis.
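To make the synthesis step concrete, here is a sketch of the Azure OpenAI side of that flow: the documentation chunks go into a system message, the user’s question into a user message, and both are posted to the deployment’s chat completions endpoint. The helper names, the system prompt wording, and the `2024-10-21` api-version are assumptions rather than the repo’s actual code, and the JSON escaping is deliberately minimal (backslashes, double quotes, and newlines only).

```shell
# api-version is an assumption; use one your Azure OpenAI resource supports
AOAI_API_VERSION="${AOAI_API_VERSION:-2024-10-21}"

# Minimal JSON string escaping: backslashes, double quotes, newlines.
json_escape() {
  printf '%s' "$1" \
    | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' \
    | awk 'NR > 1 { printf "%s", "\\n" } { printf "%s", $0 }'
}

# Chat completions URL for the deployment from .env.local.
chat_completions_url() {
  local endpoint="${AZURE_OPENAI_ENDPOINT%/}"   # drop the trailing slash az returns
  printf '%s/openai/deployments/%s/chat/completions?api-version=%s\n' \
    "$endpoint" "$AZURE_OPENAI_DEPLOYMENT_NAME" "$AOAI_API_VERSION"
}

# $1 = documentation chunks from MCP, $2 = user question
chat_request_body() {
  local context question
  context=$(json_escape "$1")
  question=$(json_escape "$2")
  printf '{"messages":[{"role":"system","content":"Answer using only this Microsoft Learn context:\\n%s"},{"role":"user","content":"%s"}]}\n' \
    "$context" "$question"
}
```

Usage: `curl -sS "$(chat_completions_url)" -H "Content-Type: application/json" -H "api-key: $AZURE_OPENAI_KEY" -d "$(chat_request_body "$CHUNKS" "How do I deploy AKS with Bicep?")"`, where `CHUNKS` is a hypothetical variable holding the text returned by the MCP search. Beyond a demo, a real JSON encoder (jq, or the app’s own Node.js code) handles escaping more robustly.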

What you get

| Feature | Benefit |
|---|---|
| 🧩 MCP Integration | Answers grounded in Microsoft Learn docs |
| 🔐 AOAI Security | API key used only on the server, never exposed to the browser |
| 🚀 Container Apps | Scalable, secure hosting with no infrastructure to manage |
| 🛠️ Dev-Focused Stack | Next.js + Node + Azure CLI + ACR, fast to iterate |

Final thoughts

Whether you’re building an internal dev assistant, an Azure learning tool, or testing out custom copilots, the MCP + Azure OpenAI combo is powerful, trustworthy, and fully customizable. And thanks to Azure Container Apps, deploying it is just a few CLI commands away.
