Hello Android developers!

We’re excited to announce that version 30.9.0 of the Android emulator now supports multiple touch points, letting you test gestures and interactions that require more than one finger! Multi-touch support requires that the emulator run on a touch screen device, such as most modern Windows PCs, including the Microsoft Surface line.

Pinch, zoom, rotation etc.

Lots of common UI practices are hard to test on emulators without multi-touch support, including pinch and spread gestures common on map controls, and zooming photos, web pages, and other content.

Figure 1: Emulator multitouch recognition

Figure 2: Emulator pinch zoom in gesture recognition

Figure 3: Emulator pinch zoom out gesture recognition

Figure 4: Multitouch rotation gesture recognition

Multiple touch points

The emulator updates go beyond two-finger gestures to recognize the maximum number of touch points supported by your hardware, up to ten separate contacts.

To use multi-touch, the emulator must be running on a device with a touch screen, which most modern Windows devices have, including the Microsoft Surface line.

Devices in the Microsoft Surface family, such as the Surface Laptop, Book, Pro, or Surface Duo, have great support for multi-touch recognition, but other devices with multi-touch screens should work too. Multi-touch can enhance the user experience by simplifying interactions, and it offers a new perspective on how applications can be designed to take advantage of this feature.

Most Android application developers will have used the Android Emulator to develop, test, and debug their applications. Until now, touch interaction was limited to a single touch point, which was recognized more as a mouse click than as an actual physical touch point.

Multi-touch was extremely limited because you could not simulate complex or even simple gestures. The only multi-touch implementation simulated just two touch points by generating two corresponding orthogonally symmetrical mouse click points.

Now developers can not only receive information on each touch point but also register for and receive multi-touch gestures such as pinch zoom in, pinch zoom out, multi-finger rotation, and drag. These gestures can now be properly integrated and tested when developing Android applications.

Figure 5: Multi-touch ten fingers recognition

Open-source project

For developers interested in the underlying implementation, the QEMU emulator source is available at android.googlesource.com.

The multitouch implementation proposal is in change 1737613.

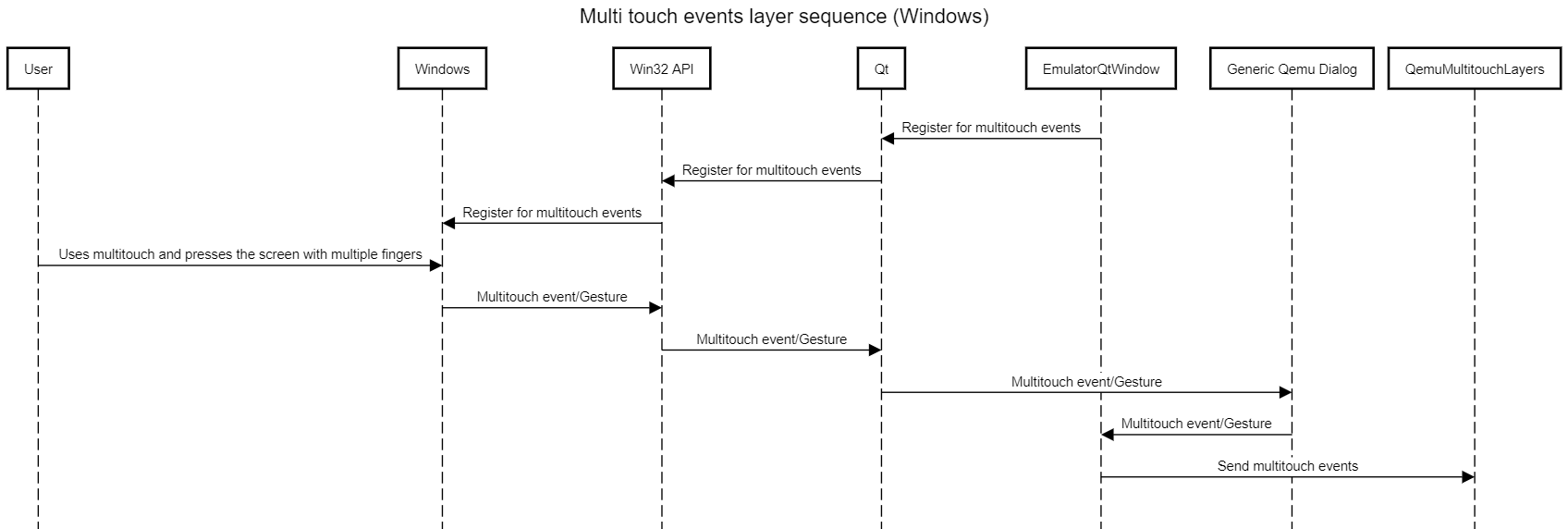

The multi-touch implementation is based on the following observations:

- Before multi-touch events can be captured and sent to the Linux kernel, the main dialog responsible for interacting with the user and showing the emulator’s main content must be registered with the OS (Windows, in this case) so that the OS knows which dialog can receive and process finger touch events.

  - The main GUI framework behind the scenes is Qt. The constructor of each QEMU dialog that should receive events must be modified to signal the OS. In our specific case, the base class for almost all dialogs in QEMU is the `EmulatorQtWindow` class. Modifying the constructor `EmulatorQtWindow::EmulatorQtWindow(QWidget* parent)` lets all sub-dialogs explicitly handle and receive multi-touch events. All events are eventually passed back to `EmulatorQtWindow` because of how Qt works. This is the main component that forwards the multi-touch events to the filtering layers.

  - The constructor `EmulatorContainer::EmulatorContainer(EmulatorQtWindow* window)` is another component that can receive multi-touch events. All the dialogs created in QEMU use these basic classes. All events first pass through them and are either consumed or forwarded. Figure 6 illustrates how events are passed through the pipeline. In our case, the `EmulatorContainer` class just forwards the multi-touch events to `EmulatorQtWindow`.

- After this initial step, events are passed down to the filtering layers. These filters process the events and deserialize, serialize, or convert them into compatible internal objects.

- After conversion and validation, touch points are pushed into the Linux kernel using the VirtIO stack. Before being pushed, they are managed internally in a buffer that defaults to a maximum of ten elements. Managing them consists of identifying previous touch points by `state` and `id`. There are at least three possible states:

  - Touch Point Pressed – This state generates a new touch point.

  - Touch Point Moved – This state updates a previous touch point.

  - Touch Point Released – This state removes the touch point from the buffer.

Please note that other states exist but are ignored. The main function responsible for managing the touch points is `multitouch_update`:

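```c
// Applies one incoming touch event to the multi-touch state and, unless the
// event asks to skip it, emits a SYN_REPORT to close the report.
void multitouch_update(MTESource source,
                       const SkinEvent* const data,
                       int dx,
                       int dy) {
    MTSState* const mts_state = &_MTSState;
    // Slot already tracking this touch point ID; -1 means the ID is not in use.
    const int slot_index = _mtsstate_get_pointer_index(mts_state, data->u.multi_touch_point.id);
    if (data->type == kEventTouchBegin) {
        // Touch Point Pressed: the ID must be new.
        if (slot_index != -1) {
            assert(1 == 2 && "Event of type kEventTouchBegin must provide new ID for touch point. ID already used");
            return;
        }
        _touch_down(mts_state, &data->u.multi_touch_point, dx, dy);
    } else if (data->type == kEventTouchUpdate) {
        // Touch Point Moved: update the existing touch point.
        _touch_update(mts_state, &data->u.multi_touch_point, dx, dy, slot_index);
    } else if (data->type == kEventTouchEnd) {
        // Touch Point Released: remove the touch point from the buffer.
        _touch_release(mts_state, slot_index);
    } else {
        // Any other state is not handled here.
        assert(1 == 2 && "Event type not supported");
        return;
    }
    if (!data->u.multi_touch_point.skip_sync) {
        _push_event(EV_SYN, LINUX_SYN_REPORT, 0);
    }
}
```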
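To make the pressed, moved, and released bookkeeping more concrete, here is a minimal sketch of a ten-element touch buffer keyed by touch point ID. It is not the emulator's code: `TouchBuffer`, `Slot`, `TouchState`, and `touch_buffer_apply` are hypothetical names chosen for illustration, and only the buffer size of ten and the three states come from the description above.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_SLOTS 10 /* the post describes a buffer defaulting to ten elements */

/* Hypothetical names for the three states described in the post. */
typedef enum { TOUCH_PRESSED, TOUCH_MOVED, TOUCH_RELEASED } TouchState;

typedef struct {
    int in_use; /* nonzero while a finger occupies this slot */
    int id;     /* touch point ID; IDs need not start at 0 or be ordered */
    int x, y;   /* last known position */
} Slot;

typedef struct {
    Slot slots[MAX_SLOTS];
} TouchBuffer;

/* Returns the index of the slot tracking `id`, or -1 if no slot tracks it. */
static int find_slot(const TouchBuffer* buf, int id) {
    for (int i = 0; i < MAX_SLOTS; i++) {
        if (buf->slots[i].in_use && buf->slots[i].id == id) return i;
    }
    return -1;
}

/* Returns the index of the first unused slot, or -1 if the buffer is full. */
static int find_free_slot(const TouchBuffer* buf) {
    for (int i = 0; i < MAX_SLOTS; i++) {
        if (!buf->slots[i].in_use) return i;
    }
    return -1;
}

/* Counts the touch points currently tracked. */
static int touch_buffer_count(const TouchBuffer* buf) {
    int count = 0;
    for (int i = 0; i < MAX_SLOTS; i++) count += buf->slots[i].in_use ? 1 : 0;
    return count;
}

/* Applies one event; returns the affected slot index, or -1 if rejected. */
static int touch_buffer_apply(TouchBuffer* buf, TouchState state, int id, int x, int y) {
    assert(buf != NULL);
    int index = find_slot(buf, id);
    switch (state) {
    case TOUCH_PRESSED:
        if (index != -1) return -1;        /* a press must bring a new ID */
        index = find_free_slot(buf);
        if (index == -1) return -1;        /* all ten slots are taken */
        buf->slots[index] = (Slot){1, id, x, y};
        return index;
    case TOUCH_MOVED:
        if (index == -1) return -1;        /* cannot move an unknown point */
        buf->slots[index].x = x;
        buf->slots[index].y = y;
        return index;
    case TOUCH_RELEASED:
        if (index == -1) return -1;        /* cannot release an unknown point */
        buf->slots[index].in_use = 0;      /* frees the slot for reuse */
        return index;
    }
    return -1; /* any other state is ignored */
}
```

In this sketch a released slot becomes available again, so a later press can reuse its index even though it carries a different ID.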

Figure 6: Multi-touch general architecture

The following information is pushed into the Linux kernel. For each touch point, the following set of data members should be accessible to users (indirectly using the desired framework). Depending on hardware capabilities, some of these members might have optional constant values, regardless of how the user provides input:

```c
// ID number that uniquely identifies the touch point.
// The value can vary, so any assumption about ordering is invalid.
// For example, the assumption that ID values start from 0 is false.
int id;

// Optional:
// The pressure of the touch point.
// The value can vary between 0 and 1.
// If the hardware is not capable of detecting pressure, the value is
// constant regardless of the physical input pressure.
int pressure;

// Optional:
// The angular rotation of the touch point.
// If the hardware is not capable of detecting orientation, the value is 0.
int orientation;

// x coordinate of the touch point relative to the main emulator dialog.
int x;

// y coordinate of the touch point relative to the main emulator dialog.
int y;

// x coordinate of the touch point relative to the whole screen.
int x_global;

// y coordinate of the touch point relative to the whole screen.
int y_global;

// Optional: the larger of the width and height of the ellipse that
// corresponds to the touch point.
// If the hardware is not capable of detecting the shape of the touch point,
// the value can be 0 or 1 (the touch point is always detected as a circle)
// or any nonzero constant value.
int touch_major;

// Optional: the smaller of the width and height of the ellipse that
// corresponds to the touch point.
// If the hardware is not capable of detecting the shape of the touch point,
// the value can be 0 or 1 (the touch point is always detected as a circle)
// or any nonzero constant value.
int touch_minor;
```
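As a rough illustration of how these members could map onto Linux multi-touch input events, the sketch below encodes a single touch-down report. It assumes the slot-based multi-touch protocol, and the `EX_` constants are local copies of the corresponding codes in `<linux/input-event-codes.h>`. The names `TouchPoint`, `InputEvent`, and `encode_touch_down`, the event ordering, the reuse of `id` as the tracking ID, and the omission of `x_global` and `y_global` are all assumptions of this sketch, not a description of what the emulator actually emits.

```c
#include <assert.h>
#include <stddef.h>

/* Local copies of Linux input event codes, prefixed EX_ for this sketch. */
enum {
    EX_EV_SYN = 0x00,
    EX_EV_ABS = 0x03,
    EX_SYN_REPORT = 0,
    EX_ABS_MT_SLOT = 0x2f,
    EX_ABS_MT_TOUCH_MAJOR = 0x30,
    EX_ABS_MT_TOUCH_MINOR = 0x31,
    EX_ABS_MT_ORIENTATION = 0x34,
    EX_ABS_MT_POSITION_X = 0x35,
    EX_ABS_MT_POSITION_Y = 0x36,
    EX_ABS_MT_TRACKING_ID = 0x39,
    EX_ABS_MT_PRESSURE = 0x3a
};

/* Hypothetical container for the members listed above. */
typedef struct {
    int id, pressure, orientation;
    int x, y, x_global, y_global;
    int touch_major, touch_minor;
} TouchPoint;

/* Hypothetical (type, code, value) triple, shaped like a Linux input event. */
typedef struct {
    int type, code, value;
} InputEvent;

#define TOUCH_DOWN_EVENT_COUNT 9

/* Writes the events for one touch-down into `out`.
 * Returns the number of events written, or 0 if `capacity` is too small. */
static size_t encode_touch_down(const TouchPoint* tp, int slot,
                                InputEvent* out, size_t capacity) {
    assert(tp != NULL && out != NULL);
    if (capacity < TOUCH_DOWN_EVENT_COUNT) return 0;
    size_t n = 0;
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_SLOT, slot};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_TRACKING_ID, tp->id};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_POSITION_X, tp->x};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_POSITION_Y, tp->y};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_PRESSURE, tp->pressure};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_ORIENTATION, tp->orientation};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_TOUCH_MAJOR, tp->touch_major};
    out[n++] = (InputEvent){EX_EV_ABS, EX_ABS_MT_TOUCH_MINOR, tp->touch_minor};
    out[n++] = (InputEvent){EX_EV_SYN, EX_SYN_REPORT, 0}; /* closes the report */
    return n;
}
```

The final `EX_SYN_REPORT` entry plays the same role as the `_push_event(EV_SYN, LINUX_SYN_REPORT, 0)` call in `multitouch_update`.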

The following resources show how developers can detect and respond to touch events and touch gestures:

- Handle multi-touch gestures (Android developer docs)

- Detect common gestures (Android developer docs)

- MotionEvent (Android developer docs)

- Drag and scale (Android developer docs)
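For intuition about what a pinch or rotation gesture means in terms of raw touch points, here is a small sketch of the underlying arithmetic for two fingers. It is written in C to match the listings above and is not how Android detects gestures; in an app you would normally rely on the framework approaches covered in the docs listed above. `TwoFingerFrame`, `pinch_direction`, and `rotation_direction` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical snapshot of two fingers: A at (ax, ay) and B at (bx, by). */
typedef struct {
    int ax, ay;
    int bx, by;
} TwoFingerFrame;

/* Squared distance between the fingers; enough for comparing spans. */
static long long span_squared(const TwoFingerFrame* f) {
    const long long dx = (long long)f->bx - f->ax;
    const long long dy = (long long)f->by - f->ay;
    return dx * dx + dy * dy;
}

/* Returns +1 if the fingers moved apart (spread), -1 if they moved
 * together (pinch), and 0 if the span is unchanged. */
static int pinch_direction(const TwoFingerFrame* prev, const TwoFingerFrame* curr) {
    assert(prev != NULL && curr != NULL);
    const long long before = span_squared(prev);
    const long long after = span_squared(curr);
    return (after > before) - (after < before);
}

/* Returns +1 if the A-to-B vector turned clockwise as seen on screen
 * (y grows downward), -1 if counterclockwise, and 0 if the two vectors
 * are collinear (no rotation, or an exact half turn). */
static int rotation_direction(const TwoFingerFrame* prev, const TwoFingerFrame* curr) {
    assert(prev != NULL && curr != NULL);
    const long long pdx = (long long)prev->bx - prev->ax;
    const long long pdy = (long long)prev->by - prev->ay;
    const long long cdx = (long long)curr->bx - curr->ax;
    const long long cdy = (long long)curr->by - curr->ay;
    /* Sign of the 2D cross product gives the turning direction. */
    const long long cross = pdx * cdy - pdy * cdx;
    return (cross > 0) - (cross < 0);
}
```

Comparing squared distances avoids a square root; a real detector would also apply thresholds so that small jitter is not reported as a gesture.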

Resources and feedback

These changes are part of the Android emulator, which can be updated from within Android Studio SDK Manager.

If you have any questions, or would like to tell us about your Surface Duo apps, use the feedback forum or message us on Twitter @surfaceduodev.

Finally, please join us for our dual screen developer livestream at 11am (Pacific time) each Friday – mark it in your calendar and check out the archives on YouTube.
