The Architectural Imperative: Why Multi-Agent Orchestration is Essential

In modern enterprise AI systems, the scope and complexity of real-world business challenges quickly exceed the capabilities of a single, monolithic AI Agent. Facing tasks like end-to-end customer journey management, multi-source data governance, or deep human-in-the-loop review processes, the fundamental architectural challenge shifts: How do we effectively coordinate and manage a network of specialized, atomic AI capabilities?

Much like a high-performing corporation relies on specialized departments, we must transition from a single-executor model to a Collaborative Multi-Agent Network.

The Microsoft Agent Framework is designed to address this paradigm shift, offering a unified, observable platform that empowers developers to achieve two core value propositions:

Scenario 1: Architecting Professionalized AI Agent Units

Each Agent serves as a specialized, pluggable, and independently operating execution unit, underpinned by three critical pillars of intelligence:

- LLM-Powered Intent Resolution: Leveraging the power of Large Language Models (LLMs) to accurately interpret and map complex user input requests.

- Action & Tooling Execution: Performing actual business logic and operations by invoking external APIs, tools, or internal services (like MCP servers).

- Contextual Response Generation: Returning precise, valuable, and contextually aware smart responses to the user based on the execution outcome and current state.

Developers retain the flexibility to utilize leading model providers, including Azure OpenAI, OpenAI, Azure AI Foundry or local models, to customize and build these high-performance Agent primitives.
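To make the three pillars concrete, here is a minimal, framework-independent sketch in plain Python. It does not use the Microsoft Agent Framework API: `resolve_intent` is a hypothetical keyword-matching stand-in for an LLM call, and `lookup_order_status`, `TOOLS`, and `AgentReply` are names invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


def resolve_intent(user_input: str) -> str:
    """Hypothetical stand-in for an LLM call: map free text to an intent name."""
    return "order_status" if "order" in user_input.lower() else "unknown"


def lookup_order_status(user_input: str) -> dict:
    """Hypothetical tool; a real agent would call an API or MCP server here."""
    return {"order_id": "A-100", "status": "shipped"}


# Registry that maps an intent to the tool able to serve it.
TOOLS: dict[str, Callable[[str], dict]] = {"order_status": lookup_order_status}


@dataclass
class AgentReply:
    intent: str
    text: str


def run_agent(user_input: str) -> AgentReply:
    intent = resolve_intent(user_input)  # 1. intent resolution
    tool = TOOLS.get(intent)
    if tool is None:
        return AgentReply(intent, "Sorry, I cannot help with that yet.")
    result = tool(user_input)  # 2. action and tooling execution
    text = f"Order {result['order_id']} is {result['status']}."  # 3. response
    return AgentReply(intent, text)
```

Calling `run_agent("Where is my order?")` walks through all three stages, while an unrecognized request stops after intent resolution and returns a fallback reply.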

Scenario 2: Dynamic Coordination via Workflow Orchestration

The Workflow feature is the flagship capability of the Microsoft Agent Framework, elevating orchestration from simple linear flow to a dynamic collaboration graph. It grants the system advanced architectural abilities:

- 🔗 Architecting the Collaboration Graph: Connecting specialized Agents and functional modules into a highly cohesive, loosely coupled network.

- 🎯 Decomposing Complex Tasks: Automatically breaking down macro-tasks into manageable, traceable sub-task steps for precise execution.

- 🧭 Context-Based Dynamic Routing: Utilizing intermediate data types and business rules to automatically select the optimal processing path or Agent (Routing).

- 🔄 Supporting Deep Nesting: Embedding sub-workflows within a primary workflow to achieve layered logical abstraction and maximize reusability.

- 💾 Defining Checkpoints: Persisting state at critical execution nodes to ensure high process traceability, data validation, and fault tolerance.

- 🤝 Human-in-the-Loop Integration: Defining clear request/response contracts to introduce human experts into the decision cycle when necessary.

Crucially, Workflow definitions are not limited to Agent connections; they can integrate seamlessly with existing business logic and method executors, providing maximum flexibility for complex process integration.
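As one illustration of the checkpoint idea from the list above, the following plain-Python sketch persists state after every step so that a rerun resumes where the previous run stopped. It is an assumption-laden toy, not the framework's checkpoint API: `run_with_checkpoints`, the JSON file layout, and the step names are all hypothetical.

```python
import json
from pathlib import Path
from typing import Callable

Step = Callable[[dict], dict]


def run_with_checkpoints(steps: list[tuple[str, Step]], state: dict, path: Path) -> dict:
    """Run named steps in order, saving state after each one.

    If a checkpoint file already exists, its state is restored and the
    steps it records as done are skipped.
    """
    done: list[str] = []
    if path.exists():
        saved = json.loads(path.read_text())
        state, done = saved["state"], saved["done"]
    for name, step in steps:
        if name in done:
            continue  # completed in an earlier run
        state = step(state)
        done.append(name)
        # Persist after every step so a crash loses at most one step of work.
        path.write_text(json.dumps({"state": state, "done": done}))
    return state
```

The state must be JSON-serializable in this sketch; a production system would more likely store checkpoints in a database or blob store and version them.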

Deeper Dive: Workflow Patterns

Drawing on the GitHub Models examples, we demonstrate how to leverage the Workflow component to enforce structure, parallelism, and dynamic decision-making in enterprise applications.

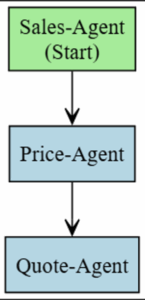

1. Sequential: Enforcing Structured Data Flow

- Definition: Executors are run in a predefined order, where the output of each step is validated, serialized, and passed as the normalized input for the next executor in the chain.

- Architectural Implication: This pattern is essential for pipelines requiring strict idempotency and state management between phases. You should strategically use Transformer Executors (like to_reviewer_result) at intermediate nodes for data formatting, validation, or status logging, thereby establishing critical checkpoints.
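The role of a transformer step can be sketched without the framework. In this hedged example, `to_review_result` parses and validates raw agent text before it reaches the next step, and `run_sequential` is a hypothetical helper that simply chains callables. The reduced `ReviewResult` here is a stand-in with two fields, not the project's actual class.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ReviewResult:
    review_result: str
    reason: str


def to_review_result(raw: str) -> ReviewResult:
    """Transformer step: parse and validate raw agent text before passing it on."""
    data = json.loads(raw)
    if data.get("review_result") not in {"Yes", "No"}:
        raise ValueError("review_result must be 'Yes' or 'No'")
    return ReviewResult(data["review_result"], data.get("reason", ""))


def run_sequential(steps: list[Callable[[Any], Any]], value: Any) -> Any:
    """Feed each step's output into the next step, in order."""
    for step in steps:
        value = step(value)
    return value


def fake_reviewer(draft: str) -> str:
    """Hypothetical stand-in for a reviewer agent that replies with JSON text."""
    return json.dumps({"review_result": "Yes", "reason": "clear"})


result = run_sequential([fake_reviewer, to_review_result], "draft text")
```

Because validation happens at the boundary between steps, a malformed reply fails immediately at the transformer instead of surfacing later in an unrelated executor.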

# Linear flow: Agent1 -> Agent2 -> Agent3

workflow = (

WorkflowBuilder()

.set_start_executor(agent1)

.add_edge(agent1, agent2)

.add_edge(agent2, agent3)

.build()

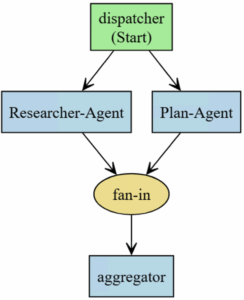

)2. Concurrent: Achieving High-Throughput Fan-out/Fan-in

- Definition: Multiple Agents (or multiple instances of the same Agent) are initiated concurrently within the same workflow to minimize overall latency, with results merged at a designated Join Point.

- Architectural Implication: This is the core implementation of the Fan-out/Fan-in pattern. The critical component is the Aggregation Function (aggregate_results_function), where custom logic must be implemented to reconcile multi-branch returns, often via voting mechanisms, weighted consolidation, or priority-based selection.
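A voting-style aggregation can be sketched with nothing but `asyncio`. The example below is an illustration under stated assumptions rather than the framework's implementation: `make_agent` builds hypothetical agents that return a fixed answer, and `majority_vote` is one possible aggregation strategy among those listed above.

```python
import asyncio
from collections import Counter
from typing import Awaitable, Callable

Agent = Callable[[str], Awaitable[str]]


def make_agent(answer: str) -> Agent:
    """Hypothetical stand-in for an agent; always returns a fixed answer."""

    async def agent(prompt: str) -> str:
        await asyncio.sleep(0)  # yield control, as a real I/O call would
        return answer

    return agent


def majority_vote(results: list[str]) -> str:
    """Aggregation: pick the most common answer; ties go to the earliest branch."""
    return Counter(results).most_common(1)[0][0]


async def fan_out_fan_in(agents: list[Agent], prompt: str) -> str:
    # Fan-out: start every branch concurrently; gather keeps the input order.
    results = await asyncio.gather(*(agent(prompt) for agent in agents))
    # Fan-in: reconcile the branch results into a single answer.
    return majority_vote(list(results))
```

Swapping `majority_vote` for a weighted or priority-based function changes the reconciliation policy without touching the fan-out code, which is the main reason to keep aggregation as a separate function.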

workflow = (

ConcurrentBuilder()

.participants([agentA, agentB, agentC])

.build()

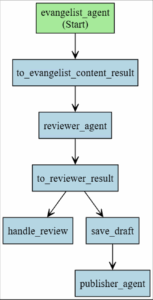

)3. Conditional: State-Based Dynamic Decisioning

- Definition: The workflow incorporates a decision-making executor that dynamically routes the process to different branches (e.g., Save Draft, Rework, Human Review) based on the intermediate results or predefined business rules.

- Architectural Implication: The power of this pattern lies in the selection function (selection_func). It receives the parsed intermediate data (e.g., ReviewResult) and returns a list of target executor IDs, enabling not just single-path routing but also complex logic where a single data item can branch into multiple parallel paths.

```python
def select_targets(review, targets):
    handle_id, save_id = targets
    return [save_id] if review.review_result == "Yes" else [handle_id]

workflow = (
    WorkflowBuilder()
    .set_start_executor(evangelist_executor)
    .add_edge(evangelist_executor, reviewer_executor)
    .add_edge(reviewer_executor, to_reviewer_result)
    .add_multi_selection_edge_group(to_reviewer_result, [handle_review, save_draft], selection_func=select_targets)
    .build()
)
```

In sophisticated production scenarios, these patterns are frequently layered: for instance, a Concurrent search and summarization phase followed by a Conditional branch that routes the result to either automatic publishing or a Sequential Human-in-the-Loop review process.
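Because a selection function returns a list, it can also send one result down several branches at once. The sketch below shows that multi-path case in isolation. The third target and the `needs_legal_check` field are hypothetical additions for illustration and are not part of the sample project.

```python
from dataclasses import dataclass


@dataclass
class ReviewResult:
    review_result: str
    needs_legal_check: bool = False  # hypothetical field for this sketch


def select_targets(review: ReviewResult, target_ids: list[str]) -> list[str]:
    """Return every branch that should receive this review result."""
    handle_id, save_id, legal_id = target_ids
    selected = [save_id] if review.review_result == "Yes" else [handle_id]
    if review.needs_legal_check:
        selected.append(legal_id)  # fan out to a second, parallel branch
    return selected
```

Keeping the function pure (data in, list of IDs out) makes the routing rules easy to unit test without running any agents.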

Production-Grade Observability: Harnessing DevUI and Tracing

For complex multi-agent systems, Observability is non-negotiable. The Microsoft Agent Framework offers an exceptional developer experience through the built-in DevUI, providing real-time visualization, interaction tracking, and performance monitoring for your orchestration layer.

The following simplified code demonstrates the key steps to enable this capability in your project (see project main.py):

- Core Workflow Construction (code unchanged)

```python
from agent_framework import AgentExecutor, WorkflowBuilder, executor

# The agents (evangelist_agent, reviewer_agent, publisher_agent), the models
# (ReviewAgent, ReviewResult), and the executors to_evangelist_content_result,
# handle_review, and save_draft are assumed to be defined elsewhere in the
# project (see main.py).

# Transform and selection function example
@executor(id="to_reviewer_result")
async def to_reviewer_result(response, ctx):
    # Parse the reviewer's JSON reply and forward it as a typed ReviewResult
    parsed = ReviewAgent.model_validate_json(response.agent_run_response.text)
    await ctx.send_message(ReviewResult(parsed.review_result, parsed.reason, parsed.draft_content))

def select_targets(review: ReviewResult, target_ids: list[str]) -> list[str]:
    # Approved drafts go to save_draft; everything else goes to handle_review
    handle_id, save_id = target_ids
    return [save_id] if review.review_result == "Yes" else [handle_id]

# Build executors and connect them
evangelist_executor = AgentExecutor(evangelist_agent, id="evangelist_agent")
reviewer_executor = AgentExecutor(reviewer_agent, id="reviewer_agent")
publisher_executor = AgentExecutor(publisher_agent, id="publisher_agent")

workflow = (
    WorkflowBuilder()
    .set_start_executor(evangelist_executor)
    .add_edge(evangelist_executor, to_evangelist_content_result)
    .add_edge(to_evangelist_content_result, reviewer_executor)
    .add_edge(reviewer_executor, to_reviewer_result)
    .add_multi_selection_edge_group(to_reviewer_result, [handle_review, save_draft], selection_func=select_targets)
    .add_edge(save_draft, publisher_executor)
    .build()
)
```

- Launching with DevUI for Visualization (project main.py)

```python
from agent_framework.devui import serve

def main():
    serve(entities=[workflow], port=8090, auto_open=True, tracing_enabled=True)

if __name__ == "__main__":
    main()
```

Implementing End-to-End Tracing

When deploying multi-agent workflows to production or CI environments, robust tracing and monitoring are essential. To ensure high observability, you must confirm the following:

- Environment Configuration: Ensure all necessary connection strings and credentials for Agents and tools are loaded via .env prior to startup.

- Event Logging: Within Agent Executors and Transformers, utilize the framework’s context mechanism to explicitly log critical events (e.g., Agent responses, branch selection outcomes) for easy retrieval by DevUI or your log aggregation platform.

- OTLP Integration: Set tracing_enabled to True and configure an OpenTelemetry Protocol (OTLP) exporter. This enables the complete execution call chain (Trace) to be exported to an APM/Trace platform (e.g., Azure Monitor, Jaeger).

- Sample Code: https://github.com/microsoft/Agent-Framework-Samples/tree/main/08.EvaluationAndTracing/python/multi_workflow_aifoundry_devui
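For the environment-configuration item in the checklist above, a fail-fast startup check is one possible approach. The sketch below uses only the standard library and makes several assumptions: the helper names are hypothetical, the parser handles only simple KEY=VALUE lines, and the setting names you require are up to your project. Many projects would use a dedicated .env loading library instead.

```python
import os
from pathlib import Path


def load_env_file(path: Path) -> dict[str, str]:
    """Parse simple KEY=VALUE lines; blank lines and '#' comments are skipped."""
    values: dict[str, str] = {}
    for line in path.read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def check_startup_config(env_path: Path, required: list[str]) -> dict[str, str]:
    """Merge .env values with the process environment and fail fast on gaps.

    Values already set in the process environment take precedence over the file.
    """
    env = {**load_env_file(env_path), **os.environ}
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing required settings: " + ", ".join(missing))
    return env
```

Running this check before building the workflow surfaces a missing connection string as a single clear error at startup, rather than as a failed agent call in the middle of a traced run.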

By pairing the DevUI’s visual execution path with APM trace data, you gain the ability to rapidly diagnose latency bottlenecks, pinpoint failures, and ensure full control over your complex AI system.

Next Steps: Resources for the Agent Architect

Multi-Agent Orchestration represents the future of complex AI architecture. We encourage you to delve deeper into the Microsoft Agent Framework to master these powerful capabilities.

Here is a curated list of resources to accelerate your journey to becoming an Agent Architect:

- Microsoft Agent Framework GitHub Repo: https://github.com/microsoft/agent-framework

- Microsoft Agent Framework Workflow official sample: https://github.com/microsoft/agent-framework/tree/main/python/samples/getting_started/workflows

- Community and Collaboration: https://discord.com/invite/azureaifoundry

Great article, thank you. Do you have guidance on when to decompose tasks versus when to leave the work as a single task? I can see how it might be easy to go overboard on decomposition.

Personally, I think it is better to divide the work into stages and tasks, the way a working group would.