Image: DJI Inspire 1 Drone by DFSB DE, used under CC BY-SA 2.0

Background

The drone industry is growing fast, with new players joining the field at a rapid pace. Many drone applications, autonomous ones in particular, require vision capabilities. The drone processes its camera feed to support tasks such as tracking, navigating, filming, and more. Companies that use video processing and image recognition in their solutions need to train their algorithms to identify objects in a video stream. Whether it’s a drone trained to identify suspicious activity, such as human presence where it shouldn’t be, or a robot navigating indoors while following its human owner, they all need to identify and track the objects around them. Improving the recognition and tracking algorithms requires a collection of manually tagged videos, which serves as the ground truth.

The Problem

The tagging work today is Sisyphean manual work done by humans (e.g., via Mechanical Turk). The Turks sit in front of the video, watch it frame by frame, and maintain Excel files describing each frame in the video stream. The data kept for each frame is the frame number, the objects found in the frame, and the area of the frame in which each object is located. The video and the tagging metadata are then used by the algorithm to learn how to identify these objects. The tagging data created manually by a Turk (the ground truth) is also used to run quality and regression tests for the algorithm, by comparing the algorithm’s tagging results to the Turk’s tagging data.
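To make the regression-test idea concrete, here is a minimal sketch of one way such a comparison could work. It assumes each tagged area is an axis-aligned rectangle in pixel coordinates and uses intersection-over-union (IoU) with a 0.5 threshold as the matching rule. The `Region` fields, the function names, and the choice of IoU are illustrative assumptions, not the format or metric the startups actually use.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Region:
    """One tagged object in one frame (hypothetical layout): label plus box corners."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float


def iou(a: Region, b: Region) -> float:
    """Intersection-over-union of two boxes; 0.0 when they do not overlap."""
    inter_w = min(a.x2, b.x2) - max(a.x1, b.x1)
    inter_h = min(a.y2, b.y2) - max(a.y1, b.y1)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    return inter / (area_a + area_b - inter)


def frame_recall(truth: List[Region], predicted: List[Region],
                 threshold: float = 0.5) -> float:
    """Fraction of ground-truth regions matched by a same-label prediction."""
    if not truth:
        return 1.0  # nothing to find in this frame
    hits = sum(
        any(p.label == t.label and iou(t, p) >= threshold for p in predicted)
        for t in truth
    )
    return hits / len(truth)
```

A regression test could then compute `frame_recall` for every frame of a tagged video and fail when the average drops below the value measured for the previous version of the algorithm.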

Searching the web for existing solutions didn’t yield much. While larger companies in the video processing space develop their own internal tagging tools, small startups that don’t have the bandwidth to invest in such tools (which are also not part of their IP) do the work manually, tagging videos frame by frame in Excel. We couldn’t find any tool that we could simply take and use.

Solutions similar to Mechanical Turk have the following limitations, which led us to develop this tool: 1) Video tagging support is poor or missing, and there are no good open source (OSS) tools today. 2) They favor high quantity over high quality. 3) Sometimes there’s a business need to keep the videos confidential and allow tagging by trusted people only.

The Engagement

We engaged with Percepto and ThirdEye Systems, two startups that provide vision for drones. Both needed a frame-by-frame video tagging tool to use with their video processing algorithms, as described above. We met with each startup for a few days of hacking to discuss their scenarios, brainstorm, and scope the problem. We then decided to develop a video tagging tool with the most essential features to address their needs. The tool is composed of the following two main modules:

HTML Video-Tagging Control

This is a Polymer-based web control that consumers can add to their web pages just like any native HTML element. It provides basic functionality such as navigating through the video frames, selecting an area or a specific location on a frame, tagging it with one or more tags, and more. A detailed feature list, code, demo, and usage instructions can be found in the project’s GitHub repository.

A Video Tagging Web Tool

This is a single-page, AngularJS-based web application that provides a basic holistic solution for managing users, videos, and tagging jobs. It uses the Video-Tagging HTML control, demonstrating its use in an actual web app. The tool comes with built-in authentication and authorization mechanisms. We used Google login for authentication and defined two main roles for authorization: each user is either an Admin or an Editor.

An Admin can add users, upload videos, and create video-tagging jobs (assigning users to videos). An Admin can also review and approve video-tagging jobs, and fix specific tags while reviewing. An Editor can only view their own job list and do the actual tagging work. When the tagging work is done, the Editor sends the job for review by an Admin, who reviews and approves it. Finally, the tags can be downloaded (in JSON format) for use with the video-processing algorithms.
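The review workflow described above can be pictured as a small state machine. The sketch below is only an illustration: the status names (`ACTIVE`, `PENDING_REVIEW`, `APPROVED`), the action strings, and the `apply_action` helper are hypothetical and are not taken from the tool's code. It also assumes that only an Editor sends a job for review and only an Admin approves it.

```python
from enum import Enum


class Role(Enum):
    ADMIN = "Admin"
    EDITOR = "Editor"


class JobStatus(Enum):
    # Hypothetical status names, chosen for this sketch only.
    ACTIVE = "Active"
    PENDING_REVIEW = "PendingReview"
    APPROVED = "Approved"


# (action, current status) -> (next status, roles allowed to perform the action)
TRANSITIONS = {
    ("send_for_review", JobStatus.ACTIVE): (JobStatus.PENDING_REVIEW, {Role.EDITOR}),
    ("approve", JobStatus.PENDING_REVIEW): (JobStatus.APPROVED, {Role.ADMIN}),
}


def apply_action(status: JobStatus, action: str, role: Role) -> JobStatus:
    """Return the job's next status, or raise if the move is not allowed."""
    try:
        next_status, allowed_roles = TRANSITIONS[(action, status)]
    except KeyError:
        raise ValueError(f"cannot {action!r} a job in status {status.value}")
    if role not in allowed_roles:
        raise PermissionError(f"{role.value} may not {action!r}")
    return next_status
```

Keeping the allowed transitions in one table makes it straightforward to add a step later, for example letting an Admin send a job back to the Editor.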

The data schema of each entity (user, video, job) was designed to be extensible. Users can use the tool as is, with its current DB schema (SQL Server), and add more data items without changing the schema. For example, the tool keeps various metadata items for a job, such as RegionType, which defines whether we would like to tag a specific location or an area in the frames.

The server-side code isn’t aware of this data; it acts only as a pipe between the client side and the storage layer. It was important for us to provide a framework that enables adding features without changing the schema, or at least minimizes the changes required to add more features to the tool.
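One common way to get this kind of extensibility is to keep a few fixed columns per entity and store everything else as an opaque JSON string that only the client interprets. The sketch below illustrates that pattern. It uses an in-memory SQLite database as a stand-in for SQL Server, and the table layout, the `config_json` column, the function names, and the `"Rectangle"` value are all assumptions made for illustration, not the tool's actual schema.

```python
import json
import sqlite3

# In-memory SQLite stands in for the real database in this sketch.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE jobs ("
    "id INTEGER PRIMARY KEY, video_id INTEGER, user_id INTEGER, config_json TEXT)"
)


def create_job(video_id: int, user_id: int, config_json: str) -> int:
    """Server side: store the client's settings string without parsing it."""
    cur = conn.execute(
        "INSERT INTO jobs (video_id, user_id, config_json) VALUES (?, ?, ?)",
        (video_id, user_id, config_json),
    )
    conn.commit()
    return cur.lastrowid


def get_job_config(job_id: int) -> str:
    """Server side: hand the stored string back to the client unchanged."""
    row = conn.execute(
        "SELECT config_json FROM jobs WHERE id = ?", (job_id,)
    ).fetchone()
    return row[0]


# Client side: only the UI knows what the settings mean.
settings = {"RegionType": "Rectangle", "tags": ["person", "car"]}
job_id = create_job(video_id=1, user_id=7, config_json=json.dumps(settings))
restored = json.loads(get_job_config(job_id))
```

Adding a new setting then only requires a client change: the new key travels inside the JSON string, and neither the table definition nor the server functions need to change.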

A detailed feature list, code, and usage instructions can be found in the project’s GitHub repository.

The Tool in Action

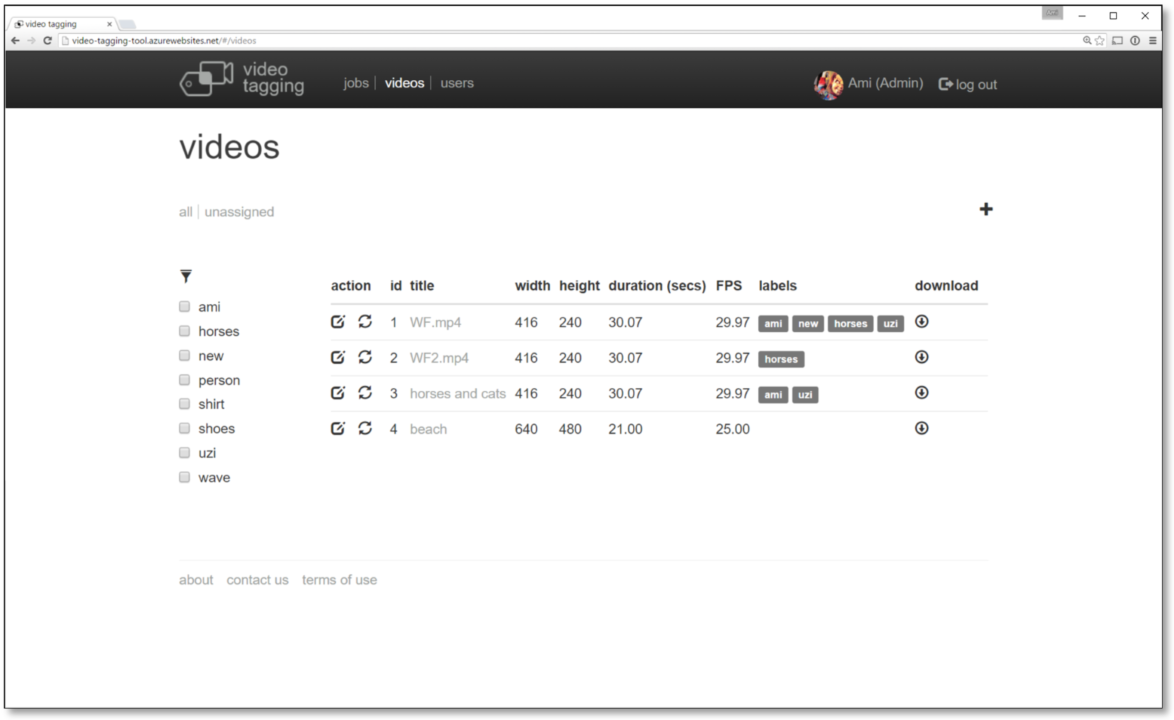

Managing videos

- Videos list

- Filter videos by labels

- Edit videos

- Create a tagging job for a video

- Download tags for a video

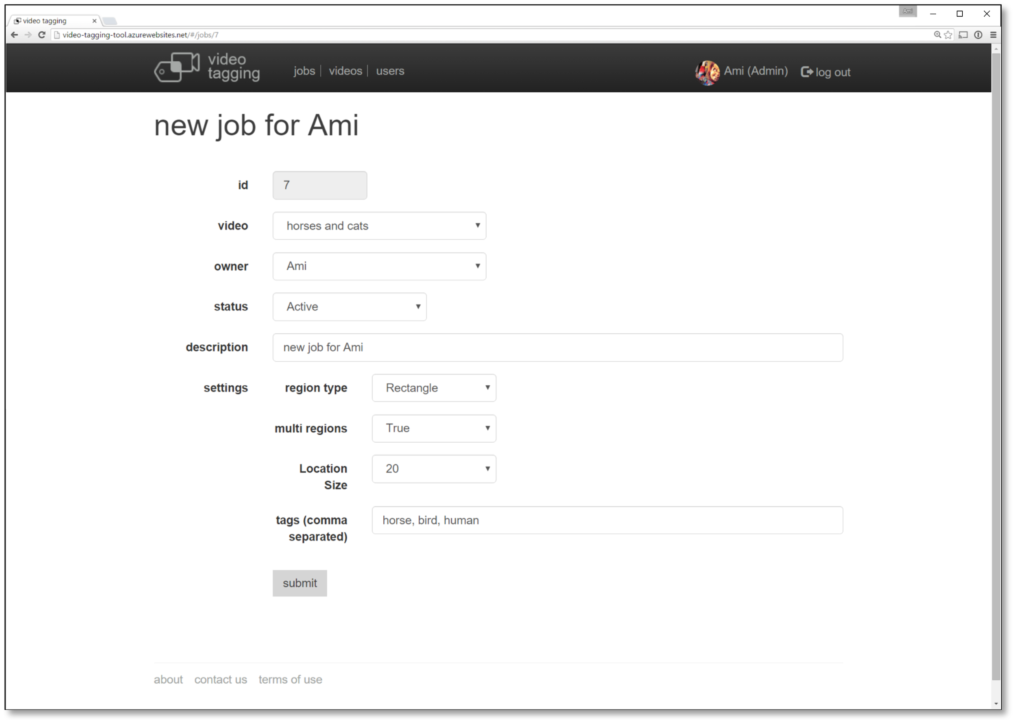

Creating a job

- Assign a video to a user for tagging

- Configure the tagging job

The settings section is UI-specific: neither the server side nor the DB layer is aware of these data items.

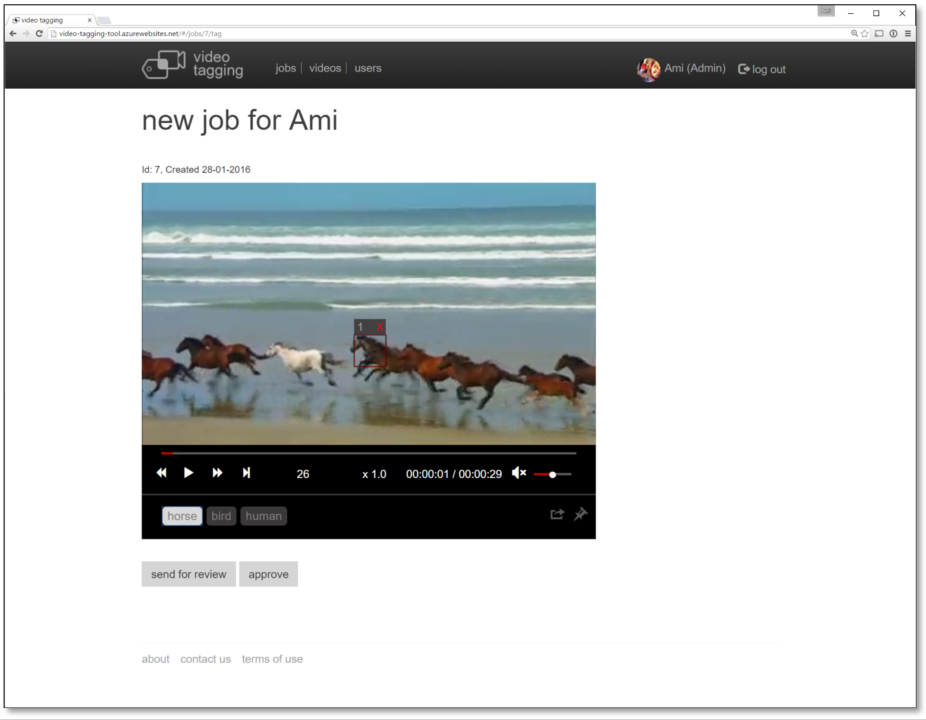

The Tagging

- Navigate frame by frame

- Select areas on the frame, and tags for each area

- Video controls

- Review a tagging job

- Send a job for review by an Admin, or approve the tagging job

Opportunities for reuse

As previously mentioned, the tool provides a holistic solution with basic functionality for managing users, videos, and tagging tasks. It can be used by anyone with a similar need for video tagging, whether for drones, robots, or other applications. It can be used as is or extended with more features based on your needs. For example, you can extend the shapes of the tagging area by implementing a more specific polygon for tagging. Other possible features include supporting multiple users tagging the same video and calculating the average or conflation of their results. We encourage you to contribute to the project by sending pull requests for new features so that others can benefit from your work as well.
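As a starting point for the multi-user idea, the sketch below shows one simple way to combine several users' tags for the same object in the same frame: averaging the corners of their rectangles. This is not an existing feature of the tool. The `Box` layout `(x1, y1, x2, y2)` and the `average_box` helper are hypothetical, and a real implementation would first need to decide which boxes from different users refer to the same object.

```python
from statistics import mean
from typing import List, Tuple

# Hypothetical box layout for this sketch: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def average_box(boxes: List[Box]) -> Box:
    """Average the corner coordinates of boxes drawn by different users."""
    if not boxes:
        raise ValueError("need at least one box to average")
    x1s, y1s, x2s, y2s = zip(*boxes)
    return (mean(x1s), mean(y1s), mean(x2s), mean(y2s))
```

A plain average is sensitive to one careless tagger, so a per-coordinate median may be a more robust choice when three or more users tag the same video.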