Azure AI Foundry makes fine-tuning smarter, faster, and more accessible than ever. Whether you’re building agents that reason, tools that adapt, or workflows that scale, this is your launchpad for customizing models to solve real business challenges. Dive in to discover best practices, hands-on resources, and the latest innovations so you can build, test, and deploy specialized AI with confidence.

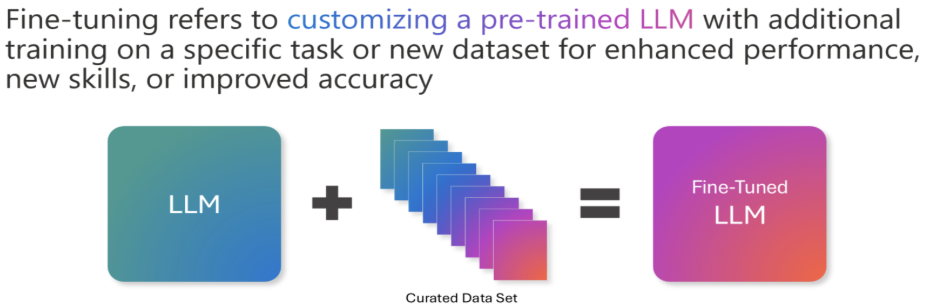

What is Fine-tuning?

Fine-tuning refers to customizing a pre-trained LLM with additional training on a specific task or new dataset for enhanced performance, new skills, or improved accuracy. So instead of building an AI model from scratch, you take a powerful pre-trained model and give it extra training using your own examples, and it learns your style, your needs, and your domain.
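Those "examples" are usually short conversations showing the behavior you want. Here is a minimal Python sketch, assuming the chat-style "messages" records stored one per line as JSON Lines (JSONL) that chat-model fine-tuning typically uses. The ticket-summary content and the helper names `to_jsonl` and `from_jsonl` are hypothetical, so check the Azure AI Foundry fine-tuning docs for the exact schema your chosen model expects.

```python
import json

# Hypothetical training examples in chat "messages" format: each record is one
# short conversation demonstrating the style and behavior the model should learn.
examples = [
    {"messages": [
        {"role": "system", "content": "You summarize support tickets in house style."},
        {"role": "user", "content": "Customer reports login fails after a password reset."},
        {"role": "assistant", "content": "Issue: login failure after password reset. Area: auth."},
    ]},
    {"messages": [
        {"role": "system", "content": "You summarize support tickets in house style."},
        {"role": "user", "content": "Invoices from March show the wrong currency symbol."},
        {"role": "assistant", "content": "Issue: wrong currency symbol on March invoices. Area: billing."},
    ]},
]


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


def from_jsonl(text):
    """Parse JSON Lines text back into a list of records, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


jsonl_text = to_jsonl(examples)
# Writing jsonl_text to a .jsonl file produces the kind of training file you upload.
```

Every record ends with an assistant turn because that turn is the target output the model learns to imitate.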

Fine-tune for Specialized AI Tasks

Foundation models are powerful, but they’re generalists by design. When precision matters, especially in domain-specific applications, fine-tuning is the unlock. It lets teams shape models to their exact needs, boosting accuracy, slashing latency, and reducing inference costs. Whether you’re building tools for legal contract analysis, conversational agents that understand nuance, or wealth advisory copilots that deliver tailored insights, fine-tuning turns a capable model into a specialized expert. It’s not just about better answers; it’s about delivering relevant, reliable, and ready-to-deploy intelligence.

o4-mini RFT Wealth Advisory Demo

Use Case: From Generalist to Expert

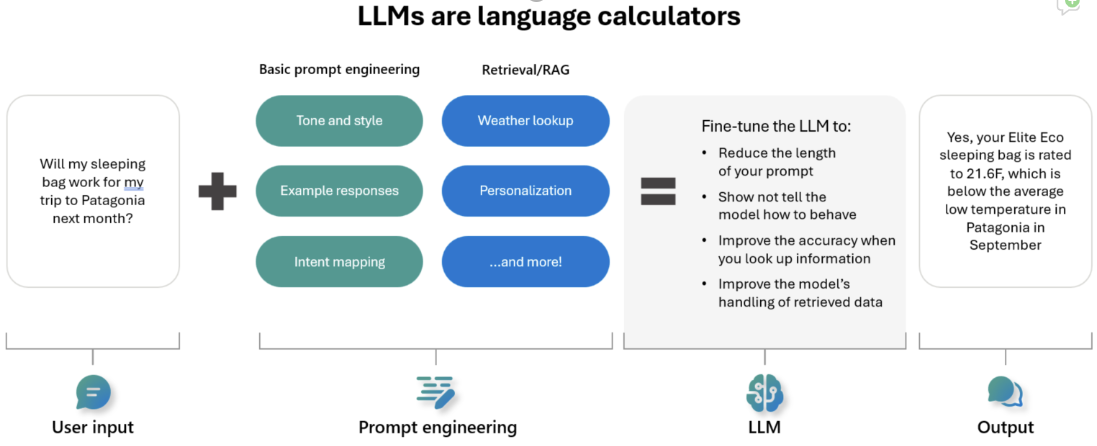

When preparing for a trip to Patagonia, a common concern is whether a sleeping bag will be warm enough for the conditions, which makes “Will my sleeping bag work for Patagonia?” a perfect test case for fine-tuning. By combining user input, prompt engineering, and RAG, the model evolves from giving vague travel advice to delivering a precise, context-aware recommendation.

Think of the LLM as a language calculator: user input is the raw data, prompt engineering defines the operation, and fine-tuning adds the specialized logic. The result? A model that factors in regional weather, seasonal temperature ranges, and gear specs to give an answer that is not just helpful but actionable.
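To make the calculator analogy concrete, the minimal Python sketch below shows how the inputs might be assembled into a single chat request: the question is the user input, the system instruction is the prompt engineering, and the retrieved snippets are the RAG context. It assumes a toy keyword-overlap retriever and a hypothetical three-entry knowledge base (the climate entry is a placeholder, not real data). Fine-tuning would change the model that receives these messages, not how they are assembled.

```python
import re

# Hypothetical knowledge base standing in for a real search index or vector
# store; the climate entry is a placeholder, not real weather data.
KNOWLEDGE_BASE = [
    "Gear spec: the user's sleeping bag has a comfort rating of 2 °C.",
    "Climate note for Patagonia: placeholder for seasonal temperature ranges.",
    "Unrelated: airline baggage allowance policy.",
]


def tokenize(text):
    """Lowercase word set used by the toy keyword-overlap retriever."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, docs, k=2):
    """Return the k documents sharing the most words with the question."""
    q_words = tokenize(question)
    # sorted() is stable (even with reverse=True), so ties keep their original order.
    ranked = sorted(docs, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_messages(question, docs):
    """Combine instruction (prompt engineering), retrieved context (RAG), and user input."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    system = (
        "You are a travel-gear advisor. Answer using only the context below "
        "and say what information is missing.\nContext:\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages("Will my sleeping bag work for Patagonia?", KNOWLEDGE_BASE)
```

A production system would swap the keyword overlap for a proper retriever; the message structure stays the same.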

Best Practices in the Agentic Era

Done right, fine-tuning is a superpower. Done wrong, it’s a liability. A robust, iterative approach, grounded in clear objectives, high-quality data, and continuous feedback, is essential for safe, effective, and innovative agent deployments.

- Start with Clear Objectives: Define the specific use case, tasks, and outcomes you want your model to achieve. Common use cases include reducing prompt length, teaching new skills, improving tool use, and domain adaptation. Consider vertical use cases such as improving dialect or tone in responses, incorporating customer-specific knowledge, or natural-language-to-code applications.

- Invest in Data Quality: High-quality training data is the backbone of effective fine-tuning. The more accurate, representative, and consistently formatted your training examples are, the more effective your fine-tuned model will be.

- Establish a Signals Loop: Agents evolve and so should your model. Continuously evaluate model performance and retrain as needed using observed reasoning, tool use, and performance metrics to ensure alignment, safety, and accuracy.

- Build Fine-tuning into the Agent-building Process: Treating fine-tuning as part of agent development lets organizations enhance the performance of pre-trained models and enable agents to meet unique business requirements without starting from scratch.

- Leverage Azure’s Ecosystem: Azure offers a full-stack toolkit for building and deploying fine-tuned agents. Take advantage of integrated tools, documentation, and community resources for support and innovation.
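Investing in data quality starts with catching broken or redundant examples before training. The minimal Python sketch below audits chat-format records for a few structural problems (unknown roles, empty content, no final assistant turn) and exact duplicates. These checks are illustrative heuristics we chose, not the validation Azure AI Foundry performs on upload, and the function names are hypothetical.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}


def validate_example(record):
    """Return a list of structural problems in one chat-format training record."""
    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i}: not an object")
            continue
        if msg.get("role") not in VALID_ROLES:
            problems.append(f"message {i}: unknown role {msg.get('role')!r}")
        content = msg.get("content")
        if not isinstance(content, str) or not content.strip():
            problems.append(f"message {i}: empty content")
    last = messages[-1]
    if not (isinstance(last, dict) and last.get("role") == "assistant"):
        problems.append("last message is not from the assistant")
    return problems


def audit(records):
    """Map record index -> problems, including exact duplicates of earlier records."""
    report, seen = {}, set()
    for idx, record in enumerate(records):
        problems = validate_example(record)
        key = json.dumps(record, sort_keys=True)  # canonical form for duplicate detection
        if key in seen:
            problems.append("duplicate of an earlier example")
        seen.add(key)
        if problems:
            report[idx] = problems
    return report


# Hypothetical records: one clean, one duplicate, one empty answer, one missing answer.
records = [
    {"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]},
    {"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]},
    {"messages": [{"role": "user", "content": "Question?"}, {"role": "assistant", "content": "  "}]},
    {"messages": [{"role": "user", "content": "No answer yet"}]},
]
report = audit(records)
```

Running an audit like this on every dataset revision is a cheap first step in the signals loop described above.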

Fine-tuning Innovations in Azure AI Foundry

Recent Azure updates have made fine-tuning more flexible, affordable, and developer-friendly, enabling teams to build agents that do more than simply react.

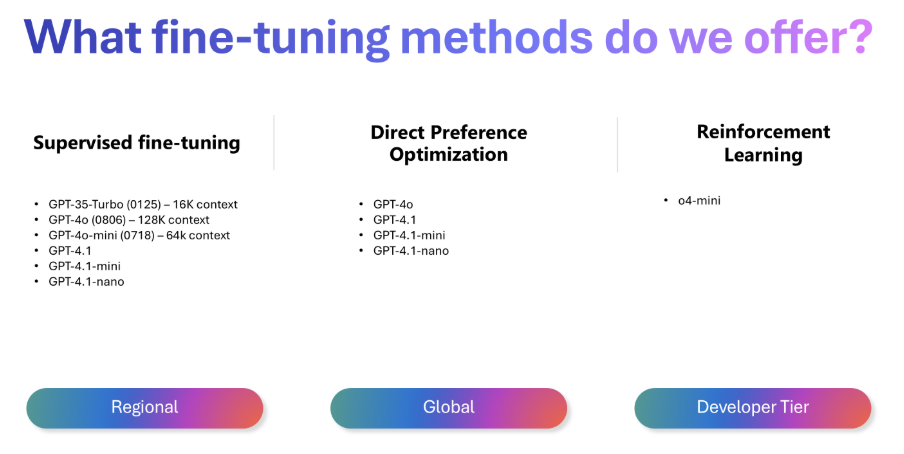

- Reinforcement Fine-Tuning (RFT) Upgrades: o4-mini RFT is now generally available. RFT enables models to learn from reward signals and optimize for complex objectives, which is essential for agentic workflows. RFT is also now fully API- and Swagger-ready, enabling users to fine-tune models with reinforcement learning techniques.

- More Global Training Options: Expanded Azure OpenAI model fine-tuning to any of 26 supported regions, making it easier to deploy customized models worldwide. We’ve also made Global Training generally available and added support for gpt-4o and gpt-4o-mini.

- Developer Tier for hosting and inference: Experiment faster with this cost-effective way to evaluate and test fine-tuned models before scaling to production, now generally available. Key features include:

  - Deploy fine-tuned GPT-4.1 and GPT-4.1-mini models from any training region, including Global Training.

  - Free hosting for 24 hours per deployment.

  - Pay per token at the same rate as Global Standard, helping you budget your testing costs.

  - Simultaneously evaluate multiple models to choose the best candidate for production.

- Evaluations Enhancements: Added RFT Observability (Auto-Evals), Quick Evals, and the Python Grader, which streamline evaluation and debugging during fine-tuning. All three are now in public preview.

- More Granular Control: You can now copy fine-tuned models across Azure regions via the REST API, enabling flexible multi-region deployment. New Pause & Resume capabilities let you halt and resume fine-tuning jobs without losing progress.
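RFT's reward signal comes from a grader that scores each model response. As an illustration of the Python Grader idea, here is a minimal sketch of a binary grader for a Countdown-style task (reach a target number using arithmetic over the given numbers), the dataset used in the o4-mini RFT code sample. The `grade(sample, item)` signature and the `output_text`, `numbers`, and `target` field names are assumptions modeled on OpenAI-style Python graders, and the "each number at most once" rule is one common variant of the game; confirm the exact grader contract in the Azure RFT documentation.

```python
import ast
from collections import Counter

# Only these binary operators are allowed in a candidate expression.
_OPS = {
    ast.Add: lambda a, b: a + b,
    ast.Sub: lambda a, b: a - b,
    ast.Mult: lambda a, b: a * b,
    ast.Div: lambda a, b: a / b,
}


def _evaluate(node, used):
    """Safely evaluate +, -, *, / over integer literals, recording each literal used."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body, used)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    raise ValueError("unsupported expression")


def grade(sample, item):
    """Return 1.0 if the response hits the target using only the given numbers, else 0.0."""
    text = sample.get("output_text", "").strip()
    used = []
    try:
        value = _evaluate(ast.parse(text, mode="eval"), used)
    except (SyntaxError, ValueError, ZeroDivisionError):
        return 0.0  # unparsable or disallowed answers earn no reward
    # Each provided number may be used at most once (assumed rule variant).
    if Counter(used) - Counter(item["numbers"]):
        return 0.0
    return 1.0 if abs(value - item["target"]) < 1e-9 else 0.0


# Hypothetical task and candidate responses.
item = {"numbers": [3, 7, 25, 50], "target": 75}
candidates = ["50 + 25", "25 * 3", "25 + 25 + 25", "(50 + 25) * 1", "7 / 0"]
scores = [grade({"output_text": c}, item) for c in candidates]
```

Parsing with `ast` instead of calling `eval` keeps the grader from executing arbitrary model output. A binary reward is the simplest choice; partial credit (for example, for getting close to the target) is a design option, not something the sketch implements.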

Getting Started with Fine-Tuning in Azure AI Foundry

Azure AI Foundry makes it easy to begin fine-tuning advanced language models for your specific use case. Once you have your use case(s) figured out, you can dive into:

- Data Preparation: Gather and curate high-quality, domain-specific datasets.

- Model Selection: Choose the right foundation model (e.g., GPT-4o, GPT-4.1-nano).

- Training & Optimization: Use advanced techniques like Direct Preference Optimization (DPO), RFT, and distillation to enhance model performance.

- Deployment: Seamlessly deploy fine-tuned models with automatic scaling and monitoring.

- Iterate and evaluate: Fine-tuning is an iterative process. Start with a baseline, measure performance, and refine your approach based on results to build a reliable signals loop.
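The "start with a baseline, measure performance" step can be as simple as scoring the base model and a fine-tuned candidate on the same held-out set and promoting the candidate only if it wins clearly. Here is a minimal Python sketch, assuming exact-match accuracy as a stand-in metric and an arbitrary 0.02 promotion margin. The labels and predictions are hypothetical, and real evaluations would typically use richer graders.

```python
def normalize(text):
    """Case- and whitespace-insensitive form used for exact-match scoring."""
    return " ".join(text.lower().split())


def exact_match_accuracy(predictions, references):
    """Fraction of predictions equal to their reference after normalization."""
    if not references or len(predictions) != len(references):
        raise ValueError("need non-empty, equally sized prediction and reference lists")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


def should_promote(baseline_score, candidate_score, min_gain=0.02):
    """Promote the fine-tuned candidate only if it beats the baseline by min_gain."""
    return candidate_score - baseline_score >= min_gain


# Hypothetical reference labels for a ticket-routing task, plus each model's outputs.
references = ["billing", "auth", "billing", "shipping"]
baseline_preds = ["billing", "billing", "auth", "shipping"]
candidate_preds = ["Billing", "auth", "billing ", "returns"]

baseline = exact_match_accuracy(baseline_preds, references)
candidate = exact_match_accuracy(candidate_preds, references)
decision = should_promote(baseline, candidate)
```

Requiring a margin rather than any improvement guards against promoting a model on noise from a small eval set.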

Featured Hands-on Technical Content & Community Resources

Whether you’re just getting started or scaling production-grade agents, Azure offers a rich ecosystem of developer-first resources to support every stage of your fine-tuning workflow:

Code Samples

| Name | Description | Links |
| --- | --- | --- |
| o4-mini RFT Code Sample | Dive into a hands-on example of fine-tuning a reasoning model (o4-mini) using RFT on the Countdown dataset. | GitHub Repo |
| RAFT Fine-tuning on Azure AI Foundry Code Sample | Explore a recipe for using Meta Llama 3.1 405B or OpenAI GPT-4o deployed on Azure AI to generate synthetic datasets with the RAFT method. | GitHub Repo |
| Fine-tuning gpt-oss-20B Code Sample | Fine-tune gpt-oss-20b using Managed Compute on Azure, available in preview and accessible via notebook. | GitHub Repo |
| Distill DeepSeek V3 into Phi-4-mini Code Sample | Distill knowledge from DeepSeek V3 into Phi-4-mini using Azure’s AI stack. | GitHub Repo |
| AI Tour 2025: Efficient Model Customization Code Sample | The Zava retail demo shows how Azure AI Foundry transforms retail with intelligent, fine-tuned agents that deliver truly personalized experiences, precise answers, and cost-efficient innovation. The presentation and repo focus on distillation, RFT, and RAFT approaches using Azure AI Foundry. | GitHub Repo |
| Models for Beginners Course | Free course on GitHub coming soon! Check out the preview. | GitHub Repo Preview |
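RAFT (Retrieval-Augmented Fine-Tuning) trains a model to answer from a set of retrieved documents that mixes the relevant "oracle" document with distractors, and for a fraction of examples leaves the oracle out entirely. The minimal Python sketch below shows only that dataset-assembly idea. The function name, the `<DOCUMENT>` tags, the default `p_oracle=0.8`, and the precomputed answer are illustrative assumptions, not the linked repo's code; in the recipe above, a large model such as Llama 3.1 405B or GPT-4o would generate the answers.

```python
import random


def build_raft_example(question, answer, oracle_doc, distractor_pool,
                       num_distractors=3, p_oracle=0.8, rng=None):
    """Build one RAFT-style training record and report whether the oracle was kept.

    With probability p_oracle the context holds the oracle document plus
    distractors; otherwise it holds distractors only, so the model also learns
    to cope when retrieval misses the relevant document.
    """
    rng = rng or random.Random()
    docs = rng.sample(distractor_pool, num_distractors)  # new list of distinct distractors
    include_oracle = rng.random() < p_oracle
    if include_oracle:
        docs.append(oracle_doc)
    rng.shuffle(docs)  # avoid a fixed oracle position the model could exploit
    context = "\n\n".join(f"<DOCUMENT>{d}</DOCUMENT>" for d in docs)
    record = {"messages": [
        {"role": "user", "content": f"{context}\n\nQuestion: {question}"},
        {"role": "assistant", "content": answer},
    ]}
    return record, include_oracle


# Hypothetical usage with a fixed seed for reproducibility.
pool = ["doc A", "doc B", "doc C", "doc D"]
record, kept = build_raft_example("Q?", "A.", "ORACLE", pool,
                                  num_distractors=2, rng=random.Random(0))
```

Passing an explicit `random.Random` instance keeps dataset generation reproducible across runs.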

Videos with Demos

Azure AI Show: There’s no reason not to fine-tune (YouTube Video)

Learn how to fine-tune foundation models in Azure AI Foundry for improved performance, lower costs, and agentic scenarios. Watch Alicia and Omkar break it down in this episode.

Model Mondays: Fine-tuning & Distillation (YouTube Video)

Dave Voutila shows how Azure AI Foundry makes it easy to fine-tune existing models and use distillation techniques without needing deep ML expertise.

Helpful Docs

- Fine-tune models with Azure AI Foundry (Comprehensive Guide)

- Reinforcement Fine-Tuning (RFT) Overview

- Developer Tier Details

- Evaluations Enhancements & Auto-Evals

Community

👋 Continue the conversation on Discord

About the Authors

Hi! I’m Jacques “Jack”, a Microsoft Technical Trainer. I help learners and organizations adopt intelligent automation through Microsoft technologies. This blog reflects my experience guiding teams in building agentic AI solutions that are not only powerful but also secure, ethical, and scalable.

I’m Malena, and I lead product marketing for fine-tuning at Microsoft. I help developers make Azure AI real by activating hands-on content and building community online and offline.

#SkilledByMTT
