Have you ever been frustrated by slow debugging in Visual Studio? While we work hard to bring you a fast debugging experience, there are a lot of complex knobs that can affect the performance of any given application. In this blog post I’ll walk you through some tips you can use to improve the performance of your debug sessions and include instructions for how you can provide feedback that will help us improve the areas that matter most to you.

What do you mean “debugging is slow”?

Before reading anything else, and indeed before you report an issue, it is important that we speak the same language.

Every time someone reports to us that debugging is taking too long and that we should make it faster, we always start by asking them what they mean, so we can narrow in on what advice to give and also on what area we should invest in making better. There are three distinct debugger experiences that can be slow for you:

- Startup: how long it takes once you’ve started debugging (through launch or attach) before you are able to use the application you are debugging

- Entering break state: how long it takes for Visual Studio’s UI to become responsive after you enter break state. Entering break state can be triggered by hitting a breakpoint, stepping, or using the “break all” command.

- **Application being debugged:** the application runs significantly slower when a debugger is attached than when you run it without a debugger (e.g. you launch it by choosing “Start without debugging”)

In the rest of this post we’ll dive deeper on each of those areas trying to offer insight and advice about what could be slowing things down in each case, what you can do to improve the performance, and help you actionably report the issue to us.

Slow Startup

This is the case where your app takes too long to start when you launch it, or where attaching to a running process takes too long to complete.

Causes of slow startup include build and/or deploy time, symbol loading, the debug heap being enabled, and function breakpoints – let’s look at each in turn.

Build and/or deploy

When you start debugging (F5), if there are any pending edits the debugger will trigger a compilation, build and deploy of the application which can take a long time. Once the compilation, build, and deploy is complete the debugger will proceed to launch the application and start debugging. You should make sure that it’s not one of these steps that is taking a significant amount of time. A good way to validate this is to see how long it takes to start your application by starting without debugging (Ctrl + F5).

Windows Debug Heap is enabled (C++ only)

Windows by default uses a debug heap that allocates memory differently when an application is launched under a native debugger. This can cause applications that need to allocate a large amount of memory to run more slowly (frequently applications need to allocate a lot of memory during startup). To disable the Windows debug heap, add _NO_DEBUG_HEAP=1 to the environment block in your C++ project settings.

Read more about the debug heap and how we’ve removed the need for this step in Visual Studio 2015.
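To check whether the debug heap matters for your application, you can time an allocation-heavy workload with and without _NO_DEBUG_HEAP=1 set. The following is a minimal sketch, not part of Visual Studio: the function names, block size, and allocation count are illustrative assumptions, and real applications will have different allocation patterns.

```cpp
#include <chrono>
#include <cstddef>
#include <memory>
#include <vector>

// Allocate `count` small blocks, touch each one, then free them all.
// Returns how many blocks were allocated.
std::size_t churn_allocations(std::size_t count, std::size_t block_size) {
    std::vector<std::unique_ptr<char[]>> blocks;
    blocks.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        blocks.push_back(std::make_unique<char[]>(block_size));
        blocks.back()[0] = static_cast<char>(i);  // touch the block
    }
    return blocks.size();  // blocks are freed when the vector goes out of scope
}

// Time the allocation churn in milliseconds using a monotonic clock.
long long time_churn_ms(std::size_t count, std::size_t block_size) {
    const auto start = std::chrono::steady_clock::now();
    churn_allocations(count, block_size);
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration_cast<std::chrono::milliseconds>(stop - start).count();
}
```

Calling `time_churn_ms(1000000, 64)` from `main` and printing the result once under the debugger (F5) with the variable unset, and once with it set, gives a rough indication of how much the debug heap costs for this kind of workload.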

Breakpoints

Visual Studio gives you the ability to set breakpoints based on function names rather than individual lines of source code. However, these can result in a significant performance hit when debugging as every time a module is loaded into the process the debugger has to search it to determine if it contains any matching functions.

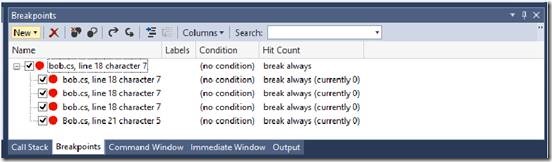

We have seen issues where traditional breakpoints become corrupted and are treated as function breakpoints which slows down debug startup. To determine if this is the case, look in your breakpoints window while debugging. If you see multiple copies of a breakpoint that should only be binding to a single location try deleting all of these from the Breakpoints window.

If you are using function breakpoints, you can improve performance by providing a fully qualified function name, meaning specify the function name in the format [Module]![namespace]
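To see why qualifying a function breakpoint can help, here is a toy model of the search described above. It is only a sketch under stated assumptions: the `Module` and `FunctionBreakpoint` types are hypothetical, the breakpoint text is split at the first `!`, and the idea that a module-qualified breakpoint lets the debugger skip non-matching modules is an assumption made for illustration, not a description of the debugger's actual implementation.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for a loaded module and the function names in its symbols.
struct Module {
    std::string name;
    std::vector<std::string> functions;
};

// Hypothetical breakpoint: `module` is empty when the name is unqualified.
struct FunctionBreakpoint {
    std::string module;
    std::string function;
};

// Split "Module!Function" at the first '!'; no '!' means unqualified.
FunctionBreakpoint parse_breakpoint(const std::string& text) {
    const std::size_t bang = text.find('!');
    if (bang == std::string::npos) return {"", text};
    return {text.substr(0, bang), text.substr(bang + 1)};
}

// Count the name comparisons needed to bind `bp` across all loaded modules.
std::size_t comparisons_to_bind(const FunctionBreakpoint& bp,
                                const std::vector<Module>& loaded) {
    std::size_t comparisons = 0;
    for (const Module& m : loaded) {
        // Assumed shortcut: a module-qualified breakpoint skips other modules.
        if (!bp.module.empty() && bp.module != m.name) continue;
        for (const std::string& fn : m.functions) {
            ++comparisons;
            if (fn == bp.function) break;  // bound in this module
        }
    }
    return comparisons;
}
```

In this model an unqualified name is compared against the functions of every loaded module, while a module-qualified name only touches the one module it names, so the gap grows with the number of modules the process loads.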

Symbol (.pdb) file loading

The debugger requires symbol (.pdb) files for various operations when debugging. At a very high level, a symbol file is a record of how the compiler translated your source code into executable code. So in order to interact with source code (stepping over source, setting breakpoints) the debugger requires that symbol files be loaded. Additionally, in other scenarios (e.g. when debugging native code) the debugger may require symbols in order to show you complete call stacks. You can determine if your application is loading a large number of symbol files by looking at the left side of the status bar at the very bottom of the Visual Studio window. Also, if symbol loading is continuously occurring you may see the cancelable symbol dialog, which is another hint that symbol loading may be causing the slowness.

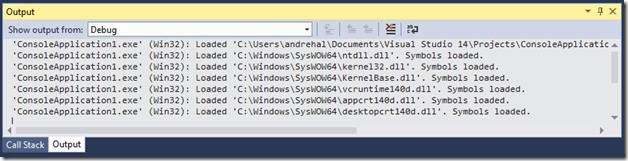

You can also look in the Output window, which will show you a record of all of the symbols that the debugger has loaded.

If based on the above information it appears your application is loading a significant number of symbols, then below are a few tips that you can try to improve your performance.

Note: if you’re not already familiar with how Visual Studio searches for symbol files, I would recommend first reading *this blog post on Visual Studio’s symbol settings.*

- **Symbol Settings:** Frequently performance can be improved by modifying your symbol settings.

- **Enable manual symbol loading:** This tells the debugger to only load symbols when you ask it to, or for binaries that you pre-specify.

- **Cache symbols on your local machine:** If you are loading symbols from a remote location, try caching those symbols on your local machine.

- **Turn off symbol servers:** Cache all of the symbols you need (or can find) from remote symbol servers and then disable them so you don’t continually search them.

- **Just My Code (.NET only):** If you’ve disabled Just My Code (it’s enabled by default), re-enable it under Debug -> Options by checking “Enable Just My Code”. When Just My Code is enabled, the debugger will not try to load symbols for the underlying framework. A few additional tips that relate to Just My Code include:

- **Do not Suppress JIT optimizations:** Visual Studio’s default settings tell the .NET runtime’s compiler not to optimize code when debugging. This means that loaded modules won’t be optimized, and therefore the debugger will try to load symbols for them. Assuming you are using a “Debug” build configuration for your application, the compiler won’t try to apply optimizations to those modules, so under Debug -> Options, change the default by unchecking “Suppress JIT optimization on module load (Managed only)”. This will allow the runtime to optimize libraries you are using (e.g. JSON.NET) so the debugger won’t try to load symbols for them. *Note that if you debug a “Retail” build of your application, changing this setting can affect your debugging experience by optimizing away variables and inlining code.*

- **.NET Framework Source Stepping:** If you change the default and enable .NET Framework source stepping you should know that it also disables Just My Code and automatically sets a symbol server. Turn this off under Debug -> Options by unchecking “Enable .NET Framework source stepping”. *Note: you will be prompted to disable this if you try to enable Just My Code while this is enabled.*

If you’ve had an issue with slow symbol loading let us know by voting for the UserVoice suggestion to improve symbol loading in Visual Studio. Also leave a comment there letting us know whether you were debugging managed or native code and some details about your application including how many modules you were loading symbols for, your Just My Code setting, your symbol settings (are you using a symbol server, local cache, automatic or manual loading), and your Suppress JIT Optimizations setting.

Now that we have covered the known cases where starting debugging can be slow, let’s switch our attention to the second category: entering break state.

Slow entering break state (including stepping)

When you hit a breakpoint or step, the debugger enters what we call “break mode”. This causes the current process to pause its execution so that its current state can be analyzed. As part of entering break mode the debugger will automatically do many things depending on the windows that you have open. These include:

- Populating call stack information

- Evaluating expressions in any visible watch windows

- Refreshing the contents of any other windows that could have changed while the application was running

Let’s look at the impact of each one of those in turn, and what you can do about them.

Call stacks

In order to give you a complete call stack for where your application is stopped the debugger performs what is called a “call stack walk” where it starts at the current instruction pointer and “walks” backwards until it reaches the function the current thread originated in. In some cases doing this can be a relatively slow process which can affect how long it takes for Visual Studio to become responsive as you enter break state. The things you can try to determine if this is your issue are:

- Disable “Show Threads in Source” if it is enabled, and also close the Parallel Stacks, Threads, Tasks, and GPU Threads windows if they are open. Those cause the debugger to walk the call stack for every thread in the process.

- Load symbols for all of the modules on your call stack. Especially when debugging x86 C++ applications, if you don’t have complete symbols the debugger has to use heuristics to walk the stack that can be expensive to calculate.

- A drastic move is to close anything that shows a call stack, to eliminate the “call stack walk” completely. Besides the multi-threading windows mentioned above, close the Call Stack window, and additionally remove the Debug Location toolbar (right click on the toolbars at the top and uncheck it) as it also shows the current stack frame.
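The “call stack walk” described above can be pictured with a toy model in which each frame records the frame that called it. This is an illustrative sketch only: the `Frame` type and function names are hypothetical, and a real debugger reads frames from the target process using symbols or heuristics rather than following ready-made pointers.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stack frame: each frame points at the frame that called it.
struct Frame {
    std::string function;
    const Frame* caller;  // nullptr for the function the thread originated in
};

// Walk from the innermost frame back to the function the thread originated in.
std::vector<std::string> walk_stack(const Frame* innermost) {
    std::vector<std::string> names;
    for (const Frame* f = innermost; f != nullptr; f = f->caller) {
        names.push_back(f->function);
    }
    return names;
}

// Total frames visited when every thread's stack is walked, as happens
// in this model when a window needs the call stack of each thread.
std::size_t frames_walked(const std::vector<const Frame*>& thread_tops) {
    std::size_t total = 0;
    for (const Frame* top : thread_tops) total += walk_stack(top).size();
    return total;
}
```

Walking only the current thread costs one pass over its frames, whereas anything that needs every thread's stack pays the sum over all threads, which is why closing those windows can make entering break state noticeably cheaper.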

If you’ve had an issue with your debugging session being slow due to stack walking, let us know by voting for the UserVoice suggestion to improve call stack performance in Visual Studio. Also leave a comment there about what type of application you were debugging, what programming language, what architecture (ARM, x86, x64), what windows were visible, what your symbol settings were, whether your Call Stack window was open, and what Call Stack window options you had enabled (e.g. Show Parameter Values).

Populating the Watch windows when debugging managed code

If you debug managed (.NET) code you are likely familiar with properties, which as you know are actually functions rather than just variables. So in order to retrieve the value of a property, the debugger must execute the function (called function evaluation). While most properties are simple, they have the potential to execute time-consuming algorithms. Occasionally you can run into a property that takes a long time to evaluate in one of the watch windows, which affects how quickly Visual Studio becomes responsive. If you suspect you may be running into this, you can try the following tips:

- Clear any properties from the Watch windows, or hide the Watch windows (by closing them or bringing another window on top of them in the same docking area). The debugger only evaluates expressions in the windows when they are visible.

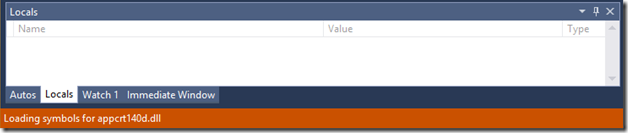

- Hide the Autos and Locals windows that automatically populate

- Alternately, disable “Enable property evaluation and other implicit function calls” under Debug -> Options.

If you’ve had an issue while stepping due to slow function evaluation let us know by voting for the UserVoice suggestion to improve function evaluation performance in Visual Studio. Also leave a comment there letting us know which version of Visual Studio you were using (e.g. 2013) and which window you were seeing the problem in.

Windows that refresh

Beyond the Watch and Call Stack windows that were called out above, the Breakpoints and Disassembly windows can be expensive to populate as well. You can determine if these are affecting your performance by hiding them (just like the Watch windows you only pay the cost to update these if they are visible).

If you determine that one of these windows was causing the problem, please let us know about it through Visual Studio’s Send a Smile feature or in a comment below.

.NET Edit and Continue

One of the great productivity features available when debugging is edit and continue. However, edit and continue does have restrictions on what types of edits can be applied (for example, edits cannot be applied if another thread is executing that line of code). This means that when the debugger enters break state it checks to see if an edit is going to be allowed so it can provide a proper error message. In most cases the amount of work required to validate this is quite small, but occasionally the debugger can hit a case where this proves expensive to calculate, so disabling edit and continue (Debug -> Options, and disable “Enable Edit and Continue”) will buy that time back.

It is on our backlog to modify the behavior of edit and continue to only perform the check for valid edits when you attempt to apply an edit.

Now let’s turn our attention to the third and last category of debugger slowness.

Managed application runs much slower when debugging

Sometimes managed applications run much slower when debugging than without debugging, e.g. it takes a few seconds to run to a point in your code when not debugging, but takes significantly longer if you launch with debugging. There are a few common causes for this behavior, so let’s look at them in turn, starting with the most common.

Large number of exceptions

When debugging managed (.NET) code, every time an exception occurs, regardless of whether it is handled or not, the target application is paused (just as if it had hit a breakpoint) so the runtime can notify the debugger that an exception occurred. This lets the debugger stop if you have set it to break on first chance exceptions; if not, the application is resumed. This means that if a significant number of exceptions occur, the repeated pausing will significantly slow down the application.

You can tell if a large number of exceptions are occurring by looking in the Output window. If you see a lot of “A first chance exception of type…” or “Exception thrown…” messages in the window, this is likely your problem.

To diagnose this potential issue, you may need to disable “Just My Code”, because when it is enabled the first chance exception messages do not appear for exceptions occurring in “external code” even though the notification overhead is still present. Once you’ve diagnosed whether first chance exceptions are your issue (or not), remember to turn “Just My Code” back on for all the other good reasons. Note that in Visual Studio 2015 we have greatly reduced the performance cost of first chance exceptions that occur outside your code (when Just My Code is enabled), but the performance impact remains unchanged for exceptions in your code.
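If the Output window is too long to scan by eye, you can copy its contents into a text file and tally the exception messages. The sketch below is a hypothetical helper, not a Visual Studio feature: it only looks for the two message prefixes quoted above and groups identical lines, and it makes no assumption about the rest of each message's format.

```cpp
#include <cstddef>
#include <istream>
#include <map>
#include <sstream>
#include <string>

// Tally Output-window lines that report a first chance exception.
// Identical lines are grouped so the most frequent message stands out.
std::map<std::string, std::size_t> tally_exception_lines(std::istream& output_text) {
    std::map<std::string, std::size_t> counts;
    std::string line;
    while (std::getline(output_text, line)) {
        const bool first_chance =
            line.find("A first chance exception of type") != std::string::npos;
        const bool thrown = line.find("Exception thrown") != std::string::npos;
        if (first_chance || thrown) ++counts[line];
    }
    return counts;
}

// Sum of all tallied exception messages.
std::size_t total_exception_lines(const std::map<std::string, std::size_t>& counts) {
    std::size_t total = 0;
    for (const auto& entry : counts) total += entry.second;
    return total;
}
```

Passing a `std::ifstream` opened on the saved text (or a `std::istringstream` for a quick check) to `tally_exception_lines` shows which messages repeat most often, which is usually where to start looking.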

Executing large amounts of non-optimized code

When Suppress JIT optimization on module load (Managed only) is enabled, the runtime’s Just In Time (JIT) compiler will not apply any performance optimizations to modules that require JIT compilation. This means that the code in these binaries may run significantly slower in some situations. In most cases the framework on your machine has been pre-compiled to native images using NGen so this setting does not affect the framework. However if you are using a large number of 3rd party references (e.g. from NuGet) you can improve the speed of these libraries by going to Debug -> Options and disabling “Suppress JIT optimizations on module load”.

Assuming your projects are compiled “Debug”, the JIT compiler will not attempt to apply any optimizations regardless of this setting. This means that if you are working in a very large project, you can compile some of the projects you are not actively debugging as Retail to improve that code’s performance as well. The downside to taking this approach in your code is that it may be difficult to debug into the optimized code if you unexpectedly need to. For example, variables are often optimized away so they cannot be inspected, and small functions and properties are inlined so breakpoints may never be hit and stepping can behave unexpectedly.

IntelliTrace [Managed Debugging on Visual Studio Ultimate Only]

IntelliTrace is a capability currently only in the Ultimate SKU. IntelliTrace helps developers be more productive with their everyday debugging, by offering a form of historical debugging. By default IntelliTrace collects select interesting events that you can use to understand what happened in your code after the code execution is long gone and is not on the stack anymore. This has low impact on your app’s execution time, but there is still some impact.

- If you see a large number of a particular event type in the IntelliTrace window and you are not interested in this type of event you can disable collection of that event from Tools -> Options -> IntelliTrace -> IntelliTrace events

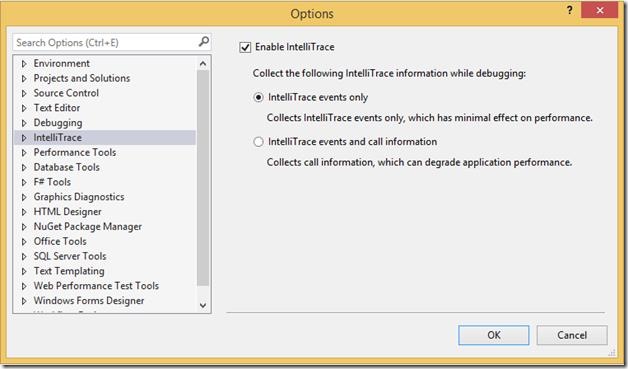

You can also turn on additional collection with IntelliTrace, and specifically you can collect all entry and exit points of all functions. While that makes debugging even easier the runtime cost can be significant. So if the performance impact while debugging is not acceptable to you, you can dial it back to collect Events only (and not “call information”).

- To reduce the performance impact of IntelliTrace, go to Tools -> Options -> IntelliTrace and select the “Collect Events Only” setting. Note that this is the default.

If you are still having problems, please collect a performance trace and report the issue using the Visual Studio feedback features (VS 2013 instructions, VS 2015 instructions). Additionally, you can vote for the UserVoice suggestion to improve IntelliTrace performance.

Conclusion

In this post we looked at the situations in which debugging can be slow for you, and talked about possible ways to work around them. I also provided some ways that you can give us feedback to help us prioritize fixing the areas where slow debugging affects you the most. These include several UserVoice items (Improve symbol loading performance when debugging, Improve Call Stack walking performance when debugging, Improve function evaluation performance, and Improve IntelliTrace performance). You can also always provide feedback below, through the Send a Smile feature in Visual Studio (if you do this, please include a performance trace of your problem: VS 2013 instructions, VS 2015 instructions), and in our MSDN forum.

Credit

Many thanks to Deesha Phalak, who put the majority of this content together. Deesha is an engineer on the Diagnostics team in Visual Studio.
