In today’s fast-paced software development cycles, manual testing often becomes a significant bottleneck. Our team was facing a growing backlog of test cases that required repetitive manual execution—running the entire test suite every sprint. This consumed valuable time that could be better spent on exploratory testing and higher-value tasks.

We set out to solve this by leveraging Azure DevOps’ new MCP server integration with GitHub Copilot to automatically generate and run end-to-end tests using Playwright. This powerful combination has transformed our testing process:

- Faster test creation with AI-assisted code generation

- Broader test coverage across critical user flows

- Seamless CI/CD integration, allowing hundreds of tests to run automatically

- On-demand test execution directly from the Azure Test Plans experience. (Associating Playwright JS/TS tests with manual test cases is coming soon; keep an eye on our release notes for the announcement.)

By automating our testing pipeline, we’ve significantly reduced manual effort, improved test reliability, and accelerated our release cycles. In this post, we’ll share how we did it.

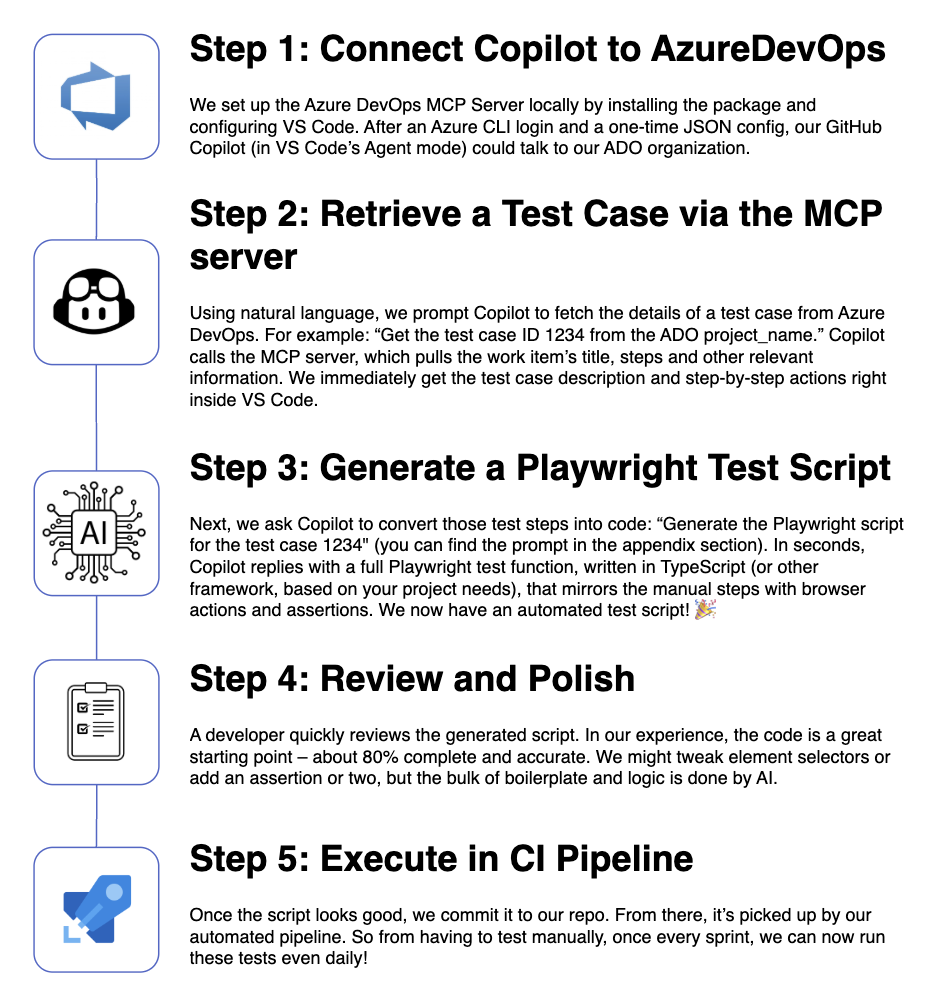

How We Turn Test Cases into Automated Scripts (Step-by-Step)

Enabling this AI-driven workflow required a few pieces to come together. Here’s how the process works from start to finish:

By following the above loop for each test case (or in bulk, by passing an entire Test Suite to GitHub Copilot), we gradually turned a manual test suite into an automated one. For scale, we have hundreds of test cases for our own domain alone and over a thousand for the entire project. The MCP server and Copilot handled the heavy lifting of writing code, while our team oversaw the process and made minor adjustments. It felt almost like magic: describing a test in plain English and getting a runnable automated script in return!

Challenges and Lessons Learned

- Prompt is king! How you prompt the AI matters: a clear, specific prompt yields better results. In our case, breaking the task into two prompts (“fetch test case” then “generate script”) produced more reliable code than a single combined prompt. We also sometimes had to experiment with phrasing; for example, the exact wording “convert the above test case steps to Playwright script” worked better than a vaguer command. In addition, make sure to point the model to the relevant code and files where you have existing tests. The more references you give, the more accurate the generated script will be. It’s a bit of an art, but the more we used it, the more we developed a feel for what phrasing GitHub Copilot responds to best. Thankfully, our test case descriptions were usually detailed and structured, which made it easier for the AI to identify the sequence of actions.

- Quality of context: You’ll need to spend extra time on one of two things:

  - Either improve your test cases in Azure DevOps by writing clearer, more detailed steps,

  - Or spend more time fixing the generated scripts later.

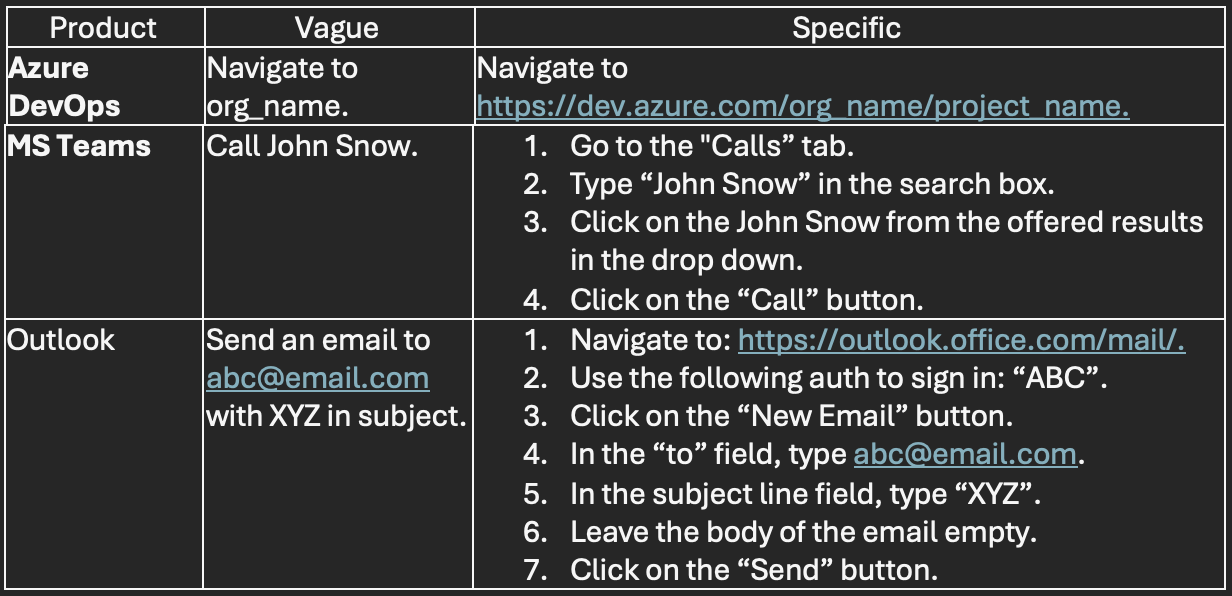

If you choose to improve the test cases, make sure they are specific. Some examples of vague and specific steps:

- Handling of Non-Textual Steps: Some test scenarios involve graphics or media (for example, “verify the chart looks correct” or checking an image). The current Copilot agent cannot interpret images or visual assertions – its domain is text. Our POCs confirmed that if a test step said “compare screenshot,” the AI would not magically do image comparison. The workaround is to adjust such steps to something verifiable via DOM or data (or handle those cases manually for now). In practice, this was a minor limitation – the vast majority of our test steps were things like “click this” or “enter that,” which AI handles well. But it’s good to be aware: for purely visual verifications, you’ll need to supplement with traditional methods, or use Playwright’s screenshot assertions with predefined baseline images.
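As an illustration of rewriting a visual step into one that is verifiable via data, here is a minimal sketch. It assumes the chart's values are also present in the page as text (for example in data labels or an accessible table) and have already been read into an array of strings. The helper name `checkSeries`, the `SeriesCheck` shape, and the default tolerance are hypothetical and not part of Playwright or our project.

```typescript
// Hypothetical result shape: whether the data matched, plus readable details.
interface SeriesCheck {
  ok: boolean;
  mismatches: string[];
}

// Compares text values read from the DOM against the expected numbers.
// Assumption: each text parses as a number once commas and whitespace are removed.
function checkSeries(
  actualTexts: string[],
  expected: number[],
  tolerance = 0.01,
): SeriesCheck {
  const mismatches: string[] = [];
  if (actualTexts.length !== expected.length) {
    mismatches.push(`expected ${expected.length} points, found ${actualTexts.length}`);
  }
  expected.forEach((want, i) => {
    const raw = actualTexts[i];
    if (raw === undefined) return; // missing point, already reported by the length check
    // parseFloat ignores trailing units such as "%", so only separators are stripped.
    const value = Number.parseFloat(raw.replace(/[,\s]/g, ""));
    if (Number.isNaN(value) || Math.abs(value - want) > tolerance) {
      mismatches.push(`point ${i}: expected ${want}, found "${raw}"`);
    }
  });
  return { ok: mismatches.length === 0, mismatches };
}

// Example with made-up values standing in for text scraped from the page.
const chartCheck = checkSeries(["1,200", "35.5%", " 7 "], [1200, 35.5, 7]);
console.log(chartCheck.ok, chartCheck.mismatches);
```

In a Playwright test, the input strings could come from a call such as `locator.allTextContents()`, and the test would then assert on `ok`. For checks that are visual by nature, Playwright's `expect(page).toHaveScreenshot()` compares the page against a stored baseline image instead.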

Appendix

Prompts

Below are the two prompts that can help you get started. After you generate the scripts, you can tweak them until they run successfully on your machine. Once you are happy with the scripts, you can create an Azure Pipeline to run them on a regular basis.

Make sure to tailor the prompts to your specific needs and context – this will help Copilot generate higher-quality scripts.

Prompt 1:

Get me the details of the test cases (do not action anything yet, just give me the details of each test case).

Test Information:

* ADO Organization: Org_Name

* Project: Project_Name

* Test Plan ID: Test_Plan_ID

* Test Suite ID: Test_Suite_ID
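If you run Prompt 1 against several test suites, filling in the placeholders by hand gets repetitive. The sketch below shows one way to build the prompt text from a small object. The `TestSuiteRef` type, the `buildFetchPrompt` function, and the sample values (`contoso`, `WebShop`, the IDs) are hypothetical and exist only for illustration; the resulting text is still pasted into Copilot chat as usual.

```typescript
// Hypothetical shape for the identifiers that Prompt 1 needs.
interface TestSuiteRef {
  organization: string;
  project: string;
  testPlanId: number;
  testSuiteId: number;
}

// Fills the Prompt 1 template with concrete values.
function buildFetchPrompt(ref: TestSuiteRef): string {
  return [
    "Get me the details of the test cases (do not action anything yet, just give me the details of each test case).",
    "",
    "Test Information:",
    `* ADO Organization: ${ref.organization}`,
    `* Project: ${ref.project}`,
    `* Test Plan ID: ${ref.testPlanId}`,
    `* Test Suite ID: ${ref.testSuiteId}`,
  ].join("\n");
}

// Placeholder values; replace them with your own organization, project, and IDs.
const fetchPrompt = buildFetchPrompt({
  organization: "contoso",
  project: "WebShop",
  testPlanId: 101,
  testSuiteId: 202,
});
console.log(fetchPrompt);
```

Keeping the template in one place also makes it easier to apply the same wording consistently once you have found phrasing that works well for your project.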

After Copilot gets the details for each test case, via the MCP server, use the following prompt:

Prompt 2:

Imagine you are an experienced Software Engineer helping me write high-quality Playwright test scripts in TypeScript based on the test cases I provided. Please go over the task twice to make sure the scripts are accurate and reliable. Avoid making things up and do not hallucinate. Use all the extra information outlined below to write the best possible scripts, tailored for my project.

# Project Context

Look at the "Project_name" folder, to get more insights (if your project is quite large, use the below section to be more concrete and reference specific folders/files).

My project structure includes:

* Authentication helpers: //*Add/folder/path*

* Existing sample tests: //*Add/folder/path*

* Playwright config: //*Add/folder/path*

* Test Structure: //*Add/folder/path/test-1656280.spec.ts*

* The project’s UX components are in the following folder: //*Add/folder/path*.

# Test Structure Requirements

For each test, please follow this structure:

1. Clear test description using *'test.describe()'* blocks

2. Proper authentication setup before any page navigation

3. Robust selector strategies with multiple fallbacks

4. Detailed logging for debugging

5. Screenshot captures at key points for verification

6. Proper error handling with clear error messages

7. Appropriate timeouts and wait strategies

8. Verification/assertion steps that match the test case acceptance criteria

# Robustness Requirements

Each test should include:

1. Retry mechanisms for flaky UI elements

2. Multiple selector strategies to find elements

3. Explicit waits for network idle and page load states

4. Clear logging of each test step

5. Detailed error reporting and screenshots on failure

6. Handling of unexpected dialogs or notifications

7. Timeout handling with clear error messages

# Environmental Considerations

The tests will run in:

* CI/CD pipeline environments

* Headless mode by default

* Potentially with network latency

* Different viewport sizes

# Example Usage

Please provide a complete implementation with:

1. Helper functions for authentication and common operations

2. Full test implementation for each test case

3. Comments explaining complex logic

4. Guidance on test execution

# Authentication Approach

In order for the tests to be executed, we need to authenticate the application. Use the below auth approach:

//{you need to define the authentication steps – if this is already defined for your project, instruct Copilot how to use it. If your scenarios do not require auth, you can remove this part from the prompt.}

# Configuration Reference

For timeouts, screenshot settings, and other configuration options, please refer to:

//{Add a reference to a specific file, etc. for better context}

I want these tests to be maintainable, reliable, and provide clear feedback when they fail.
