RAG – Retrieval Augmented Generation – is by far one of the most common patterns today as it enables the creation of chatbots that can chat on your own data, as I described in my previous “Retrieval Augmented Generation with Azure SQL” blog post. As soon as you start to use it excitement grows, as it provides a sort of a magical experience. Experience that unfortunately stops the moment you try to ask it question that requires the ability to extract data from a structured source, like a database, as RAG is great when dealing with unstructured data, like text.

By integrating the RAG pattern alongside NL2SQL, which translates natural language queries into SQL code, your chatbot can significantly enhance its capabilities. It can now seamlessly choose between RAG and NL2SQL based on the query, or even combine both methods to handle complex questions that require more than one approach. This advanced integration allows the chatbot to effectively respond to a wide range of inquiries, taking its functionality to the next level.

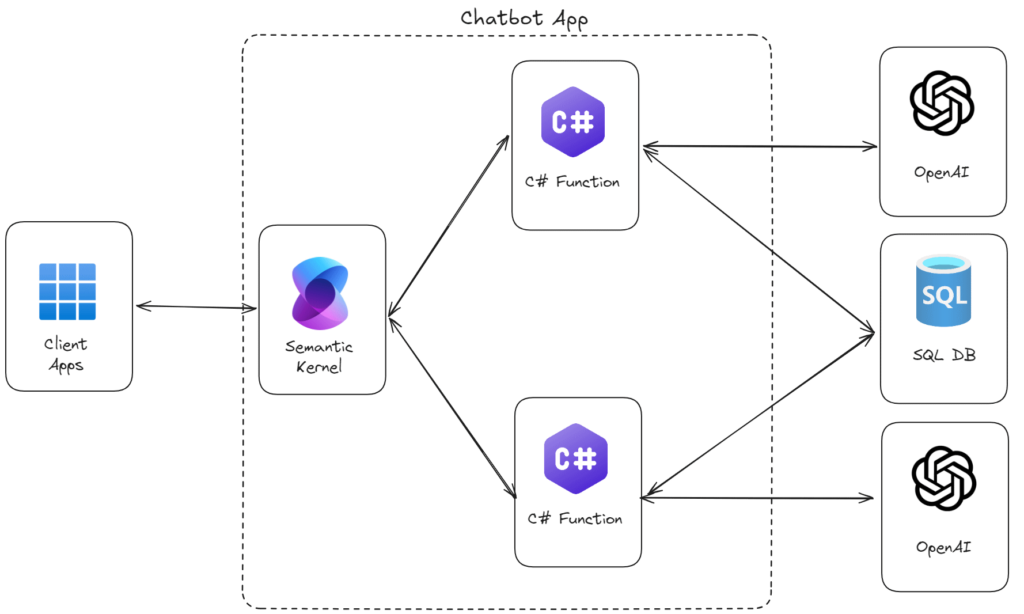

Semantic Kernel is the orchestrator library than enables the chatbot to be “intelligent” and figure out when to use what. For this chatbot, I’ll use a chat completion LLM like gpt-4 turbo. The high-level architecture is the following:

My own data

My sample chatbot will be using data stored in Azure SQL database about the SQL Konferenz conference I was in the last weeks. In the database there are stored details of all the sessions and to make the solution a bit more realistic, I decided to store some data in a regular relational format and some other in a JSON document:

Retrieval Augmented Generation

The RAG pattern is the simplest of the two pattern here, as thank to the vector support in Azure SQL, searching for similar session is as easy as writing a query using the new VECTOR_DISTANCE function as explained in the blog post on RAG mentioned before. The additional aspect is to allow the LLM to decide if and when to use the RAG pattern. Semantic Kernel comes to help here as doing that is as easy as decorating the method in doing similarity search with a cleat enough prompt:

[KernelFunction("GetSessionSimilarToTopic")]

[Description("Return a list of sessions at SQL Konferenz 2024 at that are similar to a specific topic or by a specific speaker name specified in the provided topic parameter. If no results are found, an empty list is returned. This function only return data from the SQL Konferenz 2024 conference.")]

public async Task<IEnumerable<Session>> GetSessionSimilarToTopic(string topic)

{

logger.LogInformation($"Searching for sessions related to '{topic}'");

DefaultTypeMap.MatchNamesWithUnderscores = true;

await using var connection = new SqlConnection(connectionString);

var sessions = await connection.QueryAsync<Session>("web.find_similar_sessions",

new { topic },

commandType: CommandType.StoredProcedure

);

return sessions;

}so when you ask a question like “is there any session on Azure SQL and AI?” you’ll see the chatbot “understanding” that it can use the method you have prepared for it to get the sessions that are similar to the asked topic:

Querying structured data

Once you find a session that interests you, such as the ‘Building AI Ready Applications’ session, you will likely want to know more. To do that, you will ask a question that requires a precise answer, which can only be found by running the right query in the database. For example, you may ask which track that session is in, and this can be answered only by running a query like:

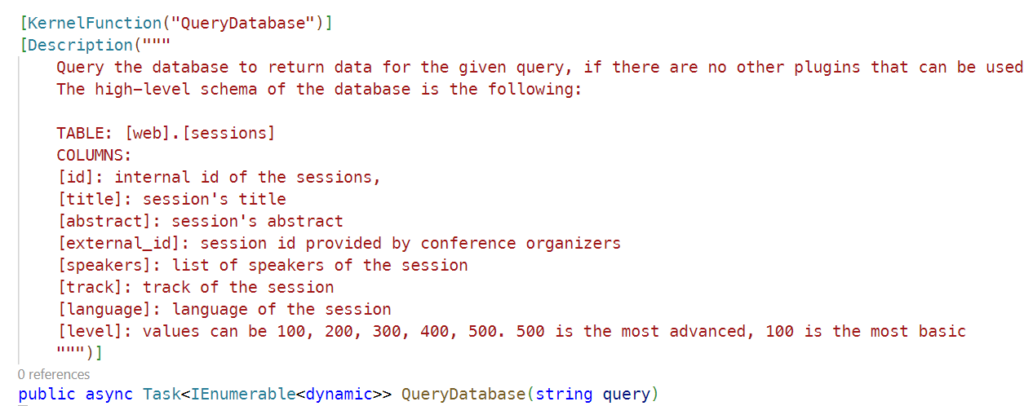

SELECT track FROM web.Sessions WHERE id = 123To make the chatbot able to execute such query, I have created a function that it can use that uses LLM behind the scenes to generate the correct SQL query. The function is decorated with a rich prompt that helps the LLM understand that is the high-level data structure I have in my database:

that helps the AI model to understand if and when this function can be used, based on the given question and context. Then, inside the function, as you can see in the source code, there is a more specific prompt that helps the LLM to generate the right T-SQL statement (even helping it to correctly cast data when needed, as JSON data type is still in preview and some implicit casting is not available yet), so that the query that is actually executed is correct with respect to the data model I have defined. In this example the query is going to be:

SELECT JSON_VALUE(details, '$.track') as track FROM web. Sessions WHERE Id = 49;as track is saved as a JSON property in the details column. Pretty impressive, right?

With this ability the chatbot is now able to answer pretty much all the questions you may want to ask. For example, if you ask “what are the other tracks?”, the generated query will be the following:

SELECT DISTINCT JSON_VALUE(details, '$.track') AS track FROM web.sessions;or even more complicated questions like “how many sessions there are per each language?” which will execute:

SELECT JSON_VALUE(details, '$.language') as language,

COUNT(*) as session_count

FROM web.sessions

GROUP BY JSON_VALUE(details, '$.language')

That is quite impressive, right? But, there’s more!

Is the chatbot *learning*?

Well…yes, in a certain sense. In my example, the system does not save the chat history, so it does not automatically persist what is learned in the database. That’s definitely something I’ll fix in future, given that the new JSON type makes save history in the database really straightforward, but for now it is good enough.

So, in which sense the chatbot is learning? Let’s try asking a question that will lead to generate a wrong query. For example, I can ask “are there sessions presented by italian speakers?”. In one of the memory I inject into the chatbot there is there information that I’m Italian, so the LLM decides to run the following SQL query:

SELECT * FROM [web].[sessions] WHERE [speakers] LIKE '%Italian%' OR [speakers] = 'Davide Mauri'which is translated to this T-SQL code:

SELECT *

FROM [web].[sessions]

WHERE JSON_QUERY(CAST([details] AS NVARCHAR(MAX)), '$.speakers') LIKE '%Italian%'

OR 'Davide Mauri' IN

SELECT value

FROM OPENJSON(CAST([details] AS NVARCHAR(MAX)), '$.speakers')that generates a syntax error, this one specifically:

Incorrect syntax near the keyword 'SELECT'.Thanks to Semantic Kernel integration and capabilities, the LLM is able to “understand” the error, the error message and call the function again, this time generating the correct T-SQL code, all without human intervention, finally coming up with the correct T-SQL query:

SELECT *

FROM [web].[sessions]

WHERE JSON_QUERY(CAST([details] AS NVARCHAR(MAX)), '$.speakers') LIKE '%Italian%'

OR JSON_QUERY(CAST([details] AS NVARCHAR(MAX)), '$.speakers') LIKE '%Davide Mauri%'and it returns the correct answer: “Yes, there is at least one session at SQL Konferenz 2024 that is presented by an Italian speaker, Davide Mauri. The session is titled “Azure SQL and SQL Server: All Things Developers” and it covers a range of new features for Azure SQL and SQL Server aimed at supporting developers to be more efficient and productive. The session will be in English and it’s on the track of Data Engineering with a level of 300, which is intermediate.”

That is truly impressive!

Conclusion

Using an orchestrator like Semantic Kernel in conjunction with RAG and NL2SQL can create chatbot that really have the “human” feeling and are capable to answer complex question over your data, giving you the option the build AI solutions that will make your users feel like there is something magic happening behind the scenes. And this is only the beginning. Future looks really interesting!

0 comments