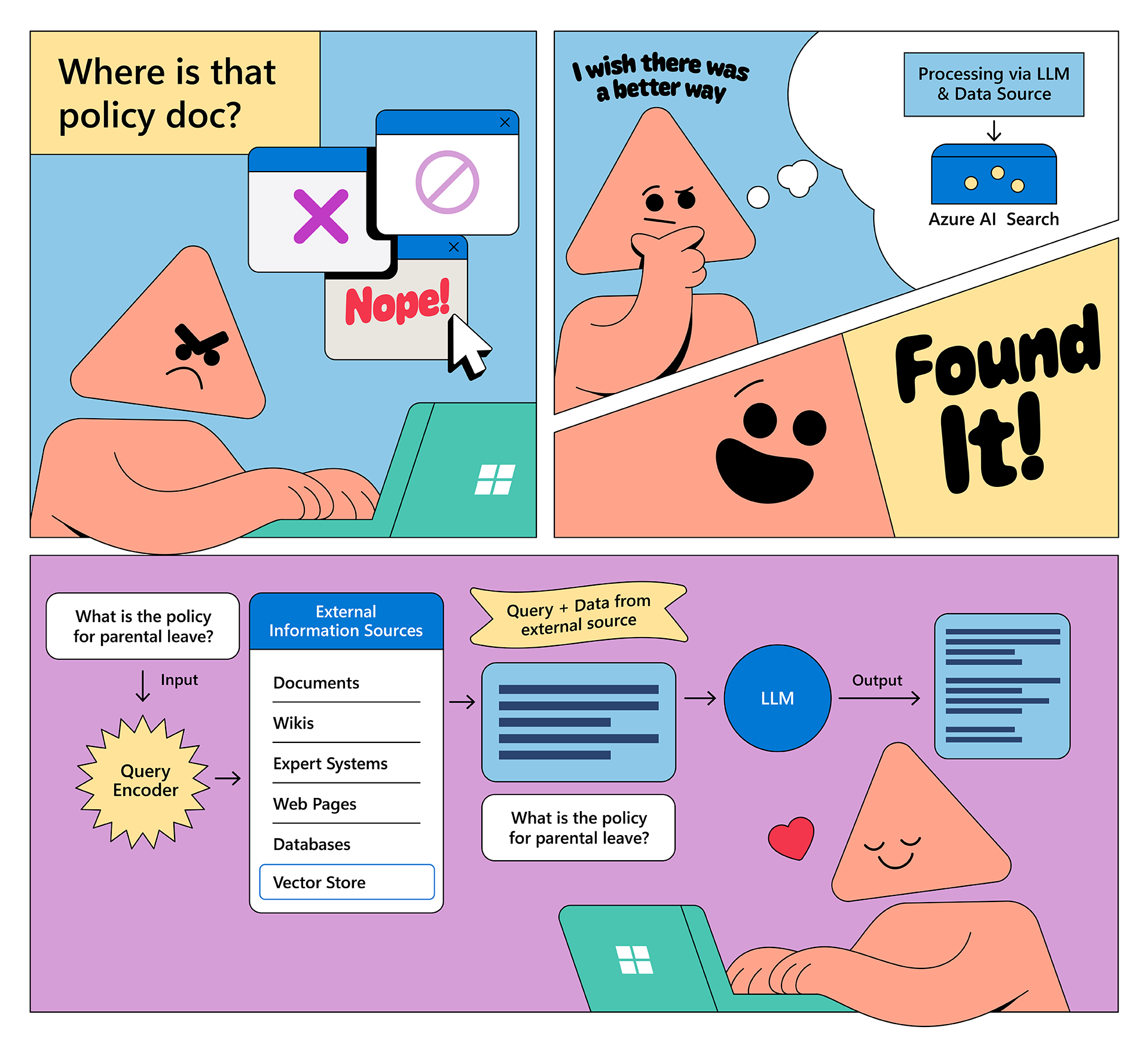

Do you find yourself drowning in company documentation, spending hours searching through SharePoint, knowledge bases, and contract repositories for one specific piece of information? You’re not alone. Organizations now have plenty of information – the challenge lies in finding the right information when you need it. We have built a packaged GitHub template that helps you harness AI to make YOUR data searchable and useful. This code sample will help you create your own ChatGPT-like enterprise search and chat experience, powered by Azure AI Search and large language models.

Use Cases

Consider these scenarios: A new employee asks, “What’s our parental leave policy?” and receives an instant, accurate response. A financial advisor preps for a client meeting by having a natural conversation with their documentation about emerging market funds. Legal teams ask questions like “What are the termination clauses?” and receive relevant excerpts instantly with links to source documents – no more manual searching through hundreds of pages.

Benefits

Unlike public ChatGPT, this solution keeps your sensitive data private. We designed this pattern for privacy-first, enterprise-grade deployments where you maintain control of your data. Financial services, manufacturing, government, and legal organizations already use this pattern to boost their employee productivity and customer service operations. Teams see faster response times, reduced operational costs, and employees who focus on high-value work instead of document searches.

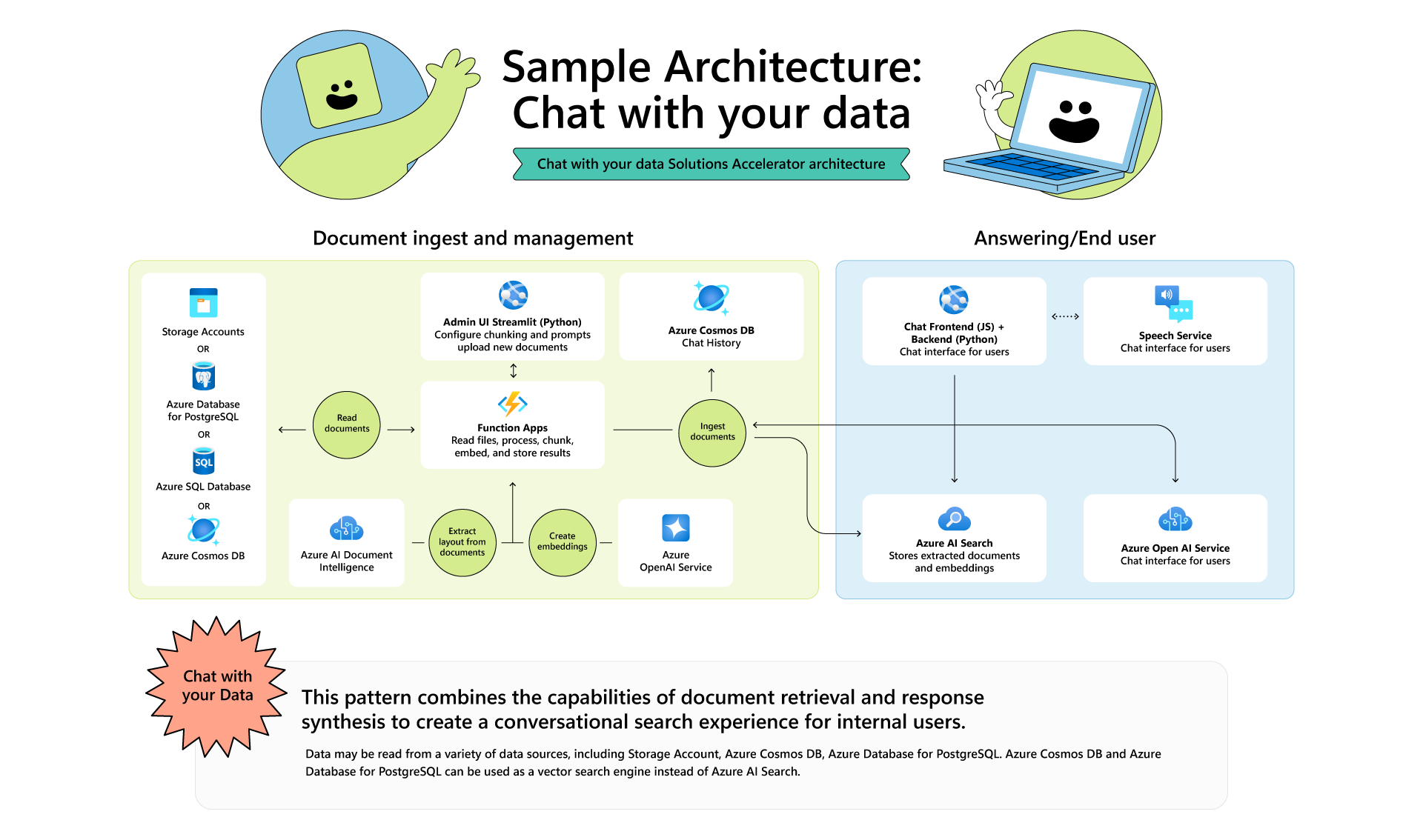

How It Actually Works: The Architecture

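Our pattern uses RAG (Retrieval-Augmented Generation): at inference time, the system retrieves relevant passages from your data sources and passes them to the LLM, so responses are grounded in your own content rather than only in what the model learned during training. Here’s how it works: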

1. Document Ingestion and Processing: Function Apps ingest your documents (PDFs, Word files, etc.), chunk them, and process them into embeddings. Azure AI Document Intelligence extracts document layout while Azure OpenAI Service creates embeddings.

2. Chat History: Azure Cosmos DB stores your questions and responses, letting you continue conversations where you left off.

3. Store Embeddings: Azure AI Search stores the document chunks and their embeddings for retrieval.

4. Smart Retrieval: When you ask a question, the system finds the most relevant information chunks from Azure AI Search.

5. Response: Azure OpenAI Service transforms those chunks into human-friendly responses.
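To make the flow above concrete, here is a minimal, self-contained sketch of the same steps in plain Python. It is not the template's code and does not call any Azure service: the word-count `embed` function is a toy stand-in for an embedding model, `InMemoryIndex` stands in for Azure AI Search, a plain list stands in for the chat history store, and the file names and policy text are made-up sample data. Every function and class name here is hypothetical and chosen only for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40, overlap=10):
    """Split text into overlapping word windows (stand-in for layout-aware chunking)."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text):
    """Toy embedding: a term-count vector. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Hypothetical stand-in for a vector index: stores (source, chunk, vector) entries."""

    def __init__(self):
        self.entries = []

    def add_document(self, source, text):
        # Steps 1 and 3: chunk the document, embed each chunk, store the result.
        for chunk in chunk_text(text):
            self.entries.append((source, chunk, embed(chunk)))

    def search(self, query, top_k=2):
        # Step 4: rank stored chunks by similarity to the question.
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vector, e[2]), reverse=True)
        return [(source, chunk) for source, chunk, _ in ranked[:top_k]]


def build_prompt(question, hits, history):
    """Step 5 input: combine retrieved chunks and prior turns into one grounded prompt."""
    context = "\n".join(f"[{source}] {chunk}" for source, chunk in hits)
    past = "\n".join(f"{role}: {message}" for role, message in history)
    return (
        "Answer using only the sources below and cite them.\n\n"
        f"Sources:\n{context}\n\n"
        f"Conversation so far:\n{past}\n\n"
        f"user: {question}"
    )


# Made-up sample documents, for illustration only.
index = InMemoryIndex()
index.add_document(
    "hr-handbook.pdf",
    "Parental leave policy: employees may take sixteen weeks of paid parental leave.",
)
index.add_document(
    "contract-042.docx",
    "Termination clauses: either party may terminate with thirty days written notice.",
)

history = []  # Step 2: a list stands in for the chat history store.
question = "What is our parental leave policy?"
hits = index.search(question, top_k=1)
prompt = build_prompt(question, hits, history)
history.append(("user", question))
print(prompt)
```

In a real deployment, `prompt` would be sent to a chat model and the reply appended to `history`. Keeping the source name next to each chunk is what lets the final answer link back to the original document.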

What You Can Build

Developers create powerful applications with this pattern across various domains. HR teams deploy intelligent bots that handle leave requests and benefits inquiries, providing step-by-step guidance and relevant document links. Legal teams speed up contract management with assistants that instantly surface similar clauses from thousands of past contracts. Customer support teams build systems that tap into their entire knowledge base – every manual, FAQ, and historical support ticket – enabling support agents to provide faster, more accurate responses to complex queries. These intelligent assistants understand context, provide documentation links, and guide users through complex processes.

Get Started

Find our complete solution on GitHub, including sample code, deployment scripts, and detailed documentation. Start with the basics and customize it for your needs.

Remember: We built this to make your organization’s knowledge accessible and useful – not just to create another chat interface. Now go build something awesome!

Want to connect? Join our active developer community in the GitHub repo’s issues section, where teams share their innovations with this pattern.

Happy coding!
