DevOps for Quantum Computing

Azure Quantum with its Quantum Development Kit (QDK) makes it easy for quantum researchers and data scientists to start writing quantum algorithms backed by the reliability and scale of Azure. However, a quantum algorithm typically represents only one part of a larger system that also includes classical software components; such systems are hybrid quantum systems. As a consequence, developing, deploying, monitoring, and evolving these systems, with the different roles involved, becomes more complex. This blog post shows how DevOps practices need to adapt when software includes quantum components. The result is a repeatable, high-quality process for building, deploying, and monitoring hybrid quantum software.

Quantum influence on all aspects of DevOps

DevOps can be defined as “the union of people, process, and products to enable continuous delivery of value to our end users”. This definition gives a great structure for discussing how the quantum part influences all areas of DevOps.

- People – Designing, developing, and operating quantum algorithms requires skills that differ from those needed for classical components. For example, designers and developers typically include quantum information scientists and similar roles. Similarly, operations staff must be familiar with specialized target systems (optimization solvers, quantum hardware).

- Process – You typically develop classical and quantum components separately. At some stage, you need to integrate them into the overall solution. As a consequence, you need to align the quantum and the classical DevOps activities.

- Products – You need to consider the lifecycle of the different execution environments. Specialized quantum computers are scarce resources. In general, they are operated as central resources and accessed by various classical clients.

Microsoft’s One Engineering System (1ES) team gives insights into how internal teams at Microsoft adopted a DevOps-driven culture. They published their learnings and an approach for evolving such a culture through five steps that any team can apply. Many of these steps are also useful in a DevOps for Quantum Computing approach.

DevOps practices for hybrid quantum applications

Infrastructure as Code (IaC)

Like any other Azure environment, quantum workspaces and the classical environments can be automatically provisioned by deploying Azure Resource Manager templates. These JavaScript Object Notation (JSON) files contain definitions for the two target environments:

- The quantum environment contains all resources required for executing quantum jobs and storing input and output data: an Azure Quantum workspace connecting hardware providers, and its associated Azure Storage account for storing job results after they are complete. Keep this environment in its own resource group. This separates the lifecycle of these resources from that of the classical resources.

- The classical environment contains all other Azure resources you need for executing the classical software components. The types of resources depend heavily on the selected compute model and integration model. You would often recreate this environment with each deployment.

You can store and version both templates in a code repository (for example, Azure Repos or GitHub repositories).
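To make the split between the two templates concrete, here is a minimal Python sketch that builds skeletons of both templates as dictionaries and serializes them to JSON. The resource group names, parameter names, and storage SKU are illustrative assumptions. The workspace `apiVersion` is passed in because it should be taken from the current `Microsoft.Quantum/workspaces` template reference, and the workspace `properties` (provider list, storage account link) are intentionally omitted, so the quantum skeleton is not deployable as-is.

```python
import json

# Schema URL for resource-group-level ARM deployment templates.
SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"


def quantum_template(workspace_api_version: str) -> dict:
    """Long-lived quantum environment: workspace plus its storage account."""
    storage_id = "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]"
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "workspaceName": {"type": "string"},
            "storageAccountName": {"type": "string"},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2021-09-01",
                "name": "[parameters('storageAccountName')]",
                "location": "[parameters('location')]",
                "sku": {"name": "Standard_LRS"},  # assumption: pick the SKU you need
                "kind": "StorageV2",
            },
            {
                "type": "Microsoft.Quantum/workspaces",
                "apiVersion": workspace_api_version,  # assumption: take from the docs
                "name": "[parameters('workspaceName')]",
                "location": "[parameters('location')]",
                "dependsOn": [storage_id],
                # "properties" (providers, storage account link) omitted in this sketch
            },
        ],
    }


def classical_template() -> dict:
    """Short-lived classical environment; its resources depend on the compute model."""
    return {"$schema": SCHEMA, "contentVersion": "1.0.0.0", "resources": []}


# Hypothetical mapping: one resource group per environment keeps lifecycles separate.
TEMPLATES_BY_RESOURCE_GROUP = {
    "rg-quantum": quantum_template(workspace_api_version="<api-version>"),
    "rg-classical": classical_template(),
}

if __name__ == "__main__":
    for group, template in TEMPLATES_BY_RESOURCE_GROUP.items():
        print(f"# {group}")
        print(json.dumps(template, indent=2))
```

Because each template targets its own resource group, a pipeline can redeploy the classical group on every change while leaving the quantum group untouched.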

Continuous Integration (CI)

With hybrid quantum applications, Continuous Integration remains an important part of DevOps. As soon as you commit your code to the repository, it needs to be automatically tested and integrated with the other parts of the software. For the purely classical parts of the application, existing testing best practices still apply. The Microsoft Azure Well-Architected Framework also gives some general guidance on CI best practices.

The quantum components and their integration require special treatment. The components themselves have their own execution environment. In addition, you need to test the classical code that manages the quantum components, that is, the code that submits jobs to the quantum workspace and monitors them.
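One way to test that orchestration code in CI without touching quantum hardware is to hide the workspace behind a small interface and substitute a fake in tests. The sketch below assumes a hypothetical `WorkspaceClient` protocol with `submit` and `status` methods; it is not the Azure Quantum SDK API, and `example.target` is a placeholder target name.

```python
from dataclasses import dataclass, field
from typing import Protocol


class WorkspaceClient(Protocol):
    """Hypothetical interface around the workspace calls the orchestrator needs."""

    def submit(self, program: str, target: str, shots: int) -> str: ...

    def status(self, job_id: str) -> str: ...


def submit_job(client: WorkspaceClient, program: str, target: str, shots: int) -> str:
    """Classical orchestration logic under test: validate the request, then submit."""
    if shots < 1:
        raise ValueError("shots must be a positive integer")
    if not target:
        raise ValueError("a target must be specified")
    return client.submit(program, target, shots)


@dataclass
class FakeWorkspaceClient:
    """Test double that records submissions instead of calling Azure."""

    submitted: list = field(default_factory=list)

    def submit(self, program: str, target: str, shots: int) -> str:
        self.submitted.append((program, target, shots))
        return f"job-{len(self.submitted)}"

    def status(self, job_id: str) -> str:
        return "Waiting"


def test_valid_request_is_forwarded():
    fake = FakeWorkspaceClient()
    job_id = submit_job(fake, "program", "example.target", 100)
    assert job_id == "job-1"
    assert fake.submitted == [("program", "example.target", 100)]


def test_invalid_shots_never_reach_workspace():
    fake = FakeWorkspaceClient()
    try:
        submit_job(fake, "program", "example.target", 0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    assert fake.submitted == []  # nothing was sent to the workspace
```

A test runner such as pytest picks up the `test_` functions; in production, the same `submit_job` function would receive a client that wraps the real workspace.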

Automated Testing

Testing of quantum components includes the following activities:

- Unit tests implemented via test projects and executed on a quantum simulator.

- Estimation of resources required to run the job later on quantum hardware.

- Tests executed on quantum hardware – potentially the target production environment.

For testing purposes, you can either submit the quantum jobs via the classical components used in production, or by components (for example CLI scripts) specially written for automated testing.
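The first activity, unit tests on a simulator, benefits from the fact that a simulator exposes the full state vector, so tests can assert on exact amplitudes rather than on measurement statistics. As a self-contained stand-in (a real project would use a Q# test project and the QDK simulator instead), this minimal Python sketch applies a single-qubit Hadamard gate to a state vector and checks the resulting amplitudes.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)


def apply_hadamard(state: list[complex]) -> list[complex]:
    """Apply the Hadamard gate to a single-qubit state vector [amp_0, amp_1]."""
    amp0, amp1 = state
    return [SQRT_HALF * (amp0 + amp1), SQRT_HALF * (amp0 - amp1)]


def test_hadamard_creates_uniform_superposition():
    state = apply_hadamard([1, 0])
    # Both amplitudes equal 1/sqrt(2): a direct check that hardware cannot offer.
    assert all(math.isclose(abs(a), SQRT_HALF) for a in state)


def test_hadamard_is_self_inverse():
    state = apply_hadamard(apply_hadamard([0, 1]))
    assert math.isclose(abs(state[0]), 0.0, abs_tol=1e-12)
    assert math.isclose(abs(state[1]), 1.0)
```

Tests like these are deterministic and cheap, which makes them a good fit for running on every commit.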

Testing of the quantum components has the following requirements:

- Choose the execution environment for tests carefully. You can efficiently execute many tests on a quantum simulator (where there is access to the full state vector of qubit registers). However, you need quantum hardware for tests that exercise the probabilistic behavior of the real device.

- You need to run tests that cover these probabilistic portions of algorithms multiple times to make sure that a successful test will stay successful on subsequent runs.

- Results of program execution must be validated according to their nature, often using quantum-specific tools and tricks (some are described in part 1 and part 2 of the previous blog posts titled “Inside the Quantum Katas”). Tests must check whether results represent valid solutions and honor existing constraints.
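The last two requirements can be combined in one test: run the probabilistic program many times, validate every result against the problem's constraints, and require a minimum success rate instead of a single correct answer. In this Python sketch, `noisy_run` is a hypothetical stand-in for a hardware job that returns a correct answer 90% of the time; the 2-coloring constraint on a 4-node path graph and the 0.85 threshold are illustrative assumptions.

```python
import random

# Hypothetical problem: 2-color a 4-node path graph so that neighbors differ.
EDGES = [(0, 1), (1, 2), (2, 3)]


def is_valid_solution(bits: str, edges=EDGES) -> bool:
    """Constraint check: adjacent nodes must be assigned different bits."""
    return all(bits[u] != bits[v] for u, v in edges)


def noisy_run(rng: random.Random) -> str:
    """Stand-in for a hardware job: correct 90% of the time, otherwise random noise."""
    if rng.random() < 0.9:
        return rng.choice(["0101", "1010"])  # the only two valid colorings
    return "".join(rng.choice("01") for _ in range(4))


def success_rate(runs: int, seed: int) -> float:
    """Fraction of runs whose result satisfies all constraints."""
    rng = random.Random(seed)
    valid = sum(is_valid_solution(noisy_run(rng)) for _ in range(runs))
    return valid / runs


def test_success_rate_is_stable(threshold: float = 0.85) -> None:
    # Repeat the whole experiment several times so one lucky run cannot pass the test.
    for seed in range(5):
        rate = success_rate(runs=1000, seed=seed)
        assert rate >= threshold, f"seed {seed}: success rate {rate:.3f} below {threshold}"
```

On real hardware, each repetition would be a separate job submission rather than a new random seed, so the number of runs is a trade-off between confidence and hardware cost.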

During integration, the quantum components are bundled with the classical components. The result represents the deployment artifact that gets installed on the classical environment during subsequent steps.

Continuous Delivery (CD)

Continuous Delivery involves building, testing, configuring, and deploying the application. If your organization has a DevOps culture that supports it, you can automate these steps up to the deployment in production environments.

Configuring and deploying the application involves the following steps:

- Provisioning of target environments

  - You can automate the environment provisioning by deploying the ARM templates stored in the repository.

  - It is not necessary to reprovision the quantum components every time the code changes. As the quantum code doesn’t persist on these components (classical components submit the jobs on demand), you should reprovision these components only on special occasions, for example, a full, clean deployment.

  - You can reprovision the classical components with every code change. To minimize disruption during deployment, you can implement a staged approach and use features offered by Azure compute services for high availability.

- Configuration of target environments

  - As the classical components need permissions to submit quantum jobs, define managed identities on the classical components (for example, the Azure Function that orchestrates jobs). With managed identities, you can restrict access to the quantum resources to only those components that need it for job orchestration.

  - Grant the classical components access to the quantum workspace so that they can submit and monitor quantum jobs: add a Contributor role assignment for the managed identity on the quantum workspace.

- Shipping of application artifacts to the target environments

  - Shipping involves deploying the classical application package (which includes the quantum job artifacts) to the chosen compute service.
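The provisioning and configuration rules above can be expressed as a small piece of pipeline logic. This Python sketch assumes a hypothetical pipeline flag `full_clean_deployment` and placeholder subscription, resource group, workspace, and principal IDs. It only builds the Azure CLI command for the Contributor role assignment instead of running it, so the exact invocation should be checked against the `az role assignment create` reference.

```python
def environments_to_provision(full_clean_deployment: bool) -> list[str]:
    """Classical resources on every deployment; quantum resources only on a full, clean one."""
    if full_clean_deployment:
        return ["quantum", "classical"]
    return ["classical"]


def workspace_scope(subscription_id: str, resource_group: str, workspace: str) -> str:
    """Resource ID of the Azure Quantum workspace, used as the role assignment scope."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Quantum/workspaces/{workspace}"
    )


def grant_contributor_command(scope: str, principal_id: str) -> list[str]:
    """Azure CLI arguments granting a managed identity Contributor on the workspace."""
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", principal_id,
        "--assignee-principal-type", "ServicePrincipal",
        "--role", "Contributor",
        "--scope", scope,
    ]


if __name__ == "__main__":
    # Placeholder values; in a pipeline these would come from deployment outputs.
    scope = workspace_scope("<subscription-id>", "rg-quantum", "<workspace-name>")
    print(environments_to_provision(full_clean_deployment=False))  # ['classical']
    print(" ".join(grant_contributor_command(scope, "<principal-id>")))
```

Passing the argument list to `subprocess.run(cmd, check=True)` would execute it. Leaving the quantum environment out of the default plan mirrors the fact that no quantum code is persisted in the workspace.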

Application Monitoring

Once a classical component has submitted a quantum job, it must monitor the status of the job. The quantum workspace provides an API to query the status. The classical component can log the job status via custom events in Application Insights. This integrates the status information with all other application logging, so that it can be analyzed and visualized with Azure Monitor.
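A minimal polling loop for this pattern might look as follows. `get_status` and `track_event` are hypothetical callables: in practice, the first would wrap the workspace's job status API and the second your Application Insights client. The terminal status names are assumed to match the job states reported by Azure Quantum and should be verified.

```python
import time
from typing import Callable

# Assumed terminal job states; verify against the workspace API you use.
TERMINAL_STATES = {"Succeeded", "Failed", "Cancelled"}


def monitor_job(
    job_id: str,
    get_status: Callable[[str], str],
    track_event: Callable[[str, dict], None],
    poll_seconds: float = 10.0,
    max_polls: int = 360,
) -> str:
    """Poll a job until it reaches a terminal state, emitting an event on each status change."""
    last_status = None
    for _ in range(max_polls):
        status = get_status(job_id)
        if status != last_status:
            track_event("QuantumJobStatusChanged", {"jobId": job_id, "status": status})
            last_status = status
        if status in TERMINAL_STATES:
            return status
        time.sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish after {max_polls} polls")


if __name__ == "__main__":
    # Fake status sequence standing in for the workspace API.
    statuses = iter(["Waiting", "Waiting", "Executing", "Succeeded"])
    events = []
    final = monitor_job(
        "job-1",
        get_status=lambda _job_id: next(statuses),
        track_event=lambda name, props: events.append(props["status"]),
        poll_seconds=0,
    )
    print(final, events)  # Succeeded ['Waiting', 'Executing', 'Succeeded']
```

Emitting events only on status changes keeps telemetry volume low while still recording the job's full lifecycle next to the other application logs.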

The move to the cloud can help evolve the operations model and reshape the relationship between operations and development teams. Microsoft has published the challenges its team faced during this evolution, their journey, and the results they have seen from redefining IT monitoring.

Putting everything together

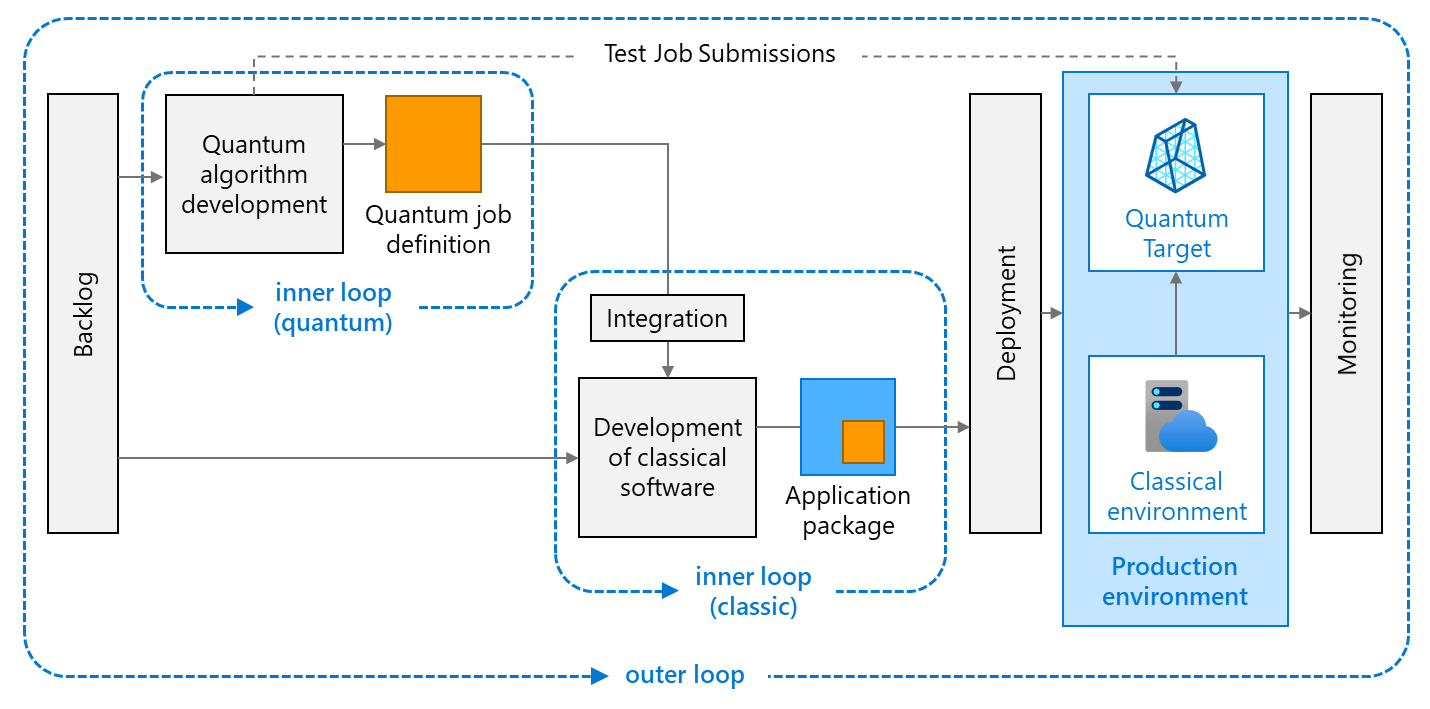

In DevOps, we typically distinguish between the inner and outer loop.

The outer DevOps loop

The outer loop involves activities typically associated with a full DevOps-cycle:

- Managing work items in a product backlog.

- Developing and testing software artifacts (via inner loops).

- Building and deploying these artifacts centrally, including provisioning the target environment.

- Monitoring the running application. Results might lead to new work items in the backlog.

The inner DevOps loop

The inner loop is the iterative process a (quantum or classical) developer performs when writing, building, and testing code. It takes place on IT systems that an individual developer owns or is responsible for (for example, the developer machine). In DevOps for Quantum Computing, there can be two inner loops.

For quantum components, the inner loop involves the following activities, enabled by the Quantum Development Kit. Experts in quantum algorithm development typically perform these activities; typical roles include quantum engineers, quantum architects, and similar roles:

- Write quantum code

- Use libraries to keep code high level

- Integrate with classical software

- Run quantum code in simulation

- Estimate resources

- Run (test) code on quantum hardware

The last three steps are specific to quantum computing. Because quantum hardware is a scarce resource, developers typically neither own hardware nor have exclusive access to it. Instead, they use centrally operated hardware; in most cases, they access production hardware to test their development artifacts.

The inner loop for classical components includes the typical development steps for building, running, and debugging code in a development environment. In the context of hybrid quantum applications, there is an extra step of integrating the quantum components into the classical components. This step doesn't require special quantum computing skills; developers can typically implement the integration with classical programming skills.

Conclusion

As quantum computing continues to evolve, more powerful hardware becomes available. As a consequence, quantum algorithm development is leaving its exploratory phase. Developers need a solid approach to ensure a repeatable, high-quality process for building, deploying, and monitoring hybrid quantum applications. In this context, DevOps for Quantum Computing extends existing DevOps principles to support the development of software consisting of classical and quantum parts.
