DacFx Public Model Tutorial

Recently there has been an increasing interest in extending the capabilities of SSDT and DacFx. The walkthrough guides for creating new build and deployment contributors and database unit test conditions are a useful start in exploring the tools, but they only scratch the surface of what’s possible. They also don’t really show the best practices for developers when building and debugging extensions such as deployment contributors. In this article we’ll fix that by covering the key concepts behind DacFx extensions, solve real customer issues and highlight best practices. All of the code in this tutorial is available at https://github.com/Microsoft/DACExtensions.

What is the public model?

The key to most extensibility is the public model API. Dacpacs and SSDT projects both model a database’s schema. The public model API lets you access that model programmatically. You can load, query and manipulate the schema to do whatever you’d like. Most scenarios will rely on some level of querying the model and examining the objects that describe the database.

The public model API is loosely typed: the TSqlModel contains loosely typed TSqlObjects that represent all the elements in your schema. Each object will have some Properties that describe its state, and Relationships to other objects in the model. Whether the object you’re looking at is a Table, View, Index or anything else, they’re all represented by the one TSqlObject class.

Of course if everything is a TSqlObject, how can you tell Tables and Views apart? How can you even know what properties and relationships a Table has? That’s where the strongly-typed metadata classes come in. The majority of classes in the model API are actually metadata classes – you’ll see Table, View, etc. Each class has a number of fields that list the Properties and Relationships for that type of object. To lookup Tables in the model you pass in the Table.TypeClass to GetObjects, and then only tables are returned. To get the Columns for a table, you ask for relationships and pass in the Columns relationship class. If all this seems complicated, the code examples should make it clearer. The important thing to note is that you’ll pass in these metadata descriptions whenever you query the model.

Scenario: using the public model API

OK, let’s get started with using the public model! We’ll show the basics of loading, reading, adding to and saving the model. All the code in this example is in the SampleConsoleApp\ModelEndToEnd.cs sample, and can be run by specifying “RunEndToEnd” when running the SampleConsoleApp application.

Loading a model

Loading a model is really simple – either point to the location of an existing Dacpac, or create an empty model and add scripts to it.

1: // Load from a dacpac

2: using (TSqlModel modelFromDacpac = new TSqlModel("mydb.dacpac"))

3: {

4: // Note: models are disposable, always have a “using” statement or

5: // some other way of disposing them

6: }

7:

8: // Creating a new SQL Server 2012 model and adding some scripts to it

9: using (TSqlModel model =

10: new TSqlModel(SqlServerVersion.Sql110, new TSqlModelOptions { }))

11: {

12: string[] scripts = new[]

13: {

14: "CREATE TABLE t1 (c1 NVARCHAR(30) NOT NULL)",

15: "CREATE TABLE t2 (c2 INT NOT NULL)"

16: };

17: foreach (string script in scripts)

18: {

19: model.AddObjects(script);

20: }

21: }

Notes:

All the database options that you can specify in an SSDT project can be defined using TSqlModelOptions when creating a new model.

The samples show how to copy options from an existing model to a new model if you need to do this.

When adding objects to the model there are certain properties such as how they treat ANSI nulls and QuotedIdentifiers that can be defined.

Reading a Table, its properties and relationships

Reading top level types such as Tables and Views is easy, as shown below. Top level types are any type that could be defined independently in TSQL – Tables, Views, but also things like a Primary Key Constraint since this can be specified in an ALTER TABLE statement.

1: private static void ReadTheModel(TSqlModel model)

2: {

3: // This will get all tables. Note the use of Table.TypeClass!

4: var tables = model.GetObjects(DacQueryScopes.Default, Table.TypeClass).ToList();

5:

6: // Look up a specific table by ID. Note that if no schema is defined when creating

7: // an element the default "dbo" schema is used

8: var t1 = model.GetObjects(Table.TypeClass,

9: new ObjectIdentifier("dbo", "t1"), DacQueryScopes.Default).FirstOrDefault();

10:

11: // Get a the column referenced by this table, and query its length

12: TSqlObject column = t1.GetReferenced(Table.Columns)

13: .First(col => col.Name.Parts[2].Equals("c1"));

14:

15: int columnLength = column.GetProperty<int>(Column.Length);

16: Console.WriteLine("Column c1 has length {0}", columnLength);

17:

18:

19: // Verify the ColumnType of this column. This can help indicate which

20: // properties will return meaningful values.

21: // For instance since Column.Collation is only available on a simple column,

22: // and Column.Persisted is only on computed columns

23: ColumnType columnType = column.GetMetadata<ColumnType>(Column.ColumnType);

24: Console.WriteLine("Column c1 is of type '{0}'", columnType);

25: }

Relationships

To examine something like a specific Column for a table, you need to first look up the relevant table and then get referenced columns. Note how the Table.Columns metadata relationship is used to find columns for the table.

Properties

The example below shows how the Column.Length metadata property is used to get the length of a column.

If you know the return type for a given property you can use generics to cast to that type. In the example below “Length” is cast to an int. Properties usually have simple return types, such as int, bool, string. Some int properties actually map to Enum values – for example DataCompressionOption.CompressionLevel maps to the CompressionLevel enumeration – and you can cast directly to that Enum type when getting the property. Note that if the property is not found on that object, a default value for that may be returned instead.

Metadata

Finally, a very small number of types in the model have actual “Metadata” properties. These are useful when a type can actually represent conceptually similar things, where each has different properties. A Column can be a regular column, a computed column or a ColumnSet, and what properties are relevant for the column will vary depending on the ColumnType.

Notes:

DacQueryScopes can be quite important. It specifies what kind of objects you want to search for. Depending on the scope you pass in, different types of objects can be returned:

What are you looking for? |

Correct query scope |

The objects you defined in this dacpac |

UserDefined, All |

Built in types (for example SQL data types like nvarchar) |

BuiltIn, Default, All |

Referenced objects added using composite projects in SSDT (“Same Database” references) |

SameDatabase , All |

System objects from master.dacpac |

System, All |

You may notice that “different database” references aren’t on this list. That’s because they’re not really useful for anything other than validating the model, and you can never have a TSqlObject that describes them. The only time you’ll get to see any information about them is when querying what types of things an object references, and there’s a special call with an external query scope that’ll include some information about them.

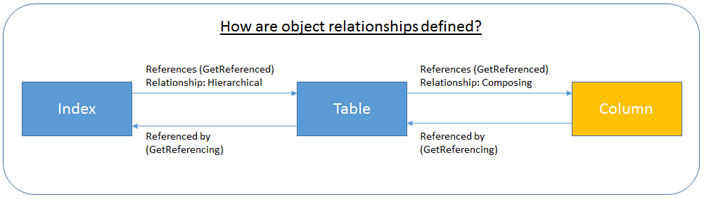

GetReferenced is only one of several methods to traverse relationships in the model, depending on the type of relationship. See the example below showing how a Table relates to an index and a column differently:

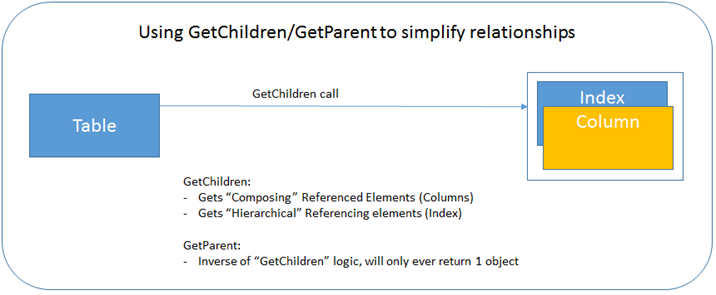

To simplify this a little in the public model, we added GetChildren and GetParent methods. In this case you shouldn’t need to understand which object has a reference to the other, or what the relationship is. It will just return all the objects that are logical children of a Table:

Some relationships have properties associated with them. For instance the relationship between a table constraint and the columns that it refers to has an Ascending property. These properties are queryable using ModelRelationshipInstance.GetProperty<T>.

Saving a dacpac

The public model supports building dacpacs and even updating the model inside an existing dacpac. Unfortunately the API does not fully support everything that an SSDT project supports. This may change in the future, but for now the feature support is as follows:

Feature |

Supported? |

Refactor log |

Yes |

Deployment contributors |

Yes |

Pre / Post deployment script |

No |

References |

No |

CLR objects |

No |

XML Schema Collection |

No |

1: // save the model to a new .dacpac. Note that the PackageOptions

2: // can be used to specify RefactorLog and contributors to include

3: DacPackageExtensions.BuildPackage(

4: dacpacPath,

5: model,

6: new PackageMetadata { Name = "MyPackageName",

7: Description = "This is usually ignored", Version = "1.0" },

8: new PackageOptions());

9:

10: // You can update the model in a dacpac and save it back.

11: using (TSqlModel modelFromDacpac = new TSqlModel(dacpacPath))

12: {

13: modelFromDacpac.AddObjects("CREATE VIEW V1 AS SELECT * FROM T1");

14:

15: using (DacPackage dacPackage = DacPackage.Load(dacpacPath,

16: DacSchemaModelStorageType.Memory,

17: FileAccess.ReadWrite))

18: {

19: DacPackageExtensions.UpdateModel(dacPackage, modelFromDacpac, null);

20: }

21: }

Scenario: filtering developer schemas

A real example raised in the forums was how to filter out objects for specific schemas. For example a user may have a “dev” or “test” schema that is populated with some data used during testing. However these should never be deployed to the production environment. The question is, how can you achieve this without using separate projects for the “dev” and “test” schema elements? Two general solutions come to mind here, each with different benefits and drawbacks. We’ll outline both approaches and show the key code required to solve this problem. For full code examples we recommend going to the samples solution and debugging into the sample application and unit tests. That’s really the best way to learn what’s going on here.

Our sample data

Here’s the sample data we’ll use for this scenario. It’s very simple – just a few schemas, tables and views we want to work with. Our goal is to start with a dacpac that includes all of these schema objects, and ensure that what’s deployed to a database only includes objects in the “prod” schema.

1: string[] SampleScripts = new string[]

2: {

3: // Prod

4: "CREATE SCHEMA [prod]",

5: "CREATE TABLE [prod].[t1] (c1 INT NOT NULL PRIMARY KEY)",

6: "CREATE VIEW [prod].[v1] AS SELECT c1 FROM [prod].[t1]",

7:

8: // Dev

9: "CREATE SCHEMA [dev]",

10: "CREATE TABLE [dev].[t2] (c2 INT NOT NULL PRIMARY KEY)",

11: "CREATE VIEW [dev].[v2] AS SELECT c2 FROM [dev].[t2]",

12:

13: // Test - include reference to production table to highlight errors

14: // if filtering breaks references

15: "CREATE SCHEMA [test]",

16: "CREATE VIEW [test].[v3] AS SELECT c1 FROM [prod].[t1]",

17: };

18:

19: // Create a package containing the sample scripts

20: string devPackagePath = GetFilePathInCurrentDirectory("dev.dacpac");

21: var scripts = SampleScripts;

22: using (TSqlModel model =

23: new TSqlModel(SqlServerVersion.Sql110, new TSqlModelOptions()))

24: {

25: AddScriptsToModel(model, scripts);

26:

27: DacPackageExtensions.BuildPackage(devPackagePath, model, new PackageMetadata());

28:

29: Console.WriteLine("Objects found in original package: '" + devPackagePath + "'");

30: PrintTablesViewsAndSchemas(model);

31: }

32:

Solution 1: Filtering the model and creating a new dacpac

The first solution assumes that whenever you build your project, you’d like to output two dacpacs: a “production” dacpac that doesn’t contain the “dev” or “test” schemas, and a “dev” dacpac that contains all objects. “production” would be used when deploying to a production database, while the “dev” dacpac is used during development.

Let’s look at the key steps required to do this. All the code in this example is in the SampleConsoleApp\ModelFilterExample.cs sample, and can be run by specifying “FilterModel” when running the SampleConsoleApp application. There are also unit tests for this in the “SampleTests\TestFiltering.cs” file.

Filtering the model and building a new dacpac

Let’s create a simple “IFilter” interface that takes in a set of TSqlObjects and performs some action. We’ll write a schema filter and apply it to all the objects in our model, then save it to a dacpac. The basic process is as follows:

1: public void CreateFilteredDacpac(string dacpacPath, string filteredDacpacPath)

2: {

3: DisposableList disposables = new DisposableList();

4: try

5: {

6: // Load a model from the dacpac.

7: TSqlModel model = disposables.Add(

8: new TSqlModel(dacpacPath, DacSchemaModelStorageType.Memory));

9:

10: // Filter the objects and copy them to a new model.

11: TSqlModel filteredModel = disposables.Add(CreateFilteredModel(model));

12:

13: // Create a new dacpac using the new model.

14: DacPackageExtensions.BuildPackage(

15: filteredDacpacPath,

16: filteredModel,

17: new PackageMetadata(),

18: new PackageOptions());

19: }

20: finally

21: {

22: disposables.Dispose();

23: }

24: }

And the filter works by examining the first part of the TSqlObject.Name property. ObjectIdentifiers describe the name. The internal part of the name always starts with the schema. Even the name describes a reference to an external object (for example to master DB or a different database) the external parts of the name are in a separate property. This makes it easy to write a schema-based filter. Here’s a simplified version (again look at the sample files for a fully fleshed out example):

1: public interface IFilter

2: {

3: IEnumerable<TSqlObject> Filter(IEnumerable<TSqlObject> tSqlObjects);

4: }

5:

6: public class SchemaBasedFilter : IFilter

7: {

8: private HashSet<string> _schemaNames;

9:

10: public SchemaBasedFilter(IList<string> schemaNames)

11: {

12: _schemaNames = new HashSet<string>(schemaNames);

13: }

14:

15: public IEnumerable<TSqlObject> Filter(IEnumerable<TSqlObject> tSqlObjects)

16: {

17: // Only return objects that pass the “ShouldInclude” test.

18: return tSqlObjects.Where(o => ShouldInclude(o));

19: }

20:

21: private bool ShouldInclude(TSqlObject tsqlObject)

22: {

23: bool found = false;

24: ObjectIdentifier id = tsqlObject.Name;

25: if (id.HasName && id.Parts.Count >= 1)

26: {

27: string schemaName = id.Parts[0];

28: found = _schemaNames.Contains(schemaName,

29: StringComparer.OrdinalIgnoreCase);

30: }

31:

32: // If the object had one of the filtered schema names, we exclude it

33: return !found;

34: }

35: }

36:

Finally, there’s the CreateFilteredModel method that reads all objects from the current model and copies only objects that pass the filter into a new model:

1: // Full ModelFilterer code including class init can be viewed

2: // in the samples project.

3: public class ModelFilterer

4: {

5: private IList<IFilter> _filters;

6:

7: public TSqlModel CreateFilteredModel(TSqlModel model)

8: {

9: TSqlModelOptions options = model.CloneModelOptions();

10: TSqlModel filteredModel = new TSqlModel(model.Version, options);

11:

12: IEnumerable<TSqlObject> allObjects = model.GetObjects(QueryScopes);

13: IFilter allFilters = new CompositeFilter(_filters);

14: foreach (TSqlObject tsqlObject in allFilters.Filter(allObjects))

15: {

16: string script;

17: if (tsqlObject.TryGetScript(out script))

18: {

19: // Some objects such as the DatabaseOptions can't be scripted out.

20: filteredModel.AddObjects(script);

21: }

22: }

23:

24: return filteredModel;

25: }

26: }

Notes:

The schema name comparison currently uses a simple string comparison. Ideally it would compare based on the SQL Database Collation for the model by using SqlString objects for comparison. This is the kind of feature we may add in future releases, but you could also write this yourself fairly easily.

Updating the model in the existing dacpac

The API also supports updating the model inside and existing dacpac. This might be useful if you have other resources such as pre and post deployment scripts inside a dacpac. The public API doesn’t have support for including these when building a dacpac yet, so the best solution would be to copy the dacpac file and then update the model inside it. There’s a unit test in TestFiltering.cs that shows how this is done. The API call is really simple:

1: public void UpdateDacpacModelWithFilter(string dacpacPath)

2: {

3: DisposableList disposables = new DisposableList();

4:

5: try

6: {

7: TSqlModel model = disposables.Add(

8: new TSqlModel(dacpacPath, DacSchemaModelStorageType.Memory));

9: TSqlModel filteredModel = disposables.Add(CreateFilteredModel(model));

10:

11: // Note that the package must be opened in ReadWrite mode –

12: // this will fail if this isn't specified

13: DacPackage package = disposables.Add(

14: DacPackage.Load(dacpacPath,

15: DacSchemaModelStorageType.Memory, FileAccess.ReadWrite));

16: package.UpdateModel(filteredModel, new PackageMetadata());

17: }

18: finally

19: {

20: disposables.Dispose();

21: }

22: }

Deploying the filtered Dacpac

Deploying a Dacpac is really simple using the DacServices API. DacServices supports publishing Dacpacs, creating Dacpacs from a database, and a number of other useful features. To actually deploy our filtered Dacpac to production (or in this example, to localdb) we’d just do as follows:

1: private void PublishProductionDacpac(string productionPackagePath)

2: {

3: string extractedPackagePath = GetFilePathInCurrentDirectory("extracted.dacpac");

4: using (DacPackage package =

5: DacPackage.Load(productionPackagePath, DacSchemaModelStorageType.Memory))

6: {

7: Console.WriteLine("Deploying the production dacpac to 'ProductionDB'");

8: DacServices services =

9: new DacServices("Server=(localdb)\\v11.0;Integrated Security=true;");

10: services.Deploy(package, "ProductionDB");

11: }

12: }

Solution 2: Filtering at deployment time

So filtering objects in a dacpac is one option, but what if you want to avoid the need to create a new dacpac? Isn’t there a way to just change things when you’re actually deploying the dacpac? That’s exactly what we’ll show you next by creating a custom Deployment Plan Modifier contributor that runs during the deployment pipeline. These are covered in a separate walkthrough but this example will show you how to specify the contributors to run at deployment time rather than when building a project.

As usual the full code for this example is in the samples. To see how this example works look at the SamplesTests\TestFiltering.cs unit test class. The “TestFilterPlanWhenPublishing” unit test runs this end to end. In this case a unit test was used since it avoided the need to install the sample to the extensions directory before running the sample code (see Best Practices for more information).

Creating a filtering deployment contributor class

A basic contributor class just requires an Export attribute and to extend the DeploymentPlanModifier class. Here’s a “Hello World” contributor and how to add it to the deployment:

1: [ExportDeploymentPlanModifier(PlanFilterer.PlanFiltererContributorId, "1.0.0.0")]

2: public class PlanFilterer : DeploymentPlanModifier

3: {

4: public const string PlanFiltererContributorId = "Public.Dac.Samples.PlanFilterer";

5: protected override void OnExecute(DeploymentPlanContributorContext context)

6: {

7: base.PublishMessage(new ExtensibilityError("Hello world!", Severity.Message));

8: }

9: }

10:

11: public void DeployWithContributor()

12: {

13: // assume the dacpac exists

14: DacServices services =

15: new DacServices("Server=(localdb)\\v11.0;Integrated Security=true;");

16:

17: string productionDbName = "ProductionDB";

18: using (DacPackage package =

19: DacPackage.Load(existingPackagePath, DacSchemaModelStorageType.Memory))

20: {

21: // Deploy the dacpac with an additional "filter" contributor.

22: DacDeployOptions options = new DacDeployOptions()

23: {

24: AdditionalDeploymentContributors = PlanFilterer.PlanFiltererContributorId

25: };

26:

27: services.Deploy(

28: package,

29: productionDbName,

30: upgradeExisting: true,

31: options: options);

32: }

33: }

Note that this doesn’t cover actual installation of the contributor DLL – that’s covered under the Best Practices section later in the document.

Reading and filtering the deployment plan steps

During deployment a number of different objects are available to a contributor. In this case the Deployment Plan is the most interesting thing. It describes each step in the deployment, and contributors can add new steps and remove or replace existing steps. For this example, what we need is to block any CreateElementSteps that mention the schemas to be filtered. Understanding what step you need to examine might not be immediately obvious – in this case you could probably guess, but sometimes the best thing to do would be writing a dummy contributor that steps through a plan and then debugging a deployment, or writing the step type and contents to a file. That lets you understand the precise types to work with.

Here’s the code that actually filters out steps. We’re reusing the filter code from the 1st scenario since the logic is all the same. The only difference is that each step has only 1 object, so we’ll apply our filter and if no objects are left afterwards, we know that the step should be removed.

1: private IFilter _filter;

2:

3: protected override void OnExecute(DeploymentPlanContributorContext context)

4: {

5: // Initialize filter options based on contributor arguments

6: InitializeFilter(context.Arguments);

7:

8: DeploymentStep next = context.PlanHandle.Head;

9: while (next != null)

10: {

11: DeploymentStep current = next;

12: next = current.Next;

13:

14: CreateElementStep createStep = current as CreateElementStep;

15: if (createStep != null && ShouldFilter(createStep))

16: {

17: base.Remove(context.PlanHandle, createStep);

18: }

19: }

20: }

21:

22: private bool ShouldFilter(CreateElementStep createStep)

23: {

24: TSqlObject createdObject = createStep.SourceElement;

25: return !_filter.Filter(new[] {createdObject}).Any();

26: }

Notes:

We’ve skipped a number of steps here, most importantly how the filter is actually initialized. It’s fairly simple code and if you debug through the example you’ll see exactly how this works

While writing this example, we added an “Initialize” method to the IFilter interface. This doesn’t look right on an interface, so in a real-world example we’d probably change this to be an abstract “Filter” class with an empty default implementation of the Initialize method, or simply use a Factory pattern for creating the filters instead.

Configuring the contributor at deployment time, and running the deployment

Now that we’ve written the sample contributor, let’s see how it would be used during deployment:

1: // Note: deploying to (localdb)\v11.0 here, which is the default LocalDB instance

2: // for SQL Server 2012. You may have a different instance on your machine,

3: // if you run into any problems then look online for LocalDb help

4: DacServices services =

5: new DacServices("Server=(localdb)\\v11.0;Integrated Security=true;");

6:

7: string productionDbName = "ProductionDB";

8: using (DacPackage package =

9: DacPackage.Load(existingPackagePath, DacSchemaModelStorageType.Memory))

10: {

11: // Deploy the dacpac with an additional "filter" contributor.

12: DacDeployOptions options = new DacDeployOptions();

13: options.AdditionalDeploymentContributors = PlanFilterer.PlanFiltererContributorId;

14:

15: // Specify the filter to use and what arguments it needs.

16: // Note that this is a little limited by having to pass string-based arguments.

17: // This could be worked around by serializing arguments to a file and passing

18: // the file path to the contributor if you need to do anything advanced.

19: options.AdditionalDeploymentContributorArguments =

20: PlanFilterer.BuildPlanFiltererArgumentString(

21: "SchemaBasedFilter",

22: new Dictionary<string, string>()

23: {

24: {"Schema1", "dev"},

25: {"Schema2", "test"},

26: });

27:

28: // Run the deployment with the options as specified

29: services.Deploy(package,

30: productionDbName,

31: upgradeExisting: true,

32: options: options);

33: }

And that’s that! Now you have the ability to filter by schema when deploying a dacpac.

Building on this example

Follow up scenarios you could try for yourself:

Scenario |

Hint (how to do it) |

Extract a dacpac from a database and filter out some objects. For example filter out all Users and Logins so that later you could replace them with new ones. |

Use the DacServices API to extract the dacpac, then run the ModelFilterer on it with a new “FilterObjectType” filter |

Implement a more relaxed “Block on Table Loss” function instead of the current “Block on possible Data Loss”. This is another real-world example, a team wanted to allow columns to be dropped, but wanted to block the deployment if tables were removed. |

Write a DeploymentPlanModifier contributor that looked at the ModelComparisonResult in the deployment context, and block if there are any tables in the list of elements to be dropped. If there are, block deployment by publishing an error message with severity “Error”. |

Implement a more relaxed “Drop Objects not in source” option that doesn’t drop elements in a “reserved” schema. Another real world example. |

Note that you will need a recent release of DacFx to make this work as there was a bug in the previous version. In addition to filtering CreateElementSteps, you would also filter DropElementSteps and AlterElementSteps if they relate to the reserved schema. |

Learnings and best practices

Testing deployment contributors

The walkthrough guides discuss how to install a contributor so that your Visual Studio projects can make use of them. That’s great in a way, but it’s really not what you want to use during testing. It’s too cumbersome to copy the DLL each time you run it, and if you actually open Visual Studio to test it, you’ll need to shut it down every time you want to change your contributor code.

The best way to test contributors is to write unit tests and reference your contributor DLL and the DacFx DLLs. To be picked up during deployment the contributor code must be in a DLL file (not and executable), and that must either be under the standard DacFx extensions directory on your machine or else be in the same directory as the “Microsoft.Data.Tools.Schema.Sql.dll” file. If you are writing unit tests, the 2nd option has one really powerful benefit. Unit tests usually copy all referenced DLLs to the same location and that means that if your unit test references the DacFx DLLs and your contributor DLL, you can easily run tests without needing to copy the contributor code into the extensions directory. When the test is run, both will be in the same location and hence the DacFx extension manager will find your contributor.

The deployment plan filtering example uses this approach and it makes it really easy to make changes to the contributor and verify that everything works.

Conclusion

Extending DacFx can help solve common issues that your team runs into. Extensions can be really powerful– the APIs are intended to let you do everything our tools can do internally. We’re not quite there yet, but we’ll be updating the current APIs and adding new ones in the future, so stay tuned!

Hopefully after reading this tutorial you’ll take a chance to think about an issue you’ve had that SSDT/DacFx doesn’t solve for you right now, and if you could solve it yourself. If you’d like to share your solution with others, think about publishing it online or adding it to the samples project https://dacsamples.codeplex.com/.