Is it ok to use nested Parallel.For loops?

Every now and then, I get this question: “is it ok to use nested Parallel.For loops?” The short answer is “yes.” As is often the case, the longer answer is, well, longer.

Typically when folks ask this question, they’re concerned about one of two things. First, they’re concerned that each nested loop will assume it “owns the machine” and will thus try to use all of the cores for itself, e.g. if their code was running on an eight-core machine, they’re concerned that the outer loop would run eight of the nested loops in parallel, each of which would use eight threads, such that there would be 64 threads all churning on the inner loop. Second, they’re concerned that the outer loop’s threads will end up blocking waiting for the inner parallel loops to finish.

Let me start by waving my hands a bit and saying that, for the most part, you don’t need to worry about such things, in that Parallel.For was designed to accommodate this usage pattern. To understand why, it helps to have a high-level understanding for how Parallel.For is implemented. Parallel.For doesn’t just queue MaxDegreeOfParallelism tasks and block waiting for them all to complete; that would be a viable implementation if we could assume that the parallel loop is the only thing doing work on the box, but we can’t assume that, in part because of the question that spawned this blog post. Instead, Parallel.For begins by creating just one task. When that task is executed, it’ll first queue a replica of itself, and will then enlist in the processing of the loop; at this point, it’s the only task processing the loop. The loop will be processed serially until the underlying scheduler decides to spare a thread to process the queued replica. At that point, the replica task will be executed: it’ll first queue a replica of itself, and will then enlist in the processing of the loop. Starting to see a pattern? In effect, Parallel.For makes sure there’s always an additional task hanging around that will join into the loop’s processing if another thread becomes available. If no additional threads become available, when the loop’s processing has completed, that additional task will be canceled. Further, the first task the loop creates is actually run synchronously (using Task.RunSynchronously).

What can we infer from this hand-wavy description of how Parallel.For achieves parallelism? Because the first task the loop creates is run synchronously, and because the loop only spreads itself out to additional threads when the underlying scheduler gives it more threads, if the underlying scheduler has no threads to share (because they’re all occupied processing, say, other iterations of the outer parallel loop), the Parallel.For will be content to complete on just the thread that invoked it. Going back to the original question, if I have a nested set of Parallel.For loops, the outer loop can spread itself out across available threads, and the inner loops can mostly complete on just the thread used by the outer loop to invoke the inner loop.

We can see this with a small code example:

using System; using System.Threading; using System.Threading.Tasks;

class Program { static void Main() { const int OUTER_ITERS = 2000; const int INNER_ITERS = 2000;

Parallel.For(0, OUTER_ITERS, i => { int innerConcurrencyLevel = 0; int innerConcurrencyLevelSum = 0; Parallel.For(0, INNER_ITERS, j => { Interlocked.Increment(ref innerConcurrencyLevel); for (int spin = 0; spin < 50000; spin++); Interlocked.Add(ref innerConcurrencyLevelSum, Volatile.Read(ref innerConcurrencyLevel)); for (int spin = 0; spin < 50000; spin++); Interlocked.Decrement(ref innerConcurrencyLevel); }); Console.Write(“{0}, “, Math.Round(innerConcurrencyLevelSum / (double)INNER_ITERS)); }); } }

Here I have nested parallel for loops. The inner loop’s body keeps track of how many other iterations of this loop are currently running, and outputs the average for the loop to the console. Here’s what I see when I run this on my quad-core:

2, 2, 1, 2, 1, 2, 2, 1, 1, 2, 2, 1, 2, 1, 2, 1, 1, 1, 2, 2, 1, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2, 2, 1, 2, 2, 2, 2, 2, 1, 2, 1, 2, 1, 1, 2, 1, 2, 2, 1, 2, 1, 1, 1, 2 , 1, 2, 2, 1, 2, 1, 2, 1, 2, 1, 2, 2, 1, 1, 2, 1, 1, 1, 2, 2, 1, 1, 1, 2, 1, 2, 1, 2, 1, 2, 1, 2, 1, 1, 2, 2, 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 , 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 , 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 , 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 , 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1 , 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, … // and on and on

Note that while there are some “2”s sprinkled in there (in particular towards the beginning as processing was getting going), for the most part each inner loop was running with a concurrency level close to 1.

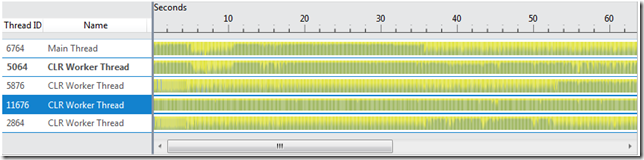

We can see this as well by using the Concurrency Visualizer in Visual Studio 11 Beta. I tweaked the above example to remove the concurrency-level tracking code (since that introduces unnecessary I/O and synchronization for the purposes of this experiment), and here’s what I see:

For the purposes of this screenshot, I hid some things the Concurrency Visualizer was showing me (e.g. the GPU and disk I/O channels) so that the important results here really pop. The key things to see are that 1) only five threads are being used in the processing of this loop (not 16 as we might expect if, on my quad-core, each inner parallel loop was utilizing four threads), and 2) there’s no red here, meaning there’s little unnecessary blocking happening.

Now, I’m not saying that there’s no way to mess with this logic; I’m sure you could come up with a case. I’m also not saying that I advocate using nested parallel loops; if it’s possible to easily transform your code into one that uses a single parallel loop, that’s likely going to result in better performance, as you’ll be minimizing the number of parallel loops you invoke (each of which has some overhead) and you’ll be avoiding any potential problems that could arise from corner cases. What I am saying is that we designed Parallel.For (and Parallel.ForEach, of course) to behave decently well in these cases, so that if you need to use nested parallel loops, you should feel comfortable in doing so. This is particularly important from a composability perspective. If you implement a method in a library that uses a parallel loop, a consumer of your library should be able to invoke your method from within a parallel loop and not be overly thoughtful about how your library method was implemented. This is also important for non-nested cases, for other cases where multiple parallel loops may be run in parallel. For example, with ASP.NET, we want to make sure the system behaves well if the processing of a request involves a parallel loop; many requests may be processed in parallel, and the parallel loop for any given request should not dominate the system and starve the processing of other requests.

I hope that helps.

Light

Light Dark

Dark

0 comments