Infrastructure as Code – On demand GPU clusters with Terraform & Jenkins

Background

Developing robust algorithms for self-driving cars requires sourcing event data from over 10 billion hours of recorded driving time. But even with 10 billion-plus hours, it can be challenging to capture rare yet critical edge cases like weather events or collisions at scale. Luckily, these rare events can often be simulated effectively.

Cognata, a startup that develops an autonomous car simulator, uses patented computer vision and deep learning algorithms to automatically generate a city-wide simulation that includes buildings, roads, lane marks, traffic signs, and even trees and bushes.

Simulating driving event data, however, requires expensive GPU and compute resources that vary depending on the event being simulated. In working with Cognata, the main obstacles were automating the process and creating on-demand resources in Azure.

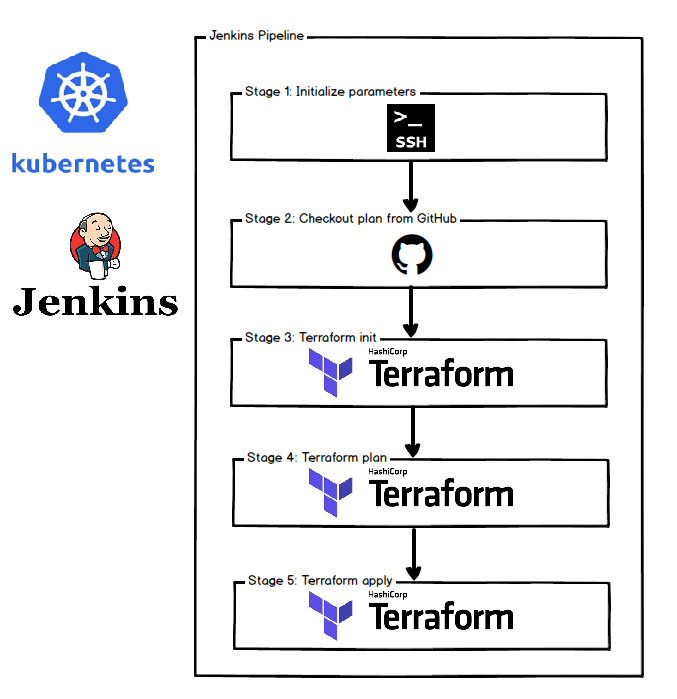

In this code story, we’ll describe how we were able to provision custom GPU rendering clusters on demand with a Jenkins pipeline and Terraform.

The Challenges

Cognata required a scalable architecture to render its simulations across individual customers and events using GPUs. To support this need, we first investigated using Docker containers and Kubernetes. However, at the time of this post, nvidia-docker does not officially support X server, and OpenGL support is a beta feature. As an alternative to container orchestration, we architected a scalable solution using a cluster of GPU-enabled Azure Virtual Machine Scale Sets (VMSS) and a custom application installation script for on-demand deployment.

In addition, complex changesets had to be applied to the infrastructure with minimal human interaction, and the solution had to support building, changing, and versioning infrastructure safely and efficiently.

The Solution

On-demand Infrastructure as Code

Cognata’s applications and services are deployed in a Kubernetes cluster, and the rendering application is deployed in GPU virtual machine clusters. To apply the “Infrastructure as Code” methodology, we decided to use Terraform and Jenkins.

Terraform allows us to provision, deprovision, and orchestrate immutable infrastructure in a declarative manner. Jenkins pipelines, meanwhile, let us define our own delivery process rather than follow an “opinionated” one, and allow us to analyze and optimize that process.

Jenkins offered two big advantages:

- The pipelines can be triggered via the HTTP API on demand.

- Running Jenkins in Kubernetes is straightforward. We used the Jenkins Helm chart:

$ helm install --name jenkins stable/jenkins

Deploying and Destroying resources

Creating on-demand resources required us to write detailed plans and specifications for those resources. These plans are stored in a git repository, such as GitHub or BitBucket, to maintain versioning and continuity.

In our case, the plans included a Virtual Machine Scale Set (VMSS) with GPUs and an installation script for Cognata’s application. The VMSS was created from a predefined image that included all the drivers and prerequisites of the application.

Once the plans are in the git repository, we run the workflow in the Jenkins pipeline to deploy the resources.

The workflow can be started with an HTTP request to the Jenkins pipeline:

http://JENKINS_SERVER_ADDRESS/job/YOUR_JOB_NAME/buildWithParameters
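As a rough illustration of the trigger request, the sketch below builds the URL for a parameterized job and sends a POST to Jenkins' `buildWithParameters` endpoint using only the Python standard library. The server address, job name, parameter names (`ACTION`, `NODE_COUNT`), and the use of a user/API-token pair for basic authentication are assumptions made for the example, not details of Cognata's setup.

```python
import base64
import urllib.parse
import urllib.request


def build_trigger_url(server, job_name, params):
    """Return the buildWithParameters URL for a parameterized Jenkins job."""
    job_path = urllib.parse.quote(job_name, safe="")
    query = urllib.parse.urlencode(params)
    return f"{server.rstrip('/')}/job/{job_path}/buildWithParameters?{query}"


def trigger_build(server, job_name, params, user, api_token):
    """POST to Jenkins and return the HTTP status code of the response.

    Assumes the job is parameterized and that the (hypothetical) user
    authenticates with an API token over HTTP basic auth.
    """
    request = urllib.request.Request(
        build_trigger_url(server, job_name, params), data=b"", method="POST"
    )
    credentials = base64.b64encode(f"{user}:{api_token}".encode()).decode()
    request.add_header("Authorization", f"Basic {credentials}")
    with urllib.request.urlopen(request) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical values; only the URL is built here, no request is sent.
    print(build_trigger_url(
        "http://JENKINS_SERVER_ADDRESS", "YOUR_JOB_NAME",
        {"ACTION": "deploy", "NODE_COUNT": 4},
    ))
```

Passing the requested action and cluster size as build parameters is one way to let a single pipeline serve both the deploy and destroy paths.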

After the pipeline is triggered, the plans are pulled from the git repository and the deployment process is started using Terraform.

Terraform works in 3 stages:

- Init – The terraform init command is used to initialize a working directory containing Terraform configuration files.

- Plan – The terraform plan command is used to create an execution plan.

- Apply – The terraform apply command is used to apply the changes required to reach the desired state of the configuration, or the pre-determined set of actions generated by a terraform plan execution plan.
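The three stages can be sketched as a small Python wrapper that a pipeline step might call. This is a minimal sketch, not the pipeline we ran: the plan file name `tfplan` and the `runner` parameter (used so the sequence can be exercised without Terraform installed) are assumptions for illustration. Saving the plan with `-out` and handing that file to `apply` means the applied changes are exactly the ones that were planned, and `-input=false` keeps Terraform from prompting in an unattended run.

```python
import subprocess


def terraform_commands(plan_file="tfplan"):
    """Return the init, plan, and apply commands in execution order."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying a saved plan file does not ask for confirmation.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_stages(workdir, runner=subprocess.run):
    """Run each stage in workdir, stopping at the first failing command."""
    for command in terraform_commands():
        runner(command, cwd=workdir, check=True)


if __name__ == "__main__":
    # Print the commands instead of running them.
    for command in terraform_commands():
        print(" ".join(command))
```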

Once the plan was executed and Terraform had saved the resulting state in files, we uploaded those state files to persistent storage such as Azure Blob storage. This step later enabled us to download the state files and destroy the resources we had created once the business process was completed, which is what makes the clusters truly on-demand.
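A minimal sketch of the state round trip, assuming the Azure CLI is already authenticated against the storage account (for example through environment variables) and that the default local state file name `terraform.tfstate` is used. The account, container, and blob names are hypothetical, and `-auto-approve` on `terraform destroy` assumes a Terraform version that accepts that flag. The destroy step also assumes the same Terraform configuration files are present in the working directory.

```python
import subprocess


def upload_state_command(account, container, blob_name,
                         state_file="terraform.tfstate"):
    """Build the az CLI command that uploads the state file to Blob storage."""
    return ["az", "storage", "blob", "upload",
            "--account-name", account, "--container-name", container,
            "--name", blob_name, "--file", state_file]


def download_state_command(account, container, blob_name,
                           state_file="terraform.tfstate"):
    """Build the az CLI command that restores the state file locally."""
    return ["az", "storage", "blob", "download",
            "--account-name", account, "--container-name", container,
            "--name", blob_name, "--file", state_file]


def destroy_cluster(account, container, blob_name, workdir,
                    runner=subprocess.run):
    """Restore the saved state, then destroy the resources it describes."""
    runner(download_state_command(account, container, blob_name),
           cwd=workdir, check=True)
    # A fresh workspace needs init so the provider plugins are available.
    runner(["terraform", "init", "-input=false"], cwd=workdir, check=True)
    runner(["terraform", "destroy", "-auto-approve"], cwd=workdir, check=True)


if __name__ == "__main__":
    # Hypothetical names; prints the upload command without running it.
    print(" ".join(upload_state_command("mystorage", "tfstate", "cluster-42.tfstate")))
```

Naming the blob after the cluster or job that created it is one simple way to find the right state file again when the destroy request arrives.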

The flow of the solution is described in the following image:

Terraform client

To invoke Terraform commands in the Jenkins pipeline, we created a small Docker container with Terraform and Azure CLI with the following Dockerfile.

FROM azuresdk/azure-cli-python:hotfix-2.0.41

ARG tf_version="0.11.7"

RUN apk update && apk upgrade && apk add ca-certificates && update-ca-certificates && \
    apk add --no-cache --update curl unzip

RUN curl https://releases.hashicorp.com/terraform/${tf_version}/terraform_${tf_version}_linux_amd64.zip -o terraform_${tf_version}_linux_amd64.zip && \
    unzip terraform_${tf_version}_linux_amd64.zip -d /usr/local/bin && \
    mkdir -p /opt/workspace && \
    rm /var/cache/apk/*

WORKDIR /opt/workspace

ENV TF_IN_AUTOMATION somevalue
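One possible way to use this image from a pipeline step is to mount the checked-out plans into `/opt/workspace` and pass the Terraform command as the container command. The sketch below only builds the `docker run` argument list. The image tag `terraform-azure-client` and the host path are hypothetical, and it assumes the image defines no ENTRYPOINT that would intercept the command.

```python
def docker_terraform_command(image, workspace, terraform_args):
    """Build a docker run command that executes terraform inside the image.

    The host workspace is mounted at /opt/workspace, the image's WORKDIR,
    so Terraform sees the plans that were pulled from the git repository.
    """
    return ["docker", "run", "--rm",
            "-v", f"{workspace}:/opt/workspace",
            image, "terraform", *terraform_args]


if __name__ == "__main__":
    # Hypothetical image tag and host path, printed rather than executed.
    print(" ".join(docker_terraform_command(
        "terraform-azure-client", "/var/jenkins/plans", ["init", "-input=false"],
    )))
```

Because the image sets `TF_IN_AUTOMATION`, Terraform adjusts its output for non-interactive use without any extra flags in the command.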

Conclusion and Reuse

With the adoption of driverless cars, relying on 10 billion-plus hours of recorded driving time alone just isn’t viable, and rendering Cognata’s complex simulations for multiple autonomous car manufacturers can become costly and inefficient. Our Jenkins pipeline and Terraform solution enabled Cognata to dynamically scale GPU resources for their simulations, making it easier to serve their customers while saving significant cost in compute resources. Using these technologies, we were able to automate the deployment and maintenance of Cognata’s Azure VMSS GPU clusters and simulation logic.

Our joint solution is adaptable to any workload that requires:

- Provisioning Azure resources using Terraform.

- Using the Jenkins DevOps process to provision on-demand resources in Azure and in Kubernetes.

Resources

Cover photo by Andres Alagon on Unsplash
