Creating Speech-Driven Applications with Cognitive Services and LUIS

Ever wonder what it’s like to have Jarvis, the assistant from Iron Man, help you build an application? With recent advances in machine learning and speech recognition, this is now possible. We have been helping several partners embrace conversation as a platform and take advantage of Microsoft’s APIs for cognitive services. Recently, we collaborated with Huma.AI, a San Francisco-based startup that combines artificial intelligence, design, and rapid prototyping in new and exciting ways.

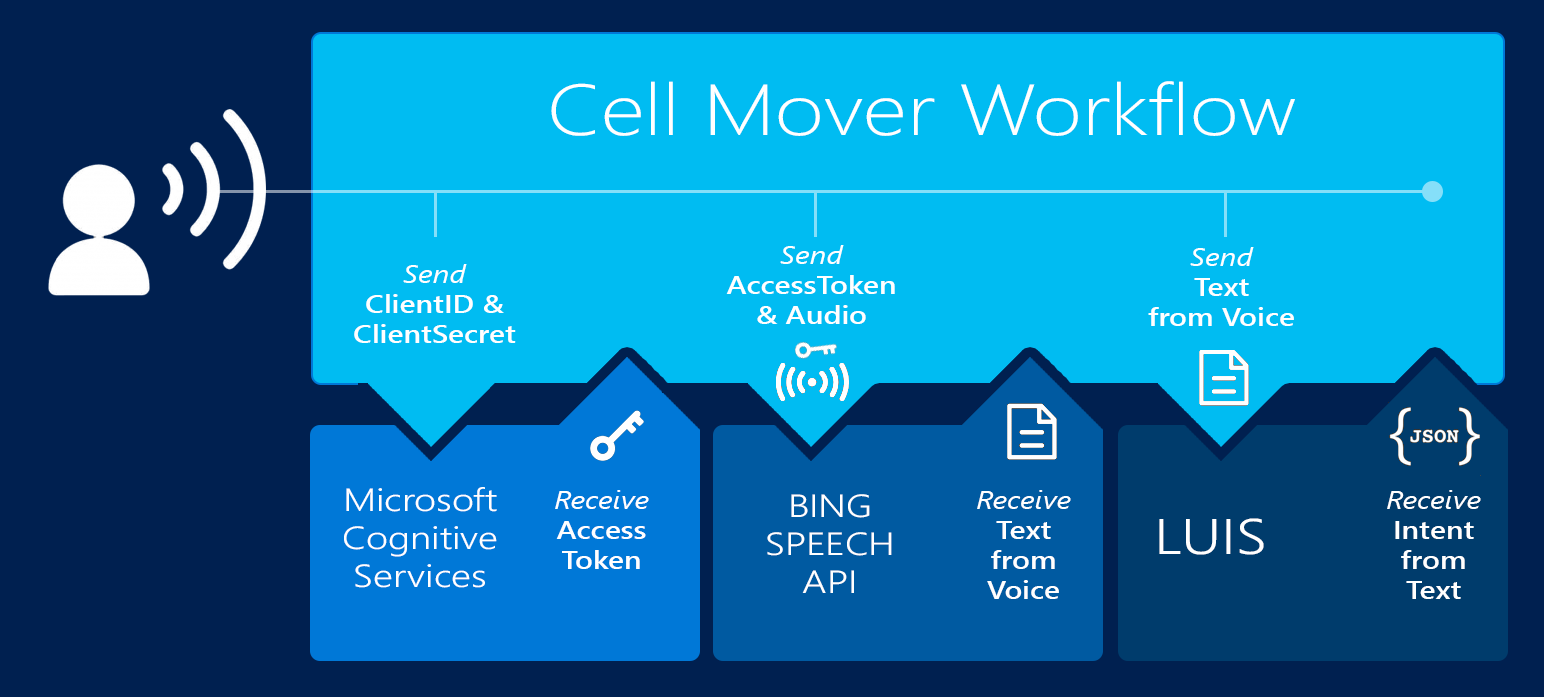

In this case study, we will walk through a generalized sample project we created to help Huma.AI and others use the Microsoft Cognitive Services speech to text service to interpret a user’s voice commands, and the Language Understanding Intelligent Service (LUIS) to determine the user’s intent. We will also discuss how we used CRIS (Custom Recognition Intelligent Service), a new service still in beta, to improve speech recognition by building a custom language model and a custom speech to text interpreter for domain-specific vocabulary.

The Problem

Huma.AI was using API.AI for both its speech to text and text to intent capabilities, but the company began to run into API.AI’s limitations. Speech to text is a critical part of Huma.AI’s product strategy, and the company wanted a best-of-breed solution. Microsoft Cognitive Services’ speech to text service combined with CRIS proved to be the most accurate and customizable solution to integrate with. Huma.AI was able to migrate all its existing intents in just two days, and the new solution proved more accurate and easier to train than API.AI.

The startup decided to work with Microsoft to switch to Microsoft Cognitive Services for speech to text and LUIS for text to intent. However, companies attempting to perform non-trivial tasks through voice commands quickly run into a complexity problem when trying to quantify intent. For instance, in our CellPainter sample, you can say the following commands to paint or modify the UI:

- Add a blue cell in 14.

- Make 14 green.

- Move it up.

- Put a red cell in 12.

- Move it down.

The challenge here is that users may express the same idea or intent in many different ways, and a robust speech interaction model must account for all of these natural language variations. This turns the problem from “speech to text” into “speech to intent.”

Overview of the Solution

To support Huma.AI and other partners attempting to pioneer the next wave of speech-driven applications, we created a generalized problem statement and simplified its requirements down to an application we called CellPainter. This application updates its interface based on the user’s voice commands to demonstrate how we handled the intent of manipulating colored cells in a grid. Huma.AI was then able to take the sample project and integrate the Cognitive Services Speech API and the LUIS API into its own application.

Implementation

Microsoft Cognitive Services are a set of pre-built, pre-trained APIs for specific but common machine learning tasks, developed by Microsoft Research. Using these services, developers can easily integrate features like facial, speech, and image recognition into their applications.

The CellPainter application is built using the following:

- RecorderJS to record audio

- The Cognitive Services Speech API to recognize a user’s voice commands. Every call to the Speech API requires a JWT access token in the request header, so you first need to acquire an access token with your subscription key.

- LUIS to predict a user’s intentions, which are interpreted and used to update cells in a web user interface

Speech to Text

Since Huma.AI is built with Node.js and React, we built CellPainter with Node.js as well, which allows us to demonstrate integration with the Cognitive Services Speech API in JavaScript.

- Sign up for Microsoft Cognitive Services

- Get your keys for the Speech API

With the Speech API subscription key, passed as clientSecret, we acquire an access token to use for all subsequent Speech API requests:

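```javascript
var request = require('request');

// ...

request.post({
  url: 'https://oxford-speech.cloudapp.net/token/issueToken',
  form: {
    // `form` URL-encodes these values itself, so they are passed as-is
    // (wrapping them in encodeURIComponent would encode them twice).
    'grant_type': 'client_credentials',
    'client_id': clientId,
    'client_secret': clientSecret,
    'scope': 'https://speech.platform.bing.com'
  }
}, function (error, response, body) {
  if (error) {
    return console.error(error);
  }
  // Assumed: the body is JSON with an OAuth-style access_token field.
  var accessToken = JSON.parse(body).access_token;
  // ...
});
```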
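Since every Speech API request needs a valid token, it can help to reuse one token until it is close to expiring instead of fetching a new one for every utterance. The sketch below is a minimal, hypothetical cache: `fetchToken` stands in for the `request.post` call above (wrapped to return a promise of the parsed JSON body), and it assumes that body carries OAuth-style `access_token` and `expires_in` (seconds) fields. The 60-second refresh margin is an arbitrary choice.

```javascript
// Minimal token cache sketch (hypothetical). Assumes fetchToken() resolves to
// an object like { access_token: '...', expires_in: '600' }, expires_in in seconds.
function createTokenCache(fetchToken, options) {
  var opts = options || {};
  // Refresh this many seconds before expiry (arbitrary safety margin).
  var marginSec = opts.marginSec !== undefined ? opts.marginSec : 60;
  // Injectable clock, so the cache can be tested without waiting.
  var now = opts.now || Date.now;
  var cached = null; // { token, expiresAt } once a token has been fetched

  return async function getToken() {
    if (cached && now() < cached.expiresAt - marginSec * 1000) {
      return cached.token; // still fresh: reuse it
    }
    var resp = await fetchToken();
    // If expires_in is missing, expiresAt is NaN and the check above always
    // fails, so the cache falls back to fetching on every call.
    cached = {
      token: resp.access_token,
      expiresAt: now() + Number(resp.expires_in) * 1000
    };
    return cached.token;
  };
}
```

Concurrent calls made before the first token arrives will each trigger a fetch; caching the pending promise instead of the token would avoid that if it matters for your traffic.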

Then, with the access token and the audio data, we make a POST request to the Speech API endpoint:

```javascript
request.post({
  url: 'https://speech.platform.bing.com/recognize',
  qs: {
    'scenarios': 'ulm',
    'appid': '<APPID>', // placeholder: your application ID
    'locale': 'en-US',
    'device.os': 'wp7',
    'version': '3.0',
    'format': 'json',
    'requestid': '1d4b6030-9099-11e0-91e4-0800200c9a66',
    'instanceid': '1d4b6030-9099-11e0-91e4-0800200c9a66'
  },
  body: waveData, // recorded WAV audio (a Buffer)
  headers: {
    'Authorization': 'Bearer ' + accessToken,
    'Content-Type': 'audio/wav; samplerate=16000',
    'Content-Length': waveData.length
  }
}, function (error, response, body) {
  if (error) {
    return console.error(error);
  }
  // body holds the JSON recognition result
  // ...
});
```
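The request above hardcodes `requestid`. Our understanding is that it is meant to identify each individual request, so generating a fresh GUID per call is the safer choice, while `instanceid` identifies the client and can stay fixed. The sketch below wraps the same options in a hypothetical `buildRecognizeOptions` helper that generates the request ID with Node's `crypto.randomUUID()` (Node.js 14.17 or later); `appId` and `instanceId` are values you supply.

```javascript
const crypto = require('crypto');

// Hypothetical helper: builds the options for request.post() to the recognize
// endpoint, with a new requestid for every call.
function buildRecognizeOptions(waveData, accessToken, appId, instanceId) {
  return {
    url: 'https://speech.platform.bing.com/recognize',
    qs: {
      'scenarios': 'ulm',
      'appid': appId,
      'locale': 'en-US',
      'device.os': 'wp7',
      'version': '3.0',
      'format': 'json',
      'requestid': crypto.randomUUID(), // unique per request
      'instanceid': instanceId          // stable per client installation
    },
    body: waveData,
    headers: {
      'Authorization': 'Bearer ' + accessToken,
      'Content-Type': 'audio/wav; samplerate=16000',
      'Content-Length': waveData.length
    }
  };
}
```

You would then call `request.post(buildRecognizeOptions(waveData, accessToken, appId, instanceId), callback)` with the same callback as before.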

Text to Intent

Now that we have converted the user’s spoken voice command into text using the Speech API, we are ready to determine a quantifiable intent from it using machine learning.

Train the Model

Before we can use LUIS to determine intent, we need to create a new LUIS application and train a model for this use case by populating it with utterances, entities, and intents. Utterances are phrases users might say. Entities are the subjects you want to identify within the utterances. Intents are the user’s goals, identified from the utterances.

For example:

- Utterance: “move the red cell left”

- Identified entities: [“CellColor”: “red”, “direction”: “left”]

- Identified intent: “MoveCell”

Train with Data

- Create an account with LUIS. Log into LUIS and create a new application. To learn more about how to use LUIS to train data for an application, you can watch their tutorial video.

- Add entities and train the model to identify them. For example: “CellColor”, “position”, “direction”

- Add intents and train the model. If no intent is recognized, LUIS returns “None.” For example: “AddCell”, “MoveCell”, “delete”, “None”

- Add many utterances to train the model. With each utterance added, LUIS will attempt to identify the relevant entities and intent.

- If LUIS does not identify the intent correctly, you can train it by selecting the correct intent from the drop-down list.

- If the entities are not identified correctly, you can train it by highlighting the word in the utterance and then selecting the entity that matches the highlighted word. For example, for the utterance “add a red cell,” the identified intent should be “AddCell,” and LUIS should label the word “red” with the entity “CellColor.”
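LUIS stores each labeled entity as a span of character offsets. In the JSON response shown later in this post, `startIndex` and `endIndex` are both inclusive: “blue” in “add a blue cell to three” covers characters 6 through 9. If you keep a copy of your training utterances in code, for example to review labels or track which phrasings you have covered, a helper like the hypothetical `labelEntity` below computes the same offsets. The `{ text, intent, entities }` record is our own illustrative shape, not the LUIS import format.

```javascript
// Hypothetical helper: find `word` in `utterance` (whole words only) and return
// a label with inclusive start/end offsets, matching the LUIS response convention.
function labelEntity(utterance, word, type) {
  var text = utterance.toLowerCase();
  var target = word.toLowerCase();
  // Escape regex metacharacters, then require word boundaries on both sides
  // so that "red" does not match inside "reddish".
  var escaped = target.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
  var match = new RegExp('\\b' + escaped + '\\b').exec(text);
  if (!match) {
    throw new Error('"' + word + '" not found in "' + utterance + '"');
  }
  return {
    entity: target,
    type: type,
    startIndex: match.index,
    endIndex: match.index + target.length - 1 // inclusive
  };
}

// A labeled training utterance in a hypothetical local format.
var example = {
  text: 'add a red cell',
  intent: 'AddCell',
  entities: [labelEntity('add a red cell', 'red', 'CellColor')]
};
```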

Training, Publishing, and Feedback Loops

To improve the model’s accuracy, keep adding utterances that vary in style and phrasing so that LUIS gets better at identifying entities and intents. To test the model, publish the application, which exposes an HTTP endpoint.
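After publishing, the LUIS portal shows the endpoint URL for your application. The sketch below treats that URL as an opaque base and appends the recognized text as the `q` query parameter, which is how the published endpoint accepts queries; the base URL in the example is a hypothetical placeholder, and the real one (typically including your app ID and subscription key) should be copied from the portal.

```javascript
// Minimal sketch: add the user's text to a published LUIS endpoint URL.
// endpointUrl is the URL shown in the LUIS portal for your published app.
function buildLuisQueryUrl(endpointUrl, text) {
  var url = new URL(endpointUrl);
  // URLSearchParams percent-encodes the text (spaces become "+").
  url.searchParams.set('q', text);
  return url.toString();
}

// Hypothetical placeholder endpoint, for illustration only.
var exampleUrl = buildLuisQueryUrl(
  'https://example.invalid/luis/apps/YOUR-APP-ID?subscription-key=YOUR-KEY',
  'add a blue cell to three'
);
```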

Submitting the query “add a blue cell to three,” we receive the following JSON response:

```json
{
  "query": "add a blue cell to three",
  "intents": [
    {
      "intent": "AddCell",
      "score": 0.992903054,
      "actions": [
        {
          "triggered": true,
          "name": "AddCell",
          "parameters": []
        }
      ]
    },
    ...
  "entities": [
    {
      "entity": "three",
      "type": "builtin.number",
      "startIndex": 19,
      "endIndex": 23,
      "score": 0.951857269
    },
    {
      "entity": "blue",
      "type": "CellColor",
      "startIndex": 6,
      "endIndex": 9,
      "score": 0.9930215
    },
    {
      "entity": "three",
      "type": "position",
      "startIndex": 19,
      "endIndex": 23,
      "score": 0.898235142
    },
    {
      "entity": "cell",
      "type": "CellType",
      "startIndex": 11,
      "endIndex": 14,
      "score": 0.9981711
    }
  ],
  ...
```
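The application needs only a small part of this response: the most likely intent and the entity values. The sketch below is one way to reduce the response to that, assuming the shape shown above. It picks the highest-scoring intent explicitly rather than relying on the order of the `intents` array, keeps the best-scoring entity of each type, and falls back to `"None"` when no intent is returned, mirroring LUIS’s own None intent. The `summarizeLuisResponse` name is hypothetical.

```javascript
// Hypothetical helper: reduce a LUIS response to { intent, score, entities },
// where entities maps each entity type to its highest-scoring entity object.
function summarizeLuisResponse(resp) {
  var intents = resp.intents || [];
  // Pick the top intent by score instead of assuming intents[0] is the best.
  var top = intents.reduce(function (best, cur) {
    return !best || cur.score > best.score ? cur : best;
  }, null);

  var entitiesByType = {};
  (resp.entities || []).forEach(function (e) {
    var prev = entitiesByType[e.type];
    if (!prev || e.score > prev.score) {
      entitiesByType[e.type] = e;
    }
  });

  return {
    intent: top ? top.intent : 'None',
    score: top ? top.score : 0,
    entities: entitiesByType
  };
}
```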

LUIS tracks each query made to the HTTP endpoint, keeping a history of the queries along with the predicted intents and entities. This history helps us identify queries that resulted in poor predictions. We can then add those queries as utterances to our LUIS model and train it to identify the correct intents and entities. The next time a user utters a query with a similar pattern, the model is much more likely to predict the intents and entities correctly.
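LUIS keeps this query history for you in its portal. If you also log the responses your application receives, a small filter can surface the same weak predictions for review. In this hypothetical sketch, a query is flagged when its top intent is `"None"` or scores below a cutoff; the default of 0.5 is an arbitrary value to tune for your own model.

```javascript
// Highest-scoring intent in a LUIS response, or null if there is none.
function topIntent(resp) {
  return (resp.intents || []).reduce(function (best, cur) {
    return !best || cur.score > best.score ? cur : best;
  }, null);
}

// Hypothetical review filter over locally logged LUIS responses: returns the
// query text of every response worth relabeling as a new training utterance.
function queriesToReview(loggedResponses, threshold) {
  var cutoff = threshold !== undefined ? threshold : 0.5; // arbitrary default
  return loggedResponses
    .filter(function (resp) {
      var top = topIntent(resp);
      return !top || top.intent === 'None' || top.score < cutoff;
    })
    .map(function (resp) { return resp.query; });
}
```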

Adding Intents to the App

Now that we have identified quantifiable intent for painting or manipulating cells from the user’s original spoken commands, we can use the intents and entities returned in the prior section to perform the appropriate actions within the application.

For example, if the JSON result returned from the LUIS application’s HTTP endpoint identifies the intent as “AddCell,” we look for the position and color entities in the JSON result to add a cell.

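```javascript
// resp is the parsed JSON response from the LUIS endpoint
var entities = resp["entities"];
var addColor, addPosition;

// Assumes the first intent in the list is the top-scoring one
if (resp["intents"][0]["intent"] === "AddCell") {
  // We want to add a cell, so look for the position and color entities
  for (var i = 0; i < entities.length; i++) {
    if (entities[i]["type"] === "CellColor") {
      addColor = entities[i]["entity"];
    }
    if (entities[i]["type"] === "builtin.number") {
      // Note: this is the matched text (e.g. "three"), not a numeric value
      addPosition = entities[i]["entity"];
    }
  }
  // ...
  addCell(addPosition, addColor);
  // ...
}
```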
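The `if` block above handles a single intent. As more intents are added, a lookup table of handlers keeps the dispatch logic flat. The sketch below is hypothetical throughout: it assumes a grid with `COLUMNS = 4` and `ROWS = 4` (arbitrary sizes), numbers cells 1 to 16 row by row, accepts positions as digits or number words, and remembers the last cell touched so that commands like “Move it up” have a referent. CellPainter’s actual layout and handlers may differ.

```javascript
// Hypothetical layout: cells numbered 1..COLUMNS*ROWS, left to right, top to bottom.
var COLUMNS = 4;
var ROWS = 4;
var NUMBER_WORDS = ['zero', 'one', 'two', 'three', 'four', 'five', 'six', 'seven',
  'eight', 'nine', 'ten', 'eleven', 'twelve', 'thirteen', 'fourteen', 'fifteen', 'sixteen'];

// Accepts "14" or "fourteen"; returns NaN for anything else.
function parsePosition(text) {
  var index = NUMBER_WORDS.indexOf(String(text).toLowerCase());
  return index >= 0 ? index : parseInt(text, 10);
}

// [row delta, column delta] for each "direction" entity value.
var MOVES = { up: [-1, 0], down: [1, 0], left: [0, -1], right: [0, 1] };

function has(obj, key) {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

function createPainter() {
  var cells = {};          // position -> color
  var lastPosition = null; // the cell that "it" refers to

  var handlers = {
    AddCell: function (entities) {
      var posEntity = entities.position || entities['builtin.number'] || {};
      var pos = parsePosition(posEntity.entity);
      var color = (entities.CellColor || {}).entity;
      if (!(pos >= 1 && pos <= COLUMNS * ROWS) || !color) return false;
      cells[pos] = color;
      lastPosition = pos;
      return true;
    },
    MoveCell: function (entities) {
      var dir = String((entities.direction || {}).entity).toLowerCase();
      if (lastPosition === null || !has(MOVES, dir)) return false;
      var row = Math.floor((lastPosition - 1) / COLUMNS) + MOVES[dir][0];
      var col = ((lastPosition - 1) % COLUMNS) + MOVES[dir][1];
      if (row < 0 || row >= ROWS || col < 0 || col >= COLUMNS) return false; // off the grid
      var next = row * COLUMNS + col + 1;
      cells[next] = cells[lastPosition];
      delete cells[lastPosition];
      lastPosition = next;
      return true;
    }
  };

  return {
    cells: cells,
    // summary: { intent: 'AddCell', entities: { CellColor: { entity: 'blue' }, ... } }
    dispatch: function (summary) {
      if (!has(handlers, summary.intent)) return false; // e.g. "None"
      return handlers[summary.intent](summary.entities || {});
    }
  };
}
```

Unknown intents, including “None,” fall through to `false`, which gives the UI a single place to prompt the user to try again.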

Custom Language Model

With any natural language, certain words and phrases are specific or unique to a particular domain or context. For example, if a user says “add a login button called foo,” the baseline model may transcribe the command as “add a login button called food.” This error occurs because a model trained broadly on conversational speech has most likely encountered the word “food” far more often than the software-specific term “foo,” so it substitutes what it considers the statistically more likely word. In such cases, we can enhance the baseline language model with words relevant to our context to derive a custom language model. To do this, we create a CRIS application and train the custom language model by feeding it the specific words and phrases expected within the application’s context. For the CellPainter example, you can import cris.json to train the custom language model on the additional words we used to improve our results.
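Custom language data is essentially a collection of in-domain phrases, and one way to produce many of them is to expand a few templates over the vocabulary the app uses. The sketch below is hypothetical: the templates and slot values are illustrative, and it assumes plain-text output with one phrase per line. The exact format CRIS accepts (and the contents of the sample’s cris.json) may differ, so check the CRIS documentation before uploading.

```javascript
// Hypothetical template expansion: each {slot} in a template is replaced by
// every value listed for that slot, producing all combinations.
function expandTemplates(templates, slots) {
  var phrases = [];
  templates.forEach(function (template) {
    var results = [template];
    Object.keys(slots).forEach(function (slot) {
      var token = '{' + slot + '}';
      if (template.indexOf(token) === -1) return;
      var next = [];
      results.forEach(function (partial) {
        slots[slot].forEach(function (value) {
          // Replace every occurrence of this slot with the same value.
          next.push(partial.split(token).join(value));
        });
      });
      results = next;
    });
    phrases = phrases.concat(results);
  });
  return Array.from(new Set(phrases)); // drop duplicates, keep order
}

var phrases = expandTemplates(
  ['add a {color} cell in {position}', 'move it {direction}', 'add a login button called {name}'],
  {
    color: ['red', 'green', 'blue'],
    position: ['one', 'two', 'fourteen'],
    direction: ['up', 'down', 'left', 'right'],
    name: ['foo', 'bar']
  }
);
// One phrase per line (assumed upload format).
var languageData = phrases.join('\n');
```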

The End-to-End App

Installation

Clone the CellPainter repo and then install dependencies:

```shell
git clone https://github.com/ritazh/speech-to-text-demo.git
cd speech-to-text-demo
npm i
```

Run the application, then open http://localhost:3000 in your browser:

```shell
node app.js
```

Get your keys for Cognitive Services’ Speech API and LUIS and plug them into the application:

- Follow the steps in the Cognitive Services documentation to create your LUIS app, then get your LUIS application ID and subscription key.

- To get the same trained LUIS app for painting cells, import ours using cellmover.json.

- To get the same context trained by CRIS, upload cris.json to create the same custom language model.

Opportunities for Reuse

That’s it! What we’ve done here is use pre-trained speech to text models developed by Microsoft Research to bring natural language processing to our application.

We have also demonstrated that we can train our language model with CRIS to customize word recognition for vocabulary specific to an application’s context. Despite the many ways users can phrase an intent, we were able to train LUIS to recognize variations by providing the common phrasings we anticipated. We’ve applied the same architecture in many customer and partner use cases.

Our solution for CellPainter is on GitHub and can serve as an example of how to enable natural language commands in your own application.

